OpenAI Codex and Claude Code are two leading AI coding assistants in 2025, each with a distinct approach to developer productivity. Codex emphasizes autonomous, cloud-based workflows and token-efficient processing, while Claude Code focuses on local terminal integration and deep codebase understanding. The benchmark figures usually quoted describe the underlying models rather than the tools themselves. On HumanEval, the Claude model scores 92% against 90.2% for the OpenAI model. On SWE-bench, Claude 3.7 Sonnet reaches 70.3%, compared with roughly 49% for OpenAI’s earlier o1/o3-mini models. The choice between the two depends on whether you prioritize cloud automation or local development control.

Codex vs Claude Code: Architecture and Approach Differences

The fundamental architectural difference between these tools shapes their entire user experience. Claude Code is a command-line tool that runs directly in your terminal. It is powered by Anthropic’s Claude models: Claude 3.7 Sonnet at launch, and later Sonnet 4 and Opus 4. The agent reads files, edits them, and runs commands in your local environment rather than in a remote sandbox, so your repository is never copied into a hosted workspace. However, the file contents the agent reads are still sent to Anthropic’s API for inference. "Local" therefore describes where the work happens, not a fully offline model. The tool uses agentic search to explore your codebase on its own, without manual context selection, which lets it make coordinated changes across multiple files.
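To make "agentic search" concrete, here is a minimal Python sketch of the kind of search tool an agent can call repeatedly. It first locates a symbol’s definition and then finds its call sites, with no human picking files. This is an illustration under stated assumptions, not Claude Code’s actual implementation. The functions `search_codebase` and `find_definition_and_usages` are hypothetical names, and the regexes are deliberately simple.

```python
import os
import re
from pathlib import Path

SKIP_DIRS = {".git", "node_modules", "__pycache__"}

def search_codebase(root: str, pattern: str,
                    exts: tuple[str, ...] = (".py", ".ts", ".js")) -> dict[str, list[int]]:
    """Hypothetical search tool: {relative path: [matching line numbers]} under root."""
    regex = re.compile(pattern)
    hits: dict[str, list[int]] = {}
    for dirpath, dirnames, filenames in os.walk(root):
        # Prune VCS and dependency folders in place so os.walk never descends into them.
        dirnames[:] = [d for d in dirnames if d not in SKIP_DIRS]
        for name in filenames:
            if not name.endswith(exts):
                continue
            path = Path(dirpath) / name
            try:
                lines = path.read_text(encoding="utf-8").splitlines()
            except (UnicodeDecodeError, OSError):
                continue  # skip unreadable or binary files
            matched = [i for i, line in enumerate(lines, start=1) if regex.search(line)]
            if matched:
                hits[path.relative_to(root).as_posix()] = matched
    return hits

def find_definition_and_usages(root: str, symbol: str):
    """Two chained searches an agent might run: locate the definition, then the call sites."""
    name = re.escape(symbol)
    definitions = search_codebase(root, rf"^\s*(def|class|function)\s+{name}\b")
    # Note: the call-site pattern also matches the definition line itself.
    call_sites = search_codebase(root, rf"\b{name}\s*\(")
    return definitions, call_sites
```

A real agent would feed these results back to the model. The model would then decide what to read or search next, for example opening each call site before proposing a multi-file change.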

OpenAI Codex takes a multi-interface approach in 2025 and is powered by GPT-5 models, including the coding-tuned GPT-5-Codex variant. The platform offers three access points:

- a cloud agent in ChatGPT that works asynchronously in isolated sandboxes
- the local Codex CLI for terminal work
- IDE extensions that integrate with development environments

This flexibility lets developers choose their preferred workflow. They can delegate tasks to the cloud or work hands-on locally, switching as needed.

The architectural choices reflect different philosophies. Claude Code prioritizes developer control and transparency, requiring explicit approval for destructive operations and maintaining a clear audit trail of all changes. Codex leans toward automation and efficiency, capable of handling complex multi-step tasks independently while the developer focuses on other work.
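An approval step for destructive operations, with an audit trail, can be sketched in a few lines of Python. The command classification below is a hypothetical policy chosen for illustration. Both tools ship their own configurable permission rules, and this is not either tool’s real rule set. `gate_command` only decides and logs; it does not execute anything.

```python
import shlex
from typing import Callable

def is_destructive(command: str) -> bool:
    """Hypothetical policy: flag commands that delete data or rewrite history."""
    tokens = shlex.split(command)
    if not tokens:
        return False
    program, args = tokens[0], tokens[1:]
    if program in {"rm", "rmdir", "dd", "mkfs"}:
        return True
    if program == "git" and args:
        sub = args[0]
        # Force flags may appear anywhere after "push", so check all arguments.
        if sub == "push" and any(a in ("--force", "-f", "--force-with-lease") for a in args):
            return True
        if sub == "reset" and "--hard" in args:
            return True
        if sub == "clean":
            return True
    return False

def gate_command(command: str, approve: Callable[[str], bool], audit_log: list) -> bool:
    """Ask for approval only when needed, and record every decision in the audit log."""
    if not is_destructive(command):
        audit_log.append((command, "allowed"))
        return True
    decision = approve(command)
    audit_log.append((command, "approved" if decision else "denied"))
    return decision
```

In an interactive tool, `approve` would prompt the developer. In an automated pipeline, it might consult a pre-approved allowlist instead.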

Performance Benchmarks: Codex vs Claude Code in 2025

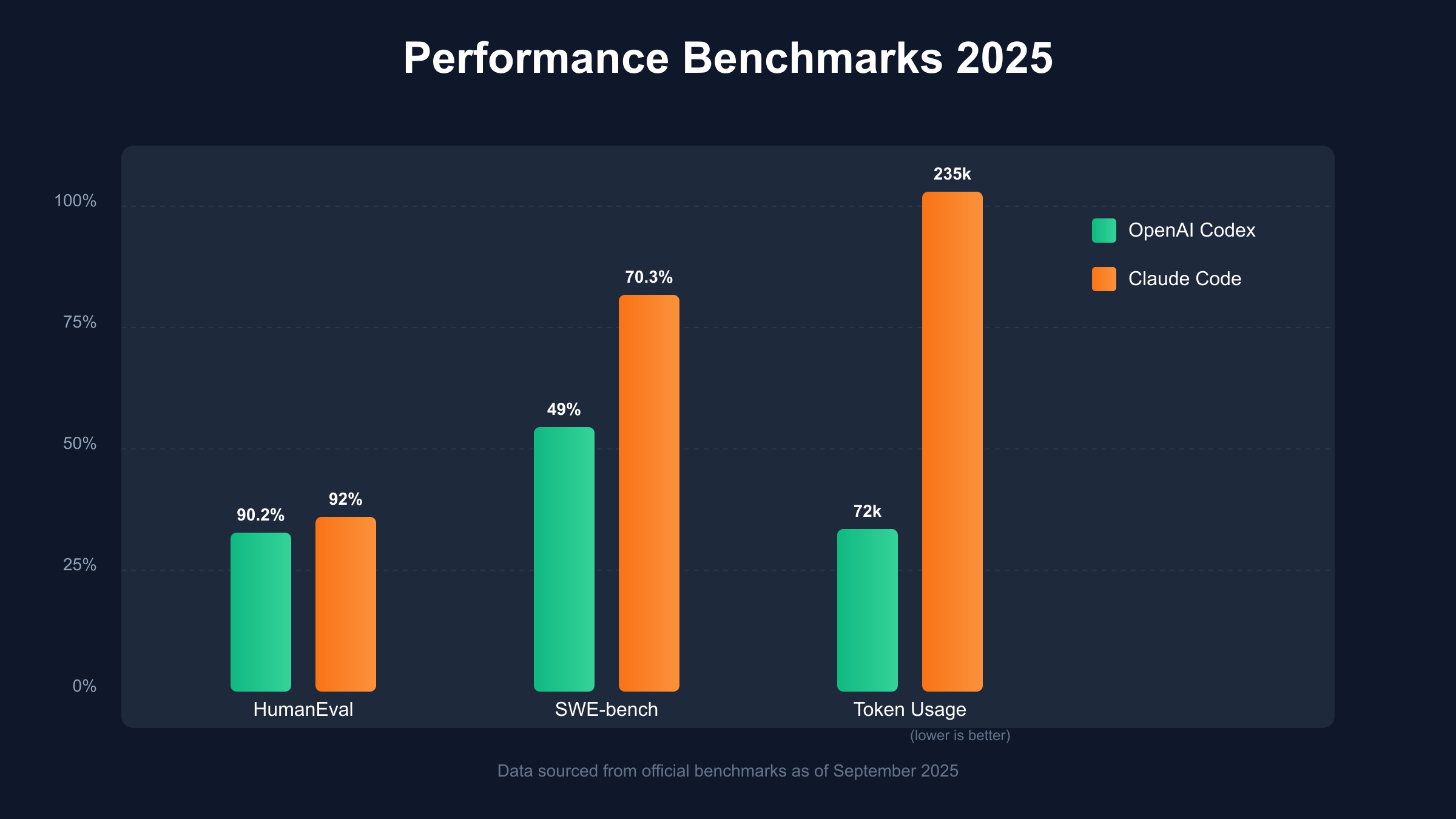

The benchmark numbers reveal trade-offs, with one caveat: they measure the underlying models, not the agent tools. HumanEval tests single-function code generation. On it, the Claude model scores 92% versus 90.2% for the OpenAI model, a narrow 1.8-point lead. SWE-bench measures resolving real GitHub issues that often span multiple files, and the gap there is much wider. Claude 3.7 Sonnet reaches 70.3% (with Anthropic’s custom scaffold), while OpenAI’s o1 and o3-mini models score around 49%. Those OpenAI results predate the GPT-5 models that now power Codex, so this comparison may overstate the current gap.
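HumanEval scores like these are usually reported as pass@1: the chance that one generated sample passes a problem’s unit tests, averaged over all problems. The unbiased pass@k estimator comes from the original Codex paper (Chen et al., 2021). Here is a minimal Python sketch of it. The helper names `pass_at_k` and `benchmark_score` are our own. SWE-bench uses a different metric, the percentage of issues resolved.

```python
from math import comb

def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them pass the tests."""
    if n - c < k:
        # Every size-k subset of the samples must contain at least one passing sample.
        return 1.0
    # 1 minus the probability that a random size-k subset contains only failing samples.
    return 1.0 - comb(n - c, k) / comb(n, k)

def benchmark_score(per_problem: list[tuple[int, int]], k: int = 1) -> float:
    """Mean pass@k across problems, given (n, c) for each problem."""
    return sum(pass_at_k(n, c, k) for n, c in per_problem) / len(per_problem)
```

With one sample per problem (n = 1), pass@1 reduces to the plain fraction of problems solved. A 92% score therefore means roughly 92 of every 100 problems were solved on the first try.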

Token efficiency tells a different story. In one practical test on a complex TypeScript challenge, Codex consumed 72,579 tokens, while Claude Code used 234,772 tokens for the same task, roughly 3.2 times as many. At comparable per-token prices, that difference translates directly into cost savings, especially for high-volume operations. Developers who process large codebases or run continuous integration workflows may find Codex’s efficiency compelling despite its slightly lower accuracy scores.
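The token counts come from the test above. The link from tokens to dollars also depends on price, and this short Python sketch makes that explicit. The per-million-token prices are hypothetical placeholders, not either vendor’s published rates. The sketch also assumes one blended price per run, ignoring the fact that input and output tokens are usually priced differently.

```python
CODEX_TOKENS = 72_579
CLAUDE_CODE_TOKENS = 234_772

def cost_usd(tokens: int, usd_per_million: float) -> float:
    """Cost at a single blended per-token price (ignores input/output price differences)."""
    return tokens * usd_per_million / 1_000_000

def cost_ratio(codex_price: float, claude_price: float) -> float:
    """How many times more the Claude Code run costs than the Codex run."""
    return cost_usd(CLAUDE_CODE_TOKENS, claude_price) / cost_usd(CODEX_TOKENS, codex_price)

token_ratio = CLAUDE_CODE_TOKENS / CODEX_TOKENS  # about 3.23

# Hypothetical blended prices in USD per million tokens, chosen only to illustrate.
same_price = cost_ratio(codex_price=5.0, claude_price=5.0)       # equals token_ratio
cheaper_claude = cost_ratio(codex_price=10.0, claude_price=5.0)  # about 1.62
```

The 3x token gap becomes a 3x cost gap only when per-token prices match. If one model is cheaper per token, the cost ratio shrinks proportionally.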

The BountyBench security experiments (May 2025) revealed complementary strengths. Codex CLI achieved a 90% patch success rate on defensive tasks, corresponding to $14,422 in bug bounty value. Claude Code performed better on offensive tasks such as detecting and exploiting vulnerabilities, possibly reflecting its extended reasoning in security contexts.

Pricing Comparison: Claude Code vs OpenAI Codex

Pricing structures reflect different business models and target audiences. Claude Code offers explicit subscription tiers:

- Pro ($20 per month) suits small repositories, roughly under 1,000 lines of code, and allows approximately 45 messages every five hours.
- Max 5x ($100 per month) raises that to about 225 messages per five-hour window.
- Max 20x ($200 per month) is described in weekly terms instead: 240 to 480 hours of Sonnet 4 usage per week.
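Choosing a tier comes down to how many messages you send in your busiest five-hour window. Here is a minimal Python sketch of that choice. The figures for Pro and Max 5x come from the paragraph above. The Max 20x per-window figure is an assumption: 20 times Pro’s ~45 messages, by analogy with Max 5x’s 225 = 5 × 45. The sketch also ignores the weekly caps.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Plan:
    name: str
    usd_per_month: int
    msgs_per_5h: int  # approximate message allowance per five-hour window

PLANS = [
    Plan("Pro", 20, 45),
    Plan("Max 5x", 100, 225),
    Plan("Max 20x", 200, 900),  # assumption: 20 x Pro's ~45 messages
]

def cheapest_plan(peak_msgs_per_5h: int) -> Optional[Plan]:
    """Cheapest plan whose five-hour allowance covers your busiest window, or None."""
    fits = [p for p in PLANS if p.msgs_per_5h >= peak_msgs_per_5h]
    return min(fits, key=lambda p: p.usd_per_month, default=None)
```

A `None` result means even the top tier would be rate-limited at that usage level. For such workloads, pay-per-token API access may be a better fit.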

OpenAI bundles Codex into ChatGPT subscriptions, which makes direct price comparison difficult. ChatGPT Plus users ($20 per month) get Codex access alongside other GPT features. API access to GPT-5-Codex is priced at standard GPT-5 rates. This bundling offers better value to developers who already pay for ChatGPT. Users who want a dedicated coding tool may find Claude Code’s pricing more transparent.

Recent changes show how both platforms are evolving. In August 2025, Anthropic introduced stricter weekly rate limits that, by its own estimate, affect fewer than 5% of users. These weekly limits reset every seven days, with separate quotas for overall usage and for Opus 4. On OpenAI’s side, the original standalone Codex API model was deprecated in 2023, and the Codex name now refers to an agent integrated into ChatGPT. This pivot reflects market consolidation around comprehensive AI platforms rather than specialized tools.
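Teams that script against these limits usually track two budgets at once: a short five-hour window and a weekly quota. Here is a minimal client-side Python sketch of that bookkeeping. It is not Anthropic’s enforcement logic, and the class name `UsageBudget` is hypothetical. The sketch also makes two assumptions: that the five-hour window is rolling, and that the weekly quota resets on a fixed seven-day boundary.

```python
from collections import deque
from datetime import datetime, timedelta

class UsageBudget:
    """Hypothetical tracker: rolling 5-hour message window plus a weekly quota."""

    def __init__(self, per_window: int, per_week: int, week_start: datetime,
                 window: timedelta = timedelta(hours=5)):
        self.per_window = per_window
        self.per_week = per_week
        self.window = window
        self.week_start = week_start
        self.week_count = 0
        self.recent: deque[datetime] = deque()

    def _roll(self, now: datetime) -> None:
        # Reset the weekly counter once seven days have elapsed (possibly several times).
        while now >= self.week_start + timedelta(days=7):
            self.week_start += timedelta(days=7)
            self.week_count = 0
        # Drop messages that have aged out of the rolling window.
        while self.recent and now - self.recent[0] >= self.window:
            self.recent.popleft()

    def try_send(self, now: datetime) -> bool:
        """Record a message and return True if both budgets allow it, else False."""
        self._roll(now)
        if len(self.recent) >= self.per_window or self.week_count >= self.per_week:
            return False
        self.recent.append(now)
        self.week_count += 1
        return True
```

Keeping the two checks separate shows why a user can be under the five-hour limit and still be blocked for the rest of the week.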

Developer Experience: Claude Code vs Codex Usability

Setup and onboarding differ significantly between the platforms. Codex offers a simpler initial setup. It states up front that it works suggestions-first, and it asks for approval before destructive operations. That transparency builds trust quickly, especially for developers new to AI coding assistants. Recently added support for stdio-based MCP (Model Context Protocol) servers expands its integration options, though support for HTTP-based MCP endpoints remains limited.
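With the stdio transport, the client launches an MCP server as a subprocess. The two sides then exchange JSON-RPC 2.0 messages over stdin and stdout, one message per line. Here is a minimal Python sketch of that framing, with an `initialize` request as the example message. The helper names and the `clientInfo` values are hypothetical. The protocol version string is one published MCP revision, so check the specification for the version your tools support.

```python
import json

def encode_stdio_message(message: dict) -> bytes:
    """Serialize one JSON-RPC message as a single newline-terminated UTF-8 line."""
    # json.dumps escapes newlines inside strings, so the only raw newline is the delimiter.
    line = json.dumps(message, separators=(",", ":"), ensure_ascii=False)
    return (line + "\n").encode("utf-8")

def decode_stdio_messages(buffer: bytes) -> tuple[list[dict], bytes]:
    """Split a byte buffer into complete messages plus any trailing partial line."""
    *complete, rest = buffer.split(b"\n")
    return [json.loads(chunk) for chunk in complete if chunk.strip()], rest

initialize_request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "initialize",
    "params": {
        "protocolVersion": "2025-06-18",
        "capabilities": {},
        "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical client
    },
}
```

Returning the partial trailing line lets a reader loop keep reading from the pipe and prepend the leftover bytes to the next chunk. A message split across two reads is then never parsed early.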

Claude Code requires more initial configuration but rewards the investment with deeper codebase understanding. Once you are familiar with it, the experience feels more sophisticated, and native MCP support enables third-party integrations. The tool excels at maintaining context across long coding sessions. It learns project structure and coding patterns, which makes its suggestions increasingly relevant over time.

Real-world usage patterns emerge from developer feedback. Teams report using Claude Code for complex refactoring tasks requiring deep understanding of interconnected systems. The tool’s ability to reason about architectural implications makes it valuable for legacy code modernization. Codex shines in rapid prototyping scenarios where multiple variations need testing quickly, leveraging cloud sandboxes to explore different approaches simultaneously.

Use Case Analysis: When to Choose Codex vs Claude Code

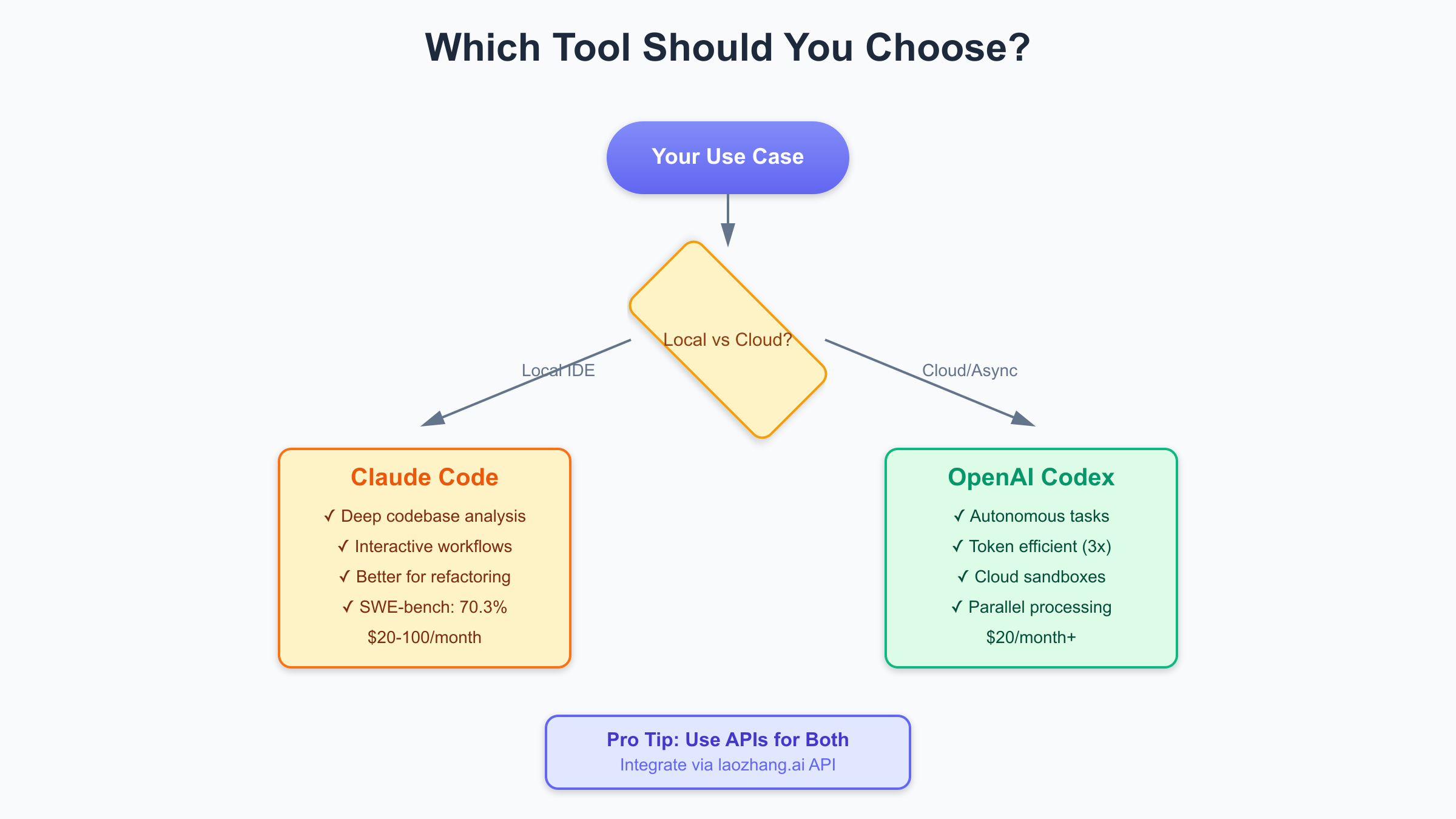

Choosing between Codex and Claude Code depends on specific project requirements and team workflows. Claude Code excels in scenarios that require deep codebase analysis and careful refactoring. Its local execution model suits enterprises with strict data governance requirements: the repository is worked on in place rather than copied into a cloud sandbox, although the code context the agent reads is still sent to the model API. Its stronger SWE-bench results make it a good fit for debugging complex multi-file issues in large applications.

Codex is the better choice for autonomous task delegation and parallel processing. Its cloud sandboxes allow safe experimentation without touching local environments, which suits testing deployment scripts or exploring breaking changes. The token-efficiency advantage matters for continuous integration pipelines that process thousands of pull requests a day. At that volume, using roughly a third of the tokens can cut operating costs substantially.
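The pattern of trying several variations side by side, each in its own isolated workspace, can be approximated locally. This Python sketch is an analogy only: it runs candidate scripts concurrently, each in a fresh temporary directory. A temporary directory gives each run a clean working directory but is not a security sandbox. Codex’s cloud sandboxes provide much stronger isolation, and this is not how they are implemented. The names `run_variant` and `explore` are hypothetical.

```python
import subprocess
import sys
import tempfile
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path

def run_variant(name: str, source: str, timeout: float = 10.0) -> tuple[str, int, str]:
    """Write one candidate script into its own temporary directory and run it there."""
    with tempfile.TemporaryDirectory(prefix=f"variant-{name}-") as workdir:
        script = Path(workdir) / "candidate.py"
        script.write_text(source, encoding="utf-8")
        proc = subprocess.run(
            [sys.executable, str(script)],
            cwd=workdir, capture_output=True, text=True, timeout=timeout,
        )
        return name, proc.returncode, proc.stdout.strip()

def explore(variants: dict[str, str]) -> dict[str, tuple[int, str]]:
    """Run all variants concurrently and collect (exit code, stdout) per variant name."""
    with ThreadPoolExecutor(max_workers=len(variants) or 1) as pool:
        results = pool.map(lambda item: run_variant(*item), variants.items())
        return {name: (code, out) for name, code, out in results}
```

Threads work well here because each worker spends its time waiting on a child process rather than running Python code.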

Hybrid approaches maximize both tools’ strengths. Development teams increasingly use Claude Code for architectural decisions and complex debugging during active development, then switch to Codex for automated testing and deployment workflows. This complementary usage pattern recognizes that different development phases benefit from different AI assistance models.
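The phase-based split described above can be written down as a small routing table. Here is a minimal Python sketch of that. The phase names and the tool assignments simply mirror the pattern in this section. The identifiers "claude-code" and "codex" are labels, not real endpoints, and `route_batch` is a hypothetical helper.

```python
from enum import Enum

class Phase(Enum):
    ARCHITECTURE = "architecture"
    DEBUGGING = "debugging"
    REFACTORING = "refactoring"
    TESTING = "testing"
    DEPLOYMENT = "deployment"

# Hypothetical mapping following the hybrid pattern: interactive work vs. automation.
ROUTES = {
    Phase.ARCHITECTURE: "claude-code",
    Phase.DEBUGGING: "claude-code",
    Phase.REFACTORING: "claude-code",
    Phase.TESTING: "codex",
    Phase.DEPLOYMENT: "codex",
}

def route_batch(tasks: list[tuple[str, Phase]]) -> dict[str, list[str]]:
    """Group (task_id, phase) pairs by the tool label they should be sent to."""
    grouped: dict[str, list[str]] = {}
    for task_id, phase in tasks:
        grouped.setdefault(ROUTES[phase], []).append(task_id)
    return grouped
```

Keeping the mapping as data rather than logic makes it easy to revise as either tool’s strengths change.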

API Integration and Third-Party Services

API integration capabilities set these platforms apart in production deployments. Both tools support programmatic access, but their approaches differ. Claude Code’s programmatic interface (its SDK and the non-interactive claude -p mode) keeps the same careful, context-aware behavior as the interactive CLI. That makes it suitable for code review workflows where subtle implications matter. It is particularly good at pairing code suggestions with detailed explanations, which is useful for automated documentation generation.

Codex’s API design prioritizes throughput and efficiency, handling high-volume requests with minimal latency. The stateless nature of API calls enables horizontal scaling across multiple instances, critical for enterprise deployments. Recent updates introduced batching capabilities, processing multiple code completion requests in parallel to maximize throughput. For teams looking to integrate AI coding assistance at scale, services like laozhang.ai provide unified API access to both platforms, eliminating the complexity of managing multiple integrations while optimizing costs through intelligent routing.
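Because each completion request is stateless, a client can fan requests out concurrently while capping how many are in flight at once. Here is a minimal asyncio sketch of that client-side pattern. It is not OpenAI’s batching feature. `complete` is a placeholder for whatever async API client call you actually use, and `fake_complete` is a hypothetical stand-in for testing.

```python
import asyncio
from typing import Awaitable, Callable

async def complete_all(
    prompts: list[str],
    complete: Callable[[str], Awaitable[str]],
    max_concurrency: int = 8,
) -> list[str]:
    """Send independent (stateless) requests concurrently, preserving input order."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def one(prompt: str) -> str:
        # At most max_concurrency requests hold the semaphore at any moment.
        async with semaphore:
            return await complete(prompt)

    # gather returns results in the order the awaitables were passed in.
    return await asyncio.gather(*(one(p) for p in prompts))

async def fake_complete(prompt: str) -> str:
    """Hypothetical stand-in for a real API client call."""
    await asyncio.sleep(0.01)
    return prompt.upper()
```

The concurrency cap keeps a large batch from tripping provider rate limits. Because the requests share no state, the same function can run unchanged on several workers for horizontal scaling.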

The ecosystem around these tools continues expanding. Third-party services now offer specialized integrations for specific languages and frameworks, building on the base capabilities of both platforms. This growing ecosystem suggests that rather than choosing one tool exclusively, the future lies in orchestrating multiple AI assistants for different aspects of the development lifecycle.

Future Outlook: The Evolution of AI Coding Assistants

Looking ahead, both platforms show clear evolution trajectories. Claude’s roadmap emphasizes longer context windows and improved multi-modal capabilities, potentially incorporating diagram understanding and UI mockup interpretation. The focus on reasoning transparency suggests future versions will provide even clearer explanations of suggested changes, critical for maintaining code quality in regulated industries.

OpenAI’s integration of Codex into the broader ChatGPT ecosystem indicates a platform play, where coding becomes one capability among many. This strategy enables novel workflows like generating code from natural language specifications, then immediately creating documentation, tests, and deployment configurations. The recent GPT-5 integration brought significant performance improvements, closing the gap with Claude on complex reasoning tasks.

The competitive landscape drives rapid innovation. Both vendors now ship significant model updates every few months, each bringing measurable gains in accuracy and efficiency. At this pace, today’s performance comparisons go stale quickly. It is better to evaluate tools on their fundamental approaches than on current benchmark scores.

Making the Right Choice for Your Team

Choosing between OpenAI Codex and Claude Code requires weighing your team’s specific needs against each platform’s strengths. Claude Code offers compelling advantages for teams that prioritize local development control and deep codebase understanding. Those advantages include the 70.3% SWE-bench result of its underlying Claude 3.7 Sonnet model and its interactive workflow. The transparent pricing model and predictable rate limits also simplify budgeting for dedicated coding assistance.

Organizations that need autonomous task processing and cloud-scale operations will find Codex’s token efficiency and parallel processing better aligned with their requirements. Its integration with ChatGPT’s broader ecosystem enables workflows beyond pure coding, from requirement analysis to deployment automation. At scale, the roughly 3x token-efficiency advantage could save thousands of dollars a month in API costs, depending on per-token prices.

The optimal strategy for many teams involves using both platforms strategically. Claude Code handles complex architectural decisions and careful refactoring during development sprints. Codex automates routine tasks, runs comprehensive test suites, and manages deployment pipelines. This dual-tool approach maximizes productivity while controlling costs, leveraging each platform’s strengths appropriately. As these tools continue evolving rapidly, maintaining flexibility to adapt your toolchain ensures you’ll benefit from ongoing innovations in AI-assisted development.