OpenClaw ships with built-in support for more than a dozen providers, including OpenAI, Anthropic, Google Gemini, and OpenRouter, but the real power emerges when you add your own. Whether you need to connect a private vLLM deployment behind your corporate firewall, route through LiteLLM for unified billing across multiple AI vendors, or integrate a new provider such as Moonshot AI's Kimi K2.5 before a release adds official support, the models.providers section of your openclaw.json file makes it possible with a few lines of JSON. This guide explains every configuration parameter, walks through tested examples ranging from cloud APIs to local inference servers, and gathers troubleshooting steps for the most common errors in one place. By the end, you will know how to add any OpenAI-compatible or Anthropic-compatible model to OpenClaw, configure multi-model routing for cost optimization, and debug configuration issues when they arise.

What Are Custom Model Providers in OpenClaw?

OpenClaw's model system divides providers into two categories. Built-in providers are part of the pi-ai catalog that ships with every installation. These include OpenAI, Anthropic, Google Gemini, Google Vertex, OpenCode Zen, Z.AI (GLM), Vercel AI Gateway, OpenRouter, xAI, Groq, Cerebras, Mistral, and GitHub Copilot. For these providers, you only need to set authentication credentials and pick a model — no additional configuration required. A single command like openclaw onboard --auth-choice openai-api-key handles the entire setup.

Custom model providers, by contrast, are anything outside this built-in catalog. They require explicit configuration through the models.providers section in your openclaw.json file. This includes cloud API services like Moonshot AI (Kimi), MiniMax, and Alibaba Model Studio. It also covers local inference runtimes like Ollama, vLLM, LM Studio, and text-generation-webui. Proxy and gateway services such as LiteLLM, which aggregate multiple providers behind a single endpoint, fall into this category as well. Enterprise deployments that expose OpenAI-compatible or Anthropic-compatible endpoints also qualify.

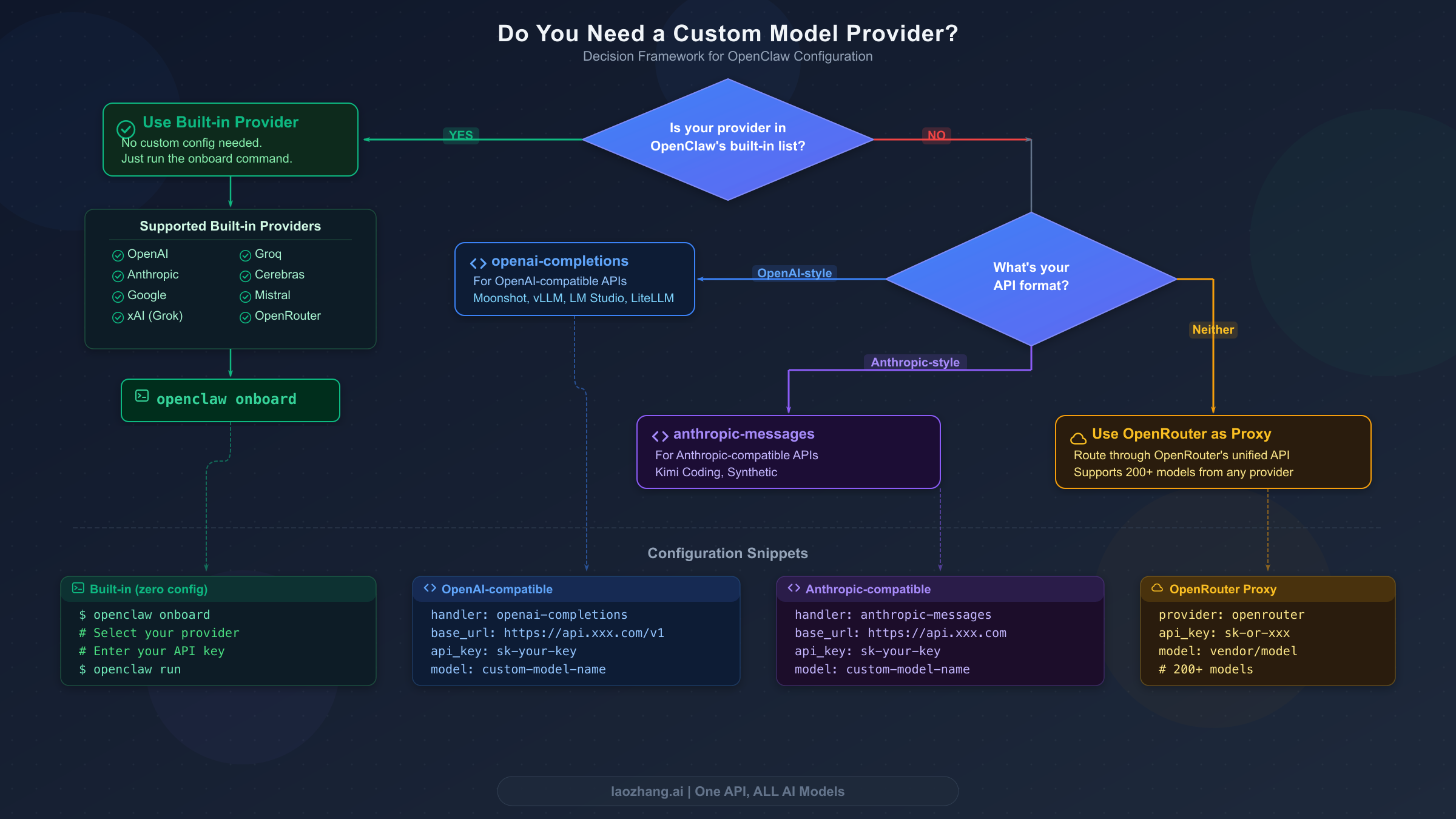

The decision about whether you need a custom provider is straightforward. If your target provider appears in the built-in list above, use the built-in configuration path — no models.providers entry needed, just authentication and model selection. If your provider exposes an OpenAI-compatible chat completions API, configure it as a custom provider with api: "openai-completions". If it uses an Anthropic-compatible messages API, use api: "anthropic-messages". If your provider uses neither format natively, route it through OpenRouter or LiteLLM as an intermediary, which converts the request format automatically. For a deeper look at initial provider setup including Ollama and cloud providers, see our complete OpenClaw LLM setup guide.

The practical impact of custom providers extends beyond simply adding new models. Teams frequently use custom configurations to connect to internal API gateways that enforce organization-wide rate limits and audit logging. Startups use them to route through cost-optimized proxy services that consolidate billing across multiple AI providers into a single invoice. Privacy-conscious developers configure local inference endpoints that ensure no data leaves their network. And early adopters use custom providers to access newly released models — like Moonshot AI's Kimi K2.5 or MiniMax M2.1 — weeks before official OpenClaw support ships in a new release. The models.providers configuration is the mechanism that makes OpenClaw truly model-agnostic rather than locked into a fixed set of supported services.

How OpenClaw's Model System Works

Understanding three core concepts will save you hours of debugging when configuring custom providers. The first concept is the model reference format. Every model in OpenClaw uses the pattern provider/model-id, where OpenClaw splits on the first forward slash to separate the provider name from the model identifier. When you type /model moonshot/kimi-k2.5 in a chat session, OpenClaw knows to route the request to the "moonshot" provider and request the "kimi-k2.5" model. For models whose IDs contain additional slashes, such as OpenRouter-style identifiers like openrouter/anthropic/claude-sonnet-4-5, the split still happens at the first slash — "openrouter" becomes the provider and "anthropic/claude-sonnet-4-5" becomes the model ID.
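The first-slash rule is easy to express in code. The Python sketch below is illustrative only, not OpenClaw's implementation; the helper name split_model_ref is hypothetical and simply mirrors the behavior described above.

```python
def split_model_ref(ref: str) -> tuple[str, str]:
    """Split a 'provider/model-id' reference on the FIRST slash only."""
    provider, sep, model_id = ref.partition("/")
    if not sep or not provider or not model_id:
        raise ValueError(f"expected 'provider/model-id', got {ref!r}")
    return provider, model_id


if __name__ == "__main__":
    for ref in ("moonshot/kimi-k2.5", "openrouter/anthropic/claude-sonnet-4-5"):
        print(ref, "->", split_model_ref(ref))
```

Because str.partition stops at the first separator, any further slashes stay inside the model ID, which is why OpenRouter-style references keep their vendor prefix.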

The second concept is model selection priority. OpenClaw resolves which model to use through a specific cascade. It starts with the primary model defined in agents.defaults.model.primary. If that model is unavailable due to authentication failure or rate limiting, it moves through the ordered fallback list in agents.defaults.model.fallbacks. If the primary model cannot accept images but the current task requires vision capabilities, OpenClaw automatically switches to the image model defined in agents.defaults.imageModel.primary. This cascade means you can configure an affordable primary model for most tasks while keeping a premium model available as a fallback for complex reasoning.
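The cascade can be modeled as an ordered search. This is a hypothetical Python sketch under simplifying assumptions: the unavailable set stands in for whatever authentication or rate-limit signal OpenClaw actually uses, vision support is reduced to a set lookup, and qwen-portal/vision-model appears only as an example image model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelDefaults:
    # Simplified stand-in for agents.defaults.model and agents.defaults.imageModel.
    primary: str
    fallbacks: list = field(default_factory=list)
    image_primary: Optional[str] = None


def resolve_model(cfg, unavailable, vision_capable, needs_vision=False):
    """Walk the primary model, then the fallbacks in order, skipping unavailable ones.

    If the task needs vision and the chosen model is not vision-capable,
    switch to the configured image model instead.
    """
    for candidate in [cfg.primary, *cfg.fallbacks]:
        if candidate in unavailable:  # e.g. authentication failure or rate limiting
            continue
        if needs_vision and candidate not in vision_capable and cfg.image_primary:
            return cfg.image_primary
        return candidate
    raise RuntimeError("no configured model is available")


if __name__ == "__main__":
    cfg = ModelDefaults(
        primary="anthropic/claude-sonnet-4-5",
        fallbacks=["moonshot/kimi-k2.5", "ollama/llama3.3"],
        image_primary="qwen-portal/vision-model",
    )
    print(resolve_model(cfg, unavailable={"anthropic/claude-sonnet-4-5"}, vision_capable=set()))
    print(resolve_model(cfg, unavailable=set(), vision_capable=set(), needs_vision=True))
```

The first call falls through to moonshot/kimi-k2.5; the second switches to the image model because the primary is not marked as vision-capable.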

The third concept is the model allowlist. When you configure agents.defaults.models in your openclaw.json, it becomes a strict allowlist. Any model not listed will be rejected with the error "Model 'provider/model' is not allowed." This behavior is intentional for enterprise environments where administrators want to control which models users can access. If you encounter this error after adding a custom provider, you need to add your new model to this allowlist. Alternatively, removing the agents.defaults.models key entirely disables allowlist enforcement and permits any configured model. The openclaw models status command shows the resolved primary model, fallbacks, image model, and authentication overview, making it the best starting point for diagnosing configuration issues.
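A minimal sketch of the allowlist semantics, assuming agents.defaults has already been parsed into a Python dict. The function check_allowed is hypothetical; only the error text is taken from the message quoted above.

```python
def check_allowed(ref, defaults):
    """Raise if `ref` is blocked by the agents.defaults.models allowlist.

    `defaults` is the already-parsed agents.defaults object. When it has no
    "models" key there is no allowlist, so every configured model passes.
    """
    allowlist = defaults.get("models")
    if allowlist is None:
        return
    if ref not in allowlist:
        raise PermissionError(f"Model '{ref}' is not allowed.")


if __name__ == "__main__":
    defaults = {"models": {"moonshot/kimi-k2.5": {"alias": "Kimi"}}}
    check_allowed("moonshot/kimi-k2.5", defaults)  # listed: passes
    try:
        check_allowed("ollama/llama3.3", defaults)
    except PermissionError as err:
        print(err)  # Model 'ollama/llama3.3' is not allowed.
    check_allowed("ollama/llama3.3", {})  # no allowlist key: passes
```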

The CLI provides a comprehensive set of commands for managing models at runtime without editing configuration files manually. The openclaw models list command displays all configured models, with the --all flag showing the full catalog and --provider <name> filtering to a specific provider. The openclaw models set <provider/model> command changes the primary model immediately, while openclaw models set-image <provider/model> configures the image-capable model used when vision input is needed. Alias management through openclaw models aliases list|add|remove lets you create shortcuts like "opus" or "kimi" that map to full provider/model references, reducing typing in daily workflows. Fallback management through openclaw models fallbacks list|add|remove|clear and openclaw models image-fallbacks list|add|remove|clear gives you complete control over the failover chain. Perhaps most useful for custom provider debugging, the openclaw models scan command inspects available models with optional live probes that test tool support and image handling capabilities in real time.

Complete models.providers Configuration Reference

The models.providers section in openclaw.json is where all custom provider definitions live. The configuration follows a specific structure with both required and optional parameters. Understanding each parameter prevents the most common configuration errors.

The top-level structure wraps provider definitions inside models.providers, with an optional mode field that controls how custom providers interact with the built-in catalog. Setting mode: "merge" combines your custom providers with the built-in ones, which is the recommended approach for most users. Without this field, custom providers replace the catalog entirely — rarely the desired behavior.
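The difference between the two behaviors can be pictured as a dictionary merge. This hypothetical Python model follows the description in this guide; in particular, the rule that a custom provider reusing a built-in name overrides the built-in entry in merge mode is an assumption of the sketch, not documented behavior.

```python
def effective_providers(builtin, custom, mode=None):
    """Return the provider table that results from combining the catalogs.

    mode="merge": built-in and custom providers coexist; a custom provider that
    reuses a built-in name replaces it here (an assumption of this sketch).
    No mode: custom providers replace the built-in catalog entirely.
    """
    if mode == "merge":
        return {**builtin, **custom}
    return dict(custom)


if __name__ == "__main__":
    builtin = {"openai": {}, "anthropic": {}}
    custom = {"moonshot": {"baseUrl": "https://api.moonshot.ai/v1"}}
    print(sorted(effective_providers(builtin, custom, mode="merge")))  # ['anthropic', 'moonshot', 'openai']
    print(sorted(effective_providers(builtin, custom)))                # ['moonshot']
```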

Each provider definition requires three parameters. The baseUrl parameter specifies the API endpoint that OpenClaw sends requests to, such as https://api.moonshot.ai/v1 for Moonshot AI or http://localhost:1234/v1 for a local LM Studio instance. The apiKey parameter handles authentication and supports environment variable references using the ${VAR_NAME} syntax, so "${MOONSHOT_API_KEY}" tells OpenClaw to read the key from the MOONSHOT_API_KEY environment variable rather than storing credentials in plain text. The api parameter declares the protocol compatibility and accepts either "openai-completions" for OpenAI-compatible endpoints or "anthropic-messages" for Anthropic-compatible endpoints.
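The ${VAR_NAME} convention behaves like ordinary environment-variable interpolation. The following Python sketch shows the idea; expand_env is a hypothetical helper, and raising an error for an unset variable (rather than substituting an empty string) is a choice made here, not a statement about how OpenClaw handles it.

```python
import os
import re

_VAR_REF = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def expand_env(value, env=None):
    """Replace each ${VAR_NAME} in `value` with that environment variable's value."""
    env = os.environ if env is None else env

    def lookup(match):
        name = match.group(1)
        if name not in env:
            # Failing loudly is this sketch's choice; it surfaces typos in variable names.
            raise KeyError(f"environment variable {name} is not set")
        return env[name]

    return _VAR_REF.sub(lookup, value)


if __name__ == "__main__":
    print(expand_env("${MOONSHOT_API_KEY}", {"MOONSHOT_API_KEY": "sk-example"}))  # sk-example
```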

The models array within each provider defines which models are available. Each model entry requires only id and name, but optional fields let you declare capabilities that OpenClaw uses for routing decisions. The reasoning field (boolean, defaults to false) indicates whether the model supports chain-of-thought reasoning. The input field (array, defaults to ["text"]) declares accepted input types — add "image" for vision-capable models. The cost object with input, output, cacheRead, and cacheWrite fields (all default to 0) enables cost tracking and optimization when you set accurate per-token prices. The contextWindow field (defaults to 200000) sets the maximum context length in tokens, and maxTokens (defaults to 8192) controls the maximum output length. While these optional fields have sensible defaults, setting explicit values that match your model's actual capabilities prevents subtle issues like context truncation or incorrect cost calculations.
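To see the defaults side by side, a model entry can be mirrored as a Python dataclass. The field names and default values come from the parameter list above; the classes themselves are a hypothetical sketch, and the camelCase attribute names are kept deliberately so that dataclasses.asdict produces keys matching openclaw.json.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Cost:
    input: float = 0
    output: float = 0
    cacheRead: float = 0
    cacheWrite: float = 0


@dataclass
class ModelEntry:
    # camelCase names on purpose, so asdict() yields openclaw.json keys.
    id: str
    name: str
    reasoning: bool = False
    input: list = field(default_factory=lambda: ["text"])
    cost: Cost = field(default_factory=Cost)
    contextWindow: int = 200000
    maxTokens: int = 8192


if __name__ == "__main__":
    # Only id and name are required; everything else falls back to the documented defaults.
    print(json.dumps(asdict(ModelEntry(id="kimi-k2.5", name="Kimi K2.5")), indent=2))
```

Printing the result makes it obvious which values you are inheriting, for example a 200000-token context window that may be larger than your model actually supports.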

Here is the complete configuration structure with all parameters annotated:

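```json5
{
  models: {
    mode: "merge", // combine with the built-in catalog
    providers: {
      "provider-name": {
        baseUrl: "https://api.example.com/v1", // required: API endpoint
        apiKey: "${API_KEY_ENV_VAR}", // required: read from an environment variable
        api: "openai-completions", // required: or "anthropic-messages"
        models: [
          {
            id: "model-id", // required
            name: "Display Name", // required
            reasoning: false, // default: false
            input: ["text"], // default: ["text"]; add "image" for vision
            cost: { input: 0, output: 0, cacheRead: 0, cacheWrite: 0 }, // default: all 0
            contextWindow: 200000, // default: 200000
            maxTokens: 8192, // default: 8192
          },
        ],
      },
    },
  },
}
```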

Choosing the correct api type is essential and depends entirely on your provider's endpoint format. Use "openai-completions" for any provider that accepts the OpenAI chat completions request format. This covers the vast majority of third-party providers, including Moonshot AI, vLLM, LM Studio, LiteLLM, Ollama, Groq-compatible endpoints, and most self-hosted inference servers. Use "anthropic-messages" only for providers that implement the Anthropic messages API format, such as Kimi Coding, Synthetic, and MiniMax. When in doubt, check whether your provider's documentation describes its API as "OpenAI-compatible" or "Anthropic-compatible": an endpoint path of /v1/chat/completions means openai-completions, while /v1/messages means anthropic-messages.

The following quick-reference table maps common providers to their correct API type, saving you from the most frequent configuration mistake:

| Provider | API Type | Base URL Pattern |
|---|---|---|
| Moonshot AI (Kimi) | openai-completions | api.moonshot.ai/v1 |
| Kimi Coding | anthropic-messages | (built-in endpoint) |
| Synthetic | anthropic-messages | api.synthetic.new/anthropic |
| MiniMax | anthropic-messages | (see /providers/minimax) |
| Alibaba DashScope | openai-completions | coding-intl.dashscope.aliyuncs.com/v1 |
| Fireworks AI | openai-completions | api.fireworks.ai/inference/v1 |
| Ollama | openai-completions | 127.0.0.1:11434/v1 |
| vLLM | openai-completions | localhost:8000/v1 |
| LM Studio | openai-completions | localhost:1234/v1 |
| LiteLLM | openai-completions | localhost:4000/v1 |
| llama.cpp server | openai-completions | localhost:8080/v1 |

Configuring Cloud and API Providers (Step-by-Step)

This section provides tested, working configurations for five cloud and API provider scenarios. Each includes the openclaw.json snippet or CLI commands it needs, along with any required environment variable; the verification steps shown in the Moonshot AI walkthrough apply equally to the others.

Moonshot AI (Kimi K2.5) uses OpenAI-compatible endpoints and offers one of the most capable reasoning models in the open ecosystem. Kimi K2.5 supports both standard and thinking modes, with model variants including kimi-k2.5, kimi-k2-thinking, and kimi-k2-turbo-preview. The configuration from the official OpenClaw documentation (docs.openclaw.ai, February 2026) specifies:

```json5
{
  agents: {
    defaults: {
      model: { primary: "moonshot/kimi-k2.5" },
    },
  },
  models: {
    mode: "merge",
    providers: {
      moonshot: {
        baseUrl: "https://api.moonshot.ai/v1",
        apiKey: "${MOONSHOT_API_KEY}",
        api: "openai-completions",
        models: [{ id: "kimi-k2.5", name: "Kimi K2.5" }],
      },
    },
  },
}
```

After saving the configuration, add export MOONSHOT_API_KEY='sk-...' to your shell profile (~/.zshrc on macOS, ~/.bashrc or ~/.bash_profile on Linux) and reload it, for example with source ~/.zshrc. Then verify the setup in two steps: run openclaw models list --provider moonshot to confirm the model appears in the available list, and run openclaw models status --probe to perform a live connectivity test that confirms the API key is valid and the model responds. Together these catch both configuration errors (wrong provider name, missing model entry) and authentication errors (invalid key, expired credentials) before you use the model in an actual session.

Synthetic (Anthropic-compatible) provides access to models like MiniMax M2.1 through an Anthropic-compatible API endpoint. This is a good example of when to use the anthropic-messages API type. The configuration follows the same structure but with the alternative API protocol:

```json5
{
  models: {
    mode: "merge",
    providers: {
      synthetic: {
        baseUrl: "https://api.synthetic.new/anthropic",
        apiKey: "${SYNTHETIC_API_KEY}",
        api: "anthropic-messages",
        models: [{ id: "hf:MiniMaxAI/MiniMax-M2.1", name: "MiniMax M2.1" }],
      },
    },
  },
}
```

Alibaba Model Studio (Qwen) requires regional endpoint selection, which affects response speed, available models, and rate limits. Three regions are available: Singapore (ap-southeast-1), Virginia (us-east-1), and Beijing (cn-beijing), each requiring separate API keys from the Model Studio Console. For international users, the OpenAI-compatible endpoint at https://coding-intl.dashscope.aliyuncs.com/v1 provides the lowest latency outside China. The configuration sets explicit contextWindow and maxTokens values to match the model; add a cost object as well if you want accurate cost tracking:

```json5
{
  models: {
    mode: "merge",
    providers: {
      dashscope: {
        baseUrl: "https://coding-intl.dashscope.aliyuncs.com/v1",
        apiKey: "${DASHSCOPE_API_KEY}",
        api: "openai-completions",
        models: [
          {
            id: "qwen3-max-2026-01-23",
            name: "Qwen3 Max",
            contextWindow: 262144,
            maxTokens: 32768,
          },
        ],
      },
    },
  },
}
```

API proxy services like laozhang.ai provide a unified endpoint that routes to multiple upstream providers through a single API key. This simplifies billing and eliminates the need to manage credentials for each provider separately. Since most proxy services implement the OpenAI-compatible format, configuration follows the standard pattern: replace the baseUrl with the proxy's endpoint and use its unified API key. For users comparing model costs across providers, see our detailed comparison of Claude Opus 4 and Sonnet 4 to understand the performance-cost tradeoffs.

Qwen OAuth (free tier) offers an authentication path that does not require API keys. Instead, Qwen provides a device-code OAuth flow through a bundled plugin. Enable the plugin and authenticate with two commands:

```bash
openclaw plugins enable qwen-portal-auth
openclaw models auth login --provider qwen-portal --set-default
```

This gives access to qwen-portal/coder-model and qwen-portal/vision-model at no cost, making it an excellent option for development and testing workflows. The OAuth-based approach means there is no API key to manage or rotate, reducing security overhead for individual developers who want to experiment with Qwen models without committing to a paid plan. Note that the free tier has usage limits appropriate for development — production workloads should use the API key-based DashScope configuration described above for higher throughput and guaranteed availability.

Connecting Local Models (Ollama, vLLM, LM Studio, LiteLLM)

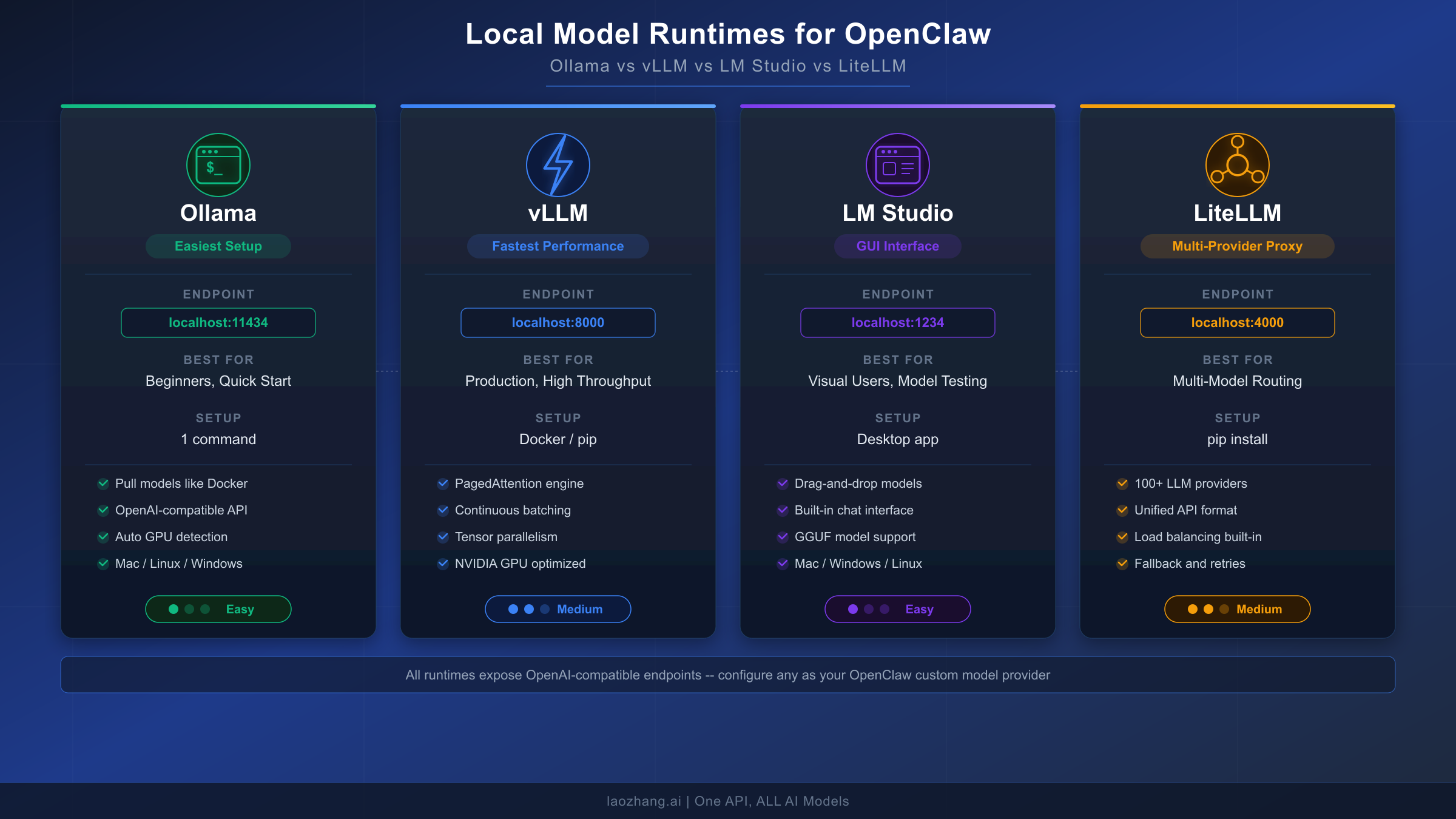

Running models locally eliminates API costs, keeps data on your machine, and removes rate limit concerns entirely. The tradeoff is hardware: local inference needs sufficient RAM and ideally a GPU. OpenClaw works with three major local inference runtimes (Ollama, vLLM, and LM Studio) plus the LiteLLM proxy, each with distinct strengths that serve different workflows.

Ollama is the fastest path to local inference and the only runtime that OpenClaw auto-detects. When Ollama is running at http://127.0.0.1:11434/v1, OpenClaw discovers it without any models.providers configuration needed. Install Ollama, pull a model with ollama pull llama3.3, and set it as primary:

```json5
{
  agents: {
    defaults: {
      model: { primary: "ollama/llama3.3" },
    },
  },
}
```

For models that benefit from extended context or specific quantization, Ollama's model library provides dozens of options. The recommended models for OpenClaw coding tasks include qwen3-coder for code generation and glm-4.7 for general reasoning, both requiring a context window of at least 64K tokens for effective agent operation. When choosing between model sizes, weigh task complexity against your hardware: an 8B parameter model handles quick completions and simple queries well on modest hardware, while 70B models approach cloud-quality reasoning but demand significantly more VRAM. The Q4_K_M quantization format offers the best balance between quality retention and memory savings for most local deployments. It reduces weight memory by roughly 70% compared to 16-bit weights while preserving 95% or more of the original model's capability on coding benchmarks.

vLLM targets production deployments where throughput matters. Through continuous batching and PagedAttention it delivers up to 24x higher throughput than Hugging Face Transformers, making it ideal for multi-agent deployments that generate hundreds of requests per minute. The default endpoint is http://localhost:8000/v1, and it uses the standard OpenAI-compatible format:

```json5
{
  models: {
    mode: "merge",
    providers: {
      vllm: {
        baseUrl: "http://localhost:8000/v1",
        apiKey: "not-needed",
        api: "openai-completions",
        models: [
          {
            id: "meta-llama/Llama-3.1-70B-Instruct",
            name: "Llama 3.1 70B",
            contextWindow: 131072,
            maxTokens: 16384,
          },
        ],
      },
    },
  },
}
```

One critical detail for Docker deployments: inside a container, localhost refers to the container itself. When vLLM and OpenClaw run in separate containers, replace localhost with host.docker.internal (Docker Desktop) or the host machine's LAN IP address. The --network=host flag is an alternative but less portable solution. Continuous batching is always on in vLLM; for production deployments you can also enable chunked prefill with --enable-chunked-prefill (already the default in recent vLLM versions) and set --max-model-len to match your OpenClaw context requirements. vLLM refuses to start if its KV cache cannot hold one sequence of that length, so lowering the value from the model's maximum of 131072 to 65536 halves that per-sequence KV-cache requirement while still covering OpenClaw's typical context needs.
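The effect of --max-model-len on memory is easiest to see through KV-cache arithmetic. The sketch below assumes Llama 3.1 70B's published architecture (80 layers, 8 key-value heads, head dimension 128) and a 16-bit cache; it estimates the cache for one full-length sequence only and ignores weights and activations.

```python
GIB = 1024 ** 3


def kv_cache_bytes(tokens, layers=80, kv_heads=8, head_dim=128, bytes_per_value=2):
    """KV-cache size for one sequence: a key and a value vector per layer, KV head, and token.

    Defaults assume Llama 3.1 70B (80 layers, 8 KV heads via grouped-query attention,
    head dimension 128) with a 16-bit (2-byte) cache.
    """
    return 2 * layers * kv_heads * head_dim * bytes_per_value * tokens


if __name__ == "__main__":
    for max_len in (131072, 65536):
        print(f"--max-model-len {max_len}: {kv_cache_bytes(max_len) / GIB:.0f} GiB per full-length sequence")
```

Under these assumptions the script reports 40 GiB at 131072 tokens and 20 GiB at 65536. Halving the context length halves this figure, while the model weights themselves are unaffected by the flag.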

LM Studio provides a desktop GUI for downloading, converting, and serving models. Its visual interface makes it the best choice for users who want to experiment with different models before committing to a configuration. The official OpenClaw documentation (docs.openclaw.ai, February 2026) provides this configuration template:

```json5
{
  agents: {
    defaults: {
      model: { primary: "lmstudio/minimax-m2.1-gs32" },
      models: {
        "lmstudio/minimax-m2.1-gs32": { alias: "Minimax" },
      },
    },
  },
  models: {
    providers: {
      lmstudio: {
        baseUrl: "http://localhost:1234/v1",
        apiKey: "LMSTUDIO_KEY",
        api: "openai-completions",
        models: [
          {
            id: "minimax-m2.1-gs32",
            name: "MiniMax M2.1",
            reasoning: false,
            input: ["text"],
            cost: { input: 0, output: 0, cacheRead: 0, cacheWrite: 0 },
            contextWindow: 200000,
            maxTokens: 8192,
          },
        ],
      },
    },
  },
}
```

LiteLLM serves a different purpose than the other three runtimes. Rather than running inference locally, it acts as a proxy that presents a unified OpenAI-compatible interface to multiple upstream providers. This makes it powerful for multi-model routing scenarios where you want to switch between cloud and local models through a single endpoint at http://localhost:4000/v1. Configure it with the same openai-completions API type and define each model that your LiteLLM instance serves. The particular advantage of LiteLLM in an OpenClaw setup is load balancing and rate limit management across multiple provider accounts. If you have API keys for both OpenAI and Anthropic, LiteLLM can automatically route requests to whichever provider has available capacity, effectively doubling your throughput ceiling without any changes to your OpenClaw configuration beyond pointing to the LiteLLM proxy endpoint.
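Because a LiteLLM proxy exposes the standard OpenAI list-models endpoint (GET /v1/models, which returns an object whose data array holds entries with an id), you can generate the models array instead of typing it by hand. This Python sketch is a hypothetical helper, not an OpenClaw feature; the LITELLM_API_KEY variable name and the fast-model and smart-model IDs are placeholders.

```python
import json
import urllib.request


def fetch_models(base_url, api_key, timeout=10.0):
    """GET <base_url>/models from an OpenAI-compatible server such as a LiteLLM proxy."""
    request = urllib.request.Request(
        base_url.rstrip("/") + "/models",
        headers={"Authorization": f"Bearer {api_key}"},
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


def provider_block(models_payload, base_url="http://localhost:4000/v1", key_var="LITELLM_API_KEY"):
    """Build a models.providers entry listing every model id the proxy reports."""
    return {
        "baseUrl": base_url,
        "apiKey": "${" + key_var + "}",
        "api": "openai-completions",
        # Display names default to the raw id; edit them by hand afterwards.
        "models": [{"id": m["id"], "name": m["id"]} for m in models_payload.get("data", [])],
    }


if __name__ == "__main__":
    # Offline demo with a response shaped like the OpenAI list-models endpoint.
    sample = {"object": "list", "data": [{"id": "fast-model"}, {"id": "smart-model"}]}
    print(json.dumps({"litellm": provider_block(sample)}, indent=2))
```

Against a running proxy, pass the result of fetch_models("http://localhost:4000/v1", key) to provider_block instead of the sample. The printed JSON is also valid JSON5, so it can be pasted under models.providers.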

The following table summarizes the key differences between these three runtimes and the LiteLLM proxy to help you choose the right one for your workflow:

| Feature | Ollama | vLLM | LM Studio | LiteLLM |
|---|---|---|---|---|
| Default Endpoint | localhost:11434 | localhost:8000 | localhost:1234 | localhost:4000 |
| Setup Difficulty | 1 command | Docker/pip | Desktop app | pip install |
| Auto-Detection | Yes | No | No | No |
| Best For | Quick start, development | Production, high throughput | Model experimentation | Multi-provider routing |
| GPU Required | Optional (CPU works) | Recommended | Optional | N/A (proxy only) |
| Tool Calling | Model-dependent | Model-dependent | Model-dependent | Passthrough |

The hardware requirements vary significantly across model sizes. An 8B parameter model like Llama 3.1 8B or Mistral 7B runs comfortably on 8GB VRAM (RTX 3060). A 34B model requires 20-24GB VRAM (RTX 3090 or RTX 4090) with quantization. The 70B models demand 40-48GB VRAM (A100 40GB or dual GPU setups) for acceptable inference speed. For Mac users, Apple's M4 chips with unified memory provide a cost-effective path: a Mac Mini M4 with 16GB handles 7-8B models at 15-20 tokens per second (marc0.dev, February 2026).
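A quick way to sanity-check these tiers is to estimate weight memory from parameter count and bits per weight. The sketch below is a rule of thumb, not a measurement; the 4.8 bits-per-weight figure for Q4_K_M is an approximate average that varies by model, and the KV cache plus runtime overhead come on top.

```python
def weight_memory_gb(params_billion, bits_per_weight):
    """Approximate memory for the weights alone, in GB (10**9 bytes)."""
    return params_billion * bits_per_weight / 8


Q4_K_M_BITS = 4.8  # approximate average; the exact figure varies by model

if __name__ == "__main__":
    for size in (8, 34, 70):
        print(f"{size}B: {weight_memory_gb(size, 16):.1f} GB at 16-bit, "
              f"{weight_memory_gb(size, Q4_K_M_BITS):.1f} GB at ~Q4_K_M")
```

The quantized estimates (about 4.8, 20.4, and 42 GB) line up with the VRAM tiers above once you leave headroom for the KV cache and runtime overhead.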

Advanced Multi-Model Routing and Security

The real power of custom providers emerges when you combine them with OpenClaw's routing and failover capabilities. Instead of relying on a single model, you can design a model stack that optimizes for cost, performance, and reliability simultaneously.

A practical three-tier routing configuration assigns models based on task complexity. Use a premium model like anthropic/claude-opus-4-6 for complex reasoning and architecture decisions — Claude Opus 4.5 costs $15/$75 per million input/output tokens (haimaker.ai, February 2026) but handles multi-file edits and complex debugging better than any alternative. Route daily coding work to a mid-tier model like moonshot/kimi-k2.5 or anthropic/claude-sonnet-4-5 at $3/$15 per million tokens, which covers the vast majority of code generation and review tasks. Assign heartbeat checks, file lookups, and simple completions to budget models like google/gemini-3-flash-lite at $0.50 per million tokens or DeepSeek V3.2 at $0.53 per million tokens — representing a 60x cost reduction compared to Opus for routine operations (velvetshark.com, February 2026). The cost savings compound quickly: light users report approximately 65% monthly savings (~$130/month saved), while power users implementing full three-tier routing save upward of $600/month compared to single-model configurations. The fallback chain configuration ensures continuous operation even when individual providers experience outages:

```json5
{
  agents: {
    defaults: {
      model: {
        primary: "anthropic/claude-sonnet-4-5",
        fallbacks: [
          "moonshot/kimi-k2.5",
          "openai/gpt-5.1-codex",
          "ollama/llama3.3",
        ],
      },
    },
  },
}
```

This cascade means that if Anthropic hits rate limits, requests automatically fall through to Moonshot, then OpenAI, then your local Ollama instance, so your agent keeps working as long as any provider in the chain is reachable. For teams pursuing token management and cost optimization strategies, this approach delivers 50-65% cost savings compared to running everything on a single premium model.
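The tier prices quoted earlier in this section make the routing math easy to check. This Python sketch uses those per-million-token figures; applying the budget tier's single $0.50 figure to both input and output, the 20M-input/4M-output monthly workload, and the 10/60/30 routing split are all assumptions for illustration.

```python
# (input, output) prices in USD per million tokens, taken from the figures quoted above.
# The budget tier's single $0.50 figure is applied to both input and output (an assumption).
TIER_PRICES = {
    "premium": (15.00, 75.00),  # e.g. anthropic/claude-opus-4-6
    "mid": (3.00, 15.00),       # e.g. anthropic/claude-sonnet-4-5
    "budget": (0.50, 0.50),     # e.g. google/gemini-3-flash-lite
}


def cost_usd(input_tokens, output_tokens, tier):
    """Cost of a workload if every token goes to one tier."""
    price_in, price_out = TIER_PRICES[tier]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


def blended_cost_usd(input_tokens, output_tokens, shares):
    """Cost when `shares` (tier -> fraction of traffic, summing to 1) spreads work across tiers."""
    return sum(share * cost_usd(input_tokens, output_tokens, tier) for tier, share in shares.items())


if __name__ == "__main__":
    monthly_in, monthly_out = 20_000_000, 4_000_000  # hypothetical workload
    for tier in TIER_PRICES:
        print(f"all {tier}: ${cost_usd(monthly_in, monthly_out, tier):,.2f}")
    mix = {"premium": 0.1, "mid": 0.6, "budget": 0.3}  # hypothetical routing split
    print(f"blended: ${blended_cost_usd(monthly_in, monthly_out, mix):,.2f}")
```

For this made-up workload the script reports $600.00 if everything runs on the premium tier and $135.60 for the blended split; plug in your own token counts and routing shares to estimate real savings.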

Per-agent model overrides extend the routing model further. The agents.list[].model configuration allows assigning specific models to individual agents within the OpenClaw ecosystem, so a code review agent might use Opus for thoroughness while a file search agent uses a local model for speed. This granular control over model assignment per task type is what separates basic configuration from a truly optimized multi-model setup.
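How an override takes precedence over the default can be sketched in a few lines of Python. The shape of agents.list entries is an assumption here: each entry is taken to have an id and an optional model given either as a string or as an object with a primary key, and the agent IDs are hypothetical.

```python
def model_for_agent(config, agent_id):
    """Return the agent's own model if it has one, else agents.defaults.model.primary."""
    agents = config.get("agents", {})
    for agent in agents.get("list", []):
        if agent.get("id") != agent_id:
            continue
        override = agent.get("model")
        if isinstance(override, dict):  # assumed object form: {"primary": ...}
            override = override.get("primary")
        if override:
            return override
    return agents.get("defaults", {}).get("model", {}).get("primary")


if __name__ == "__main__":
    config = {
        "agents": {
            "defaults": {"model": {"primary": "anthropic/claude-sonnet-4-5"}},
            "list": [
                {"id": "code-review", "model": "anthropic/claude-opus-4-6"},
                {"id": "file-search", "model": {"primary": "ollama/llama3.3"}},
                {"id": "writer"},
            ],
        }
    }
    for agent_id in ("code-review", "file-search", "writer"):
        print(agent_id, "->", model_for_agent(config, agent_id))
```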

Security deserves deliberate attention when configuring custom providers. The most important practice is storing API keys in environment variables rather than directly in openclaw.json. Use the ${VAR_NAME} syntax consistently so credentials never appear in configuration files that might be committed to version control. For enterprise deployments, the model allowlist provides access control — set agents.defaults.models to explicitly list permitted models, and any unlisted model will be rejected. The openclaw models status command shows authentication status for all configured providers, helping you verify that credentials are correctly loaded without exposing the actual key values. When using laozhang.ai or similar proxy services, a single API key replaces multiple provider credentials, reducing the credential management surface while providing access to dozens of upstream models through one unified endpoint.

For teams sharing an OpenClaw configuration, consider separating the authentication credentials from the structural configuration entirely. Store the openclaw.json in version control with ${VAR_NAME} placeholders for all API keys, and distribute actual credentials through a secrets manager or team password vault. This pattern ensures that new team members can clone the repository and start working by simply setting their environment variables, without the risk of credentials appearing in git history. The model allowlist in agents.defaults.models adds another layer of governance by restricting which models team members can activate, preventing accidental use of expensive premium models for tasks that a budget model handles equally well. Combine this with the cost tracking enabled by accurate cost values in your model definitions, and you have visibility into per-model spending across the entire team without relying on external monitoring tools.
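A small pre-commit style check helps enforce the placeholder convention. The Python sketch below is hypothetical tooling, not part of OpenClaw: it walks an already-parsed configuration and reports every apiKey that is not a ${VAR} placeholder. Reading openclaw.json itself requires a JSON5-capable parser, since the standard json module rejects comments and unquoted keys. Dummy values for local servers, such as "not-needed", will be flagged too and can simply be reviewed and ignored.

```python
import re

PLACEHOLDER = re.compile(r"\$\{[A-Za-z_][A-Za-z0-9_]*\}")


def literal_api_keys(node, path=""):
    """Yield the dotted path of every apiKey whose value is not a ${VAR} placeholder."""
    if isinstance(node, dict):
        for key, value in node.items():
            child = f"{path}.{key}" if path else key
            if key == "apiKey" and isinstance(value, str):
                if not PLACEHOLDER.fullmatch(value):
                    yield child
            else:
                yield from literal_api_keys(value, child)
    elif isinstance(node, list):
        for index, item in enumerate(node):
            yield from literal_api_keys(item, f"{path}[{index}]")


if __name__ == "__main__":
    config = {
        "models": {
            "providers": {
                "moonshot": {"apiKey": "${MOONSHOT_API_KEY}"},
                "lmstudio": {"apiKey": "LMSTUDIO_KEY"},
            }
        }
    }
    for hit in literal_api_keys(config):
        print("review:", hit)  # review: models.providers.lmstudio.apiKey
```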

Troubleshooting Custom Model Issues

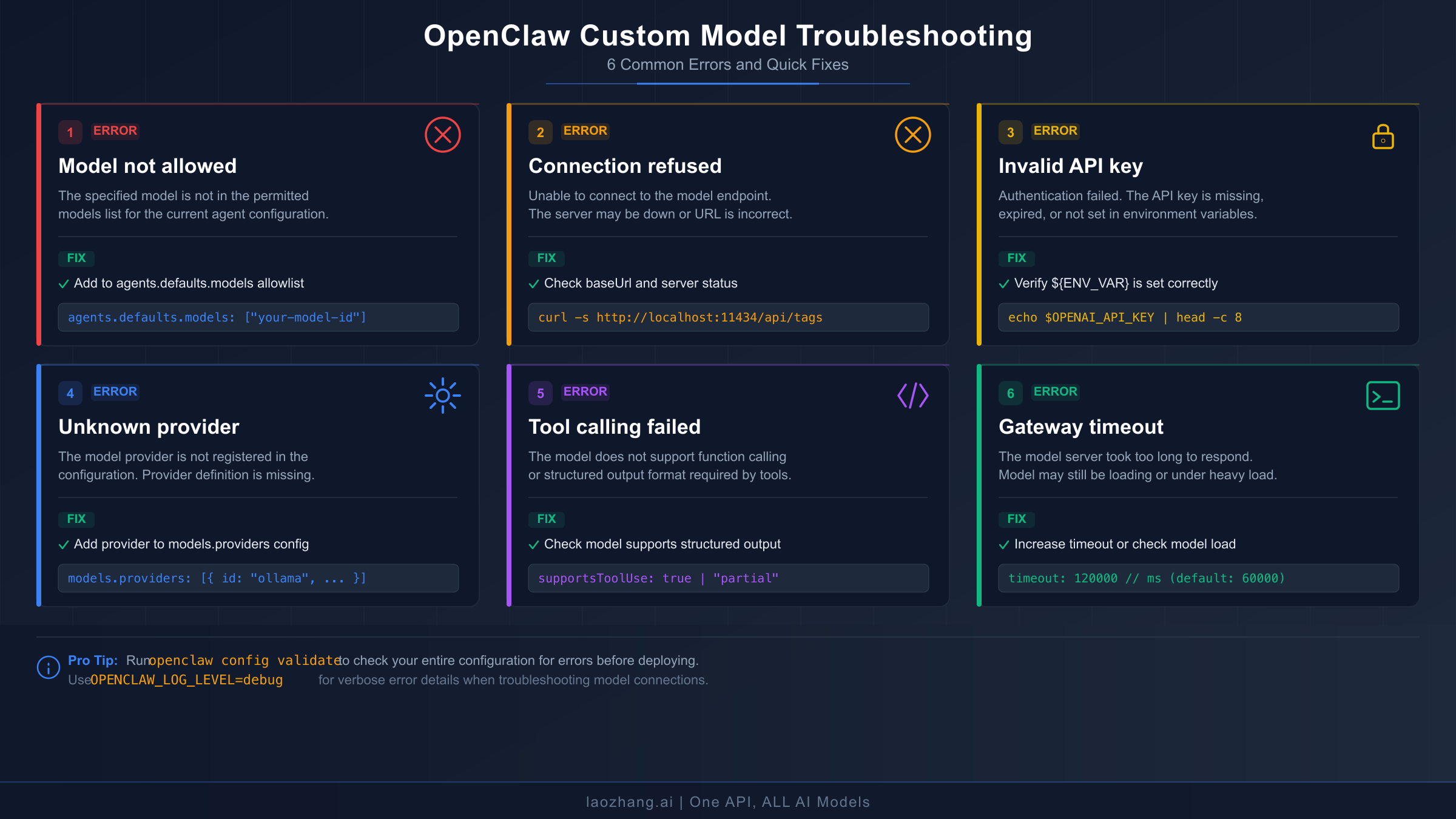

Custom model configurations introduce failure modes that differ from built-in providers. The following covers the most common errors, their root causes, and the exact steps to resolve them. Start every debugging session with openclaw models status --probe, which performs live connectivity testing against all configured providers and surfaces authentication, routing, and connection issues in a single output.

"Model 'provider/model' is not allowed" appears when a model allowlist is active and your custom model is not included. This is the most frequently reported issue when adding new providers. The fix requires adding your model to the allowlist in agents.defaults.models:

```json5
{
  agents: {
    defaults: {
      models: {
        "moonshot/kimi-k2.5": { alias: "Kimi" },
        // ... add your custom model here
      },
    },
  },
}
```

Alternatively, remove the agents.defaults.models key entirely to disable the allowlist. This is appropriate for personal setups but not recommended for team environments where model access should be controlled.

"Connection refused" or "ECONNREFUSED" occurs when OpenClaw cannot reach the configured baseUrl. For local runtimes, verify the server is actually running — curl http://localhost:11434/v1/models for Ollama, curl http://localhost:1234/v1/models for LM Studio, curl http://localhost:8000/v1/models for vLLM. If the curl command also fails, the inference server is not running or is listening on a different port. For Docker deployments, remember that localhost inside a container refers to the container itself, not the host machine — use host.docker.internal or the host's IP address instead.

"Invalid API key" or "401 Unauthorized" means the authentication credential is missing or incorrect. First verify the environment variable is set with echo $YOUR_API_KEY_VAR — an empty response means the variable is not exported in your current shell session. Ensure the export statement is in ~/.zshrc or ~/.bash_profile and that you have sourced the file after editing. If using the ${VAR_NAME} syntax in openclaw.json, confirm the variable name matches exactly — ${MOONSHOT_API_KEY} requires export MOONSHOT_API_KEY='sk-...', not MOONSHOT_KEY or moonshot_api_key. For more detailed API key debugging, see our OpenClaw API key authentication error guide.

"Unknown provider" or "Provider not found" typically means the provider name in your model reference does not match any built-in or custom provider definition. Check that the provider key in models.providers matches exactly what you use in the model reference — if you define providers: { "my-llm": { ... } }, the model reference must be my-llm/model-id, not myllm/model-id or myLLM/model-id.

"Tool calling failed" or "Structured output not supported" indicates the model does not support the function calling format that OpenClaw uses for tool interactions. Not all models support structured tool calls — only models specifically trained for function calling (such as Llama 3.1, Mistral with function calling, GPT variants, and Claude variants) work reliably with OpenClaw's tool system. Set reasoning: false in the model definition for models that lack this capability, and consider using them only for simple completion tasks rather than as primary agent models. The openclaw models status --probe command tests tool support directly.

"Gateway timeout" or slow responses point to either network latency or an overloaded inference server. For local models, verify the model is fully loaded in memory — the first request after loading a large model can take 30-60 seconds. Increase the gateway timeout in your configuration if needed. For cloud providers experiencing consistent rate limiting, configure fallbacks to distribute load across providers. Our rate limit troubleshooting guide covers specific strategies for handling 429 errors across different providers.

Configuration syntax errors are particularly insidious because they can stop the entire gateway from starting. OpenClaw's configuration is strictly validated: unbalanced braces, missing quotes around string values, incorrect nesting, or unrecognized fields can cause the gateway to fail or the malformed provider definition to be rejected. As the Alibaba Cloud Community (February 2026) puts it, "Moltbot [OpenClaw] configuration is strictly validated. Incorrect or extra fields may cause the Gateway to fail." The openclaw doctor command provides a comprehensive diagnostic that checks configuration file syntax, provider connectivity, model availability, and authentication status. Run it whenever a configuration change produces unexpected behavior; it catches issues that manual inspection often misses. A useful debugging pattern is to start with the minimal configuration (baseUrl, apiKey, api, and a single model with just id and name), verify it works with openclaw models list --provider yourprovider, and then incrementally add optional fields like cost, contextWindow, and reasoning, testing after each addition.
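The minimal-configuration debugging pattern pairs well with a quick structural check before each restart. This Python sketch is hypothetical tooling: it validates one already-parsed provider entry against the required keys and api values described in this guide, and flags model fields outside the ones this guide covers. That known-field list is an assumption, since the real schema may accept more; LMSTUDIO_API_KEY is likewise a placeholder name.

```python
REQUIRED_PROVIDER_KEYS = ("baseUrl", "apiKey", "api", "models")
API_TYPES = {"openai-completions", "anthropic-messages"}
# Only the fields covered in this guide; the real schema may accept more.
KNOWN_MODEL_KEYS = {"id", "name", "reasoning", "input", "cost", "contextWindow", "maxTokens"}


def provider_problems(name, provider):
    """Return human-readable problems found in one models.providers entry."""
    problems = [f"{name}: missing '{key}'" for key in REQUIRED_PROVIDER_KEYS if key not in provider]
    if "api" in provider and provider["api"] not in API_TYPES:
        problems.append(f"{name}: api must be one of {sorted(API_TYPES)}")
    for i, model in enumerate(provider.get("models", [])):
        problems += [f"{name}.models[{i}]: missing '{key}'" for key in ("id", "name") if key not in model]
        unknown = sorted(set(model) - KNOWN_MODEL_KEYS)
        if unknown:
            problems.append(f"{name}.models[{i}]: unrecognized fields {unknown}")
    return problems


if __name__ == "__main__":
    minimal = {
        "baseUrl": "http://localhost:1234/v1",
        "apiKey": "${LMSTUDIO_API_KEY}",
        "api": "openai-completions",
        "models": [{"id": "minimax-m2.1-gs32", "name": "MiniMax M2.1"}],
    }
    print(provider_problems("lmstudio", minimal))  # []
    minimal["models"][0]["contextwindow"] = 200000  # typo: lowercase w
    print(provider_problems("lmstudio", minimal))
```

The second call reports the misspelled contextwindow field, exactly the kind of extra field that strict validation rejects.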

When nothing else works, the three-step diagnostic sequence resolves the majority of remaining issues. First, run openclaw models status --probe to test connectivity and authentication for all providers. Second, check the gateway logs for specific error messages that the CLI might not surface. Third, test the provider endpoint directly with curl — curl -X POST http://your-endpoint/v1/chat/completions -H "Authorization: Bearer YOUR_KEY" -H "Content-Type: application/json" -d '{"model":"model-id","messages":[{"role":"user","content":"test"}]}' — to isolate whether the problem is in OpenClaw's configuration or in the provider itself.
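If curl is not available, or you want the check in a script, the same request can be built with Python's standard library. This sketch covers openai-completions providers only; chat_request and send are hypothetical helper names, and base_url is the provider's baseUrl including its /v1 suffix, as in openclaw.json.

```python
import json
import urllib.request


def chat_request(base_url, api_key, model_id, prompt="test"):
    """Build the same request as the curl command: POST <baseUrl>/chat/completions."""
    body = {"model": model_id, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(request, timeout=60.0):
    """Send the request and return the first choice's text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)["choices"][0]["message"]["content"]
```

For example, send(chat_request("http://localhost:8000/v1", "not-needed", "meta-llama/Llama-3.1-70B-Instruct")) should return the model's reply. If the provider rejects the request, urlopen raises urllib.error.HTTPError, whose read() method returns the error body, which is often more specific than what the CLI surfaces.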

Frequently Asked Questions

Can I use a fine-tuned model with OpenClaw? Yes, as long as the model is served through an OpenAI-compatible or Anthropic-compatible API endpoint. Fine-tuned models hosted on vLLM, Ollama (via custom Modelfiles), or any inference server that exposes a standard chat completions endpoint work with the models.providers configuration. Set the id field to the exact model identifier your server expects, and configure contextWindow and maxTokens to match the fine-tuned model's actual capabilities.

How do I switch between custom models during a chat session? Use the /model command followed by the full provider/model reference. For example, /model moonshot/kimi-k2.5 switches to Kimi K2.5, and /model ollama/llama3.3 switches to local Llama. The /model list command shows all available models, and /model status displays the current selection. No restart is required — the switch takes effect on the next message.

What happens if my custom provider goes down during a task? OpenClaw's failover system activates automatically. If you have configured agents.defaults.model.fallbacks, requests route to the next available provider in the chain. Without fallbacks, the current request fails and you can manually switch models with /model. For production reliability, always configure at least two fallback models from different providers.

Is there a limit to how many custom providers I can add? There is no hard limit on the number of providers in models.providers. Practical considerations include memory usage from maintaining connection pools and the complexity of managing many API keys. Most users find that three to five custom providers — covering a mix of cloud APIs, a local runtime, and a proxy service — provide sufficient flexibility without management overhead.

Does models.providers work with the OpenClaw Web UI and mobile apps? Yes. The models.providers configuration in openclaw.json applies globally across all OpenClaw interfaces, including the CLI, Web UI (accessible via openclaw dashboard), and any connected chat platforms like Discord or Telegram. The dashboard at http://127.0.0.1:18789/ provides a visual way to test custom providers before deploying them to production channels. This means you can configure a custom provider once in the JSON file and immediately use it from any interface, including programmatic access through the gateway API. Changes to the configuration take effect after restarting the gateway with openclaw gateway restart — there is no need to reinstall or re-onboard when adding new providers to an existing setup.