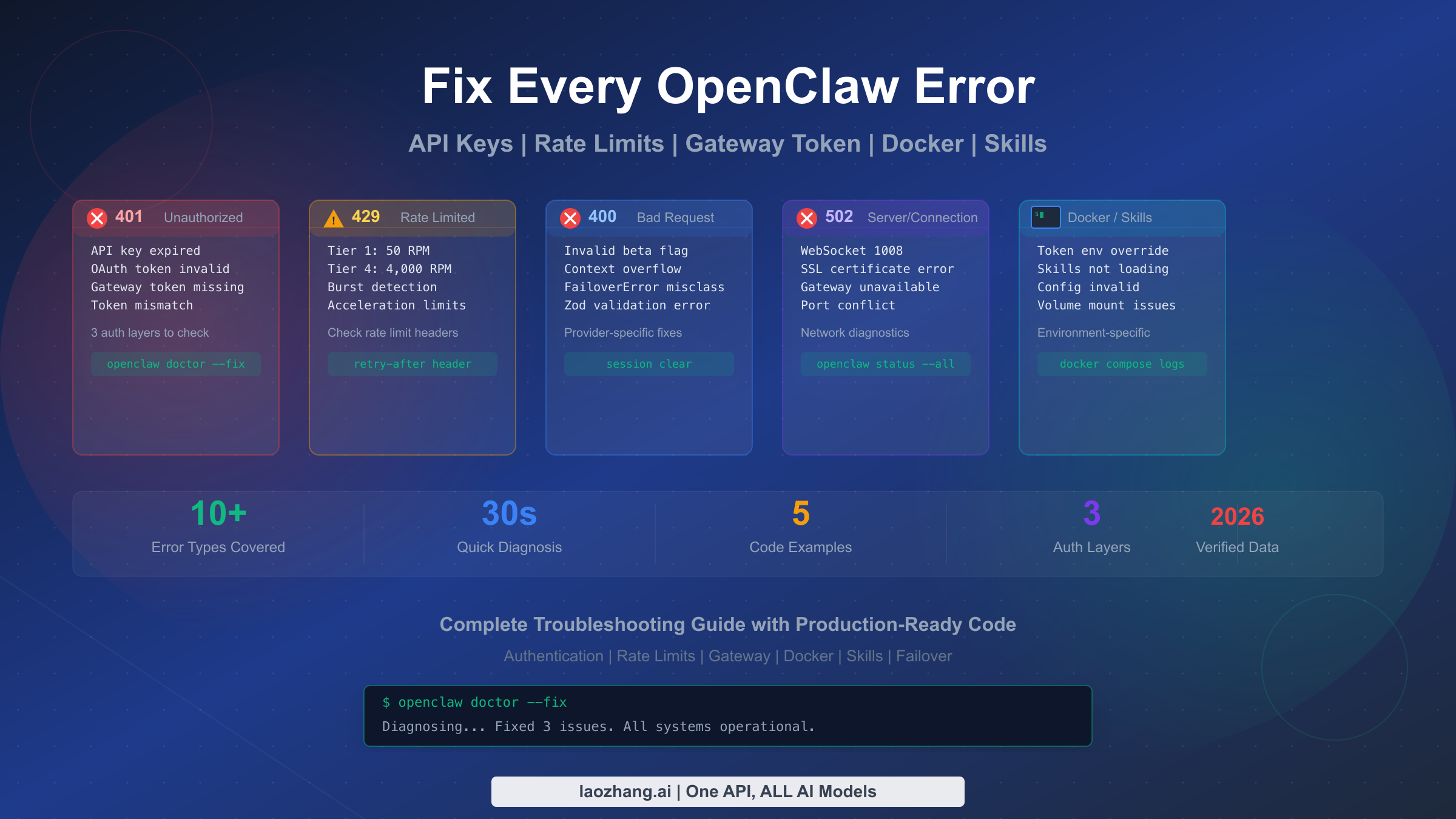

OpenClaw errors like 401 Unauthorized, 429 Rate Limit Exceeded, and gateway token mismatch share a common first step: run openclaw doctor --fix, which automatically repairs structural configuration problems such as malformed JSON, missing required fields, and incorrect file permissions. This guide covers the most common OpenClaw errors with step-by-step diagnosis, fixes, and retry and failover code for handling failures in your applications. Whether you're dealing with authentication failures in a fresh installation, rate limiting on a production deployment, or the notoriously tricky Docker environment variable override, you'll find the relevant fix here.

TL;DR

Most OpenClaw errors fall into five categories, each with a distinct first-response action. For 401 authentication errors, verify your API key format starts with sk-ant-api03- and run openclaw models status to check credential validity. For 429 rate limit errors, check your Anthropic tier level and implement exponential backoff with the retry-after header. For 400 bad request errors like invalid beta flags, confirm your provider supports the beta feature you're requesting. For connection errors, check the Anthropic status page when you see 502 Bad Gateway, and regenerate your gateway token with openclaw models auth setup-token when you see 1008 token mismatch. For Docker-specific issues, check whether the OPENCLAW_GATEWAY_TOKEN environment variable is silently overriding your configuration. The universal diagnostic command openclaw status --all usually reveals the root cause within seconds.

Quick Diagnosis — Identify Your Error in 30 Seconds

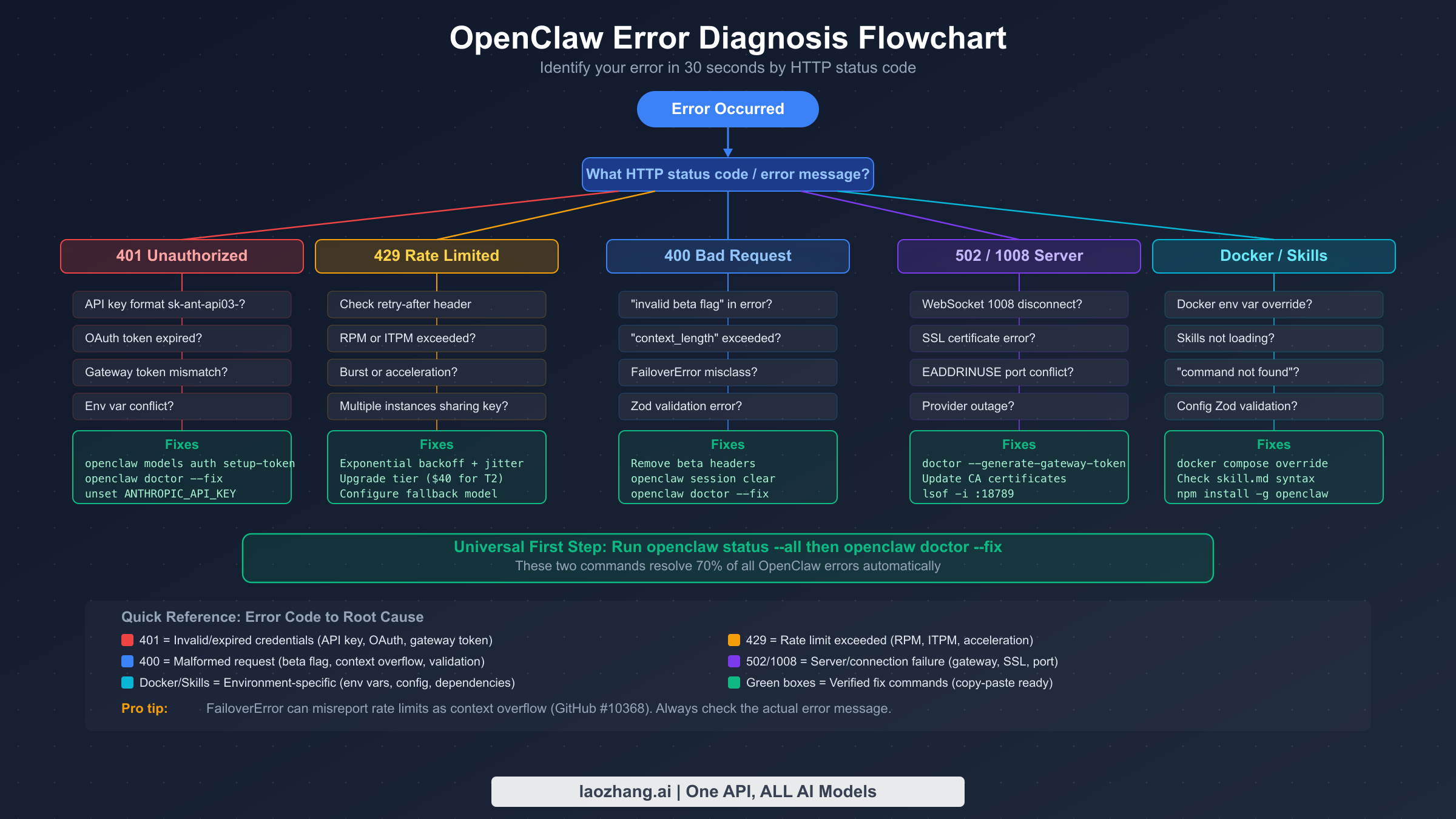

Every OpenClaw error carries a specific HTTP status code or error message that points directly to its root cause. Rather than guessing which fix to try, you can identify your exact problem in under 30 seconds by matching your error output against these diagnostic patterns. This systematic approach prevents the common mistake of applying the wrong solution—like regenerating API keys when the real problem is a rate limit, or adjusting environment variables when the issue is a corrupted gateway token.

Start with the universal diagnostic command that OpenClaw provides for exactly this purpose. Running openclaw status --all produces a complete, credential-safe report of your entire configuration state, including provider status, authentication health, gateway connectivity, and any active error conditions. This single command replaces the need to manually check individual configuration files and is safe to share when seeking help—it redacts sensitive values automatically.

For a faster check focused specifically on authentication, use openclaw models status. This command tests each configured provider's credentials in real time and reports their validity. You'll see clear indicators like "valid," "invalid bearer token," or "no auth configured" for each provider. When the output shows "all in cooldown" for a provider, your credentials might be perfectly fine—OpenClaw has temporarily blocked requests due to repeated failures, which is a protective mechanism rather than a credential problem.

The automated repair tool openclaw doctor --fix handles structural configuration issues like malformed JSON, missing required fields, and incorrect file permissions. It cannot generate new API keys or fix expired credentials, but it resolves a surprising number of first-time setup problems. Always run this command before manual troubleshooting—if it fixes your issue, you've saved significant time.

| Error Pattern | HTTP Code | Category | Jump To |
|---|---|---|---|
| "authentication_error: Invalid bearer token" | 401 | Authentication | Authentication Errors (401) |
| "No API key found for provider" | 401 | Authentication | Authentication Errors (401) |
| "OAuth token refresh failed" | 401 | Authentication | Authentication Errors (401) |
| "rate_limit_error" or "429 Too Many Requests" | 429 | Rate Limiting | Rate Limiting (429) |
| "overloaded_error: Overloaded" | 529 | Rate Limiting | Rate Limiting (429) |
| "invalid_request_error: Invalid beta flag" | 400 | Request Error | Request Errors (400) |
| "context_length_exceeded" | 400 | Request Error | Request Errors (400) |
| "502 Bad Gateway" or "1008 token mismatch" | 502/1008 | Connection | Server and Connection Errors |
| "ECONNREFUSED" or "SSL handshake failed" | - | Connection | Server and Connection Errors |
| Docker: "gateway token mismatch" | 1008 | Docker | Docker and Deployment Troubleshooting |
| "skill.md: parse error" or "skill not found" | - | Configuration | Skills Not Loading and Configuration Errors |

Authentication Errors (401) — API Keys, Tokens, and Gateway

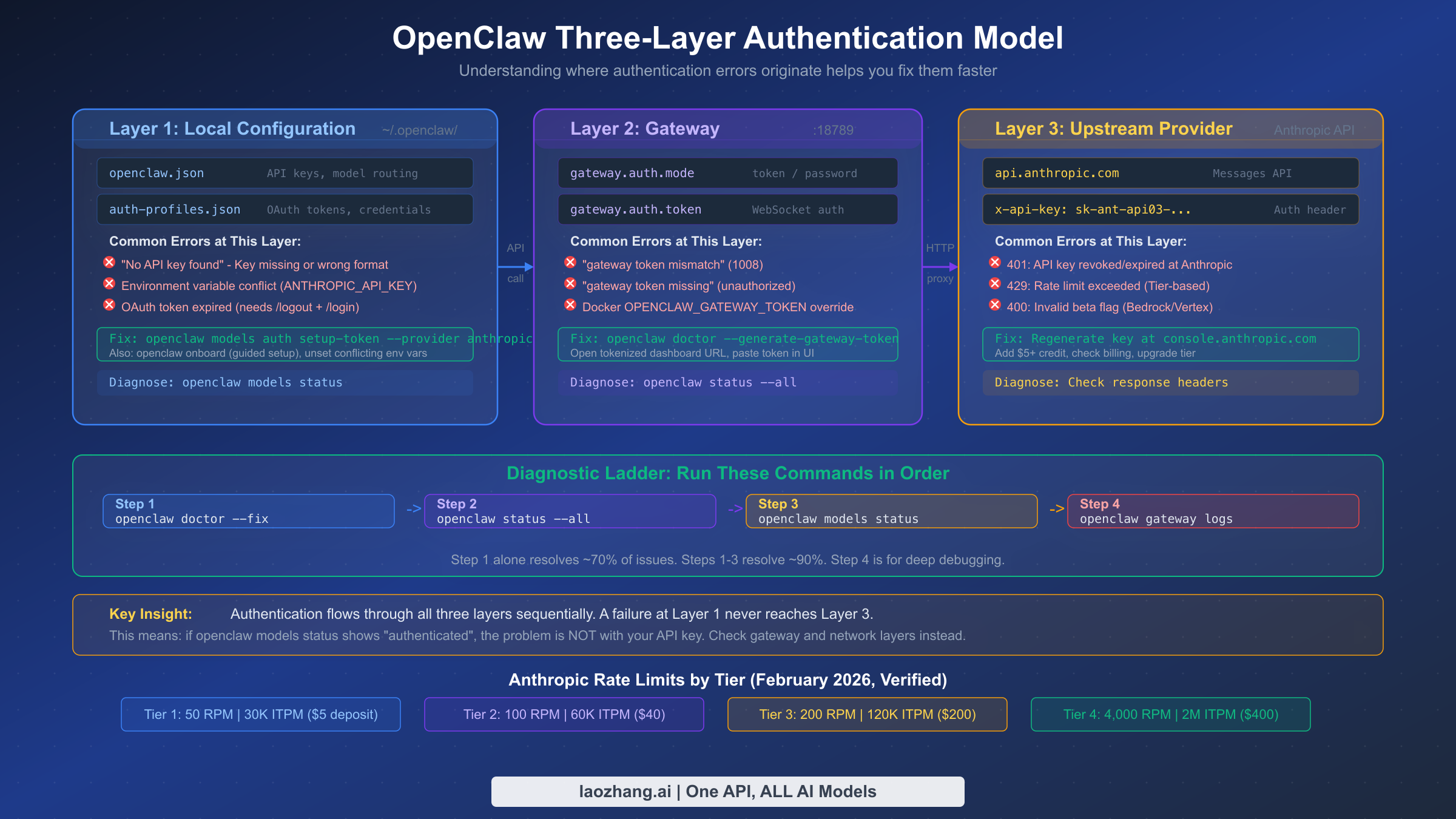

Authentication errors are the most frequently encountered OpenClaw failures, and they're also the most confusing because OpenClaw uses a three-layer authentication architecture where failures can originate at any level. Understanding this architecture is essential for targeted troubleshooting rather than random guessing. The three layers are: your local configuration (~/.openclaw/ directory), the OpenClaw gateway service (running on port 18789 for WebSocket and 18791 for control), and the upstream provider (Anthropic's API at api.anthropic.com). Each layer has its own credential requirements and failure modes.

Layer 1: Local Configuration Errors. The most common authentication failure occurs right at the start—your local OpenClaw configuration either lacks credentials or contains malformed ones. When you see "No API key found for provider 'anthropic'" in your diagnostic output, it means OpenClaw's credential store at ~/.openclaw/openclaw.json contains no Anthropic credentials at all. This happens with fresh installations that skipped the onboarding step, or when creating new agents that don't inherit credentials from the main configuration. The fix is straightforward: run openclaw onboard and follow the prompts to configure Anthropic authentication. The wizard validates your key format and stores it correctly.

Anthropic API keys follow a strict format—they must start with sk-ant-api03- followed by a long alphanumeric string. If your key doesn't match this pattern, it's either not an Anthropic key, has been corrupted during copy-paste (check for trailing spaces or newline characters), or is a deprecated key format from an older Anthropic API version. You can verify your key independently by making a direct API call:

```bash
curl -s https://api.anthropic.com/v1/messages \
  -H "x-api-key: YOUR_KEY_HERE" \
  -H "anthropic-version: 2023-06-01" \
  -H "content-type: application/json" \
  -d '{"model":"claude-sonnet-4-5-20250929","max_tokens":10,"messages":[{"role":"user","content":"hi"}]}' \
  | head -c 200
```

If this returns a valid response, your key works and the problem lies elsewhere in the OpenClaw stack. If it returns {"type":"error","error":{"type":"authentication_error"}}, your key itself is invalid and needs replacement from the Anthropic Console.
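Before spending an API call, a local format check can catch the copy-paste damage described above. The following is a minimal sketch, not part of OpenClaw: the function name `check_anthropic_key_format` is hypothetical, and it only tests the `sk-ant-api03-` prefix and stray whitespace mentioned in this guide. It cannot tell whether Anthropic will actually accept the key.

```python
EXPECTED_PREFIX = "sk-ant-api03-"  # prefix described in this guide


def check_anthropic_key_format(raw_key: str) -> list[str]:
    """Return a list of format problems; an empty list means the format looks plausible."""
    problems = []
    if raw_key != raw_key.strip():
        problems.append("leading or trailing whitespace (possible copy-paste damage)")
    key = raw_key.strip()
    if any(ch.isspace() for ch in key):
        problems.append("whitespace inside the key")
    if not key.startswith(EXPECTED_PREFIX):
        problems.append(f"does not start with {EXPECTED_PREFIX}")
    elif len(key) == len(EXPECTED_PREFIX):
        problems.append("nothing follows the prefix")
    return problems
```

An empty result only means the key is worth testing with the curl call above; a non-empty result points at a problem you can fix without touching the network.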

Layer 2: Gateway Token Problems. The OpenClaw gateway uses an internal token to authenticate WebSocket connections between your local agent and the gateway service. When this token becomes mismatched—typically after a gateway restart, configuration migration, or manual editing—you'll see "1008 token mismatch" errors. The gateway token is automatically generated during initial setup and stored in both your local configuration and the gateway's state. Regenerating it requires running openclaw models auth setup-token, which creates a new token and updates both sides of the connection. For deeper analysis of Anthropic-specific authentication issues, see our dedicated guide to Anthropic API key errors in OpenClaw.

Layer 3: Upstream Provider Failures. Even with correct local configuration and gateway tokens, authentication can fail at the Anthropic API level. The most common upstream failures are: expired or revoked API keys (Anthropic can revoke keys for policy violations or account issues), insufficient account balance (your Anthropic account must have at least $5 in credit purchases to reach Tier 1, which is required for API access as of February 2026, per platform.claude.com), and permission restrictions (keys created with limited scopes cannot access all models or features).

The Cooldown Mechanism. When OpenClaw detects repeated authentication failures against a provider, it activates a cooldown period of 30-60 minutes during which no requests are sent to that provider. The error message "No available auth profile for anthropic (all in cooldown)" indicates this state. Your credentials might be perfectly valid—perhaps a temporary Anthropic outage triggered the cooldown. You can wait for the cooldown to expire naturally, or restart the OpenClaw gateway service to clear it immediately. The cooldown exists to prevent your account from being flagged for excessive failed authentication attempts, which could lead to permanent key revocation.

Authentication Method Comparison. OpenClaw supports three authentication methods for Anthropic, each suited to different deployment scenarios. Direct API keys (the sk-ant-api03- format) are simplest and recommended for individual developers and CI/CD pipelines. OAuth tokens (using Claude subscription credentials) are useful when you want to avoid managing API keys directly but require periodic token refresh. The setup token method (openclaw models auth setup-token) is designed for team deployments where a central administrator provisions access. Choose based on your operational needs—API keys for simplicity, OAuth for subscription-based access, and setup tokens for managed environments.

Rate Limiting (429) — Headers, Tiers, and Prevention

Rate limit errors are the second most common OpenClaw failure in production environments, and they're frequently misunderstood. A 429 response doesn't mean your credentials are invalid—it means you've exceeded the request quota for your current Anthropic tier. The critical detail that most troubleshooting guides miss is that rate limits apply across multiple dimensions simultaneously (requests per minute, input tokens per minute, and output tokens per minute), and hitting any single dimension triggers the 429 response.

Anthropic implements a tier-based rate limiting system where higher tiers unlock significantly larger quotas. Your tier is determined by the total amount of credit you've purchased (not spent). Understanding your current tier and its limits is the first step to diagnosing and preventing rate limit issues. The following table reflects Anthropic's current rate limits as of February 2026 (verified via platform.claude.com/docs/en/api/rate-limits):

| Tier | Credit Purchase | RPM | Input TPM (Opus/Sonnet) | Input TPM (Haiku) | Output TPM (Opus/Sonnet) |
|---|---|---|---|---|---|
| Tier 1 | $5 | 50 | 30,000 | 50,000 | 8,000 |
| Tier 2 | $40 | 100 | 60,000 | 100,000 | 16,000 |
| Tier 3 | $200 | 200 | 120,000 | 200,000 | 32,000 |
| Tier 4 | $400 | 4,000 | 2,000,000 | 4,000,000 | 400,000 |

The jump from Tier 3 to Tier 4 is dramatic: RPM increases 20x, and token limits increase between 12.5x (Opus/Sonnet output) and 20x (Haiku input), with Opus/Sonnet input rising roughly 16.7x. For production deployments, reaching Tier 4 with a $400 credit purchase is often the most cost-effective way to avoid most rate limiting issues.

Reading Rate Limit Headers. Every response from the Anthropic API includes headers that reveal your current rate limit status. These headers are your most valuable diagnostic tool for understanding why 429 errors occur and when they'll resolve. Most guides mention the retry-after header but miss the complete set of anthropic-ratelimit-* headers that provide full visibility into your quota consumption:

| Header | Description | Example |
|---|---|---|
| retry-after | Seconds to wait before retrying | 15 |
| anthropic-ratelimit-requests-limit | Maximum RPM for your tier | 50 |
| anthropic-ratelimit-requests-remaining | Remaining requests this minute | 3 |
| anthropic-ratelimit-requests-reset | Time when request quota resets | 2026-02-09T10:30:00Z |
| anthropic-ratelimit-tokens-limit | Maximum tokens per minute | 30000 |
| anthropic-ratelimit-tokens-remaining | Remaining tokens this minute | 12500 |
| anthropic-ratelimit-tokens-reset | Time when token quota resets | 2026-02-09T10:30:00Z |

When you receive a 429 response, always check anthropic-ratelimit-requests-remaining and anthropic-ratelimit-tokens-remaining to determine which dimension you've exhausted. If requests remaining is 0 but tokens remaining is high, you're sending too many small requests—consider batching. If tokens remaining is 0 but requests remaining is positive, your individual requests are too large—consider reducing context length or splitting conversations.
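The decision rule above can be sketched as a small helper that inspects the headers of a 429 response. This is an illustrative sketch: the function name `diagnose_rate_limit` is hypothetical, and it assumes the headers arrive as a plain mapping of strings using the header names from the table above.

```python
def diagnose_rate_limit(headers: dict[str, str]) -> str:
    """Name the exhausted dimension based on the anthropic-ratelimit-* headers."""
    # Header names are case-insensitive on the wire; normalize before lookup.
    h = {k.lower(): v for k, v in headers.items()}

    def remaining(name: str):
        value = h.get(f"anthropic-ratelimit-{name}-remaining")
        return int(value) if value is not None and value.isdigit() else None

    requests_left = remaining("requests")
    tokens_left = remaining("tokens")
    wait = h.get("retry-after", "unknown")

    if requests_left == 0 and tokens_left == 0:
        cause = "both request and token quotas exhausted"
    elif requests_left == 0:
        cause = "request quota exhausted: too many small requests, consider batching"
    elif tokens_left == 0:
        cause = "token quota exhausted: requests too large, consider trimming context"
    else:
        cause = "no exhausted dimension visible in headers"
    return f"{cause} (retry-after: {wait})"
```

Logging this string alongside each 429 makes it easier to see over time whether batching or context trimming is the more useful fix.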

The FailoverError Misclassification. A particularly tricky issue documented in GitHub Issue #10368 causes rate limit errors to be misreported as context overflow errors. When OpenClaw's failover mechanism activates during a rate limit event, the error message presented to the user may say "context_length_exceeded" rather than "rate_limit_error." If you see context overflow errors that appear suddenly (rather than gradually as conversations grow), check your rate limit headers first—the real cause may be a 429 that was incorrectly classified during failover processing.

For teams that need higher throughput without tier upgrades, distributing requests across multiple API keys or using a relay service like laozhang.ai can spread load across multiple rate limit pools, effectively multiplying your available quota. This approach is especially useful during burst periods when temporary spikes exceed your tier limits. For a comprehensive deep dive into rate limiting strategies, see our complete guide to OpenClaw rate limiting, and for managing costs alongside rate limits, explore optimizing token usage and costs.

Request Errors (400) — Beta Flags and Context Overflow

Request errors with HTTP status 400 indicate that your request was syntactically valid but semantically incorrect—the API understood what you sent but cannot process it. In OpenClaw, the two most common 400 errors are invalid beta flags and context length exceeded, each requiring different diagnosis and resolution strategies.

Invalid Beta Flag Errors. The error message invalid_request_error: Invalid beta flag occurs when your OpenClaw configuration includes a beta feature flag that your provider doesn't support. This is particularly common when using Anthropic Claude through third-party providers like AWS Bedrock or Google Vertex AI, because these providers implement a subset of Anthropic's beta features and may lag behind the direct API in feature availability. For example, a beta flag that works perfectly with the direct Anthropic API (api.anthropic.com) may not be recognized by the Bedrock endpoint, producing a confusing 400 error.

The diagnostic approach is to identify which beta flags your configuration includes and verify they're supported by your specific provider. Check your OpenClaw configuration at ~/.openclaw/openclaw.json for any beta fields in your model configuration. Common beta flags include max-tokens-3-5-sonnet-2024-07-15 (for extended output on Sonnet models), prompt-caching-2024-07-31 (for prompt caching), and token-counting-2024-11-01 (for token counting). The fix is either removing unsupported beta flags from your configuration or switching to a provider that supports them. For step-by-step instructions, see our detailed invalid beta flag troubleshooting guide.

Context Length Exceeded. The context_length_exceeded error fires when your total input (system prompt + conversation history + current message) exceeds the model's context window. Claude models currently support 200K tokens standard context, with 1M tokens available in beta (verified via platform.claude.com, February 2026). In OpenClaw, this error most commonly occurs in long-running conversations where message history accumulates beyond the model's capacity.

OpenClaw provides a built-in history management mechanism through the maxHistoryMessages configuration parameter. By default, OpenClaw retains the last 100 messages in conversation context. If your conversations regularly exceed context limits, reducing this value is the most direct fix. Setting maxHistoryMessages to 50 or even 30 significantly reduces context consumption while preserving enough conversation history for coherent interactions.

For programmatic context management, implement a token counting strategy that monitors accumulated tokens and summarizes or truncates older messages before they push the total over the limit. This is particularly important for production applications where conversations may persist for hours. The key insight is that context overflow should never be a surprise—implement monitoring that warns you at 80% context utilization so you can take action before the hard failure. See managing context length in OpenClaw for implementation patterns.
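One way to act on the 80% threshold is sketched below. It rests on loud assumptions: `estimate_tokens` uses a crude four-characters-per-token heuristic rather than a real tokenizer, `trim_history` is a hypothetical helper (not an OpenClaw API), and messages are assumed to be dicts with `role` and `content` keys. The sketch truncates the oldest messages; summarizing them instead would need a model call and is not shown.

```python
CONTEXT_LIMIT = 200_000  # standard context window cited above
WARN_RATIO = 0.8         # act at 80% utilization, as suggested above


def estimate_tokens(text: str) -> int:
    # Crude assumption: about 4 characters per token. Swap in a real counter.
    return max(1, len(text) // 4)


def trim_history(messages: list[dict], limit: int = CONTEXT_LIMIT) -> tuple[list[dict], bool]:
    """Drop the oldest non-system messages until the estimate fits the warning budget.

    Returns the (possibly shortened) history and whether anything was dropped.
    System messages are kept and placed first in the result.
    """
    budget = int(limit * WARN_RATIO)
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    total = sum(estimate_tokens(m["content"]) for m in system + rest)
    trimmed = False
    # Always keep at least the most recent message.
    while total > budget and len(rest) > 1:
        dropped = rest.pop(0)  # oldest first
        total -= estimate_tokens(dropped["content"])
        trimmed = True
    return system + rest, trimmed
```

The boolean return value is the hook for monitoring: emit a warning whenever it is true so that trimming never happens unnoticed.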

Be aware of the FailoverError misclassification mentioned in the Rate Limiting section—if you see context_length_exceeded errors that appear suddenly rather than building up gradually over a conversation, check your rate limit status first. GitHub Issue #10368 documents cases where rate limit events are incorrectly surfaced as context overflow during failover processing.

Server and Connection Errors (502, 1008, SSL)

Server and connection errors indicate infrastructure-level problems between your OpenClaw agent, the gateway, and upstream providers. Unlike authentication or request errors, these failures often resolve themselves as transient conditions clear, but persistent occurrences require systematic diagnosis of the network path.

502 Bad Gateway. A 502 error means the OpenClaw gateway received an invalid response from the upstream provider. This typically indicates that Anthropic's API (or whichever provider you're using) is experiencing an outage or degraded performance. Check the Anthropic status page (status.anthropic.com) to confirm whether there's a known incident. If the status page shows all systems operational, the 502 may be caused by a network issue between your gateway and the API endpoint—firewalls, DNS resolution failures, or proxy misconfigurations can all produce 502 errors.

The diagnostic approach for persistent 502 errors is to test the network path directly. Use curl -v https://api.anthropic.com/v1/messages to verify that your server can reach Anthropic's API endpoint. Look for SSL handshake completion, correct DNS resolution, and successful TCP connection. If the direct connection works but OpenClaw still shows 502, the issue is likely in the gateway's proxy configuration or connection pooling settings.

1008 WebSocket Token Mismatch. The WebSocket close code 1008 with "token mismatch" is specific to the OpenClaw gateway's internal authentication. The gateway uses a shared secret token to authenticate WebSocket connections from your local agent. When this token becomes desynchronized—typically after a gateway restart without proper token migration, or after manual editing of configuration files—every WebSocket connection attempt fails immediately with 1008.

The resolution requires regenerating the gateway token on both sides of the connection. Run openclaw models auth setup-token to generate a new token and update the local configuration. If the gateway is running on a remote server, you'll need to copy the new token to the gateway's configuration as well. After regeneration, restart both the local agent and the gateway service to ensure both sides use the new token.

SSL Certificate Errors. SSL handshake failures in OpenClaw fall into two categories: certificate chain problems (the gateway or upstream API presents a certificate your system doesn't trust) and certificate expiration (a certificate in the chain has expired). For self-signed certificates in development environments, you can configure OpenClaw to accept them by setting NODE_TLS_REJECT_UNAUTHORIZED=0 in your environment—but never use this in production as it disables all certificate validation.

For corporate environments with custom certificate authorities, add your CA certificate to the Node.js trust store by setting the NODE_EXTRA_CA_CERTS environment variable to point to your CA bundle file. This is the correct production approach for environments with TLS inspection proxies or internal PKI infrastructure.

Docker and Deployment Troubleshooting

Docker deployments introduce a unique category of OpenClaw errors that don't exist in bare-metal installations. The most insidious is the environment variable override problem documented in GitHub Issue #9028, which affected 12+ users before being identified. Understanding Docker-specific failure modes is critical because they can make correctly configured installations appear broken.

The OPENCLAW_GATEWAY_TOKEN Override Problem. When running OpenClaw in Docker, environment variables set in your docker-compose.yml or docker run command silently override values from the mounted configuration files. This means that even if your ~/.openclaw/openclaw.json contains the correct gateway token, a stale OPENCLAW_GATEWAY_TOKEN environment variable from a previous deployment will take precedence. The symptom is persistent "1008 token mismatch" errors that resist all normal troubleshooting because the configuration files look correct.

The diagnostic approach is to check for environment variable overrides inside the running container. Execute docker exec <container_name> env | grep OPENCLAW to see all OpenClaw-related environment variables. If OPENCLAW_GATEWAY_TOKEN appears in the output and differs from the value in your mounted configuration file, you've found the problem. The fix is either removing the environment variable from your Docker configuration or updating it to match the current gateway token.
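The comparison can be scripted once you have the output of `docker exec <container_name> env` and the token from your mounted configuration file. The sketch below is illustrative only: `find_token_override` is a hypothetical helper, and it takes the config token as an argument because this guide does not state which key in openclaw.json holds it.

```python
from typing import Optional


def find_token_override(env_output: str, config_token: str) -> Optional[str]:
    """Return a warning if OPENCLAW_GATEWAY_TOKEN in the container differs from the config."""
    for line in env_output.splitlines():
        name, sep, value = line.partition("=")
        if sep and name.strip() == "OPENCLAW_GATEWAY_TOKEN":
            if value.strip() != config_token:
                return ("OPENCLAW_GATEWAY_TOKEN is set in the container "
                        "and differs from the config file")
            return None  # set, but consistent with the config
    return None  # not set, so the config file value is used
```

In practice `env_output` could come from `subprocess.run(["docker", "exec", name, "env"], capture_output=True, text=True).stdout`, where `name` is your container name.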

Docker Network Configuration. OpenClaw's gateway needs to reach both your local agent and the upstream provider API. In Docker, the default bridge network can prevent the gateway from reaching services on the host or in other containers. Use Docker's host network mode for simplest configuration, or explicitly configure the bridge network with proper DNS resolution. For docker-compose deployments, ensure all OpenClaw services share the same network:

```yaml
version: '3.8'
services:
  openclaw-gateway:
    image: openclaw/gateway:latest
    ports:
      - "18789:18789"
      - "18791:18791"
    volumes:
      - ./openclaw-config:/root/.openclaw
    environment:
      - NODE_ENV=production
      # Do NOT set OPENCLAW_GATEWAY_TOKEN here unless intentional
    networks:
      - openclaw-net
  openclaw-agent:
    image: openclaw/agent:latest
    depends_on:
      - openclaw-gateway
    volumes:
      - ./openclaw-config:/root/.openclaw
    networks:
      - openclaw-net
networks:
  openclaw-net:
    driver: bridge
```

Volume Mount Permissions. Configuration files mounted from the host into Docker containers may have incorrect permissions inside the container, preventing OpenClaw from reading or writing its configuration. Ensure the mounted files are readable and writable by the container's user (root in most OpenClaw Docker images). Use docker exec <container> ls -la /root/.openclaw/ to verify permissions, and adjust them with chmod on the host if needed.

For comprehensive Docker deployment guidance including initial setup, see our OpenClaw installation and deployment guide.

Skills Not Loading and Configuration Errors

OpenClaw's skills system extends the agent's capabilities through markdown-based configuration files. When skills fail to load, the agent operates without its extended capabilities—custom tools don't appear, specialized behaviors don't activate, and the agent may seem "dumb" compared to its expected performance. Skills failures are silent by default, making them harder to detect than explicit error messages.

Skill File Syntax Errors. OpenClaw skills are defined in .md files with a specific frontmatter format. The most common syntax errors are: missing or malformed YAML frontmatter (the --- delimiters must be on their own lines), invalid field types (using a string where a list is expected), and encoding issues (non-UTF-8 characters in skill descriptions). The diagnostic command openclaw skills list shows all detected skills and their load status. Skills that failed to parse appear with an error indicator and a brief explanation of the syntax problem.

When a skill file fails to parse, OpenClaw logs the specific parsing error but continues loading other skills. This means a single broken skill file doesn't prevent other skills from loading—but it also means you might not notice the failure unless you explicitly check. The fix is to validate your skill file syntax against the OpenClaw skill schema. Every skill file must include at minimum a name field, a description field, and the skill body content. Optional fields like dependencies, permissions, and triggers follow specific format requirements documented in the OpenClaw skills reference.
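A quick pre-flight check for the most common frontmatter mistakes can be sketched with the standard library alone. This is a simplified illustration, not the OpenClaw skill schema: `check_skill_file` is a hypothetical helper, it assumes `name` and `description` live in the frontmatter, and it recognizes only top-level `key: value` lines rather than full YAML.

```python
REQUIRED_FIELDS = ("name", "description")  # minimum fields named in this guide


def check_skill_file(text: str) -> list[str]:
    """Return a list of problems found in a skill file's frontmatter and body."""
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return ["frontmatter must open with --- on its own line"]
    try:
        end = next(i for i, line in enumerate(lines[1:], start=1)
                   if line.strip() == "---")
    except StopIteration:
        return ["frontmatter is missing its closing --- line"]

    # Simplified: only top-level `key: value` lines are recognized, not full YAML.
    keys = set()
    for line in lines[1:end]:
        if line[:1].isspace() or ":" not in line:
            continue  # skip nested, continuation, or blank lines
        keys.add(line.partition(":")[0].strip())

    problems = [f"missing required field: {f}" for f in REQUIRED_FIELDS if f not in keys]
    if not "\n".join(lines[end + 1:]).strip():
        problems.append("skill body is empty")
    return problems
```

Running this over every `.md` file in your skills directories before starting the agent surfaces the failures that would otherwise stay silent; openclaw skills list remains the authoritative check.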

Directory Structure Requirements. OpenClaw looks for skills in specific directories, and misplacing skill files is a common cause of "skill not found" errors. The standard skill directories are: ~/.openclaw/skills/ for user-level skills (available to all agents), and .openclaw/skills/ in the project directory for project-specific skills. Skills placed outside these directories won't be discovered by OpenClaw's skill loader.

Dependency Resolution Failures. Some skills declare dependencies on external tools or other skills. When a dependency cannot be satisfied—for instance, a skill requires python3 but the environment only has python—the skill is silently skipped. Check your skill's dependencies field against the available tools in your environment. Running openclaw doctor --fix can identify and sometimes resolve dependency issues automatically by installing missing tools or creating necessary symlinks.

Production Error Handling — Retry Logic and Multi-Provider Failover

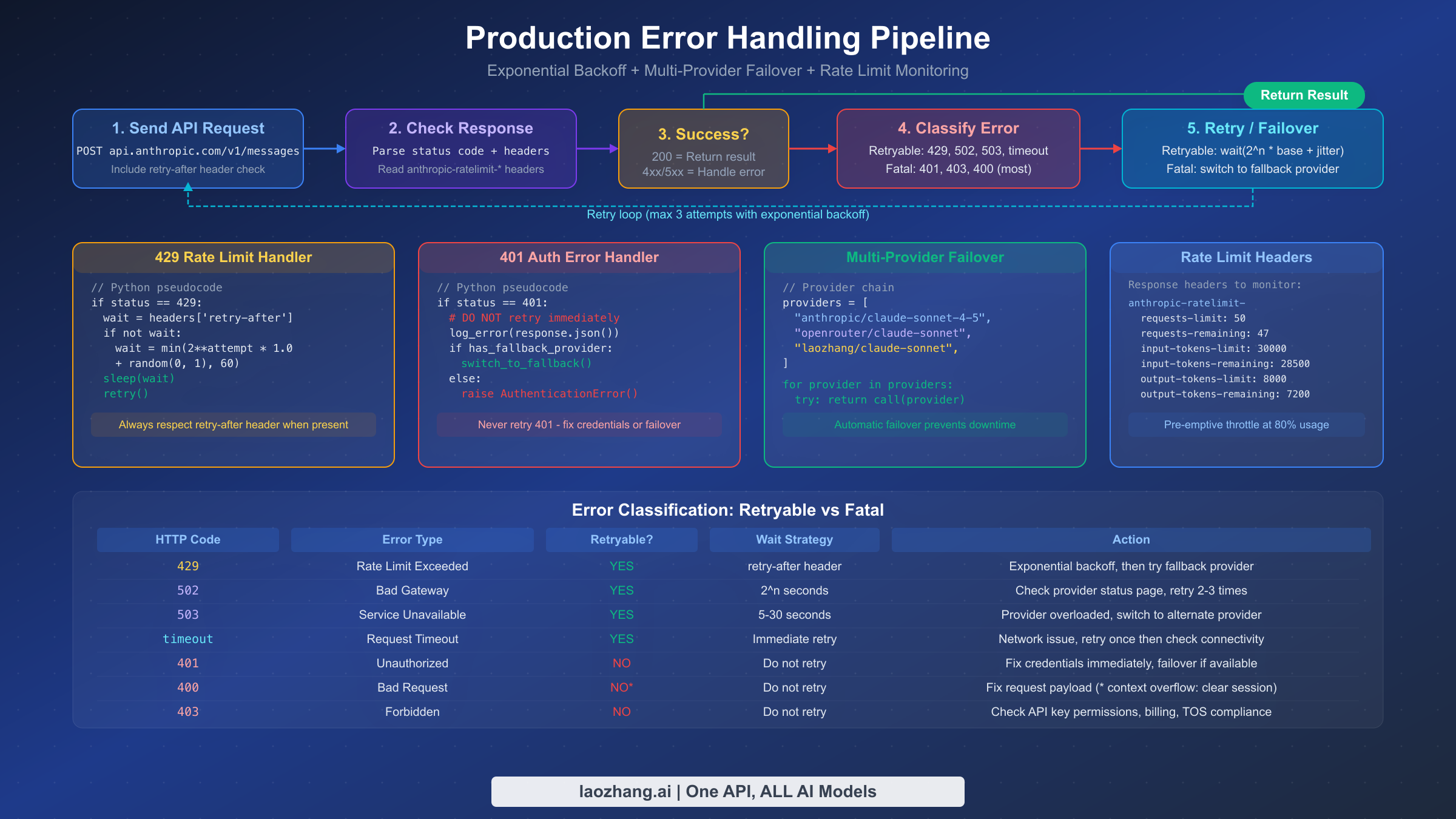

Moving from development troubleshooting to production reliability requires implementing systematic error handling that goes beyond fixing individual errors. Production applications need to classify errors, apply appropriate retry strategies, failover to alternative providers when primary providers fail, and maintain visibility into error patterns through monitoring. The following implementations cover Python and JavaScript—the two most common languages for OpenClaw integrations.

Error Classification: Retryable vs. Fatal. The first decision in any error handling pipeline is whether to retry or fail immediately. Retrying a fatal error wastes time and quota; failing on a retryable error reduces reliability. This classification table guides the decision:

| Error Code | Error Type | Retryable? | Strategy |
|---|---|---|---|
| 429 | Rate Limit Exceeded | Yes | Exponential backoff with retry-after header |
| 529 | Overloaded | Yes | Exponential backoff, 30-60s initial delay |
| 502 | Bad Gateway | Yes | Linear backoff, 3 attempts max |
| 408 | Request Timeout | Yes | Immediate retry, then exponential |
| 401 | Authentication Error | No | Fix credentials, do not retry |
| 400 | Bad Request | No | Fix request payload, do not retry |
| 403 | Permission Denied | No | Fix permissions, do not retry |
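The table translates directly into a lookup that a request pipeline can consult before deciding what to do. The sketch below is one possible encoding; the names `Action`, `STRATEGY`, and `classify` are hypothetical, and treating unlisted status codes as fatal is an assumption of this sketch rather than something the table specifies.

```python
from enum import Enum


class Action(Enum):
    RETRY = "retry"
    FAIL = "fail"


# Mirrors the classification table above.
STRATEGY = {
    429: (Action.RETRY, "exponential backoff, honor retry-after"),
    529: (Action.RETRY, "exponential backoff, 30-60s initial delay"),
    502: (Action.RETRY, "linear backoff, 3 attempts max"),
    408: (Action.RETRY, "immediate retry, then exponential"),
    401: (Action.FAIL, "fix credentials"),
    400: (Action.FAIL, "fix request payload"),
    403: (Action.FAIL, "fix permissions"),
}


def classify(status: int) -> tuple[Action, str]:
    # Assumption: codes not listed in the table fail fast instead of retrying.
    return STRATEGY.get(status, (Action.FAIL, "unclassified, fail fast"))
```

Keeping the strategy text next to the decision makes log lines self-explanatory when an error is escalated to a human.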

Python Production Retry Implementation:

```python
import random
import time

import httpx


class OpenClawRetryHandler:
    def __init__(self, max_retries: int = 3, base_delay: float = 1.0):
        self.max_retries = max_retries
        self.base_delay = base_delay
        self.retryable_codes = {429, 529, 502, 408}

    def execute_with_retry(self, request_fn, **kwargs):
        # request_fn must raise httpx.HTTPStatusError on failure,
        # e.g. by calling response.raise_for_status() before returning.
        for attempt in range(self.max_retries + 1):
            try:
                return request_fn(**kwargs)
            except httpx.HTTPStatusError as e:
                status = e.response.status_code
                # Fatal error or retries exhausted: re-raise without sleeping
                if status not in self.retryable_codes or attempt == self.max_retries:
                    raise
                delay = self._calculate_delay(e.response, attempt)
                print(f"Attempt {attempt + 1} failed ({status}), "
                      f"retrying in {delay:.1f}s...")
                time.sleep(delay)

    def _calculate_delay(self, response, attempt: int) -> float:
        # Prefer retry-after header when available
        retry_after = response.headers.get("retry-after")
        if retry_after:
            try:
                return float(retry_after)
            except ValueError:
                pass  # HTTP-date form; fall back to backoff
        # Exponential backoff with jitter
        delay = self.base_delay * (2 ** attempt)
        jitter = random.uniform(0, delay * 0.1)
        return min(delay + jitter, 60.0)  # Cap at 60 seconds
```

JavaScript Production Retry Implementation:

```javascript
class OpenClawRetryHandler {
  constructor({ maxRetries = 3, baseDelay = 1000 } = {}) {
    this.maxRetries = maxRetries;
    this.baseDelay = baseDelay; // milliseconds
    this.retryableCodes = new Set([429, 529, 502, 408]);
  }

  async executeWithRetry(requestFn) {
    let lastError;
    for (let attempt = 0; attempt <= this.maxRetries; attempt++) {
      try {
        return await requestFn();
      } catch (error) {
        lastError = error;
        const status = error.status || error.response?.status;
        // Fatal error: rethrow immediately
        if (!this.retryableCodes.has(status)) throw error;
        // Retries exhausted: skip the final sleep
        if (attempt === this.maxRetries) break;
        const delay = this._calculateDelay(error, attempt);
        console.log(`Attempt ${attempt + 1} failed (${status}), ` +
          `retrying in ${(delay / 1000).toFixed(1)}s...`);
        await new Promise(r => setTimeout(r, delay));
      }
    }
    throw lastError;
  }

  _calculateDelay(error, attempt) {
    // Prefer retry-after (seconds) when present and numeric
    const retryAfter = parseFloat(error.response?.headers?.get?.('retry-after'));
    if (Number.isFinite(retryAfter)) return retryAfter * 1000;
    // Exponential backoff with jitter, capped at 60 seconds
    const delay = this.baseDelay * Math.pow(2, attempt);
    const jitter = Math.random() * delay * 0.1;
    return Math.min(delay + jitter, 60000);
  }
}
```

Multi-Provider Failover. For maximum reliability, configure OpenClaw with multiple providers so that when one fails, requests automatically route to an alternative. The failover pattern extends the retry handler to cycle through providers before giving up entirely. This is where services like laozhang.ai provide value—a unified API interface that routes to multiple model providers (Anthropic, OpenAI, Google) through a single endpoint, simplifying failover configuration significantly.

```python
class MultiProviderFailover:
    def __init__(self, providers: list):
        self.providers = providers  # Ordered by preference
        self.retry_handler = OpenClawRetryHandler(max_retries=2)

    def execute(self, request_fn):
        errors = []
        for provider in self.providers:
            try:
                return self.retry_handler.execute_with_retry(
                    request_fn, provider=provider
                )
            except Exception as e:
                errors.append((provider, e))
                print(f"Provider {provider} failed, "
                      f"trying next...")
        raise Exception(
            f"All providers failed: "
            f"{[(p, str(e)) for p, e in errors]}"
        )
```

This pattern ensures that a temporary Anthropic outage, rate limit event, or authentication issue on one provider doesn't bring down your entire application. Combined with the retry logic above, it provides three levels of resilience: retry within a provider, failover between providers, and a single aggregated error listing every provider's failure when all of them are unavailable, which your application can catch to degrade gracefully.

Frequently Asked Questions

How do I fix "OpenClaw API key not working"? Start with openclaw status --all to identify the specific failure. If the output shows "No API key found," run openclaw onboard to configure your Anthropic credentials. If it shows "invalid bearer token," verify your key starts with sk-ant-api03- and hasn't expired. If it shows "all in cooldown," restart the gateway or wait 30-60 minutes for the cooldown to expire. The automated repair command openclaw doctor --fix resolves the majority of configuration-related key issues.

Why does OpenClaw say "unauthorized" when my API key is correct? OpenClaw's three-layer authentication means "unauthorized" can originate from three different sources. Your key may be valid for the Anthropic API but the gateway token may be mismatched (producing a 1008 error), or an environment variable like ANTHROPIC_API_KEY may be overriding your configuration with an old value. Check all three layers: local config (cat ~/.openclaw/openclaw.json | grep -i key), gateway token (openclaw models auth setup-token --check), and direct API test (the curl command in the Authentication section above).

How do I reset the OpenClaw gateway token? Run openclaw models auth setup-token to generate a new gateway token. This updates both the local configuration and the gateway state. If your gateway runs on a remote server, you'll need to copy the new token to the remote configuration file and restart the gateway service. After regeneration, restart both the local agent and gateway to ensure both sides use the updated token.

What causes OpenClaw rate limiting and how do I prevent it? Rate limiting occurs when your requests exceed your Anthropic tier's quota across any dimension—requests per minute (RPM), input tokens per minute (ITPM), or output tokens per minute (OTPM). The most effective prevention is upgrading your tier by purchasing more credits (Tier 4 at $400 offers 4,000 RPM). For immediate relief, implement exponential backoff that respects the retry-after header, reduce request frequency, or distribute load across multiple API keys.

How do I fix OpenClaw context_length_exceeded? Reduce your conversation history by setting a lower maxHistoryMessages value in your OpenClaw configuration (default is 100, try 30-50). For programmatic control, implement token counting that summarizes older messages before the total exceeds the model's 200K token context window. Note: if this error appears suddenly rather than building up over a conversation, check your rate limit status—GitHub Issue #10368 documents cases where rate limits are misclassified as context overflow during failover.

Why are my OpenClaw skills not loading? Run openclaw skills list to check skill load status. Common causes are: YAML frontmatter syntax errors (missing --- delimiters), skill files placed outside the recognized directories (~/.openclaw/skills/ or .openclaw/skills/), and unmet dependencies declared in the skill's dependencies field. The openclaw doctor --fix command can identify and sometimes auto-resolve dependency issues.

How do I fix OpenClaw Docker "gateway token mismatch"? This is almost always caused by the OPENCLAW_GATEWAY_TOKEN environment variable in your Docker configuration overriding the value in your mounted config files. Run docker exec <container> env | grep OPENCLAW to check for environment variable overrides. Either remove the environment variable from your docker-compose.yml or update it to match the current gateway token in your configuration file.

Is there a way to test all OpenClaw connections at once? Yes—openclaw status --all tests every configured provider, gateway connection, and authentication state in a single command. For a focused check on model access, use openclaw models status which verifies credentials for each provider in real time. Both commands produce credential-safe output that can be shared for debugging without exposing sensitive values.