Choosing between Seedance 2.0, Veo 3.1, and Sora 2 comes down to what matters most for your specific workflow. As of February 2026, Seedance 2.0 from ByteDance leads in creative control with its unique quad-modal input system and native 2K resolution. Veo 3.1 from Google DeepMind delivers the only true 4K output at 3840×2160 with cinema-grade visual quality. Sora 2 from OpenAI produces the most realistic physics simulation in the industry, where objects respond to gravity, momentum, and collisions exactly as they would in the real world. On pricing, Sora 2 API starts at $0.10 per second for 720p, Veo 3.1 ranges from $0.10 to $0.75 per second depending on the platform, and Seedance 2.0 pricing is still being finalized ahead of its public release.

TL;DR

The AI video generation landscape in early 2026 is defined by three distinct approaches from three of the world's largest technology companies. Rather than declaring a single winner, the smart approach is understanding which model excels in which dimension — because each one was built with fundamentally different priorities. Seedance 2.0 was designed for maximum creative control, giving you four different input types and multi-scene narrative capabilities that neither competitor can match. Veo 3.1 was built for visual excellence, pushing output resolution to a native 4K that makes it the clear choice for professional cinema and advertising work. Sora 2 was engineered for physical realism, producing videos where gravity, momentum, fluid dynamics, and light refraction behave exactly as they would in the real world. The following table summarizes which model wins in each major category so you can quickly identify which one aligns with your priorities.

| Category | Winner | Why |
|---|---|---|
| Resolution | Veo 3.1 | Only model with native 4K (3840×2160) |
| Physics Realism | Sora 2 | Industry-leading gravity, collisions, fluid simulation |
| Creative Control | Seedance 2.0 | Quad-modal input (text + image + video + audio) |
| Longest Duration | Seedance 2.0 | Up to 15 seconds per clip |
| Lowest Cost | Sora 2 | $0.10/sec standard API (720p) |
| Audio Quality | Veo 3.1 | Native dialogue + sound effects in sync |
| Generation Speed | Seedance 2.0 | Under 60 seconds for a 5-second clip |
| API Documentation | Sora 2 | Most comprehensive developer docs and SDK |

Full Technical Specs Comparison

Understanding the technical specifications of each model is essential before making any commitment, but raw numbers only tell part of the story. The real question is how these specs translate into practical capabilities for your video production needs. Many comparison articles simply list specifications without explaining what they mean in practice — knowing that a model outputs at "2K resolution" or "24 fps" does not tell you whether the output will actually work for your distribution channel, your audience expectations, or your production pipeline. All the data in this section has been verified against official sources and third-party benchmarks as of February 2026, so you can trust these numbers when planning your budget and workflow. Where specifications differ between sources, we have used the data verified through direct page inspection of official pricing and documentation pages.

| Specification | Seedance 2.0 | Veo 3.1 | Sora 2 |
|---|---|---|---|
| Max Resolution | 2K (native) | 4K (3840×2160) | ~1080p (1792×1024) |
| Duration Range | 4–15 seconds | 4–8 seconds | Fixed 4/8/12 seconds |
| Frame Rate | 24 fps | 24 fps (cinema) | 24–30 fps |
| Native Audio | Yes (co-generation) | Yes (dialogue + SFX) | Yes |
| Audio Reference Input | Yes (unique) | No | No |
| Video Reference Input | Up to 3 clips | Scene extension | No |
| Image Input | Up to 9 images | 1–2 images | 1 image |
| Vertical Video | Yes | Yes (native 9:16) | Yes |
| Multi-Scene Narrative | Yes | Limited | No |
| Physics Simulation | Excellent | Good | Industry-leading |
| Character Consistency | Good | Good | Very good |
| Generation Speed | <60s for 5s clip | 60–90s for 8s clip | Variable |

Resolution and Visual Fidelity

Veo 3.1 dominates the resolution category with its native 4K output at 3840×2160 pixels, making it the only model currently capable of producing video that can be used directly in professional broadcast and cinema workflows without upscaling. This is not merely a marketing number: both Google's Vertex AI platform and third-party providers like fal.ai report true 4K rendering rather than upscaled 1080p content. The practical implication is significant: if you are producing content for large-screen displays, digital signage, or any distribution channel where pixel density matters, Veo 3.1 is the only option that delivers without requiring post-processing upscaling. Seedance 2.0 outputs at 2K resolution, which sits comfortably above standard HD and is perfectly suitable for social media, web content, and most digital distribution channels. Sora 2 maxes out at approximately 1080p through its highest-tier sora-2-pro model at 1792×1024 resolution, which is adequate for most digital platforms but may feel limiting for projects requiring large-screen projection or print-quality frame extractions.

Input Flexibility and Creative Control

The most significant technical differentiator in this comparison is Seedance 2.0's quad-modal input system, which ByteDance calls the "@reference system." This allows creators to feed the model four distinct types of input simultaneously: text prompts, reference images (up to nine), reference video clips (up to three), and even audio references. No other model in this comparison offers this breadth of creative guidance in a single generation request. In practice, this means you can show Seedance 2.0 a reference video for camera movement, upload character images for visual consistency, provide an audio track for mood matching, and write a detailed text prompt describing the scene — all combined into one generation. The result is a level of creative control that is simply not possible with the other two models. Veo 3.1 supports text and image inputs with its "Ingredients to Video" feature and offers scene extension capabilities, while Sora 2 accepts text and a single reference image, supplemented by its Cameo feature for character consistency and Storyboard tool for multi-shot planning.

Audio Generation Capabilities

All three models now support native audio generation, which marks a major evolution from even six months ago when audio was typically a separate post-production step. However, the implementations differ significantly in both approach and quality. Veo 3.1 produces the most sophisticated audio output with synchronized dialogue and contextual sound effects — characters can speak with lip-synced audio that matches their on-screen movements, and environmental sounds respond naturally to the visual content. This makes it particularly valuable for marketing and narrative content where dialogue is essential. Seedance 2.0 takes a unique approach with audio-visual co-generation, where the audio and visual tracks are generated simultaneously as an integrated whole rather than audio being added as a post-processing layer. It also uniquely accepts audio references to guide the sound design, allowing creators to influence the mood and style of generated audio by providing sample tracks. Sora 2 generates audio that complements its visual content effectively, though early assessments suggest its dialogue naturalness sits slightly behind Veo 3.1's in terms of speech clarity and lip synchronization accuracy.

What Each Model Actually Costs in 2026

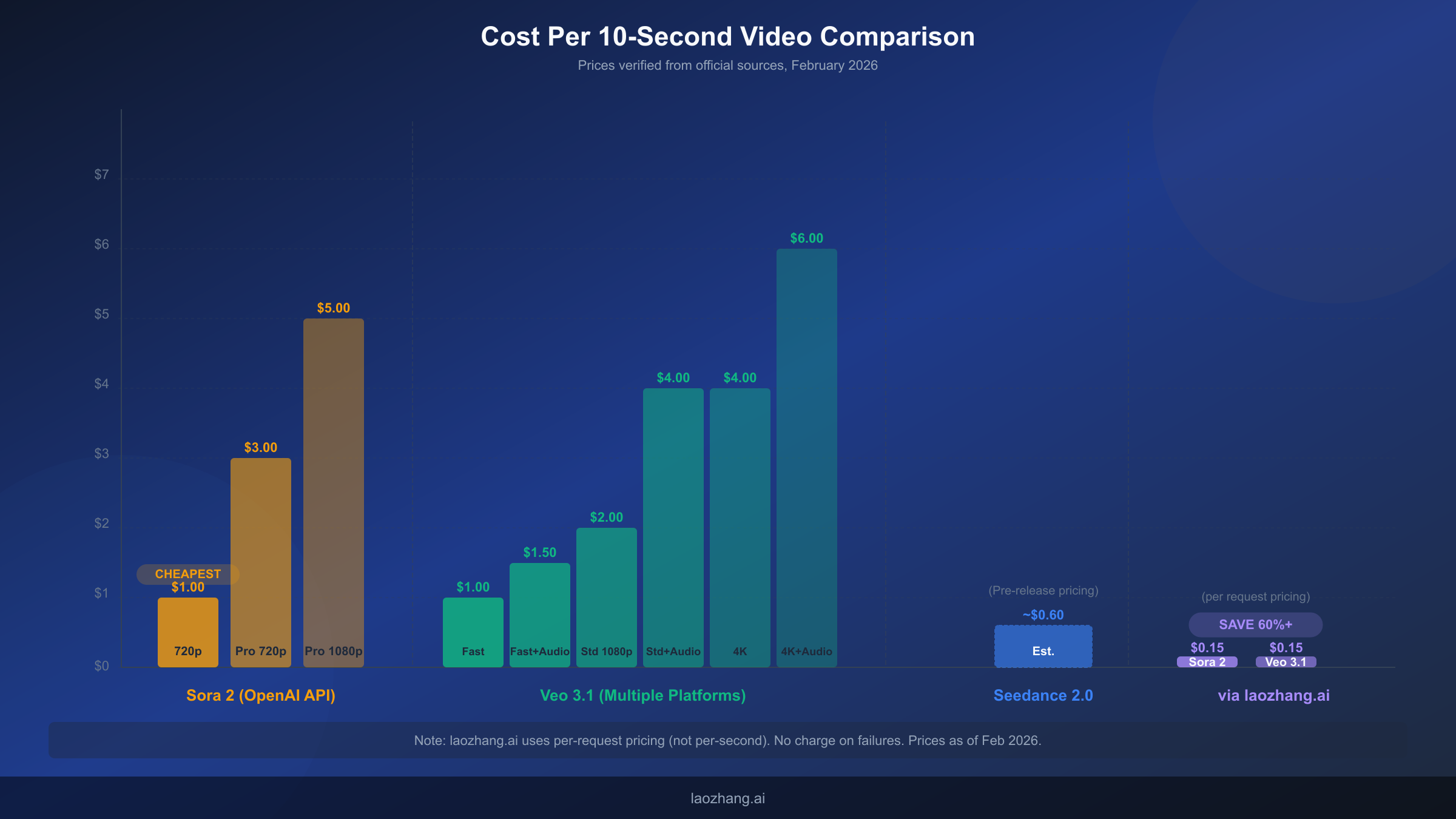

Pricing is where most comparison articles fall short — they either show incomplete data, fail to normalize costs into comparable units, or rely on outdated information that no longer reflects current market rates. The following pricing data has been verified directly from official sources, including real-time inspection of the OpenAI API pricing page (verified February 10, 2026). All costs have been normalized to a standard 10-second video to enable direct cross-model comparison, since each platform uses different billing structures including per-second rates, credit systems, and subscription tiers.

Sora 2 Pricing (OpenAI Official API)

OpenAI offers the most transparent pricing structure of the three models, with straightforward per-second billing through their API that makes cost calculation simple and predictable. The sora-2 standard model costs $0.10 per second at 720p resolution (1280×720), which translates to exactly $1.00 for a 10-second clip. That matches the cheapest Veo 3.1 rate quoted below (fal.ai fast mode without audio) and makes it the most affordable first-party option for basic video generation. The sora-2-pro model offers higher quality at $0.30 per second for 720p and $0.50 per second for approximately 1080p resolution (1792×1024), bringing the cost of a high-quality 10-second clip to $3.00 or $5.00 respectively. For users who prefer subscription-based access over API billing, ChatGPT Plus at $20 per month includes 1,000 video generation credits with a maximum resolution of 720p and 5 requests per minute, while ChatGPT Pro at $200 per month provides 10,000 credits with unlimited relaxed-mode generations and 50 requests per minute. The API route offers more flexibility and often better value for production workflows, since you pay only for what you actually generate rather than committing to a monthly subscription that may go underutilized.

Veo 3.1 Pricing (Multiple Platforms)

Veo 3.1 pricing is more fragmented than Sora 2 because Google distributes it through multiple channels, each with its own pricing structure and quality tiers. Through Google AI Pro subscriptions at $19.99 per month with approximately 1,000 credits, the effective per-second cost works out to roughly $0.16 for standard generation. The premium Google AI Ultra tier at $249.99 per month provides 12,500+ credits for teams with heavy generation needs. Through Google's enterprise Vertex AI platform, quality-tier generation costs between $0.40 and $0.75 per second for 1080p to 4K output, while the fast tier runs at approximately $0.15 per second for 720p-1080p resolution. Third-party provider fal.ai offers additional pricing flexibility: standard 1080p generation at $0.20 per second without audio or $0.40 per second with audio, 4K output at $0.40 per second without audio or $0.60 per second with audio, and a fast mode at $0.10 per second without audio or $0.15 per second with audio. The bottom line is that a 10-second Veo 3.1 video can cost anywhere from $1.00 at the fastest and lowest quality setting to $7.50 for premium 4K with audio through Vertex AI, depending entirely on your quality requirements and chosen access platform.

Seedance 2.0 and Cost-Effective API Alternatives

Seedance 2.0 pricing remains officially unannounced as of February 2026, with the model still in a pre-release phase available primarily through ByteDance's Jimeng AI platform. Third-party estimates from benchmark providers suggest approximately $0.60 per 10-second video at 2K resolution, which, if confirmed, would undercut both Sora 2 standard ($1.00) and Veo 3.1's fast tier ($1.00 to $1.50) for a clip of the same length. For production teams that need access to both Sora 2 and Veo 3.1 without the complexity of managing multiple API accounts and billing systems, aggregated API providers offer a compelling cost-effective alternative. Services like laozhang.ai provide access to both Sora 2 and Veo 3.1 through a single unified API endpoint, with Sora 2 available at $0.15 per request and Veo 3.1 fast mode at $0.15 per request. The critical advantage of these aggregated platforms is their per-request pricing with a no-charge-on-failures policy: you do not pay for video generations that fail, which can represent meaningful savings when iterating on prompts or running batch operations at scale.

| Model | Tier | Cost per 10s Video | Source |
|---|---|---|---|
| Sora 2 | Standard 720p | $1.00 | openai.com/api/pricing |
| Sora 2 | Pro 720p | $3.00 | openai.com/api/pricing |
| Sora 2 | Pro ~1080p | $5.00 | openai.com/api/pricing |
| Veo 3.1 | Fast (no audio) | $1.00 | fal.ai |
| Veo 3.1 | Fast (with audio) | $1.50 | fal.ai |
| Veo 3.1 | Standard 1080p | $2.00 | fal.ai |
| Veo 3.1 | 4K (with audio) | $6.00 | fal.ai |
| Seedance 2.0 | Estimated | ~$0.60 | Third-party estimate |
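The normalization behind this table is simple multiplication, and it can be reproduced in a few lines if you want to re-run it for a different clip length. The sketch below uses only the per-second rates quoted in this article; the names `PER_SECOND_USD` and `normalize` are illustrative, and Seedance 2.0 is left out because only a per-clip estimate exists for it.

```python
# Per-second rates (USD) as quoted in this article; not fetched from any API.
PER_SECOND_USD = {
    ("Sora 2", "Standard 720p"): 0.10,
    ("Sora 2", "Pro 720p"): 0.30,
    ("Sora 2", "Pro ~1080p"): 0.50,
    ("Veo 3.1", "Fast (no audio)"): 0.10,
    ("Veo 3.1", "Fast (with audio)"): 0.15,
    ("Veo 3.1", "Standard 1080p"): 0.20,
    ("Veo 3.1", "4K (with audio)"): 0.60,
}


def normalize(rates, seconds=10):
    """Convert per-second rates into the cost of one clip of `seconds` length."""
    return {key: round(rate * seconds, 2) for key, rate in rates.items()}


if __name__ == "__main__":
    table = normalize(PER_SECOND_USD)
    # Print cheapest first so tiers are easy to compare across models.
    for (model, tier), cost in sorted(table.items(), key=lambda item: item[1]):
        print(f"{model:8} {tier:18} ${cost:.2f}")
```

Passing `seconds=8` gives figures for a duration that both Sora 2 and Veo 3.1 actually offer, which may be more useful for budgeting than the 10-second reference length.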

Quality, Physics, and Audio — Where Each Model Excels

Beyond specifications and pricing, the true differentiator between these three models lies in the subjective but critically important dimensions of visual quality, physical realism, and audio sophistication. These are the areas where you actually see and hear the difference in your final output, yet they are the hardest to quantify in a comparison table. Understanding these quality dimensions requires looking past marketing language and examining what each model actually produces in practice across different content scenarios. The quality differences are not marginal — they represent fundamentally different engineering philosophies that make each model distinctly superior for certain categories of content.

Visual Quality and Color Science

Veo 3.1 produces the most visually polished output of the three models, with color science that production professionals consistently describe as "cinema-grade." The 4K output is not merely higher resolution — it demonstrates superior color grading with more natural skin tones, better highlight and shadow detail retention, and more sophisticated handling of mixed lighting conditions compared to both competitors. This quality difference becomes especially evident in scenes with challenging lighting, where Veo 3.1 maintains detail in bright highlights while preserving information in deep shadows that other models tend to clip or crush into flat black. Seedance 2.0 delivers strong visual quality at 2K that competes favorably with Veo 3.1 when compared at equivalent resolutions, particularly excelling in scenes with vibrant color palettes and dynamic camera movements where its co-generation approach produces fluid and coherent motion. Sora 2 focuses less on cinematic color grading and more on photorealistic accuracy — its colors may appear less stylistically "polished" but are arguably more true-to-life, which is precisely what you want for product demonstrations, documentation footage, and documentary-style content where accuracy trumps aesthetics.

Physics Simulation and Motion Realism

Sora 2's physics engine remains the industry benchmark as of February 2026, and the gap between it and the competition in this specific dimension is substantial. Objects in Sora 2 videos respond to gravity, momentum, and collision forces in ways that are nearly indistinguishable from real-world footage captured with a camera. Water flows realistically around obstacles with proper turbulence and surface tension effects, fabrics drape and blow in wind with accurate weight simulation, and light refracts through transparent materials with physically correct Fresnel effects that respond to viewing angle. This is not just about making videos look aesthetically pleasing — it is about making them look physically believable. When a glass falls off a table in a Sora 2 video, it shatters and bounces exactly as a real glass would, with fragment trajectories and sound effects that match the visual physics. Seedance 2.0 also demonstrates excellent physics simulation that has improved significantly from version 1.5, though expert evaluations generally rate it slightly below Sora 2 for complex scenarios involving multi-object interactions with different material properties. Veo 3.1 produces acceptable physics for most common scenarios but can occasionally generate physically implausible interactions in edge cases involving fluid dynamics or soft-body deformation.

Audio Integration and Dialogue Quality

The audio landscape across AI video models has evolved rapidly, with all three now offering native audio generation — but the quality gap between implementations remains significant and can be the deciding factor for content that relies on sound. Veo 3.1 leads decisively in dialogue quality with tightly synchronized lip movements and natural-sounding speech that can produce multiple distinct character voices within a single scene. Its environmental sound effects are contextually appropriate and well-mixed with the dialogue track, creating a cohesive audio-visual experience that requires minimal post-production audio work. Seedance 2.0's audio-visual co-generation approach produces uniquely integrated sound that feels organic precisely because the audio and video are generated as a unified whole rather than as separate tracks that are subsequently synchronized. Its audio reference input capability — a feature unique to Seedance 2.0 — allows creators to influence the mood, genre, and style of generated audio by providing reference tracks, opening creative possibilities that simply do not exist with the other two models. Sora 2 generates competent audio that enhances its already-strong visual output, and its environmental sound effects are well-matched to the physics simulation, though dialogue naturalness is an area where OpenAI continues to iterate and improve.

How to Cut Your AI Video Costs by 60% or More

For individual creators generating a handful of videos per month, official API pricing is perfectly manageable — a few dollars per video is a reasonable cost of doing business. But when you scale to dozens or hundreds of generations per month — whether for A/B testing marketing creative, building out a content library, iterating on prompt strategies, or running a production agency serving multiple clients — costs compound rapidly and can become a significant line item in your budget. Consider the math: a marketing team generating 50 ten-second Sora 2 Pro videos per month at 1080p faces $250 in generation costs alone, and that number increases dramatically once you factor in failed generations, prompt iteration rounds, quality re-rolls, and the inevitable creative exploration that produces videos you ultimately do not use.
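To make that math concrete, here is a minimal sketch of the monthly calculation. The function name and the `attempts_per_final` multiplier are assumptions introduced for illustration; the figure of three attempts per usable video is a placeholder, not a measured number.

```python
def monthly_generation_cost(final_videos, seconds, rate_per_second,
                            attempts_per_final=1.0):
    """Monthly spend in USD when every generated second is billed.

    attempts_per_final is a hypothetical multiplier covering failed runs,
    prompt iterations, and re-rolls needed per video you actually keep.
    """
    billed_seconds = final_videos * seconds * attempts_per_final
    return round(billed_seconds * rate_per_second, 2)


if __name__ == "__main__":
    # 50 ten-second Sora 2 Pro clips at ~1080p ($0.50 per second).
    print(monthly_generation_cost(50, 10, 0.50))  # 250.0
    # Same output target, assuming three attempts per usable clip.
    print(monthly_generation_cost(50, 10, 0.50, attempts_per_final=3.0))  # 750.0
```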

The most impactful cost-saving strategy available today is using aggregated API providers that offer access to multiple models through a unified endpoint. These platforms negotiate volume pricing with model providers and pass savings on to end users, often at 40–60% below official API rates. For Sora 2 and Veo 3.1 specifically, laozhang.ai offers one of the most cost-effective access points available. Their Sora 2 access costs $0.15 per request for 720p video (10–15 seconds), compared to $1.00–$1.50 through the official API for equivalent output. Their Veo 3.1 fast mode runs at $0.15 per request, versus $1.00–$1.50 through fal.ai's comparable fast tier for a 10-second clip. The critical differentiator is the no-charge-on-failure policy — failed generations, which can account for 10–20% of requests during active prompt development, cost nothing. If you are evaluating different API providers for reliability and uptime, we have published a detailed guide on finding the most stable Sora 2 API access that compares providers across several performance dimensions.
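A rough way to compare the two billing styles is to compute the expected spend per usable clip. The sketch below assumes, purely for illustration, that a per-second API bills failed attempts in full; whether a given provider actually does so should be checked against its own terms. Function names are hypothetical.

```python
def cost_per_usable_clip(price_per_attempt, failure_rate, charged_on_failure):
    """Expected USD spent to obtain one successful clip."""
    if not 0 <= failure_rate < 1:
        raise ValueError("failure_rate must be in [0, 1)")
    if not charged_on_failure:
        return price_per_attempt
    # Expected number of attempts until the first success is 1 / (1 - p).
    return price_per_attempt / (1 - failure_rate)


def savings_percent(baseline, alternative):
    """Percentage saved by paying `alternative` instead of `baseline`."""
    return round((baseline - alternative) / baseline * 100, 1)


if __name__ == "__main__":
    # $1.00 per clip with a 20% failure rate (assumed billed on failure)
    official = cost_per_usable_clip(1.00, 0.20, charged_on_failure=True)
    # $0.15 per request with no charge on failure
    aggregated = cost_per_usable_clip(0.15, 0.20, charged_on_failure=False)
    print(official, aggregated, savings_percent(official, aggregated))
```

With the figures quoted above, the per-request price alone already works out to an 85% reduction against $1.00, so the failure policy adds to the saving rather than creating it.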

Beyond provider selection, several operational strategies can further reduce your video generation costs without sacrificing output quality. First, generate at the lowest viable resolution for your distribution channel — many social media platforms compress video aggressively during upload, making the quality difference between 720p and 1080p generation virtually invisible in the final published post, so paying the 1080p premium often delivers no perceptible benefit to your audience. Second, batch your prompt iterations at lower quality tiers before running final generations at higher settings — use Sora 2 standard at $0.10 per second to validate concepts, then switch to Pro or Veo 3.1 only for final renders once the prompt is dialed in. Third, use shorter clip durations when possible, since all platforms bill per second and engagement data consistently shows that many social media videos perform better at 5–8 seconds than at longer durations anyway. Fourth, consider combining models strategically: use the most affordable model for the task at hand rather than defaulting to your "favorite" model for everything.
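The second strategy, drafting cheaply and rendering once at the expensive tier, is easy to quantify. This is a small sketch under the assumption that every draft and the final render have the same length; the function names and the choice of five drafts are illustrative only.

```python
def draft_then_final(drafts, seconds, draft_rate, final_rate):
    """Cost of iterating at a cheap tier, then rendering once at the final tier."""
    return round(drafts * seconds * draft_rate + seconds * final_rate, 2)


def all_at_final_tier(drafts, seconds, final_rate):
    """Cost of doing every iteration and the final render at the expensive tier."""
    return round((drafts + 1) * seconds * final_rate, 2)


if __name__ == "__main__":
    # Five 8-second drafts on Sora 2 standard ($0.10/s),
    # then one final render on Sora 2 Pro ~1080p ($0.50/s).
    print(draft_then_final(5, 8, 0.10, 0.50))  # 8.0
    print(all_at_final_tier(5, 8, 0.50))       # 24.0
```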

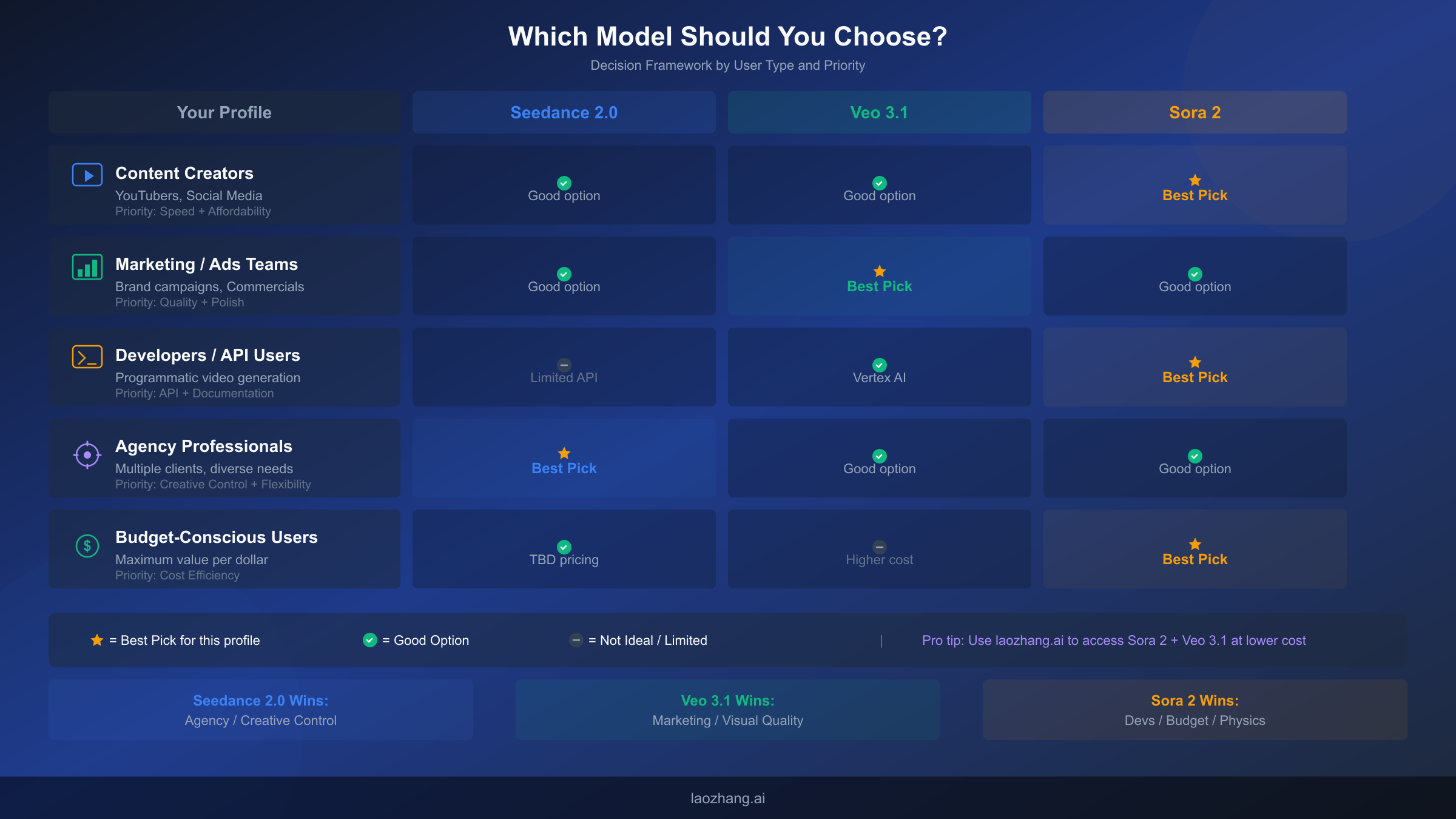

Which Model Should You Choose? (By Use Case)

The answer to "which model is best" always depends on who is asking. A solo YouTuber creating weekly social content has fundamentally different requirements than a marketing agency producing national-brand campaign assets, and a developer building a video generation API integration has different priorities than either of them. Instead of offering a generic one-size-fits-all recommendation, this section walks through the five most common user profiles and explains exactly why one model fits better than the others for each specific scenario. The goal is not just to tell you which to choose — it is to explain the reasoning clearly enough that you can confidently make the decision even if your situation does not exactly match one of these profiles.

Content Creators and YouTubers

If you create content for YouTube, TikTok, Instagram, or similar platforms, Sora 2 is likely your best starting point for several converging reasons. Social media content rarely benefits from 4K resolution since platforms compress video aggressively during upload, making Sora 2's 720p to ~1080p output more than sufficient for any current distribution channel. The standard 720p tier at $0.10 per second delivers the lowest first-party per-video cost in this entire comparison, which matters enormously when you are generating multiple videos per week and experimenting with different creative approaches. Sora 2's superior physics simulation also makes it ideal for the kind of attention-grabbing, visually striking content that performs well on social platforms: dramatic product reveals with realistic object interactions, surreal visual effects that obey natural physics, or hyper-realistic scenarios that stop viewers mid-scroll. The Storyboard feature enables multi-shot planning that aligns well with narrative content formats popular on YouTube.

Marketing and Advertising Teams

For brand campaigns, product launches, and commercial advertising, Veo 3.1 is the recommended choice. Marketing video needs to look polished, professionally graded, and visually consistent with brand guidelines, and Veo 3.1's cinema-grade color science and 4K resolution deliver the visual quality that brand standards demand without requiring extensive post-production color correction. The native dialogue audio capability is particularly valuable for marketing — you can generate spokesperson videos, product explanation clips, and testimonial-style content with synchronized speech without needing to build a separate voice-over pipeline. The higher per-video cost is easily justified when each piece of content represents a brand-level investment that will be seen by hundreds of thousands of viewers, and the quality difference between Veo 3.1 and the alternatives is most visible precisely in the controlled lighting, precise color accuracy, and polished aesthetic that professional advertising demands.

Developers and API-First Users

Developers building applications that integrate video generation should strongly consider Sora 2 for its superior API infrastructure and documentation ecosystem. OpenAI's API documentation is the most comprehensive of the three, with well-maintained SDKs for Python and JavaScript, clear rate limit documentation, predictable billing per second of generated content, and robust error handling that simplifies production deployments. The fixed-duration tiers (4, 8, or 12 seconds) simplify programmatic integration since you always know exactly how long each generation will take and how much it will cost before sending the request. Veo 3.1 through Google's Vertex AI is a solid alternative for developers already deeply embedded in the Google Cloud ecosystem, though the video generation API surface is less mature than OpenAI's and requires more initial setup. Seedance 2.0 has limited public API access as of February 2026, making it less suitable for production applications that require reliable, well-documented programmatic access.
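Because durations are fixed and billing is per second, a client can validate a request and know its price before anything is sent. The sketch below illustrates that idea only: `VideoJob`, `make_job`, and the field names are hypothetical and are not taken from OpenAI's SDK, and the rates are the ones quoted earlier in this article.

```python
from dataclasses import dataclass

ALLOWED_SECONDS = (4, 8, 12)  # fixed duration tiers described above

# (model, size) -> USD per second, as quoted in this article
RATES = {
    ("sora-2", "1280x720"): 0.10,
    ("sora-2-pro", "1280x720"): 0.30,
    ("sora-2-pro", "1792x1024"): 0.50,
}


@dataclass(frozen=True)
class VideoJob:
    """A validated generation request (hypothetical shape, not an SDK type)."""
    model: str
    prompt: str
    seconds: int
    size: str

    def estimated_cost(self) -> float:
        return round(RATES[(self.model, self.size)] * self.seconds, 2)


def make_job(model: str, prompt: str, seconds: int, size: str) -> VideoJob:
    """Reject unsupported combinations locally instead of paying to find out."""
    if seconds not in ALLOWED_SECONDS:
        raise ValueError(f"seconds must be one of {ALLOWED_SECONDS}")
    if (model, size) not in RATES:
        raise ValueError(f"no known rate for {model} at {size}")
    return VideoJob(model, prompt, seconds, size)


if __name__ == "__main__":
    job = make_job("sora-2-pro", "a glass falling off a table", 12, "1792x1024")
    print(job.estimated_cost())  # 6.0
```

Checking the price up front also makes it straightforward to enforce a per-request or per-day spending cap in application code.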

Agency Professionals Handling Multiple Clients

Creative agencies working across multiple clients with diverse aesthetic needs and brand guidelines should consider Seedance 2.0 as their primary workhorse model. The quad-modal input system provides the creative flexibility that agency work demands — you can match specific client brand guidelines by uploading reference images for visual consistency, replicate precise camera movements from approved reference footage, maintain character appearance across campaign assets using reference images, and even set the audio mood by providing reference music tracks. The multi-scene narrative capability allows generating connected sequences rather than isolated clips, which streamlines production workflows for campaigns that require visual storytelling across multiple scenes. In practice, agencies can complement Seedance 2.0 with Veo 3.1 for premium hero content requiring 4K polish and Sora 2 for rapid concept development and client presentation mock-ups, creating a strategic multi-model pipeline that covers the full spectrum of client requirements.

Budget-Conscious Users Seeking Maximum Value

If cost efficiency is your primary constraint, Sora 2 standard at $0.10 per second offers the best value proposition by a significant margin. A 10-second video at 720p costs just $1.00 through the official API, and even less through aggregated providers that offer per-request pricing. The key insight for budget-conscious users is that 720p video with exceptional physics quality (which Sora 2 excels at) often looks more impressive and professional than 1080p video with mediocre physics and motion — so choosing the right model at a lower resolution can paradoxically produce superior-looking results at a fraction of the cost of a "higher spec" alternative.

How to Get Started With Each Model

Getting from "I want to try this" to "I am actually generating videos" requires navigating different onboarding processes for each model, and the ease of access varies significantly between them. Here is a practical guide that covers every major access pathway currently available for each model, including both official channels and third-party alternatives that may offer simpler setup or lower costs.

Sora 2: Multiple Access Pathways Available

The fastest way to start generating videos with Sora 2 is through a ChatGPT Plus subscription at $20 per month, which gives you 1,000 generation credits and a web-based interface that requires zero technical setup — you simply type a prompt and receive video output. For developers who need API access, you will need an OpenAI API key from platform.openai.com, after which you can send generation requests directly to the sora-2 or sora-2-pro endpoints using the well-documented REST API or official Python/JavaScript SDKs. The API documentation includes comprehensive code examples, parameter descriptions, and troubleshooting guides. If you need a simpler integration point or want to reduce per-generation costs, aggregated providers like laozhang.ai provide Sora 2 API access through a single endpoint with OpenAI-compatible request formatting, meaning you can typically use existing OpenAI SDK code with just a base URL change and start generating immediately.

Veo 3.1: Platform-Dependent Access

Veo 3.1 access depends heavily on your preferred platform and technical requirements. The simplest consumer entry point is through Google's AI Studio interface or a Google AI Pro subscription at $19.99 per month, which provides a web-based generation experience similar to ChatGPT's Sora integration. For enterprise and programmatic API access, Google's Vertex AI platform provides full API capabilities with both quality and fast generation tiers, though the initial setup requires a Google Cloud account and basic familiarity with GCP project configuration. Third-party platforms like fal.ai offer alternative API access with their own pricing structures, simpler onboarding than the official Vertex AI process, and often faster initial setup for developers who want to start generating without the overhead of GCP account configuration. Through aggregated providers, you can also access Veo 3.1 alongside Sora 2 through the same API endpoint without managing separate platform accounts or billing relationships.

Seedance 2.0: Early Access Through ByteDance

Seedance 2.0 is currently available primarily through ByteDance's Jimeng AI platform, with public API access being progressively expanded as the model moves toward general availability. The Jimeng AI web interface provides the most straightforward access path for creators who want to experiment with Seedance 2.0's unique quad-modal input capabilities, including the ability to upload reference videos, multiple images, and audio tracks alongside text prompts. Regional availability may vary, and pricing is expected to be finalized as the model transitions from pre-release to full commercial availability. For professional users who need to start building workflows around Seedance 2.0 now, monitoring official ByteDance announcements and establishing early access through partner platforms is the recommended approach to ensure you are ready when full API access launches.

The Pro Workflow — Combining Multiple Models

Professional video production teams in 2026 are increasingly adopting multi-model workflows that leverage each model's specific strengths rather than committing exclusively to a single platform. This approach requires more operational planning and familiarity with multiple systems, but it delivers results that no single model can match on its own — and it provides built-in resilience against the platform outages and rate limits that inevitably affect any single provider. The fundamental insight driving this trend is that different stages of the creative process genuinely benefit from different model capabilities, and the cost of using the "wrong" model for a given task is both financial (paying for capabilities you do not need) and qualitative (getting inferior results compared to what a better-suited model would produce).

A practical multi-model production workflow typically follows three distinct phases, each optimized for a different priority. In the concept development phase, use Sora 2 standard at $0.10 per second to rapidly prototype ideas and test prompt variations at minimal cost. Generate multiple concept versions at 720p to explore different creative directions — at $1.00 per 10-second clip, you can produce 20 concept variations for $20, which is vastly more efficient than trying to refine a single expensive high-quality generation through iterative prompting. Once you have identified the creative direction that works for the project, the production phase is where you select the optimal model for the final output based on the content requirements. Use Veo 3.1 for any content requiring 4K resolution, cinema-grade color science, or synchronized dialogue audio — this is where its strengths justify the higher cost. Use Sora 2 Pro for content where physics realism is the primary quality dimension, such as product demonstrations where objects need to interact naturally. Use Seedance 2.0 when you need precise creative control through reference video inputs, character consistency across multiple clips, or multi-scene narrative continuity. The final post-production phase may involve combining outputs from multiple models into a single edit — for example, using Veo 3.1 for establishing shots that benefit from 4K resolution and cinematic color, and Sora 2 for action sequences where physics accuracy and motion realism drive the impact.
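The production-phase guidance above amounts to a lookup from shot requirements to models. A minimal sketch of that mapping follows; the requirement keys and the function name are invented for illustration, and falling back to Sora 2 standard when nothing special is required is an assumption based on the concept-phase advice.

```python
# Requirement -> model, following the production-phase guidance in this section.
REQUIREMENT_TO_MODEL = {
    "4k": "Veo 3.1",
    "cinema_color": "Veo 3.1",
    "dialogue": "Veo 3.1",
    "physics": "Sora 2 Pro",
    "reference_video": "Seedance 2.0",
    "character_consistency": "Seedance 2.0",
    "multi_scene": "Seedance 2.0",
}

DEFAULT_MODEL = "Sora 2"  # cheapest tier, used when no requirement applies


def models_for(requirements):
    """Return the sorted list of models suggested for one shot."""
    unknown = [r for r in requirements if r not in REQUIREMENT_TO_MODEL]
    if unknown:
        raise ValueError(f"unknown requirements: {unknown}")
    models = {REQUIREMENT_TO_MODEL[r] for r in requirements}
    return sorted(models) if models else [DEFAULT_MODEL]


if __name__ == "__main__":
    print(models_for(["4k", "dialogue"]))     # one model covers both
    print(models_for(["4k", "multi_scene"]))  # two candidates: a human decides
```

When more than one model comes back, the requirements conflict and the shot is a candidate for splitting, which is the situation the post-production phase is meant to handle.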

This multi-model approach also provides operational resilience. If one platform experiences downtime, rate limiting, or quality degradation during a tight production deadline, you can redirect generation requests to an alternative model that produces acceptable results. This redundancy is particularly valuable for agencies and production houses operating under client deadlines where missing a delivery date is not an option. Teams that want multi-model access without managing separate API accounts for each provider can use aggregated platforms that expose multiple models through a single endpoint, which reduces the overhead of maintaining parallel integrations while preserving model flexibility.
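The redirect-on-failure idea can be sketched as an ordered fallback loop. Everything here is an assumption made for illustration: `GenerationError`, `generate_with_fallback`, and the two stub backends are hypothetical names, and a real integration would wrap each provider's own client and error types.

```python
from typing import Callable, Sequence


class GenerationError(Exception):
    """Hypothetical error a backend raises on downtime, rate limits, or a rejected result."""


def generate_with_fallback(
    prompt: str,
    backends: Sequence[tuple[str, Callable[[str], str]]],
) -> tuple[str, str]:
    """Try each (name, generate) pair in order and return the first success.

    Returns the backend name together with its result. If every backend
    fails, raises GenerationError listing each failure.
    """
    failures: list[str] = []
    for name, generate in backends:
        try:
            return name, generate(prompt)
        except GenerationError as exc:
            failures.append(f"{name}: {exc}")
    raise GenerationError("all backends failed: " + "; ".join(failures))


# Stub backends standing in for real provider clients.
def rate_limited_backend(prompt: str) -> str:
    raise GenerationError("rate limited")


def healthy_backend(prompt: str) -> str:
    return f"video for {prompt!r}"


used, result = generate_with_fallback(
    "a red kite",
    [("primary", rate_limited_backend), ("secondary", healthy_backend)],
)
print(used, result)
```

The order of the backend list encodes your preference, so the best-suited model for the shot goes first and the acceptable alternative second.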

Frequently Asked Questions

Is Seedance 2.0 better than Sora 2?

Neither model is universally "better"; they excel in different areas because they were built around different engineering priorities. Seedance 2.0 offers superior creative control through its quad-modal input system, accepting text, images, video clips, and audio references simultaneously, and supports longer clips of up to 15 seconds versus Sora 2's maximum of 12 seconds. Sora 2 delivers stronger physics simulation and has a more mature API ecosystem with transparent per-second pricing starting at $0.10. Choose Seedance 2.0 when creative flexibility, multi-scene storytelling, and reference-based generation are your priorities. Choose Sora 2 when physical realism, cost efficiency, and robust API integration matter most for your workflow. For many professional production teams, using both models for their respective strengths produces the best overall results.

How much does Veo 3.1 actually cost?

Veo 3.1 pricing varies significantly depending on your chosen access platform and generation quality tier. Through Google AI Pro subscriptions, the effective cost is approximately $0.16 per second based on the $19.99 monthly fee for roughly 1,000 credits. Through Google's enterprise Vertex AI platform, quality-tier generation costs $0.40 to $0.75 per second for 1080p to 4K output, while the fast tier runs at approximately $0.15 per second. Third-party platforms like fal.ai offer fast-mode access starting from $0.10 per second without audio. For a standard 10-second video, expect to pay between $1.00 at the lowest tier and $7.50 for premium 4K with synchronized audio, depending on your specific quality and feature requirements.
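To see how the $1.00 to $7.50 range for a 10-second clip follows from the per-second rates, here is a small sketch that multiplies each rate quoted above by the clip length. The tier labels and the `clip_costs` helper are hypothetical, and the rates are the approximate figures from this article rather than values read from any pricing API.

```python
from decimal import Decimal

# Approximate per-second rates quoted in this article; labels are illustrative.
VEO_3_1_RATES_PER_SECOND = {
    "fal.ai fast mode, no audio": Decimal("0.10"),
    "Vertex AI fast tier": Decimal("0.15"),
    "Google AI Pro (effective)": Decimal("0.16"),
    "Vertex AI quality tier (low end)": Decimal("0.40"),
    "Vertex AI quality tier (high end)": Decimal("0.75"),
}


def clip_costs(seconds: int) -> dict[str, Decimal]:
    """Cost of one clip of the given length at each quoted rate."""
    return {tier: rate * seconds for tier, rate in VEO_3_1_RATES_PER_SECOND.items()}


for tier, cost in clip_costs(10).items():
    print(f"{tier}: ${cost}")
```

`Decimal` is used instead of floats so that the per-clip figures match the quoted prices exactly instead of picking up binary rounding noise.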

Which AI video model produces the highest quality output?

Quality assessment depends entirely on which dimension you are measuring and what type of content you are producing. For raw resolution and color science, Veo 3.1 at 4K is unmatched in the current market — its cinema-grade output is the closest any AI model gets to professional camera footage in terms of visual fidelity, dynamic range, and color accuracy. For physics realism where objects need to interact naturally with gravity, momentum, fluid dynamics, and light refraction, Sora 2 leads the industry by a significant margin. For creative versatility and tightly integrated audio-visual output, Seedance 2.0's co-generation approach produces results with exceptional internal coherence. Most professionals define "best quality" based on the specific requirements of their content rather than absolute technical benchmarks.

Can I access multiple AI video models through a single API?

Yes. Aggregated API platforms provide access to both Sora 2 and Veo 3.1 through unified API endpoints. Services like laozhang.ai allow you to switch between models by changing a request parameter rather than integrating with entirely separate platforms, which simplifies both development and billing. Seedance 2.0 API access is more limited as of February 2026 but is expected to expand as the model progresses toward general availability. Unified providers also often offer per-request pricing that can be more cost-effective than official per-second API rates.
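As a rough picture of what "changing a request parameter" means, the sketch below builds two request bodies that differ only in their model field. It makes no network call, and every detail is an assumption: the field names (`model`, `prompt`, `duration_seconds`) and the model identifiers (`sora-2`, `veo-3.1`) are placeholders, not the schema of laozhang.ai or any other provider, so check your provider's API reference for the real names.

```python
import json


def build_request(model: str, prompt: str, seconds: int) -> str:
    """Serialize one generation request as JSON (hypothetical field names)."""
    body = {"model": model, "prompt": prompt, "duration_seconds": seconds}
    return json.dumps(body, sort_keys=True)


def differing_fields(request_a: str, request_b: str) -> list[str]:
    """Names of the fields whose values differ between two JSON requests."""
    a, b = json.loads(request_a), json.loads(request_b)
    return sorted(key for key in a.keys() | b.keys() if a.get(key) != b.get(key))


# Placeholder model identifiers; real identifiers depend on the provider.
sora_request = build_request("sora-2", "a kite over a beach", 8)
veo_request = build_request("veo-3.1", "a kite over a beach", 8)

print(differing_fields(sora_request, veo_request))  # ['model']
```

Keeping the rest of the request identical is what makes side-by-side comparisons and fallback routing cheap to implement on a unified endpoint.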

What is the fastest AI video generation model in 2026?

Seedance 2.0 currently holds the speed advantage among the three models, generating a 5-second video in under 60 seconds — approximately 30% faster than its predecessor Seedance 1.5. Veo 3.1 typically requires 60 to 90 seconds for an 8-second clip through standard quality generation, though fast-tier options on platforms like fal.ai can reduce this. Sora 2 generation times vary depending on the selected resolution, model tier, and current platform load. For production workflows where generation speed is a critical bottleneck, the fast tiers available through third-party providers — including Veo 3.1 fast mode and Sora 2 standard — offer the best balance of speed and output quality, with typical generation times under two minutes for 10-second clips.