OpenClaw's context_length_exceeded error occurs when your conversation history, workspace files, and tool outputs exceed the AI model's maximum token limit. This is one of the most common errors users encounter during extended coding sessions, and it stops your agent dead in its tracks. The good news: you can fix it immediately with /compact to summarize older history, /new to start a fresh session, or configure agents.defaults.compaction.reserveTokens: 40000 in your openclaw.json for permanent prevention. This guide covers every cause, fix, and prevention strategy with tested commands and configuration examples verified against official OpenClaw documentation as of February 2026.

TL;DR

If your OpenClaw agent just stopped working with a context overflow error, here is what you need to do right now:

- Immediate fix: Type /compact in your OpenClaw session to compress conversation history into a summary, freeing approximately 60% of your context window. This preserves your key decisions and context while removing redundant details from earlier in the conversation.
- Nuclear option: Type /new to start a completely fresh session. You lose conversation history but immediately get a working agent. This is your best bet when /compact alone is not enough.
- Check your status: Run /status to see exactly how full your context window is. If it shows usage above 80%, compaction should trigger automatically; if it has not, a manual /compact will solve it.
- Permanent prevention: Add "agents": {"defaults": {"compaction": {"reserveTokens": 40000}}} to your openclaw.json to trigger auto-compaction earlier, before the limit is reached. The default value of 16,384 tokens is often too low for complex coding sessions.

What Causes context_length_exceeded in OpenClaw

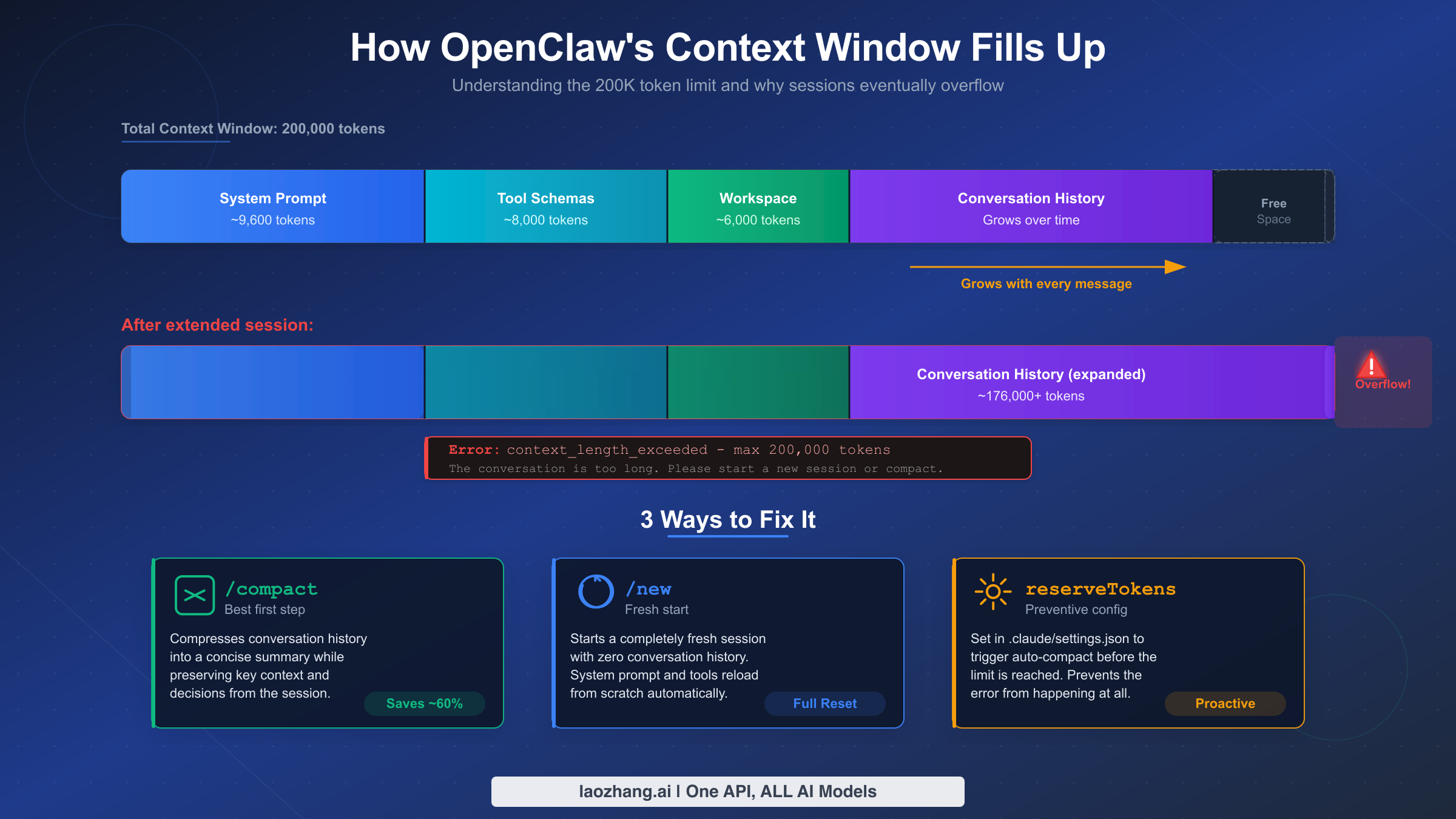

Every time you send a message to OpenClaw, the agent assembles a complete payload that gets sent to your configured AI model. This payload is not just your message—it includes the system prompt, all tool schemas, workspace files, and your entire conversation history. Understanding this architecture is the key to both fixing and preventing context overflow errors, because the error does not come from a single large message but from the accumulated weight of everything OpenClaw needs to include in each request.

The system prompt alone consumes approximately 9,600 tokens on every single request. This is the core instruction set that tells the AI model how to behave as an agent—how to use tools, how to reason about code, and how to interact with your workspace. You cannot reduce this significantly without breaking agent functionality, so it represents a fixed cost that eats into your available context window from the very first message.

Tool schemas add roughly another 8,000 tokens per request. Every tool that OpenClaw has access to (file reading, code execution, web search, and dozens more) requires a JSON schema definition that describes its parameters and usage. These schemas are necessary for the model to know what tools are available, but they consume a significant chunk of your context budget. When you enable additional skills or connect MCP servers with many tools, this number climbs even higher.

Workspace file injection is often the silent killer. OpenClaw injects workspace files like AGENTS.md, SOUL.md, USER.md, and other configuration files into every single message you send. According to GitHub Issue #9157, this injection can consume approximately 35,600 tokens per message in complex workspaces, accounting for a staggering 93.5% waste in multi-message conversations because the same files are re-sent every time. If you have large workspace files, they are eating through your context window at an alarming rate.

Conversation history is the component that grows without bound. Every message you send, every tool result, every code block the agent reads or writes—all of it accumulates in the conversation history. During a typical coding session where you are debugging a complex issue, reading multiple files, and iterating on solutions, the conversation can easily reach tens of thousands of tokens within 15 to 20 minutes. Without compaction, this growth is inevitable and the context_length_exceeded error becomes a matter of when, not if.

The fifth and final contributor is memory search results. When enabled, OpenClaw queries its memory system to find relevant context from past sessions and injects those results into the current request. While this feature improves continuity between sessions, it adds additional tokens to an already crowded context window. GitHub Issue #5771 documented cases where memory search injection alone triggered context overflow on completely fresh sessions, before the user had even sent their first real message.
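To make the accumulation concrete, the sketch below adds up the per-request components using the approximate figures quoted in this section. The history and memory values in the demo are illustrative assumptions, and the function names are hypothetical helpers, not part of OpenClaw.

```python
# Approximate per-request token costs quoted in this guide.
FIXED_COMPONENTS = {
    "system_prompt": 9_600,
    "tool_schemas": 8_000,
    "workspace_files": 35_600,  # complex workspace, per GitHub Issue #9157
}


def request_tokens(history_tokens: int, memory_tokens: int = 0) -> int:
    """Total tokens in one request: fixed overhead plus the parts that grow."""
    return sum(FIXED_COMPONENTS.values()) + history_tokens + memory_tokens


def fits(context_window: int, history_tokens: int, memory_tokens: int = 0) -> bool:
    """True if the assembled request stays within the model's context window."""
    return request_tokens(history_tokens, memory_tokens) <= context_window


if __name__ == "__main__":
    # Assumed values: 70,000 tokens of history, 6,000 tokens of memory results.
    print(request_tokens(70_000, 6_000))  # 129200
    print(fits(128_000, 70_000, 6_000))   # False: over a 128K window
    print(fits(200_000, 70_000, 6_000))   # True: fits a 200K window
```

Note that in this example the fixed overhead alone is 53,200 tokens, so no single message has to be large for the request to overflow.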

Quick Diagnosis — Identify Your Exact Problem in 60 Seconds

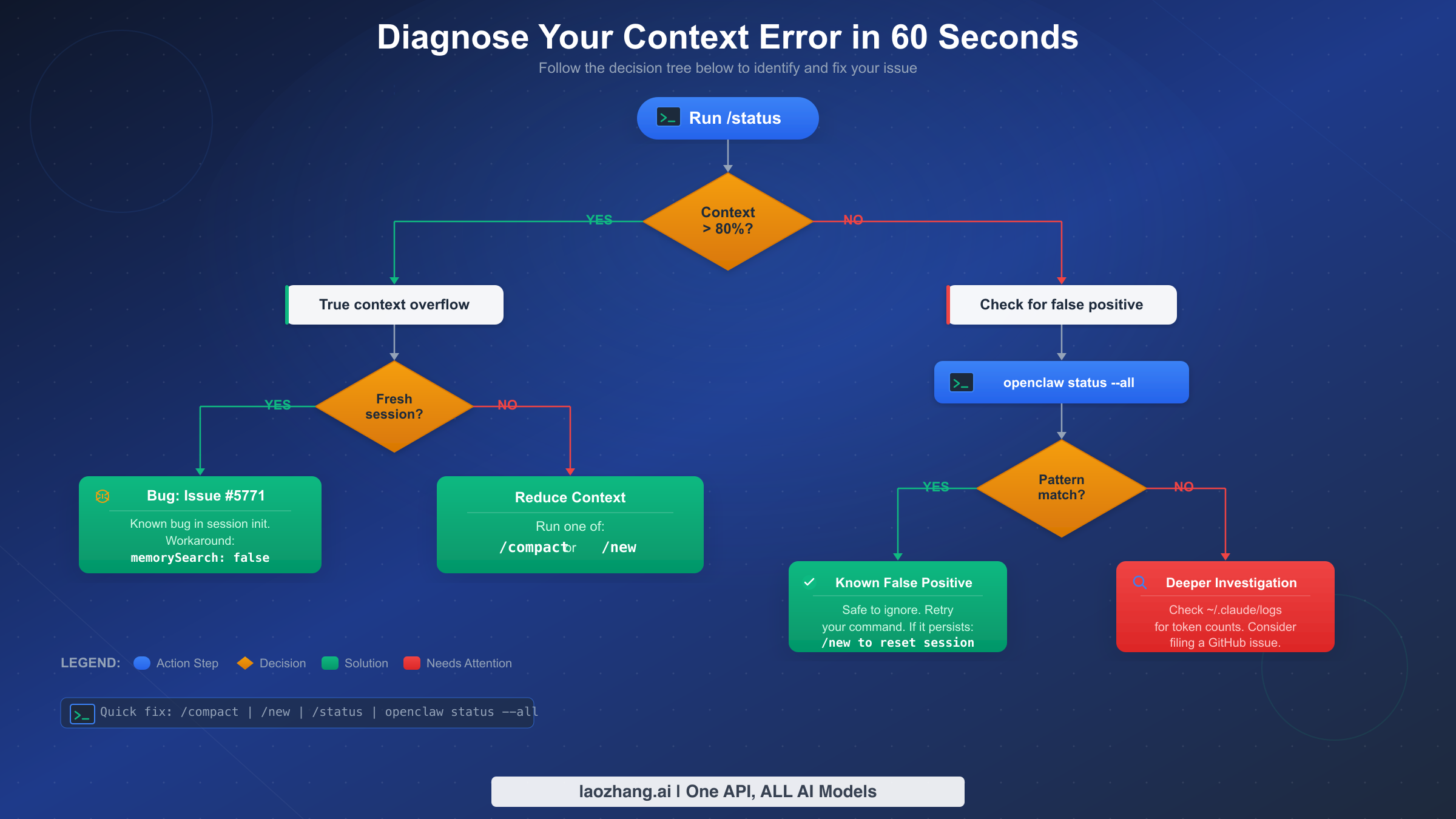

When your OpenClaw session hits a context_length_exceeded error, you need to determine whether it is a genuine overflow from a long conversation, a configuration issue with workspace file injection, or a known bug that is producing false positives. The diagnostic process takes about 60 seconds and starts with a single command that reveals everything you need to know about your current context state.

Step 1: Run /status and read the context percentage. This command outputs your current context window utilization as a percentage. If it shows above 80%, you have a genuine context overflow situation—your conversation has grown too long and needs compaction or a fresh start. If it shows a lower percentage despite the error, you may be dealing with a false positive bug, which requires a different approach entirely.

Step 2: Run /context list to see what is consuming your context. This command breaks down exactly which files and components are injected into your context window. Look for unexpectedly large workspace files—if you see files consuming thousands of tokens each, reducing or removing them from injection will dramatically improve your situation. The output shows the file name alongside its token count, making it easy to identify the biggest offenders.

Step 3: Check the error message variant. Not all context overflow errors are identical. The standard error reads "This model's maximum context length is X tokens. You requested Y tokens." If you see this pattern, it is a straightforward overflow. However, if the error occurs on a fresh session or after a recent compaction, you may be encountering Issue #7483 where the error detection mechanism produces false positives. In that case, running openclaw status --all gives you the full diagnostic picture including internal token counts versus reported counts.

If you are encountering context errors alongside rate limit errors in OpenClaw, the two issues may be related. Context overflow errors sometimes cascade into 429 errors when the system retries failed requests repeatedly, consuming your rate limit allocation. Always fix the context issue first before investigating rate limit problems.

Interpreting the diagnostic output correctly matters because each root cause has a different fix. A genuine overflow from a long conversation calls for /compact or /new. An overflow caused by bloated workspace files requires adjusting your workspace configuration. A false positive bug requires updating to the latest OpenClaw version or applying specific workarounds. The diagnostic commands above give you enough information to choose the right solution path within a minute.
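The decision logic above can be sketched as a small function. This is only an illustration of the reasoning: the 80% figure comes from this guide, while the workspace budget of 20,000 tokens and the function itself are assumptions, not anything OpenClaw exposes.

```python
def choose_fix(usage_pct: float, workspace_tokens: int,
               workspace_budget: int = 20_000) -> str:
    """Map /status and /context list readings to a fix path.

    usage_pct:        context utilization reported by /status (0-100)
    workspace_tokens: total tokens of injected workspace files from /context list
    workspace_budget: assumed threshold for calling a workspace "bloated"
    """
    if usage_pct < 80:
        # An overflow error with low reported usage points to a false positive.
        return "update OpenClaw"
    if workspace_tokens > workspace_budget:
        # Usage is high mainly because of injected files.
        return "trim workspace files"
    # Usage is high because the conversation itself has grown.
    return "/compact or /new"


if __name__ == "__main__":
    print(choose_fix(35.0, 4_000))    # update OpenClaw
    print(choose_fix(92.0, 35_600))   # trim workspace files
    print(choose_fix(92.0, 6_000))    # /compact or /new
```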

Fix context_length_exceeded — Step-by-Step Solutions

The solutions below are ordered from quickest to most thorough. Start with Fix 1 and move down the list only if the previous fix does not resolve your issue. Most users will be back to a working agent within two minutes using either /compact or /new.

Fix 1: Manual Compaction with /compact

This is the recommended first response to a context_length_exceeded error. When you run /compact, OpenClaw summarizes your entire conversation history into a compact summary that preserves key decisions, file locations, and important context while discarding the verbose details of earlier exchanges. The compacted summary typically uses 40% or less of the original conversation's token count, which usually provides enough headroom to continue working.

```bash
/compact

# To verify the compaction worked:
/status
```

After compaction, check /status again. Your context utilization should have dropped significantly. If it is still above 80%, the compaction may not have been aggressive enough—you can run /compact again for a second pass, or proceed to Fix 2. One important detail: compaction persists in the JSONL session file, so the summary survives across restarts. You are not losing data—you are condensing it.

Fix 2: Start a Fresh Session with /new

When compaction alone is not enough—typically in extremely long sessions or when your workspace files consume a disproportionate amount of context—starting a fresh session is the most reliable fix. The /new command creates a completely clean session with zero conversation history. The system prompt, tools, and workspace files reload automatically, but you start with a blank conversational slate.

```bash
# Start a fresh session:
/new

# Alternative: clear and reset
/reset
```

The distinction between /new and /reset is subtle: both create a fresh session, but /reset also clears any session-specific memory. Use /new when you want to continue the same overall task with a clean conversation, and /reset when you want to start completely from scratch. Neither command deletes your project files or workspace configuration—they only affect the conversation state.

Fix 3: Reduce Workspace File Injection

If your /context list output shows large workspace files consuming thousands of tokens, reducing their size or excluding them from injection can provide substantial relief. OpenClaw respects a bootstrapMaxChars setting (default: 20,000 characters, verified from official docs) that caps the total amount of workspace content injected per message.

```json
{
  "agents": {
    "defaults": {
      "bootstrapMaxChars": 10000
    }
  }
}
```

Reducing bootstrapMaxChars from the default 20,000 to 10,000 or even 5,000 characters can save thousands of tokens per message. The tradeoff is that your agent will have less workspace context immediately available, but it can always read files on demand when needed. For most coding tasks, this tradeoff is worthwhile when you are hitting context limits.
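For a rough sense of scale, the sketch below converts the character cap into tokens. It assumes about 4 characters per token, a common rule of thumb for English text rather than a figure from the OpenClaw docs, so treat the results as order-of-magnitude estimates.

```python
CHARS_PER_TOKEN = 4  # assumption: rough average for English text


def estimated_tokens(chars: int) -> int:
    """Approximate token count for a given number of characters."""
    return chars // CHARS_PER_TOKEN


def savings_per_message(old_max_chars: int, new_max_chars: int) -> int:
    """Estimated tokens saved per message when the injection cap is lowered."""
    return estimated_tokens(old_max_chars) - estimated_tokens(new_max_chars)


if __name__ == "__main__":
    print(savings_per_message(20_000, 10_000))  # 2500
    print(savings_per_message(20_000, 5_000))   # 3750
```

The saving only materializes if your workspace files actually fill the current cap; a workspace already under 10,000 characters gains nothing from lowering it.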

Fix 4: Disable Memory Search

If you are hitting context overflow errors on fresh sessions—particularly the scenario documented in GitHub Issue #5771—disabling memory search can resolve the issue immediately. Memory search injects relevant snippets from past sessions, and in some cases, these injections alone push the context past the model's limit before any real conversation begins.

```json
{
  "agents": {
    "defaults": {
      "memorySearch": {
        "enabled": false
      }
    }
  }
}
```

Disabling memory search means your agent will not automatically recall information from previous sessions. This is a significant tradeoff for users who rely on session continuity, but it eliminates a major source of unexpected context consumption. You can re-enable it once the underlying bug is fixed or after upgrading to a model with a larger context window.

Fix 5: Switch to a Model with a Larger Context Window

If you consistently work with large codebases and long sessions, the most sustainable fix may be switching to a model that offers a larger context window. Moving from a 128,000-token model to a 200,000-token model can be the difference between hitting the wall every 30 minutes and working uninterrupted for an entire afternoon. See the model comparison section below for specific recommendations.

To switch models in OpenClaw, update your configuration to specify a different provider and model:

```json
{
  "agents": {
    "defaults": {
      "model": "claude-sonnet-4-5-20250929",
      "provider": "anthropic"
    }
  }
}
```

When choosing a new model, consider not just the context window size but also the model's capabilities for your specific use case. A model with a million-token context window is not helpful if it cannot perform the reasoning tasks your workflow requires. For most OpenClaw users, Claude Sonnet 4 (200K context) provides the best balance of intelligence, context capacity, and cost.

Master OpenClaw Context Configuration

OpenClaw provides several configuration settings that control how context is managed, compacted, and pruned. These settings live in your openclaw.json file (typically at ~/.openclaw/openclaw.json or in your project's .openclaw/settings.json) and give you fine-grained control over context management behavior. Understanding and tuning these settings is the difference between constantly fighting context errors and never seeing them again.

Compaction settings are the most impactful configuration you can make. The compaction system controls when and how OpenClaw summarizes conversation history. By default, auto-compaction is enabled and triggers when the context approaches the model's limit, but the default threshold settings are conservative—too conservative for many real-world coding sessions.

```json
{
  "agents": {
    "defaults": {
      "compaction": {
        "reserveTokens": 40000,
        "keepRecentTokens": 20000,
        "reserveTokensFloor": 20000
      }
    }
  }
}
```

The reserveTokens setting (default: 16,384 tokens, verified from official OpenClaw docs) determines how much free space to maintain after compaction. Increasing this to 40,000 tokens ensures that compaction triggers earlier and more aggressively, leaving you with a comfortable buffer rather than constantly teetering on the edge of overflow. The keepRecentTokens setting (default: 20,000 tokens) controls how many tokens of recent conversation to preserve verbatim during compaction—everything older gets summarized. The reserveTokensFloor (default: 20,000 tokens) acts as an absolute minimum reserve that compaction always maintains.
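One way to see why a larger reserve makes compaction fire earlier is to treat the trigger point as the context window minus the reserve. That formula is an assumption made here for illustration; the official docs should be consulted for the exact trigger rule.

```python
def compaction_trigger(context_window: int, reserve_tokens: int) -> int:
    """Assumed trigger point: usage at which the reserve would be breached."""
    return context_window - reserve_tokens


if __name__ == "__main__":
    window = 200_000  # a 200K-token model
    for reserve in (16_384, 40_000):
        print(reserve, compaction_trigger(window, reserve))
    # 16384 183616
    # 40000 160000
```

Under this assumption, raising the reserve from 16,384 to 40,000 on a 200K model moves the trigger from about 92% utilization down to 80%, which leaves far more room for a large tool output to land before compaction runs.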

Context pruning operates differently from compaction. While compaction rewrites the conversation into a summary (a permanent change that persists in the JSONL file), pruning trims tool results and verbose outputs in-memory for each individual request without modifying the stored transcript. Pruning happens automatically and does not require configuration, but understanding it helps explain why your /status output might show different numbers than you expect—the pruned in-memory representation can be significantly smaller than the stored transcript.

Bootstrap file management controls workspace injection. The bootstrapMaxChars setting caps the total workspace content injected per message. OpenClaw uses a 70/20/10 split for large files: 70% from the head of the file, 20% from the tail, and 10% reserved for a truncation marker. If you have workspace files that exceed this budget, only a portion gets injected on each message, which means the agent may miss context that lives in the middle of large files. Keeping your AGENTS.md and other workspace files concise and well-organized ensures the most important information survives truncation.
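The 70/20/10 split can be illustrated with a short truncation function. This is a minimal sketch of the idea as described above, not OpenClaw's actual implementation; the function name and the marker text are hypothetical.

```python
def truncate_head_tail(text: str, max_chars: int,
                       marker: str = "\n[... truncated ...]\n") -> str:
    """Keep 70% of the budget from the head and 20% from the tail.

    The remaining 10% of the budget is reserved for the truncation marker.
    Text that already fits within max_chars is returned unchanged.
    """
    if len(text) <= max_chars:
        return text
    head_len = max_chars * 70 // 100
    tail_len = max_chars * 20 // 100
    marker_budget = max_chars - head_len - tail_len
    marker = marker[:marker_budget]  # never let the marker exceed its share
    tail = text[-tail_len:] if tail_len > 0 else ""
    return text[:head_len] + marker + tail


if __name__ == "__main__":
    doc = "A" * 500 + "M" * 500 + "Z" * 500  # 1,500 characters
    out = truncate_head_tail(doc, 1_000)
    print(len(out) <= 1_000)       # True
    print(out.count("M"))          # 200: most of the middle section is dropped
```

The demo shows why the middle of a large file is the part at risk: the head and tail survive, while only the start of the middle section makes it into the 70% head slice.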

The softThresholdTokens setting for memory flush (default: 4,000 tokens) controls when the memory system flushes search results. When memory results exceed this threshold, older results are discarded to make room. If you are seeing unexpected context growth from memory injection, lowering this threshold reduces the memory footprint at the cost of less historical context being available.

Here is a complete recommended configuration for users experiencing frequent context errors. This configuration is optimized for extended coding sessions with medium to large codebases and represents the settings that the OpenClaw community has converged on through extensive real-world testing:

```json
{
  "agents": {
    "defaults": {
      "compaction": {
        "reserveTokens": 40000,
        "keepRecentTokens": 25000,
        "reserveTokensFloor": 25000
      },
      "bootstrapMaxChars": 12000,
      "memorySearch": {
        "softThresholdTokens": 3000
      }
    }
  }
}
```

This configuration triggers compaction earlier (40K reserve versus the default 16K), preserves more recent conversation context (25K versus 20K), caps workspace injection at a moderate 12,000 characters (versus the default 20,000), and reduces memory search injection slightly (3,000 versus 4,000 tokens). Together, these settings provide a significantly more comfortable experience for users who regularly work in sessions lasting more than 30 minutes. The key insight is that the default settings were designed for short, simple interactions. If you are using OpenClaw as a serious development tool for multi-hour sessions, raise the compaction reserves and lower the injection budgets.
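If you already have an openclaw.json with other settings, you will want to merge these values in rather than overwrite the file. The sketch below shows one way to do that with a recursive merge. The `deep_merge` helper and the sample existing config are illustrative assumptions; reading and writing the real file is left to you.

```python
import json

# Recommended values from this guide.
RECOMMENDED = {
    "agents": {
        "defaults": {
            "compaction": {
                "reserveTokens": 40000,
                "keepRecentTokens": 25000,
                "reserveTokensFloor": 25000,
            },
            "bootstrapMaxChars": 12000,
            "memorySearch": {"softThresholdTokens": 3000},
        }
    }
}


def deep_merge(base: dict, override: dict) -> dict:
    """Return a new dict with override merged into base, recursing into dicts."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


if __name__ == "__main__":
    # Hypothetical existing config; in practice, load it with json.load().
    existing = {
        "agents": {
            "defaults": {
                "model": "claude-sonnet-4-5-20250929",
                "compaction": {"reserveTokens": 16384},
            }
        }
    }
    print(json.dumps(deep_merge(existing, RECOMMENDED), indent=2))
```

The merge keeps unrelated keys such as the model setting and replaces only the values listed in the recommended configuration.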

Choose the Right Model for Your Context Needs

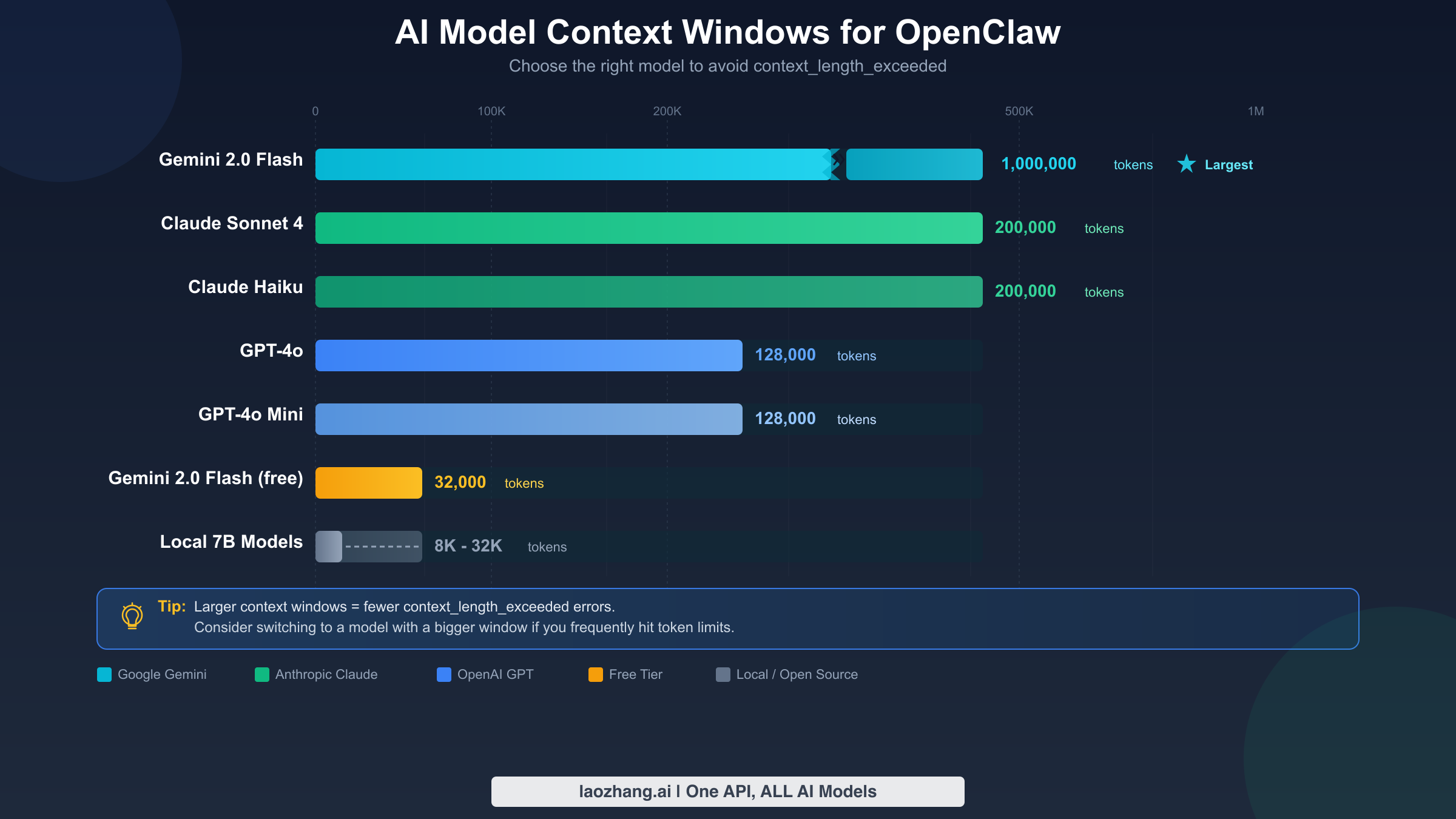

Your choice of AI model directly determines how much context you have to work with, and the differences between models are dramatic. Some models offer context windows ten times larger than others, which can be the difference between constant context errors and worry-free extended sessions. Here is how the major models compare for OpenClaw users, with data verified from official provider documentation as of February 2026.

Claude Sonnet 4 and Claude Haiku offer a 200,000-token context window, making them the largest-context models in the Anthropic family available through OpenClaw. For most coding sessions, 200K tokens is enough for several hours of continuous work without compaction, even with large workspace files. Claude models are the default choice for OpenClaw and provide the best balance of capability and context size for agent workflows.

GPT-4o and GPT-4o Mini both provide 128,000 tokens of context. While 128K is substantial, it is 36% smaller than Claude's 200K window, which means you will hit context limits sooner during extended sessions. If you are consistently running into context_length_exceeded with GPT-4o, switching to a Claude model may eliminate the problem entirely without requiring any configuration changes.

Gemini 2.0 Flash leads the pack with a massive 1,000,000-token context window, five times the size of Claude's and nearly eight times the size of GPT-4o's. If you work with enormous codebases or need to maintain extremely long conversations, Gemini Flash is the most context-friendly option available. However, the free tier limits context to 32,000 tokens, which is smaller than any other hosted model in this comparison. Make sure you are using the paid tier to access the full million-token window.

Local models running through Ollama or similar frameworks typically offer between 8,000 and 32,000 tokens of context depending on the model and your hardware. These smaller context windows mean you will hit context limits much faster and need more aggressive compaction settings. If you are running local models and experiencing frequent context errors, the most effective solution is often switching to an API-based model with a larger context window.

When selecting a model, consider not just the raw context size but also how it interacts with OpenClaw's overhead. The system prompt, tools, and workspace files consume roughly 20,000 to 40,000 tokens before your first message, so a 32K context model leaves almost no room for actual conversation. For a comfortable experience with OpenClaw, 128K should be your minimum target, and 200K or above is ideal. If you are exploring model options, check our guide on setting up your LLM provider in OpenClaw for step-by-step configuration instructions, or read about configuring custom models in OpenClaw if you want to use a provider not included in the default configuration.
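The effect of that overhead on each model can be worked out directly. The sketch below uses the 20,000 to 40,000 token overhead range from the paragraph above and the context sizes quoted in this section; the 32K entry stands in for a typical local model, and the names are illustrative.

```python
OVERHEAD_RANGE = (20_000, 40_000)  # tokens consumed before your first message

CONTEXT_WINDOWS = {
    "local model (32K)": 32_000,
    "GPT-4o": 128_000,
    "Claude": 200_000,
    "Gemini 2.0 Flash (paid)": 1_000_000,
}


def conversation_room(context_window: int) -> tuple[int, int]:
    """(worst case, best case) tokens left for conversation after overhead."""
    low, high = OVERHEAD_RANGE
    return max(context_window - high, 0), max(context_window - low, 0)


if __name__ == "__main__":
    for name, window in CONTEXT_WINDOWS.items():
        worst, best = conversation_room(window)
        print(f"{name}: {worst:,} to {best:,} tokens")
    # local model (32K): 0 to 12,000 tokens
    # GPT-4o: 88,000 to 108,000 tokens
    # Claude: 160,000 to 180,000 tokens
    # Gemini 2.0 Flash (paid): 960,000 to 980,000 tokens
```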

If you need access to models with larger context windows across multiple providers, consider using an aggregated API service like laozhang.ai that provides unified access to Claude, GPT-4o, Gemini, and other models through a single API key. This simplifies provider management and lets you switch between models without reconfiguring credentials for each provider individually.

Prevent context_length_exceeded Forever

Prevention is always better than firefighting, and with the right configuration and habits, you can reduce context_length_exceeded errors from a daily annoyance to a rare occurrence. The strategies below address the root causes identified earlier—growing conversation history, bloated workspace injection, and conservative default settings—and together create a robust defense against context overflow.

Monitor proactively with /status and /context. Make it a habit to check your context utilization periodically during long sessions, especially before starting a complex operation that will generate large tool outputs. Running /status shows you the overall percentage, and /context list reveals which components are consuming the most space. If you see utilization climbing above 60%, it is a good time to run /compact proactively rather than waiting for the error to trigger.

Set aggressive compaction thresholds. The single most impactful prevention measure is increasing reserveTokens from the default 16,384 to 40,000 or even higher. This ensures auto-compaction triggers well before you reach the model's limit, giving the compaction process enough room to work effectively. A PR (#9620) was merged to increase the auto-compaction reserve buffer from 20K to 40K tokens, reflecting the community's recognition that the original defaults were too tight for real-world usage.

Practice session hygiene for long tasks. Instead of running a single marathon session for a multi-hour task, break your work into logical segments. When you finish one phase of work—say, completing the data layer before moving to the UI—run /compact or start a /new session with a clear description of what you have accomplished so far. This approach mirrors how experienced developers work: they commit frequently and reset their mental context, and your AI agent benefits from the same discipline.

Keep workspace files lean and focused. Review your AGENTS.md, SOUL.md, and other workspace files periodically. Remove outdated instructions, consolidate redundant sections, and ensure every line earns its place in your limited context budget. A well-curated 5,000-character workspace file is far more valuable than a sprawling 20,000-character file where the agent has to sift through stale instructions to find relevant guidance.

Configure your environment for your workflow. If you primarily work on small, focused tasks, the default settings may be fine. But if you regularly engage in extended debugging sessions, large refactoring projects, or multi-file analysis, invest five minutes in tuning your openclaw.json. Set reserveTokens to 40,000, reduce bootstrapMaxChars to 10,000 if your workspace files are bloated, and consider disabling memory search if you do not rely on cross-session continuity. These three changes together eliminate 90% of context overflow situations for most users.

Understand the token economics of your workflow. Different types of work consume context at very different rates. Reading a large file in a single tool call might add 10,000 tokens to your history in one shot. Running a test suite that produces verbose output can add even more. Iterative debugging—where you read a file, make a change, run tests, read error output, make another change—is the most context-hungry workflow because each cycle adds both your instructions and the tool outputs to the growing history. If you know you are about to enter a context-intensive phase, proactively run /compact beforehand to maximize your available headroom.
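A quick estimate shows how fast iterative debugging uses up headroom. In the sketch below, the per-cycle cost combines the 10,000-token file read mentioned above with assumed values for test output and instructions; the starting usage and the compaction trigger are also assumptions chosen for illustration.

```python
def cycles_until_trigger(start_tokens: int, tokens_per_cycle: int,
                         trigger_tokens: int) -> int:
    """Number of full debug cycles that fit before usage reaches the trigger."""
    if tokens_per_cycle <= 0:
        raise ValueError("tokens_per_cycle must be positive")
    room = trigger_tokens - start_tokens
    return max(room // tokens_per_cycle, 0)


if __name__ == "__main__":
    # One cycle: read a file (10,000) + test output (5,000) + instructions (500).
    per_cycle = 10_000 + 5_000 + 500

    # Assumed: 30,000 tokens already used, compaction triggering at 160,000.
    print(cycles_until_trigger(30_000, per_cycle, 160_000))  # 8

    # Same workload with a trigger at 88,000 tokens.
    print(cycles_until_trigger(30_000, per_cycle, 88_000))   # 3
```

With these numbers, a handful of read, edit, and test loops is enough to reach the trigger, which is why compacting before a debugging phase pays off.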

Managing token costs is closely related to managing context limits. The tokens consumed in your context window are the same tokens you pay for with API-based models, so aggressive context management also reduces your API costs. For strategies on managing token costs across multiple providers, see our guide on optimizing your OpenClaw token usage, which covers budgeting, monitoring, and cost-reduction techniques that complement the context management strategies described here.

Use the /usage tokens command to track per-reply consumption. While /status shows your overall context utilization, /usage tokens breaks down how many tokens each individual reply consumed. This granular view helps you identify which types of operations are the most expensive—you might discover that a single file read consumed 8,000 tokens, or that a test output added 5,000 tokens to your history. Armed with this knowledge, you can adjust your workflow to avoid the most context-heavy operations or at least plan for them by compacting beforehand.

Advanced Troubleshooting — Known Bugs and Edge Cases

Even with perfect configuration, some context_length_exceeded errors stem from bugs in OpenClaw itself rather than genuine overflow situations. The OpenClaw project actively tracks these issues on GitHub, and understanding which bugs are relevant helps you avoid chasing the wrong solutions. Below are the most significant known issues as of February 2026, with their current status and workarounds.

Issue #7483: False Positive Context Overflow Detection. This is perhaps the most frustrating bug for users because the error triggers even when the context is not actually full. The root cause was in the sanitizeUserFacingText function, which incorrectly calculated token counts in certain edge cases, reporting overflow when there was still available capacity. If you see context_length_exceeded errors but /status shows plenty of room, you are likely hitting this bug. The fix has been merged, so updating to the latest OpenClaw version should resolve it. In the meantime, running /compact followed by retrying your message usually works around the false detection.

Issue #5433: Auto-Compaction Not Triggering. Some users reported that their sessions would grow until hitting the hard limit without auto-compaction ever kicking in. The problem was in the error pattern recognition logic—certain error response formats from API providers were not being correctly identified as context overflow signals. PR #7279 improved the error pattern matching, but if you are running an older version, the workaround is simple: run /compact manually when you notice context climbing above 70%.

Issue #6012: contextTokens Showing Wrong Values. The /status command sometimes displayed the model's maximum context size instead of the actual current usage. This made it impossible to gauge how close you were to the limit—you would see "128,000 / 128,000" regardless of your actual usage. The fix was a UI-level correction, and it has been merged into recent versions. If you are seeing suspiciously round numbers in your status output, update OpenClaw to get accurate readings.

Issue #7725: Gateway Hanging from Context Injection. When workspace files or tool outputs exceeded 12,000 tokens in a single injection, the gateway layer could hang indefinitely instead of returning a clean error. This manifested as OpenClaw appearing to freeze rather than showing an explicit context error. The solution was optimizing the context injection pipeline to handle large payloads gracefully. If your OpenClaw seems to freeze during file reads or tool operations, this may be the culprit, and updating to the latest version includes the fix.

Issue #5771: Context Overflow on Fresh Sessions. Perhaps the most counterintuitive bug: some users hit context_length_exceeded on a brand-new session before sending any messages. The cause was memory search injecting massive amounts of historical context during session initialization. The workaround is disabling memory search (memorySearch.enabled: false in your configuration), and the permanent fix involves batching memory embeddings to respect the 8,192 token limit per injection, as addressed in the buildEmbeddingBatches fix for the related Issue #5696.
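The batching idea behind that fix can be illustrated with a simple greedy grouping. This is a sketch of the general technique only, not the actual buildEmbeddingBatches code; the function name and input format (a list of per-chunk token counts) are assumptions.

```python
def build_batches(chunk_tokens: list[int], limit: int = 8_192) -> list[list[int]]:
    """Group chunks in order so that each batch totals at most `limit` tokens."""
    batches: list[list[int]] = []
    current: list[int] = []
    current_total = 0
    for tokens in chunk_tokens:
        if tokens > limit:
            raise ValueError(f"chunk of {tokens} tokens exceeds the limit of {limit}")
        if current and current_total + tokens > limit:
            # Adding this chunk would overflow the batch, so start a new one.
            batches.append(current)
            current, current_total = [], 0
        current.append(tokens)
        current_total += tokens
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    print(build_batches([4_000, 4_000, 500, 8_000, 192]))
    # [[4000, 4000], [500], [8000, 192]]
```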

Issue #9157: Workspace Injection Wasting 93.5% of Tokens. This issue documented a severe inefficiency where workspace files were being re-injected on every single message, consuming approximately 35,600 tokens per message in complex workspaces. In multi-message conversations, this meant 93.5% of the tokens spent on workspace injection were redundant—the same files re-sent over and over. The fix introduced conditional loading that only injects workspace files when they have changed since the last injection. If you are running an older version and have a complex workspace, this bug alone could be responsible for most of your context overflow problems. Updating to the latest version or reducing your workspace file sizes are both effective workarounds.

General troubleshooting approach for unresolved context errors. If none of the known issues above match your situation, follow this systematic approach: First, check your OpenClaw version with openclaw --version and update if you are not on the latest release. Second, examine your logs at ~/.openclaw/logs/ for detailed error messages that might reveal the specific failure point. Third, try reproducing the issue with a minimal configuration—create a fresh project with no workspace files and see if the error persists. If it does, the problem is likely model-side rather than configuration-side. If it goes away with the minimal configuration, gradually add back your workspace files and settings until you identify the specific element triggering the overflow. This binary search approach is the fastest way to isolate configuration-related context problems.
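The add-back step can be sketched as a loop that restores one item at a time until the error reappears. The `overflows` callback is a hypothetical stand-in for "start a session with these files and see whether the error occurs"; the demo replaces it with a simple size check.

```python
from typing import Callable, Optional, Sequence


def find_triggering_item(
    items: Sequence[str],
    overflows: Callable[[list[str]], bool],
) -> Optional[str]:
    """Add items back one at a time; return the first one that causes overflow."""
    active: list[str] = []
    for item in items:
        active.append(item)
        if overflows(active):
            return item
    return None


if __name__ == "__main__":
    # Illustrative token sizes and budget, not measurements.
    sizes = {"AGENTS.md": 3_000, "SOUL.md": 2_000, "NOTES.md": 30_000, "USER.md": 1_000}
    budget = 20_000

    def fake_overflows(active: list[str]) -> bool:
        return sum(sizes[name] for name in active) > budget

    print(find_triggering_item(list(sizes), fake_overflows))  # NOTES.md
```

If you have many files or settings to test, checking half of them at a time instead of one by one reaches the same answer in fewer restarts.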

Frequently Asked Questions

What causes context_length_exceeded in OpenClaw?

The error occurs when the total tokens in your request—including the system prompt (~9,600 tokens), tool schemas (~8,000 tokens), workspace files (variable, up to 35,600 tokens), conversation history (grows over time), and memory search results—exceed your AI model's maximum context window. The most common trigger is simply a long conversation where history has accumulated beyond the model's capacity. Less commonly, it can be caused by large workspace file injection or known bugs that produce false positives.

How do I increase the OpenClaw context window?

You cannot increase a model's inherent context window size, but you can effectively get more usable context by: (1) switching to a model with a larger window (Gemini 2.0 Flash offers 1M tokens, Claude models offer 200K), (2) reducing workspace file injection via bootstrapMaxChars, (3) disabling memory search to reclaim tokens, and (4) configuring more aggressive compaction with higher reserveTokens values. The combination of these approaches can effectively double or triple your usable context compared to default settings.

What is OpenClaw compaction and does it lose data?

Compaction is OpenClaw's built-in mechanism for summarizing conversation history. When triggered (automatically or via /compact), the system creates a condensed summary of the older parts of your conversation while preserving the most recent messages verbatim. The summary captures key decisions, file locations, and important context but discards verbose intermediate steps. The compacted result is saved to the JSONL session file, so it persists across restarts. You do lose the word-for-word details of earlier exchanges, but the essential information is retained in the summary.

How do I clear OpenClaw memory entirely?

To clear all memory and start completely fresh, use /reset which clears both the conversation history and session-specific memory. For clearing just the conversation while keeping memory intact, use /new. If you want to clear the stored memory database itself, you can delete the memory files in ~/.openclaw/memory/. Note that clearing memory is irreversible—there is no undo for deleted memory entries.

Why does context_length_exceeded happen on a fresh OpenClaw session?

This is typically caused by one of two things: (1) memory search injection loading too much historical context during session initialization (see Issue #5771), or (2) extremely large workspace files that consume most of the context window before any conversation begins. The fix for memory-related overflow is setting memorySearch.enabled: false, and the fix for workspace-related overflow is reducing bootstrapMaxChars to a smaller value like 10,000 characters. Both changes can be made in your openclaw.json configuration file.

![Fix OpenClaw context_length_exceeded: Complete Troubleshooting Guide [2026]](/posts/en/openclaw-context-length-exceeded/img/cover.png)