Gemini 3.1 Pro Preview, Google's latest frontier reasoning model released on February 19, 2026, can be tried for free in Google AI Studio but does not offer a free API tier. The Gemini API charges $2.00 per million input tokens and $12.00 per million output tokens for Gemini 3.1 Pro Preview. The model achieves 77.1% on ARC-AGI-2, more than double its predecessor's score of 31.1%. This guide covers every way to access 3.1 Pro — from free AI Studio testing to paid API setup to cost optimization strategies that can cut your bill by 50% or more.

TL;DR

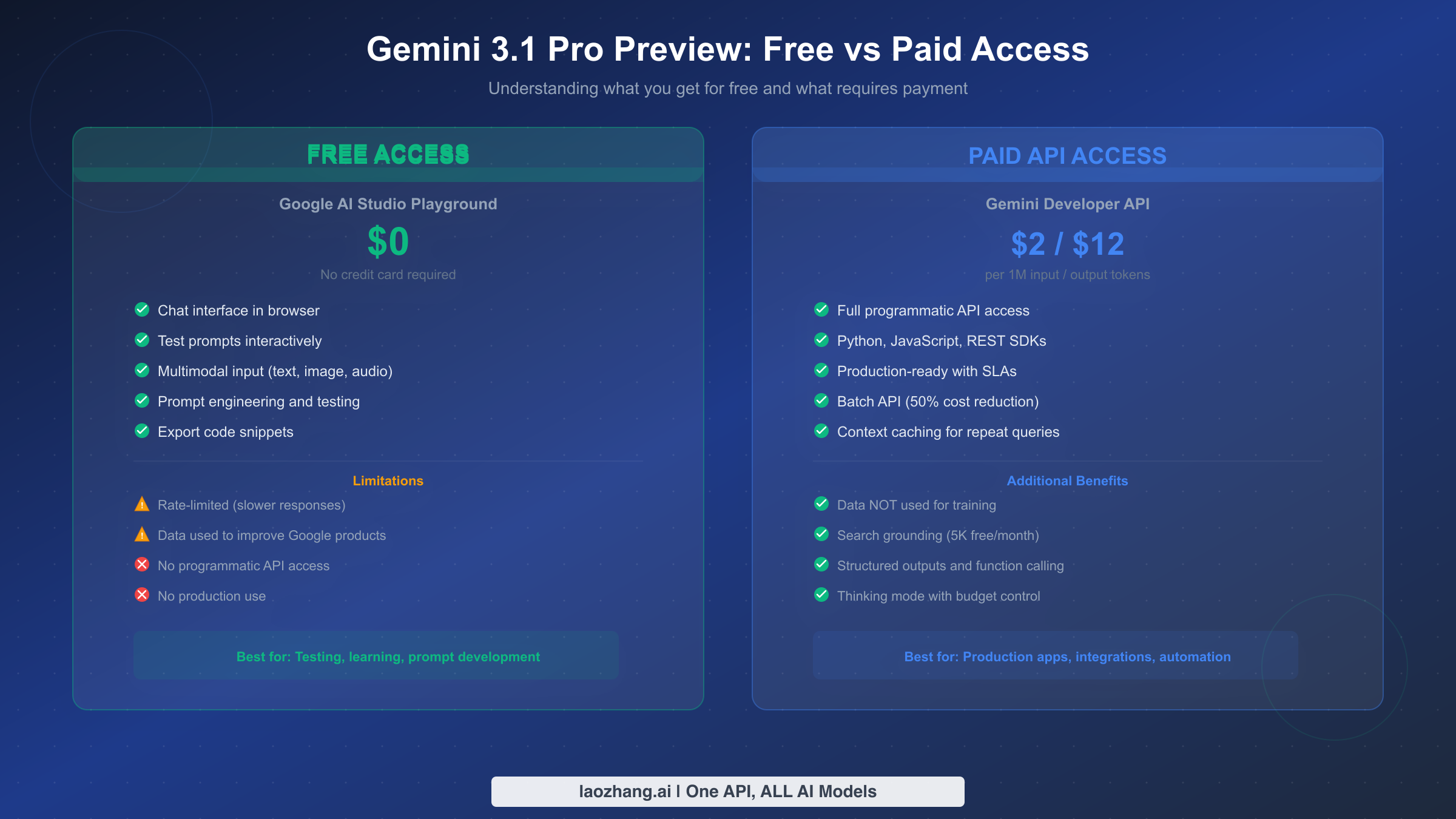

- Free access exists but is limited: You can test Gemini 3.1 Pro Preview for free in Google AI Studio's browser-based playground. No credit card required, but no programmatic API access.

- API pricing: $2.00 per million input tokens, $12.00 per million output tokens (including thinking tokens). No free API tier is available for this model.

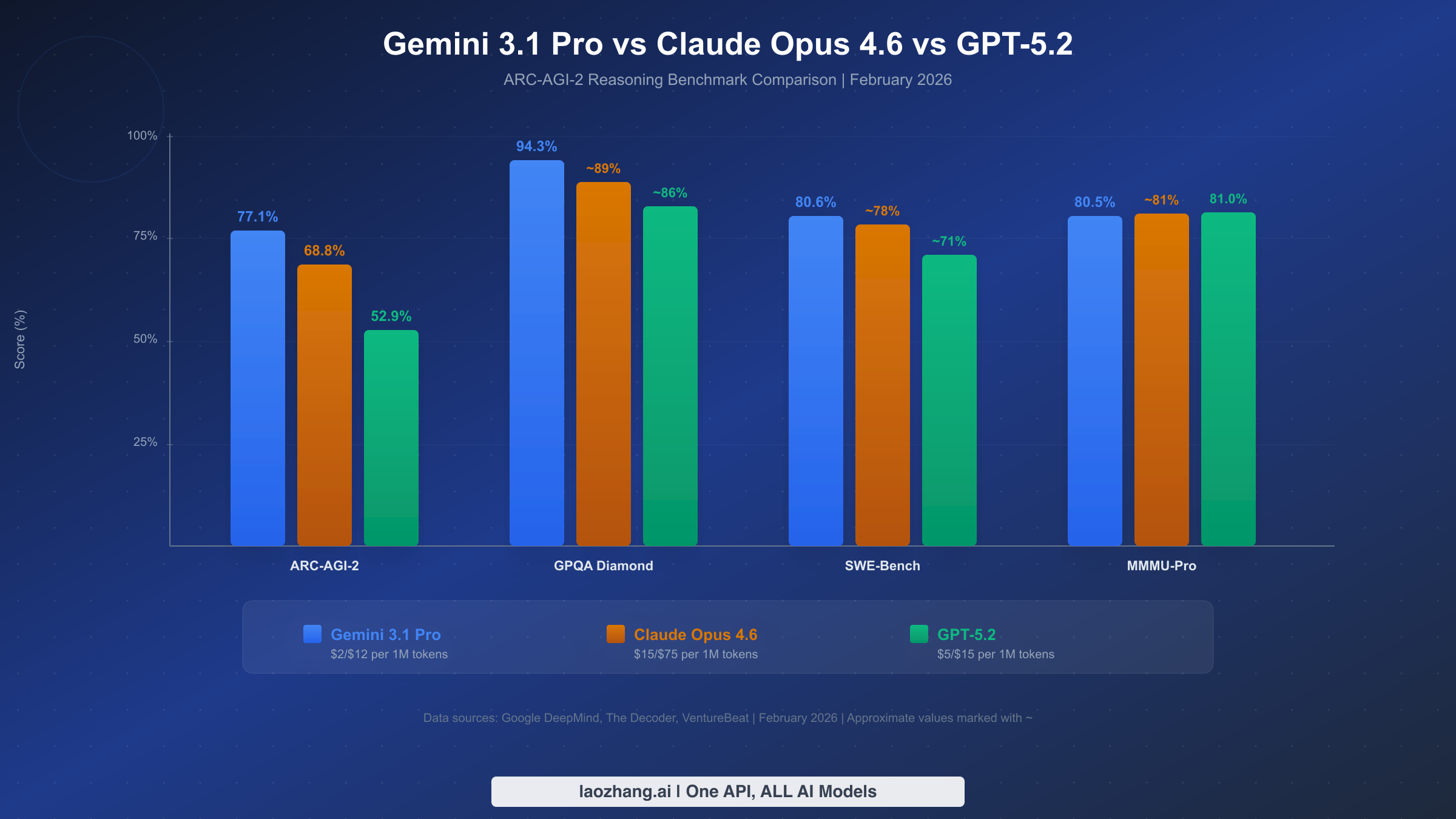

- Performance leader: 77.1% on ARC-AGI-2, beating Claude Opus 4.6 (68.8%) and GPT-5.2 (52.9%). Scores 94.3% on GPQA Diamond and 80.6% on SWE-Bench Verified.

- Cost optimization: Use Batch API for 50% off, context caching for up to 90% savings on repeated queries, and thinking budget control to manage output token costs.

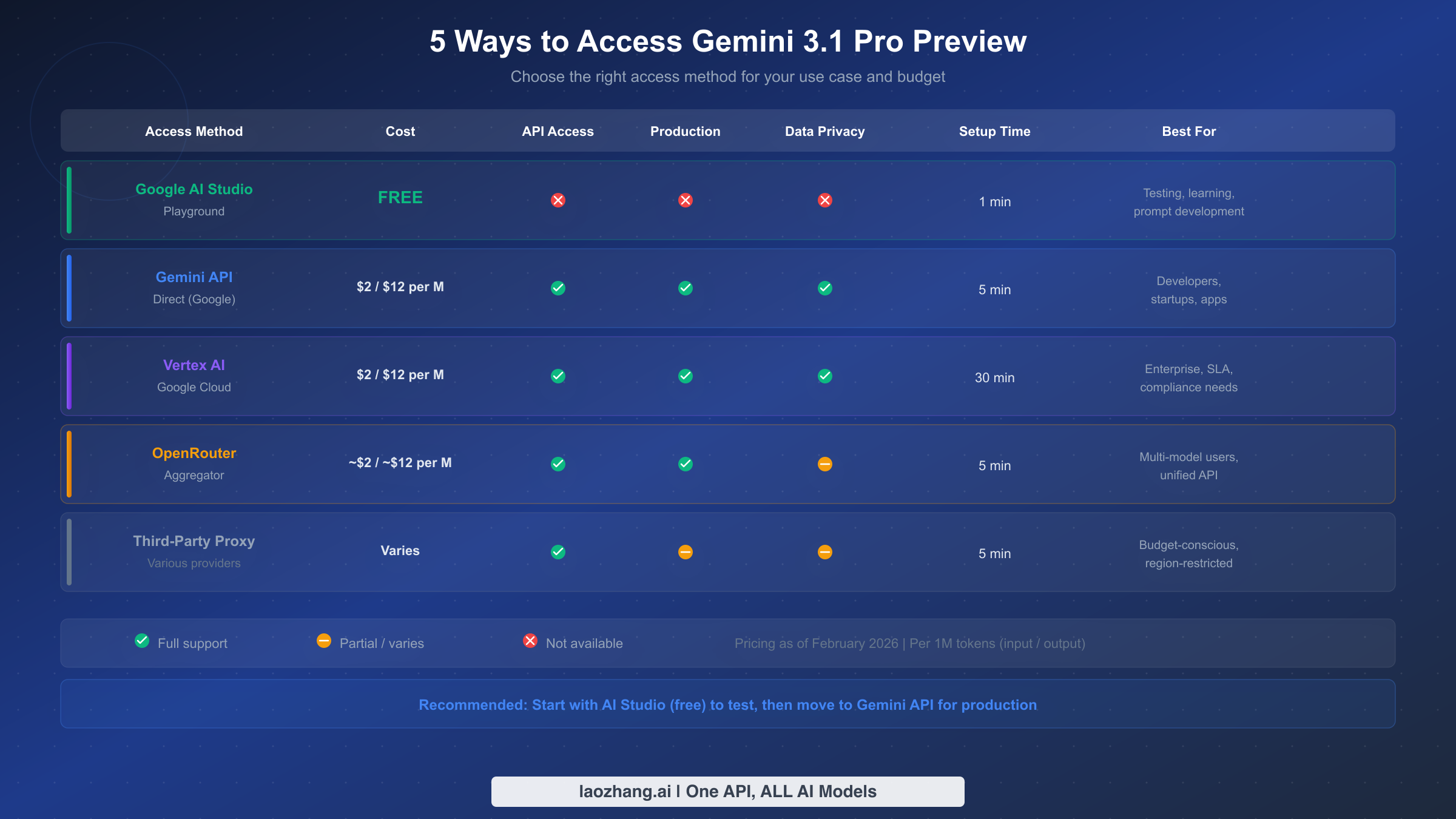

- Multiple access methods: Google AI Studio (free), Gemini API (paid), Vertex AI (enterprise), OpenRouter (aggregator), and third-party proxy services.

What Is Gemini 3.1 Pro Preview and Why Developers Are Excited

Google DeepMind announced Gemini 3.1 Pro Preview on February 19, 2026, positioning it as the most capable reasoning model in the Gemini family. The release generated immediate excitement in the developer community, largely because the model achieved a 77.1% score on the ARC-AGI-2 benchmark — a test specifically designed to measure general reasoning ability in AI systems. To put that number in perspective, Gemini 3 Pro scored just 31.1% on the same benchmark, meaning the 3.1 version more than doubled its predecessor's performance in what Google describes as a single generational leap. This kind of improvement is rare in AI development, where benchmark gains typically come in single-digit percentage points.

The ARC-AGI-2 result matters because it places Gemini 3.1 Pro Preview ahead of both major competitors. Claude Opus 4.6, Anthropic's flagship reasoning model, scores 68.8% on the same test, while OpenAI's GPT-5.2 manages 52.9% (according to The Decoder, February 2026). Beyond reasoning, the model also excels at scientific knowledge with a 94.3% score on GPQA Diamond, and software engineering with an 80.6% result on SWE-Bench Verified (Google DeepMind model card, February 2026). These numbers suggest a model that isn't just theoretically powerful but practically useful for real developer workflows including code generation, debugging, and technical analysis.

What makes Gemini 3.1 Pro Preview particularly interesting from a practical standpoint is its combination of performance and context capacity. The model supports a 1,048,576-token context window — roughly equivalent to processing an entire codebase, a 700-page book, or hours of audio in a single prompt. Output is capped at 65,536 tokens, and the model accepts multimodal inputs including text, images, video, audio, and PDF documents (ai.google.dev, February 2026). For developers who regularly work with large document collections or need to analyze extensive codebases, this context window eliminates the need for complex chunking strategies that other models require. The model also features a "thinking" mode where it reasons step-by-step before generating responses, similar to approaches used by Claude and OpenAI's o-series models, though Google's implementation allows developers to set explicit thinking budgets to control costs.

The release timing of Gemini 3.1 Pro Preview is noteworthy in the broader context of the AI industry. Coming just weeks after OpenAI's GPT-5.2 release and Anthropic's Claude Opus 4.6 update cycle, it represents Google's aggressive push to reclaim technical leadership in frontier model capabilities. The "Preview" designation in the model name indicates that this is not yet the final production release — Google typically uses this label for models that are feature-complete but may receive further optimizations before being designated as stable. For developers, this means the model is fully functional for production use today, but you should monitor Google's model deprecation announcements and be prepared to update your model identifier when the stable version launches. Google's track record suggests the preview-to-stable transition typically takes 2-4 months and usually involves only the model ID change with no API breaking changes.

How to Try Gemini 3.1 Pro Preview for Free in Google AI Studio

The most common question developers ask is whether Gemini 3.1 Pro Preview is free to use. The honest answer requires a distinction that most articles fail to make clearly: you can interact with the model for free through Google AI Studio's web-based playground, but there is no free API tier for programmatic access. Understanding this distinction is critical before you invest time in any integration plan. Google AI Studio at aistudio.google.com provides a chat interface where you can type prompts, upload images, and test the model's capabilities without creating a billing account or entering a credit card. This playground environment is genuinely useful for prompt engineering, comparing model behavior across different configurations, and getting a hands-on feel for the model's reasoning capabilities before committing to paid access.

To start using Google AI Studio for free, visit the website and sign in with any Google account. Select "Gemini 3.1 Pro Preview" from the model dropdown, where it has been listed since February 19, 2026. You can immediately begin testing prompts, adjusting system instructions, and experimenting with temperature and top-p settings. The playground supports multimodal inputs, so you can upload images, documents, and even audio files to test the model's understanding capabilities. The "Get code" button exports your current prompt configuration as a ready-to-use API call in Python, JavaScript, or cURL format, giving you a head start when you transition to the paid API.

However, the free AI Studio access comes with meaningful limitations that you should understand before relying on it. Responses in the free tier are rate-limited and may be slower than paid API calls, especially during periods of high demand following the model's recent release. More significantly, Google's terms of service state that data submitted through the free AI Studio playground may be used to improve Google products, which means you should never input sensitive, proprietary, or confidential information through this channel. There is no programmatic API access through the free tier — you cannot make HTTP calls to the model or integrate it into your applications without switching to the paid Gemini API. For developers who need Gemini API free tier options, note that while older models like Gemini 2.5 Flash still offer rate-limited free API access, the 3.1 Pro Preview model is available only through paid plans. If your goal is production integration, automated workflows, or any use case that requires API calls, you will need to set up billing.

One practical workflow that many developers adopt is starting with AI Studio for prompt engineering and evaluation, then transitioning to the paid API once they have validated their approach. AI Studio lets you save and organize prompt templates, compare responses across different temperature settings, and export working configurations as code — all without spending a cent. This "test free, deploy paid" approach is particularly effective because you can iterate on system prompts and few-shot examples in the playground environment, reducing the number of paid API calls needed during development. The "Get code" feature in AI Studio generates complete Python or JavaScript snippets that include your exact prompt configuration, making the transition from free testing to paid integration nearly seamless.

Setting Up the Gemini 3.1 Pro Preview API — Developer Quick Start

Getting started with the paid Gemini 3.1 Pro Preview API requires three things: a Google Cloud or AI Studio API key, the Google Generative AI SDK installed in your development environment, and a billing account connected to your Google Cloud project. The setup process is straightforward and typically takes under five minutes for developers familiar with API integrations. Start by visiting aistudio.google.com, clicking "Get API key" in the top navigation, and either creating a new API key or selecting an existing Google Cloud project. Make sure your billing is active — unlike some Gemini models that offer free tiers, Gemini 3.1 Pro Preview requires billing from the first API call.

For Python developers, install the official SDK with pip install google-genai and set up your first API call with the following code. This example demonstrates a basic text generation request using the Gemini 3.1 Pro Preview model with the thinking feature enabled:

```python
from google import genai

# Create a client with your API key (billing must be enabled for this model).
client = genai.Client(api_key="YOUR_API_KEY")

response = client.models.generate_content(
    model="gemini-3.1-pro-preview",
    contents="Explain the key differences between TCP and UDP protocols, including when to use each one.",
    config={
        # Cap the tokens the model may spend on internal reasoning.
        "thinking_config": {"thinking_budget": 2048}
    },
)

print(response.text)
```

For JavaScript and Node.js developers, install the SDK with npm install @google/genai and use this equivalent setup. The JavaScript SDK follows a similar pattern, making it easy to switch between languages if you work in a polyglot environment:

```javascript
import { GoogleGenAI } from "@google/genai";

// Create a client with your API key (billing must be enabled for this model).
const ai = new GoogleGenAI({ apiKey: "YOUR_API_KEY" });

async function main() {
  const response = await ai.models.generateContent({
    model: "gemini-3.1-pro-preview",
    contents: "Explain the key differences between TCP and UDP protocols, including when to use each one.",
    config: {
      // Cap the tokens the model may spend on internal reasoning.
      thinkingConfig: { thinkingBudget: 2048 },
    },
  });
  console.log(response.text);
}

main();
```

When working with the API, there are several practical details worth knowing about. The thinking_budget parameter controls how many tokens the model can use for internal reasoning before generating its response — setting this value too low may reduce reasoning quality, while setting it too high increases your output token costs since thinking tokens are billed at the same rate as output tokens ($12.00 per million, ai.google.dev pricing page, February 2026). A good starting point is 2,048 tokens for straightforward questions and 8,192 or higher for complex reasoning tasks. You should also be aware of rate limits and quotas for the 3.1 Pro Preview model, which may differ from other Gemini models due to the model's preview status. For error handling, wrap your API calls in try-catch blocks and implement exponential backoff for 429 (rate limit) and 503 (service unavailable) errors, which are common during high-traffic periods immediately after a model launch.
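The retry advice above can be sketched as a small helper. This is a minimal illustration, not the SDK's own retry mechanism: `RetryableError` is a hypothetical stand-in for whatever exception your client raises on a 429 or 503, and `call_with_backoff` and its parameters are names invented for this example.

```python
import random
import time


class RetryableError(Exception):
    """Hypothetical stand-in for an HTTP 429 or 503 response."""

    def __init__(self, status):
        super().__init__(f"retryable status {status}")
        self.status = status


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, sleep=time.sleep):
    """Call fn(), retrying on RetryableError with exponential backoff and jitter."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RetryableError:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            # Base delays of 1s, 2s, 4s, ... plus up to 100% random jitter.
            delay = base_delay * (2 ** attempt)
            sleep(delay + random.uniform(0, delay))
```

In practice you would wrap the `generate_content` call in a function that catches the SDK's error type, checks the status code, and re-raises `RetryableError` only for 429 and 503. The jitter keeps many clients from retrying in lockstep after a shared outage.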

Beyond basic text generation, the API supports structured output through JSON mode, which is essential for building reliable applications. By specifying a JSON schema in your request configuration, you can force the model to return responses in a predictable format — making it straightforward to parse outputs programmatically without fragile regex or string manipulation. The API also supports function calling, where you define a set of tools (functions) that the model can invoke during its response generation. This enables you to build agents that can search databases, call external APIs, or perform calculations as part of their reasoning process. For developers building production applications, these features transform Gemini 3.1 Pro Preview from a simple text generator into a programmable reasoning engine that can be integrated into complex workflows. The gemini-3.1-pro-preview-customtools variant is specifically optimized for function calling scenarios and may provide better tool selection accuracy compared to the standard model ID.
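As a rough sketch of the JSON mode described above, the configuration below asks for a JSON reply that matches a small schema, and a helper checks the reply before your code relies on it. The `response_mime_type` and `response_schema` keys are believed to match the google-genai SDK's config fields, but treat the exact key names and the schema type casing as assumptions to verify against the SDK reference. The ticket fields and the `parse_ticket` helper are hypothetical.

```python
import json

# Schema for the reply we want; the field names are hypothetical.
TICKET_SCHEMA = {
    "type": "OBJECT",
    "properties": {
        "category": {"type": "STRING"},
        "urgent": {"type": "BOOLEAN"},
    },
    "required": ["category", "urgent"],
}

# Intended to be passed as config= to generate_content, in the same
# position as the thinking config in the quick-start example.
JSON_CONFIG = {
    "response_mime_type": "application/json",
    "response_schema": TICKET_SCHEMA,
}


def parse_ticket(reply_text):
    """Parse a JSON reply and check that the required fields are present."""
    data = json.loads(reply_text)
    missing = [key for key in TICKET_SCHEMA["required"] if key not in data]
    if missing:
        raise ValueError(f"reply is missing fields: {missing}")
    return data
```

Validating the reply on your side is still worthwhile even with a schema in the request, since a truncated response (for example, one that hits the output token cap) may not parse.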

Gemini 3.1 Pro vs Claude Opus 4.6 vs GPT-5.2 — Performance and Value

Choosing between the three leading frontier models in February 2026 requires looking beyond individual benchmark scores and considering the full picture: performance across different task types, pricing structure, and price-to-performance ratio. Gemini 3.1 Pro Preview leads on general reasoning (77.1% ARC-AGI-2), scientific knowledge (94.3% GPQA Diamond), and software engineering (80.6% SWE-Bench Verified). Claude Opus 4.6 leads on Humanity's Last Exam at 53.1% versus Gemini's 44.4%, suggesting stronger performance on extremely difficult multi-domain questions. GPT-5.2 leads on MMMU-Pro (81.0% vs Gemini's 80.5%), indicating a slight edge in multimodal understanding tasks. The differences on some benchmarks are narrow enough that real-world performance may vary depending on your specific use case and prompt engineering approach.

The pricing comparison reveals a much clearer picture of where value lies. Gemini 3.1 Pro Preview charges $2.00 per million input tokens and $12.00 per million output tokens (ai.google.dev, February 2026). Claude Opus 4.6 costs $15.00 per million input tokens and $75.00 per million output tokens, making it 7.5 times more expensive on input and 6.25 times more expensive on output. GPT-5.2 sits in the middle at $5.00 per million input tokens and $15.00 per million output tokens. When you calculate a price-per-performance metric by dividing the output price (the dominant cost) by the ARC-AGI-2 score, Gemini 3.1 Pro comes to roughly $0.16 per percentage point ($12.00 / 77.1), while Claude Opus 4.6 costs around $1.09 per percentage point ($75.00 / 68.8), about 7 times higher for lower benchmark performance on reasoning tasks. For teams running thousands of API calls daily, this cost differential translates to meaningful savings without sacrificing quality.

| Feature | Gemini 3.1 Pro Preview | Claude Opus 4.6 | GPT-5.2 |
|---|---|---|---|
| ARC-AGI-2 | 77.1% | 68.8% | 52.9% |
| GPQA Diamond | 94.3% | ~89% | ~86% |
| SWE-Bench Verified | 80.6% | ~78% | ~71% |
| MMMU-Pro | 80.5% | ~81% | 81.0% |
| Input Cost / 1M | $2.00 | $15.00 | $5.00 |
| Output Cost / 1M | $12.00 | $75.00 | $15.00 |
| Context Window | 1M tokens | 200K tokens | 128K tokens |
| Max Output | 65K tokens | 32K tokens | 16K tokens |
| Batch API | 50% off | Not available | 50% off |

That said, benchmarks do not tell the whole story. Claude Opus 4.6 remains highly regarded for its instruction-following precision, nuanced safety behavior, and consistency in agentic coding workflows. GPT-5.2 benefits from OpenAI's mature ecosystem with extensive third-party tool integrations and a large developer community. Your choice should depend on your specific requirements: if raw reasoning performance and cost efficiency matter most, Gemini 3.1 Pro Preview offers the best value in February 2026. If you need specific qualities like Claude's reliability in complex agentic tasks or GPT's ecosystem, the premium pricing may be justified. Many developers are adopting a multi-model strategy, using Gemini for cost-sensitive bulk processing and a competitor model for specialized tasks. To understand how Gemini 3 models compare across the full family including Flash variants, see our dedicated comparison guide.

How to Cut Your Gemini 3.1 Pro API Costs by 50% or More

While Gemini 3.1 Pro Preview is already the most affordable frontier reasoning model, you can reduce costs dramatically through three official optimization strategies: the Batch API, context caching, and thinking budget management. These techniques can be combined, and a well-optimized pipeline can reduce effective costs by 60-80% compared to standard pricing — making 3.1 Pro Preview competitive with mid-tier models like Gemini Flash on a per-task basis.

The Batch API is the simplest optimization to implement. Instead of sending requests synchronously and getting immediate responses, you batch multiple prompts into a single asynchronous job and receive results within 24 hours. Google rewards this patience with a 50% discount on all token costs — meaning your effective pricing drops to $1.00 per million input tokens and $6.00 per million output tokens (ai.google.dev, February 2026). The Batch API is ideal for any workload where you don't need real-time responses: document analysis pipelines, bulk content generation, dataset labeling, code review across large repositories, and overnight processing jobs. The implementation requires minimal code changes — you prepare a JSONL file with your requests, submit it to the batch endpoint, and poll for completion. For many teams, shifting 40-60% of their workload to batch processing cuts their monthly bill nearly in half with no quality degradation.
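The "prepare a JSONL file" step might look like the sketch below. The per-line shape (a `key` plus a `request` holding `contents`) reflects one reading of the public Batch API documentation and should be treated as an assumption to check before use. The helper names `to_batch_jsonl` and `batch_cost` are invented for this example, and the default rates are the batch prices quoted above.

```python
import json


def to_batch_jsonl(prompts):
    """Return JSONL text with one keyed request per prompt."""
    lines = []
    for i, prompt in enumerate(prompts, start=1):
        record = {
            "key": f"request-{i}",  # lets you match results back to inputs
            "request": {"contents": [{"parts": [{"text": prompt}]}]},
        }
        lines.append(json.dumps(record))
    return "\n".join(lines) + "\n"


def batch_cost(input_tokens, output_tokens, in_rate=1.00, out_rate=6.00):
    """Dollar cost at the batch rates quoted above (per million tokens)."""
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
```

Writing the returned text to a file gives you the upload artifact. Submitting the job and polling for completion are left out here because those calls depend on the SDK version you use.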

Context caching represents an even more significant opportunity for cost reduction in repetitive workloads. When you send the same large system prompt, document, or reference material across multiple API calls, context caching lets you store that content once and reference it in subsequent requests. Cached input tokens cost $0.20 per million (versus $2.00 standard), a 90% reduction (ai.google.dev pricing, February 2026). The cache has a storage cost of $4.50 per million tokens per hour, so the economics work best when you're making frequent calls against the same context within a short time window. For example, if you're building a customer support bot that references a 100-page product manual of about 100,000 tokens, caching that manual and making 1,000 queries against it would cost roughly $0.02 per query for the cached context versus $0.20 without caching, a 10x savings on the input side before storage fees (about $0.45 per hour for a cache of that size).
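To see when caching actually pays off once storage is included, the sketch below compares both paths using the rates quoted above. It assumes the manual is about 100,000 tokens, which is what the $0.20 uncached figure implies at $2.00 per million. The function names are invented for this example.

```python
def uncached_cost(context_tokens, queries, in_rate=2.00):
    """Input cost of resending the full context with every query."""
    return context_tokens * queries * in_rate / 1_000_000


def cached_cost(context_tokens, queries, hours, cached_rate=0.20, storage_rate=4.50):
    """Cached-read cost plus hourly storage for keeping the cache alive."""
    reads = context_tokens * queries * cached_rate / 1_000_000
    storage = context_tokens * hours * storage_rate / 1_000_000
    return reads + storage


def break_even_queries_per_hour(in_rate=2.00, cached_rate=0.20, storage_rate=4.50):
    """Query rate above which caching is cheaper, whatever the context size."""
    return storage_rate / (in_rate - cached_rate)
```

With these rates, 1,000 queries spread over 24 hours against a 100,000-token cache cost about $30.80 ($20.00 in cached reads plus $10.80 in storage) versus $200.00 uncached. The break-even works out to 2.5 queries per hour: below that rate, storage fees outweigh the per-query saving.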

The third and most often overlooked optimization is thinking budget management. Since Gemini 3.1 Pro Preview's output pricing ($12.00 per million tokens) includes thinking tokens, the amount of internal reasoning the model performs directly impacts your bill. By default, the model may use thousands of thinking tokens for even simple questions. Setting a thinking_budget parameter allows you to control this: use 1,024-2,048 tokens for straightforward tasks like formatting or translation, 4,096-8,192 for moderate reasoning, and 16,384+ only for complex multi-step problems. In practice, most queries don't benefit from more than 4,096 thinking tokens, and reducing the default can cut output costs by 30-50% on typical workloads. You can also combine all three strategies: batch processing with cached context and controlled thinking budgets can bring effective per-query costs down by 70-80%, making Gemini 3.1 Pro Preview remarkably cost-effective for large-scale applications.
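One way to apply these tiers is to pick the budget per request from a coarse task label. The sketch below takes one value from each range above; the labels `simple`, `moderate`, and `complex` and both helper names are hypothetical, and the cost bound assumes the budget acts as a cap on thinking tokens.

```python
# One budget per tier, taken from the ranges above; the labels are hypothetical.
THINKING_BUDGETS = {"simple": 2048, "moderate": 8192, "complex": 16384}


def thinking_config(task_kind):
    """Build the config dict for a task kind, defaulting to the moderate tier."""
    budget = THINKING_BUDGETS.get(task_kind, THINKING_BUDGETS["moderate"])
    return {"thinking_config": {"thinking_budget": budget}}


def max_thinking_cost(budget_tokens, calls, out_rate=12.00):
    """Upper bound on thinking spend if every call uses its full budget."""
    return budget_tokens * calls * out_rate / 1_000_000
```

For 10,000 calls, the worst-case thinking spend is about $245.76 at a 2,048-token budget versus $1,966.08 at 16,384, which is why routing simple tasks to the lower tier matters.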

To put these optimizations into a concrete example, consider a company processing 100,000 customer support tickets per month through Gemini 3.1 Pro Preview. At standard pricing with an average of 800 input tokens and 2,000 output tokens (including thinking) per ticket, the monthly cost would be approximately $160.00 for input plus $2,400.00 for output, totaling $2,560.00. Applying batch processing to the 60% of tickets that are not urgent saves $768.00 (half of 60% of $2,560.00). Adding context caching for the shared product documentation (used across all tickets) saves another $120.00 on input costs. Reducing the thinking budget from the default to 2,048 tokens for simple classification tasks (40% of tickets) saves approximately $480.00 on output costs. The combined optimized cost drops to roughly $1,192.00, a 53% reduction from the original estimate. For teams scaling to millions of API calls, these optimizations transform Gemini 3.1 Pro Preview from "expensive frontier model" to "cost-competitive with mid-tier alternatives."
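The arithmetic in this example can be reproduced in a few lines. The caching and thinking savings are taken directly from the figures quoted in the text rather than derived, and the variable names are illustrative only.

```python
TICKETS = 100_000
IN_TOKENS, OUT_TOKENS = 800, 2_000   # average tokens per ticket
IN_RATE, OUT_RATE = 2.00, 12.00      # dollars per million tokens

input_cost = TICKETS * IN_TOKENS * IN_RATE / 1_000_000     # 160.0
output_cost = TICKETS * OUT_TOKENS * OUT_RATE / 1_000_000  # 2400.0
baseline = input_cost + output_cost                        # 2560.0

batch_saving = 0.60 * 0.50 * baseline  # 60% of tickets billed at half price
cache_saving = 120.00                  # figure quoted in the text
thinking_saving = 480.00               # figure quoted in the text

optimized = baseline - batch_saving - cache_saving - thinking_saving
reduction = 1 - optimized / baseline

print(f"baseline ${baseline:,.2f}, optimized ${optimized:,.2f}, saved {reduction:.0%}")
```

Note that this sums the three savings as if they were independent. Because batched tickets already pay half price, caching and thinking reductions on those same tickets save somewhat less in practice, so treat the 53% as an approximate upper estimate.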

Alternative Ways to Access Gemini 3.1 Pro Preview API

Beyond Google's direct API, several alternative pathways let you access Gemini 3.1 Pro Preview, each with different trade-offs in pricing, privacy, setup complexity, and feature support. Your choice depends on your specific situation — whether you need multi-model flexibility, enterprise compliance, regional access, or simplified billing. Understanding these options ensures you pick the access method that best fits your technical requirements and organizational constraints.

Google AI Studio and Gemini API (Direct) is the recommended starting point for most individual developers and small teams. You get the official SDK, full feature support including thinking mode and context caching, official rate limits, and a guarantee that your data is not used for training when accessing through the paid API. Setup takes under 5 minutes and you pay exactly the published pricing with no markup. The main limitation is that you're locked into a single provider — if you later want to compare Gemini's output with Claude or GPT, you need separate API integrations for each.

Vertex AI through Google Cloud is the enterprise-grade path. It offers the same model at the same base pricing but adds enterprise features: SLA guarantees, VPC-SC data isolation, IAM-based access controls, CMEK encryption, and SOC 2 / ISO 27001 compliance certifications. The trade-off is setup complexity — you need a Google Cloud project, proper IAM configuration, and familiarity with the Vertex AI SDK. For organizations that require compliance documentation, data residency guarantees, or integration with other Google Cloud services (BigQuery, Cloud Storage, Cloud Functions), Vertex AI is the appropriate choice. Setup typically takes 30 minutes to an hour for someone new to Google Cloud.

OpenRouter is a multi-model aggregator that provides a unified API across dozens of AI models including Gemini 3.1 Pro Preview. The pricing is typically identical or very close to Google's direct pricing (approximately $2/$12 per million tokens), with OpenRouter taking a small margin or passing through at cost. The primary advantage is developer experience: a single API key, a single SDK, and consistent request/response formatting across all supported models. This makes it trivial to switch between Gemini, Claude, and GPT in your application code by simply changing the model parameter. For teams evaluating multiple models or building applications that need fallback providers, OpenRouter eliminates the need to maintain multiple SDK integrations.

Multi-model aggregator services like laozhang.ai provide another option, particularly for developers who need access to multiple AI models through a single unified API endpoint. These services can offer competitive pricing and simplified billing while supporting dozens of models including Gemini 3.1 Pro Preview. For developers looking for affordable Gemini image API options alongside text models, aggregator platforms often bundle both capabilities. The key consideration with any third-party service is to verify their data handling policies, uptime guarantees, and whether they pass through the full model capabilities (thinking mode, context caching, streaming) or operate with reduced feature sets. For detailed documentation on available models and integration examples, check the API documentation.

When choosing between these access methods, the decision usually comes down to three factors: how many models you need, what compliance requirements you have, and how much operational overhead you're willing to manage. For a solo developer building a side project, Google's direct API is the simplest choice — one API key, official documentation, and straightforward billing. For a startup evaluating multiple models before committing to one, an aggregator like OpenRouter saves significant integration time by providing a single API surface for all providers. For an enterprise with regulatory requirements, Vertex AI is the only option that provides the compliance certifications and data isolation guarantees that legal teams require. The good news is that the Gemini 3.1 Pro Preview model itself is identical across all access methods — you get the same 77.1% ARC-AGI-2 performance regardless of whether you access it through Google directly, Vertex AI, or a third-party aggregator.

Gemini 3.1 Pro Preview API Pricing — Complete Breakdown

Understanding the full pricing structure of Gemini 3.1 Pro Preview requires looking beyond the headline $2/$12 numbers. The model uses a tiered pricing system based on context length, with different rates for standard versus cached tokens, and important nuances around thinking tokens that can significantly impact your actual costs. All pricing data below was verified against the official Google AI pricing page on February 20, 2026.

| Pricing Category | Standard (<=200k context) | Long Context (>200k) |
|---|---|---|
| Input tokens | $2.00 / 1M | $4.00 / 1M |
| Output tokens (incl. thinking) | $12.00 / 1M | $18.00 / 1M |
| Cached input tokens | $0.20 / 1M | $0.40 / 1M |
| Cache storage | $4.50 / 1M tokens / hr | $4.50 / 1M tokens / hr |
| Batch API input | $1.00 / 1M | $2.00 / 1M |
| Batch API output | $6.00 / 1M | $9.00 / 1M |
| Search grounding | 5,000 free/month, then $14/1,000 | Same |

The tiered context pricing means that prompts exceeding 200,000 tokens are billed at higher rates: input doubles to $4.00 per million and output rises by 50% to $18.00 per million. This threshold is important for planning: if your use case involves processing very large documents or codebases that push past 200k tokens, your effective costs will be higher than the headline numbers suggest. Most typical API usage stays below 200k tokens per request, but developers working with Gemini's full 1M context window should budget accordingly. One practical strategy is to use context caching aggressively when working with large documents. Even with the storage cost of $4.50 per million tokens per hour, cached tokens at $0.20-$0.40 per million are dramatically cheaper than reprocessing the same content at $2.00-$4.00 per million with each request.
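A per-request estimator makes the tier boundary concrete. This sketch uses the rates from the table above and assumes the tier is chosen by prompt size and then applies to both the input and output of that request. `request_cost` and the `RATES` layout are names invented for this example.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # prompt tokens

# (input, output) dollars per million tokens, from the table above.
RATES = {
    "standard": {"short": (2.00, 12.00), "long": (4.00, 18.00)},
    "batch": {"short": (1.00, 6.00), "long": (2.00, 9.00)},
}


def request_cost(input_tokens, output_tokens, mode="standard"):
    """Cost of one request, choosing the tier from the prompt size."""
    tier = "long" if input_tokens > LONG_CONTEXT_THRESHOLD else "short"
    in_rate, out_rate = RATES[mode][tier]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000
```

Under that assumption, a 200,000-token prompt with 1,000 output tokens costs about $0.41, while one token more pushes the same request to about $0.82. Trimming a prompt to stay under the threshold can therefore roughly halve its cost.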

To illustrate real-world costs, consider three common usage scenarios that span different scales and use cases. A chatbot handling 10,000 conversations per day with an average of 500 input tokens and 1,000 output tokens (including thinking) per conversation would cost approximately $10.00 for input ($2.00 x 5M tokens) plus $120.00 for output ($12.00 x 10M tokens), totaling about $130.00 per day or roughly $3,900 per month. If every conversation could tolerate asynchronous processing, the Batch API would cut this to approximately $1,950 per month; real-time chat traffic will see a smaller saving. A code review pipeline processing 500 files per day with 2,000 input tokens and 3,000 output tokens per file would run about $2.00 for input ($2.00 x 1M tokens) plus $18.00 for output ($12.00 x 1.5M tokens), totaling roughly $20.00 per day or $600 per month, well within budget for most development teams. A document analysis system processing 50 large PDFs (50,000 tokens each) per day with cached context would cost under $50 per month when combining context caching with batch processing, which is orders of magnitude cheaper than manual review.

One important detail that many pricing comparisons overlook is the role of search grounding in Gemini 3.1 Pro Preview. Google provides 5,000 grounding requests per month for free, after which additional requests cost $14.00 per 1,000 requests (ai.google.dev, February 2026). Search grounding allows the model to access real-time web information when generating responses, which is valuable for applications that need up-to-date data. If your use case involves answering questions about current events, recent product releases, or live pricing data, search grounding adds significant value — but you should budget for the costs if you expect to exceed the free 5,000 monthly requests. For most development-phase usage and many production applications, the free allocation is sufficient.
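Budgeting for grounding is a simple threshold calculation. The sketch below uses the free allocation and overage price quoted above; the function name is invented for this example.

```python
def grounding_cost(requests_per_month, free_quota=5_000, price_per_1000=14.00):
    """Monthly search-grounding cost after the free allocation is used up."""
    billable = max(0, requests_per_month - free_quota)
    return billable * price_per_1000 / 1_000
```

For instance, 25,000 grounded requests in a month leave 20,000 billable requests, or $280.00, while anything up to 5,000 costs nothing.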

FAQ — Common Questions About Gemini 3.1 Pro Preview API

Is Gemini 3.1 Pro Preview API free?

No, the Gemini 3.1 Pro Preview API does not offer a free tier. Unlike some other Gemini models (such as Gemini 2.5 Flash which has a rate-limited free tier), 3.1 Pro Preview requires paid billing from the first API call. However, you can test the model for free through Google AI Studio's browser-based playground at aistudio.google.com — no credit card required. The playground lets you experiment with prompts, upload files, and evaluate the model's capabilities before committing to the paid API.

How much does Gemini 3.1 Pro Preview cost per API call?

The cost depends on your token usage. At the standard pricing of $2.00 per million input tokens and $12.00 per million output tokens (including thinking tokens), a typical API call with 500 input tokens and 1,500 output tokens would cost approximately $0.019 — less than two cents. You can reduce this further with the Batch API (50% off), context caching (90% off cached input tokens), and thinking budget control. Most individual developers spend $5-50 per month during development and testing phases.

What is the difference between Gemini 3 Pro and 3.1 Pro Preview?

Gemini 3.1 Pro Preview represents a major leap over Gemini 3 Pro, scoring 77.1% on ARC-AGI-2 compared to 3 Pro's 31.1% — more than doubling its reasoning performance. The 3.1 version also adds improved thinking capabilities with budget control, enhanced code generation (80.6% SWE-Bench vs previous scores), and better performance across scientific and mathematical benchmarks. Both models share the same 1M token context window and multimodal input support, but 3.1 Pro Preview's reasoning improvements make it substantially more capable for complex analytical tasks.

Can I use the Gemini CLI to access Gemini 3.1 Pro Preview?

Yes, the Gemini CLI supports Gemini 3.1 Pro Preview. You can install it and use the model directly from your terminal for interactive coding assistance, file analysis, and command-line workflows. The CLI requires a valid API key with billing enabled and uses the same pricing as the standard API. This is particularly useful for developers who want to integrate AI assistance into their shell workflow without building a custom application.

How does Gemini 3.1 Pro compare to Claude Opus 4.6 for coding tasks?

On the SWE-Bench Verified benchmark (a standard measure of real-world software engineering ability), Gemini 3.1 Pro Preview scores 80.6% compared to Claude Opus 4.6's approximately 78% (The Decoder, February 2026). However, the practical difference is nuanced: Claude Opus 4.6 is widely regarded for its consistency in agentic coding workflows and instruction-following precision, particularly in scenarios where the model needs to follow complex multi-step instructions across long conversations. The most significant difference is pricing: Gemini costs $2/$12 per million tokens versus Claude's $15/$75, making Gemini roughly 6 to 7 times cheaper per token for coding tasks. Many developers are adopting a hybrid approach in February 2026. They use Gemini 3.1 Pro Preview for high-volume code analysis, automated test generation, and bulk refactoring where cost efficiency matters, and reserve Claude Opus 4.6 for complex agentic coding tasks where instruction-following precision and consistency across extended interactions are critical.