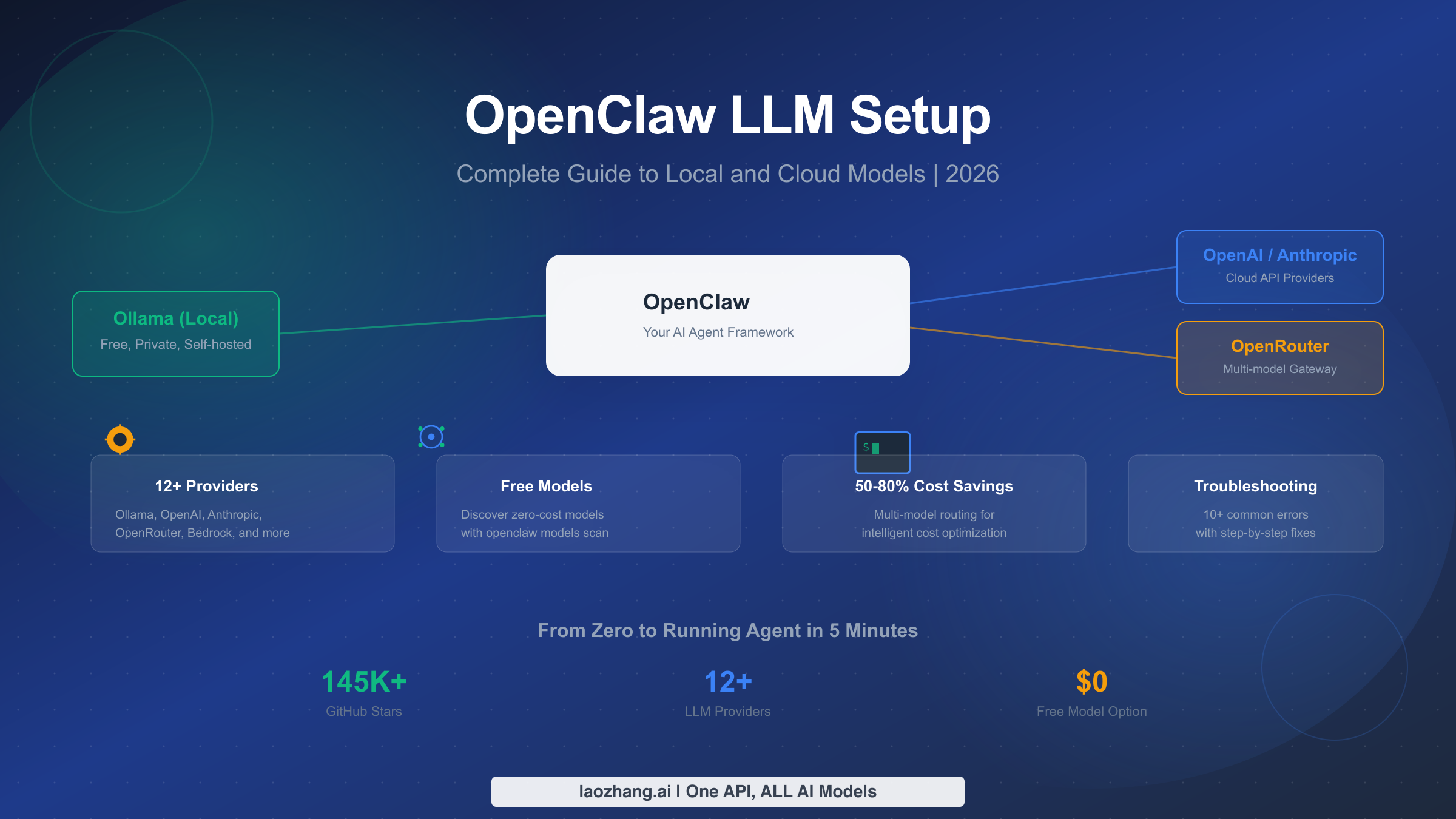

OpenClaw supports 12+ LLM providers including Ollama for local privacy, OpenAI and Anthropic for peak performance, and OpenRouter for multi-model access. Run openclaw onboard to launch the interactive setup wizard, pick your provider, and your AI agent is ready in under five minutes. This guide covers every provider path, helps you match models to your hardware and budget, reveals how to use OpenClaw completely free, and provides step-by-step fixes for the most common setup errors you will encounter.

What Is OpenClaw and Why Your LLM Choice Matters

OpenClaw is an open-source AI agent framework with over 145,000 GitHub stars (February 2026) that turns large language models into autonomous coding assistants, DevOps helpers, and general-purpose agents. Created by Peter Steinberger and formerly known as Clawdbot and then Moltbot, the project has grown into one of the most actively maintained agent frameworks in the ecosystem. Unlike simpler chat wrappers, OpenClaw gives the LLM the ability to execute shell commands, edit files, browse the web, and orchestrate complex multi-step workflows, so the model you choose directly shapes what your agent can accomplish and how much it costs to run.

The LLM provider you configure during setup is not just a technical checkbox. It determines three things that will define your daily experience with OpenClaw. First, it sets the intelligence ceiling of your agent: a small local model like Qwen2.5 7B can handle straightforward file edits, but it will struggle with multi-file refactors that Claude Sonnet 4.5 or GPT-5.2 handle far more reliably. Second, it controls your operating costs: cloud APIs charge per token, and an active OpenClaw session can consume millions of tokens per day if you are running complex agentic loops. Third, it determines data privacy: every prompt you send to a cloud provider leaves your machine, which matters if you work with proprietary code or sensitive configurations.

OpenClaw currently supports 12 official providers: Ollama, OpenAI, Anthropic, OpenRouter, Amazon Bedrock, Vercel AI Gateway, Moonshot AI, MiniMax, OpenCode Zen, GLM Models, Z.AI, and Synthetic. Each provider has different strengths. GLM Models are described in the official documentation as "a bit better for coding and tool calling," while MiniMax is noted as "better for writing and vibes" (docs.openclaw.ai, February 2026). This diversity is a strength — but it also means you need a clear decision framework before running openclaw onboard. The rest of this guide provides exactly that framework, starting with how to choose the right provider, then walking through setup for every major option, and finishing with cost optimization and troubleshooting.

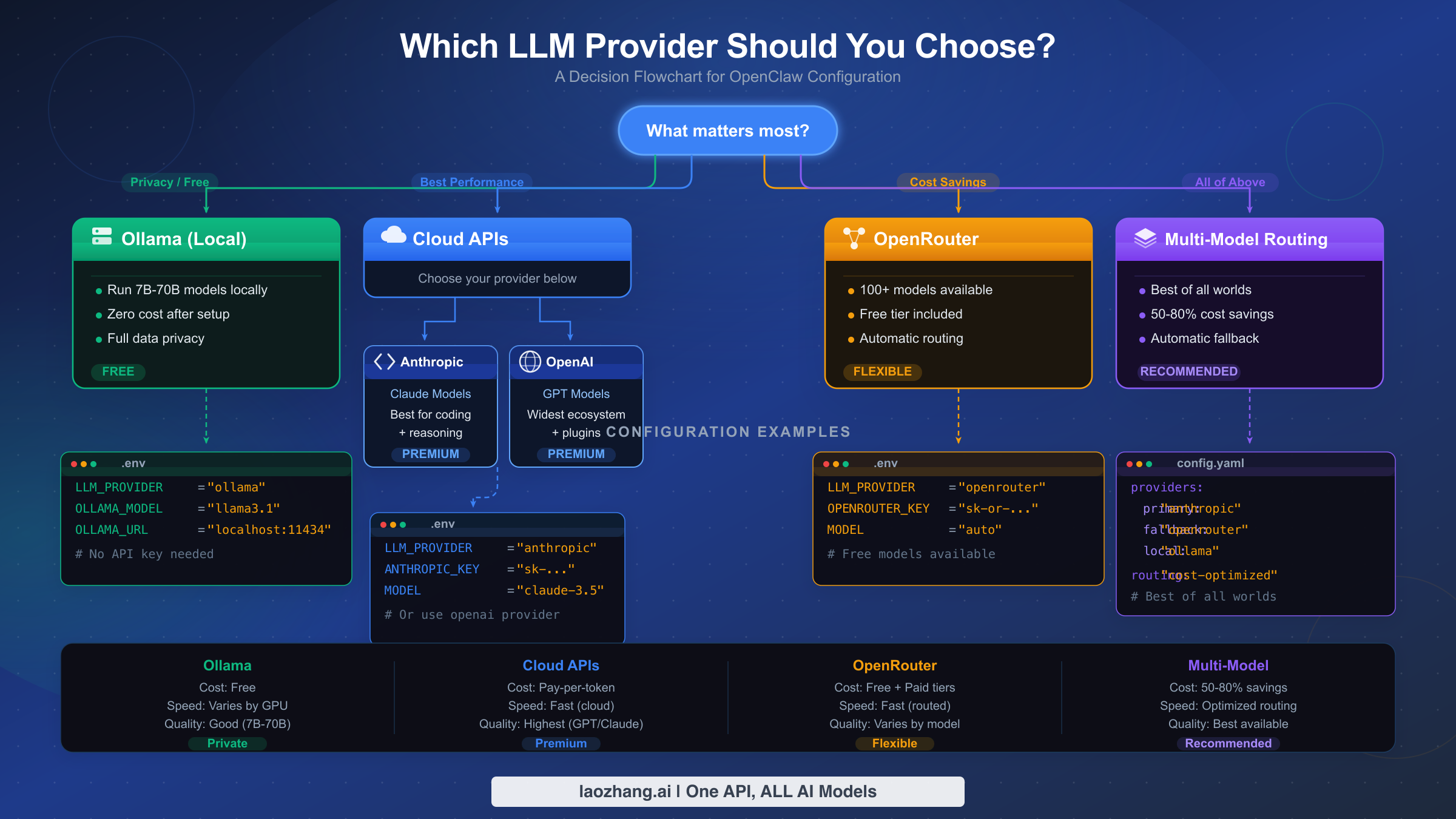

How to Choose the Right LLM Provider

Choosing the right LLM provider for OpenClaw comes down to four factors: your budget, your hardware, your privacy requirements, and the complexity of tasks you plan to run. Rather than listing every provider and leaving you to figure it out, this section gives you a concrete decision path based on what matters most to you. The flowchart above summarizes the four main paths, and the decision table below maps common scenarios to specific recommendations.

| Priority | Recommended Provider | Best Model | Monthly Cost | Setup Difficulty |
|---|---|---|---|---|
| Privacy + Free | Ollama (local) | Qwen2.5-Coder 7B | $0 | Medium |
| Best Performance | Anthropic | Claude Sonnet 4.5 | $50-200 | Easy |
| Budget Cloud | OpenRouter | DeepSeek V3.2 | $5-30 | Easy |
| Maximum Flexibility | OpenRouter + Ollama | Mixed | $10-50 | Medium |
| Enterprise | Amazon Bedrock | Claude via Bedrock | Variable | Hard |

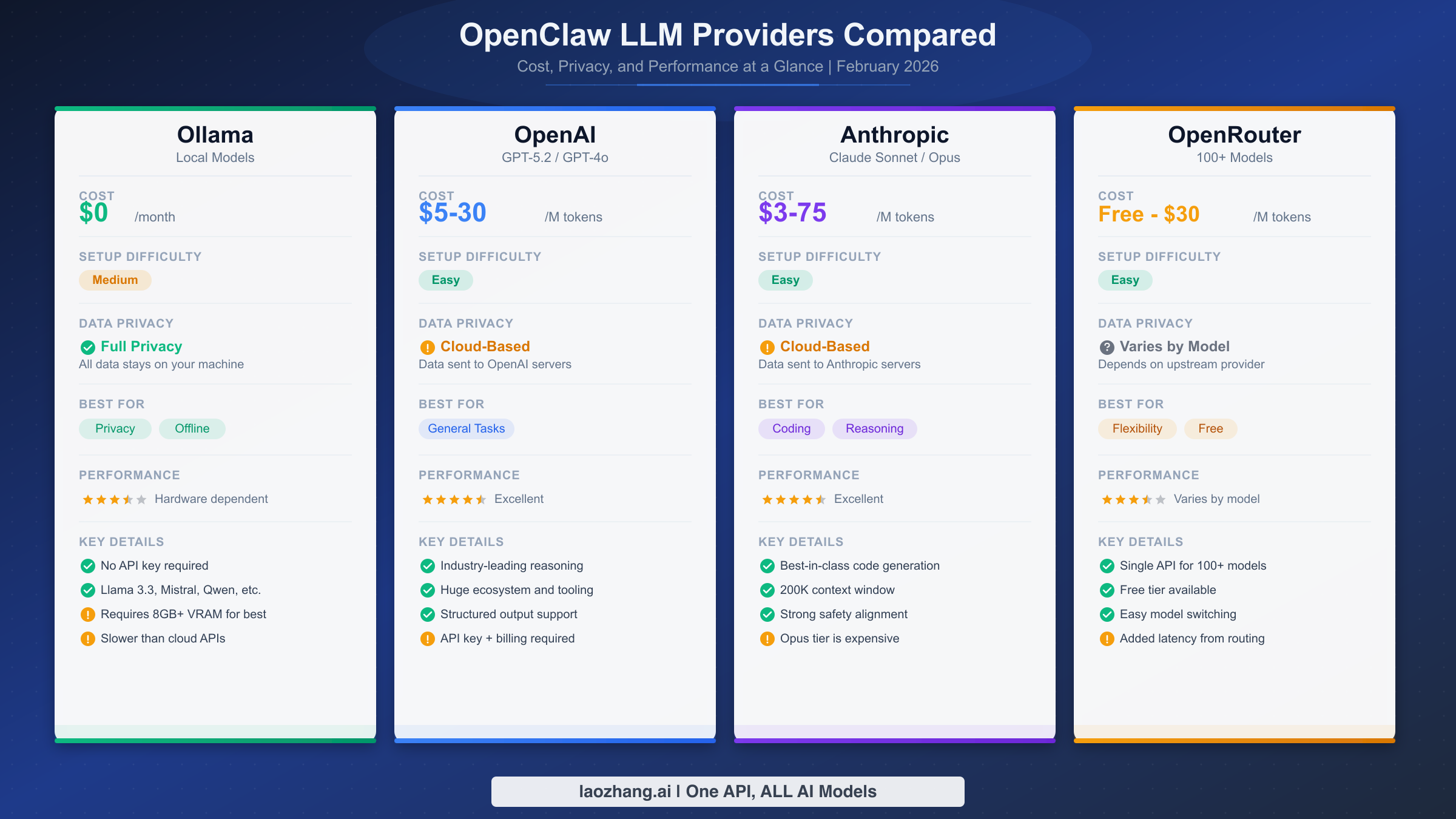

If privacy is your top priority, Ollama is the only answer. Every prompt stays on your machine, no API key is needed, and the cost is zero beyond electricity. The tradeoff is capability: local models top out around 32B parameters on most consumer hardware, which means they handle single-file tasks well but struggle with complex multi-step reasoning. If you have a machine with 64GB or more of RAM, you can run 30-32B parameter models like DeepSeek-Coder-V2 at roughly 10-15 tokens per second on an Apple M4 chip (marc0.dev benchmark, January 2026), which is genuinely competitive with cloud models for many coding tasks.

If you want the best possible agent performance, Anthropic's Claude Sonnet 4.5 at $3/$15 per million input/output tokens (haimaker.ai, January 2026) offers the strongest balance of capability, tool-calling reliability, and cost. For tasks requiring deeper reasoning — architectural decisions, complex debugging, large-scale refactoring — Claude Opus 4.5 at $15/$75 per million tokens is the premium tier. You can find a detailed comparison between Claude Opus and Sonnet that breaks down exactly when the price difference is justified. OpenAI's GPT-5.2 is a strong alternative at similar price points, particularly if you are already invested in the OpenAI ecosystem.

If you want to minimize costs while still using cloud models, OpenRouter is the best starting point. It acts as a gateway to dozens of models from different providers, and it surfaces free-tier models that you can use with zero spending. Models like DeepSeek V3.2 at $0.53 per million tokens and Xiaomi MiMo-V2-Flash at $0.40 per million tokens (velvetshark.com, February 2026) deliver surprisingly strong coding performance at a fraction of premium model pricing. OpenRouter also enables the multi-model routing strategy covered in the cost optimization section below.

If you want maximum flexibility, combine Ollama for local privacy with OpenRouter for cloud fallback. This gives you the best of both worlds: sensitive tasks stay local, complex tasks escalate to more capable cloud models, and your agent keeps running even if one provider has an outage. The configuration for this approach is covered in the multi-model routing section.

Setting Up Local Models with Ollama

Ollama is the most popular local LLM runtime for OpenClaw, and for good reason: it is free, keeps all data on your machine, and requires no API keys. The setup process has three stages — installing Ollama, pulling a model, and connecting it to OpenClaw. The entire process takes under ten minutes on a modern machine with a decent internet connection.

Installing Ollama is straightforward on all three major platforms. On macOS, download the installer from ollama.com or use Homebrew. On Linux, the official install script handles everything. On Windows, Ollama now provides a native installer.

```bash
# macOS (Homebrew)
brew install ollama

# Linux (official script)
curl -fsSL https://ollama.com/install.sh | sh

# Windows: download from ollama.com/download
```

After installation, start the Ollama server. On macOS and Windows, the desktop app starts it automatically. On Linux, you may need to start the service manually with ollama serve. The server listens on http://127.0.0.1:11434 by default — this is the endpoint OpenClaw will connect to.

Pulling the right model depends entirely on your available RAM. This is where most users make their first mistake: they try to pull a 70B parameter model on a 16GB laptop and wonder why everything grinds to a halt. The rule of thumb is that you need roughly 1GB of RAM per billion parameters for quantized models, plus overhead for the operating system and OpenClaw itself.

| Available RAM | Recommended Model | Parameters | Expected Speed | Use Case |
|---|---|---|---|---|
| 8GB | qwen2.5-coder:3b | 3B | 25-35 tok/s | Light edits, simple scripts |
| 16GB | qwen2.5-coder:7b | 7B | 15-20 tok/s | Single-file coding, debugging |
| 32GB | deepseek-coder-v2:16b | 16B | 12-18 tok/s | Multi-file tasks, moderate reasoning |
| 64GB+ | deepseek-coder-v2:33b | 33B | 10-15 tok/s | Complex refactoring, architecture |
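The rule of thumb above can be turned into a quick sizing check before you pull anything. This is a minimal sketch, not an Ollama or OpenClaw feature: the 1GB-per-billion-parameters figure comes from this guide, and the 4GB allowance for the operating system, OpenClaw, and other apps is an assumed placeholder you should adjust for your own machine. With those two numbers it happens to reproduce the RAM tiers in the table.

```python
from typing import Optional

GB_PER_BILLION_PARAMS = 1.0  # rule of thumb for quantized models, from the text above
OVERHEAD_GB = 4.0            # assumed headroom for the OS, OpenClaw, and other apps


def estimated_ram_gb(params_billions: float, overhead_gb: float = OVERHEAD_GB) -> float:
    """Approximate RAM needed to run a quantized model of the given size."""
    return params_billions * GB_PER_BILLION_PARAMS + overhead_gb


def largest_fitting(sizes_billions: list, available_ram_gb: float) -> Optional[float]:
    """Return the largest model size whose estimate fits in available RAM, or None."""
    fitting = [s for s in sizes_billions if estimated_ram_gb(s) <= available_ram_gb]
    return max(fitting) if fitting else None


# Parameter counts (in billions) from the table above
SIZES = [3, 7, 16, 33]
for ram in (8, 16, 32, 64):
    print(f"{ram}GB RAM -> up to {largest_fitting(SIZES, ram)}B parameters")
```

By the same estimate, a 70B model needs about 74GB, which is why it grinds to a halt on a 16GB laptop.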

Pull your chosen model with a single command:

```bash
# For 16GB machines (most common)
ollama pull qwen2.5-coder:7b

# Verify the model is available
ollama list
```

Connecting Ollama to OpenClaw happens through the onboard wizard. Run openclaw onboard and select Ollama as your provider. The wizard will detect models you have already pulled and let you choose one. Behind the scenes, it writes the configuration to your OpenClaw settings file with the key agents.defaults.model.primary.

```bash
# Start the setup wizard
openclaw onboard

# Or configure manually via CLI
openclaw models set ollama/qwen2.5-coder:7b
```

If you prefer to configure manually, the key environment variable is OLLAMA_API_KEY. Despite the name, Ollama does not actually require authentication — set it to any non-empty string like ollama-local to satisfy OpenClaw's validation:

```bash
export OLLAMA_API_KEY="ollama-local"
```

One important detail from the official documentation: Ollama streaming is disabled by default in OpenClaw. This means you will see the complete response appear at once rather than token by token. For most agent workflows this is fine, but if you prefer streaming output, you can enable it in the provider configuration.

Verifying your setup is a critical step that many guides skip. After connecting Ollama to OpenClaw, send a simple test prompt to confirm everything works end-to-end. If the agent responds with coherent output and can execute basic tool calls (like listing files in a directory), your local LLM is correctly configured and every token is being processed on your own hardware. If you see garbled output or the agent refuses to use tools, the model may lack sufficient tool-calling capability — in that case, switch to Qwen2.5-Coder or DeepSeek-Coder-V2, which have the strongest local tool-calling support as of February 2026.

Performance tuning for local models makes a noticeable difference in daily usage. If responses feel slow, check how many models Ollama has loaded in memory with ollama ps. Ollama keeps recently used models in memory, and having multiple large models loaded simultaneously will degrade performance. Use ollama stop MODEL_NAME to unload models you are not actively using. On Apple Silicon Macs, ensure that Ollama is using the GPU acceleration — this happens automatically, but you can verify it in the Ollama logs. For Linux users with NVIDIA GPUs, Ollama supports CUDA acceleration when the appropriate drivers are installed, which can increase inference speed by 3-5x compared to CPU-only inference.
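To check whether your machine lands in the speed ranges from the hardware table, you can time a single request against Ollama directly, bypassing OpenClaw. The sketch below assumes the default endpoint and a model you have already pulled; it relies on the eval_count (tokens generated) and eval_duration (nanoseconds) fields that Ollama's /api/generate endpoint returns when streaming is turned off.

```python
import json
import urllib.request

OLLAMA_URL = "http://127.0.0.1:11434"  # default Ollama address from the text above


def tokens_per_second(response: dict) -> float:
    """Generation speed from eval_count (tokens) and eval_duration (nanoseconds)."""
    duration_ns = response.get("eval_duration", 0)
    if duration_ns <= 0:
        raise ValueError("response has no eval_duration; was streaming disabled?")
    return response["eval_count"] / (duration_ns / 1e9)


def benchmark(model: str, prompt: str = "Write a Python function that reverses a string.") -> float:
    """Send one non-streaming request to the local Ollama server and return tokens/second."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=body,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request, timeout=300) as resp:
        return tokens_per_second(json.load(resp))
```

Call benchmark("qwen2.5-coder:7b") with the server running. Because eval_duration excludes model load time, the first call is not penalized for loading. A result far below the table's range for your RAM tier usually means memory pressure, so check ollama ps for other loaded models.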

Configuring Cloud LLM Providers

Cloud providers give your OpenClaw agent access to the most capable models available, at the cost of sending your prompts over the internet and paying per token. The three most commonly used cloud providers are OpenAI, Anthropic, and OpenRouter. Each requires an API key, and the setup process through openclaw onboard is nearly identical for all three — the main differences are where you get your key and what models become available.

OpenAI Setup requires an API key from platform.openai.com. Create an account, navigate to API Keys, and generate a new key. Then either pass it through the onboard wizard or set it as an environment variable:

```bash
export OPENAI_API_KEY="sk-proj-..."

# Run the setup wizard
openclaw onboard
# Select OpenAI → paste your key → choose a model
```

OpenAI gives you access to the GPT family of models. For OpenClaw agent work, GPT-5.2 offers the best balance of capability and cost. The key advantage of OpenAI is its extensive ecosystem — if you already have billing set up and team API access, adding OpenClaw is trivial.

Anthropic Setup follows the same pattern. Get your API key from console.anthropic.com, then configure it:

```bash
export ANTHROPIC_API_KEY="sk-ant-..."
openclaw onboard
# Select Anthropic → paste your key → choose Claude Sonnet 4.5 or Opus 4.5
```

Claude models are widely regarded as the strongest for agentic coding tasks, particularly for tool calling and multi-step workflows. Claude Sonnet 4.5 at $3/$15 per million tokens is the sweet spot for daily use, while Opus 4.5 at $15/$75 per million tokens is reserved for the most demanding tasks. If you encounter issues during setup, our guide on Anthropic API key configuration issues covers the most common pitfalls including key format validation and region restrictions.

OpenRouter Setup is slightly different because OpenRouter is not a model provider itself — it is a gateway that routes your requests to models from dozens of different providers. This means a single OpenRouter API key gives you access to Claude, GPT, Gemini, Llama, Mistral, DeepSeek, and many more. Get your key from openrouter.ai/keys:

```bash
export OPENROUTER_API_KEY="sk-or-..."
openclaw onboard
# Select OpenRouter → paste your key → browse available models
```

The unique advantage of OpenRouter is model diversity. You can switch between models from different providers without adding new API keys or accounts; only the model name changes. This also enables the cost optimization strategy covered in the next sections, where you route simple tasks to cheap models and complex tasks to premium ones.

If you need a single API endpoint that routes to multiple AI models with simplified billing and reliable uptime, API aggregation platforms like laozhang.ai provide unified access to OpenAI, Anthropic, and other providers through one API key. This can simplify your setup when you want to use multiple cloud providers without managing separate accounts and billing for each.

Using Free Models with OpenClaw

One of OpenClaw's least-known features is that you can run it with zero API costs. There are two paths to free usage: local models through Ollama (which we covered above) and free cloud models through OpenRouter. The second path is particularly interesting because it gives you access to cloud-grade inference without spending a cent.

OpenClaw includes a built-in model scanner that discovers free models available on OpenRouter. Running openclaw models scan queries the OpenRouter API and returns a list of models with zero pricing — these are models that providers offer at no cost, typically to drive adoption or as community contributions. The availability changes over time, but there are consistently 10-20 free models available at any given moment.

```bash
# Discover free models
openclaw models scan

# Example output (availability varies):
# ✓ meta-llama/llama-3.1-8b-instruct (free)
# ✓ google/gemma-2-9b-it (free)
# ✓ mistralai/mistral-7b-instruct (free)
# ✓ qwen/qwen-2.5-7b-instruct (free)
```

To use a free model, select it during openclaw onboard or set it directly:

```bash
openclaw models set openrouter/meta-llama/llama-3.1-8b-instruct
```
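OpenClaw's scanner does this for you, but the idea behind it is easy to reproduce if you want to double-check what is currently free. This sketch is not OpenClaw's implementation: it assumes OpenRouter's public model list at https://openrouter.ai/api/v1/models, where each entry carries a pricing object whose prompt and completion prices are decimal strings, and it treats a model as free only when both are zero.

```python
import json
import urllib.request

MODELS_URL = "https://openrouter.ai/api/v1/models"  # assumed public model-list endpoint


def is_free(model: dict) -> bool:
    """True when both prompt and completion prices are zero (prices arrive as strings)."""
    pricing = model.get("pricing") or {}
    try:
        prompt_price = float(pricing.get("prompt", "1"))
        completion_price = float(pricing.get("completion", "1"))
    except (TypeError, ValueError):
        return False  # unparseable pricing: do not claim the model is free
    return prompt_price == 0 and completion_price == 0


def free_model_ids(payload: dict) -> list:
    """IDs of zero-priced models in a model-list response, sorted for readability."""
    return sorted(m["id"] for m in payload.get("data", []) if is_free(m))


def fetch_free_models() -> list:
    """Download the current model list and keep only the free entries."""
    with urllib.request.urlopen(MODELS_URL, timeout=30) as resp:
        return free_model_ids(json.load(resp))
```

Entries with missing or unparseable pricing are treated as paid, which errs on the side of not surprising you with a bill.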

The honest tradeoff with free models is that they are typically smaller (7-9B parameters) and may have rate limits or higher latency compared to paid models. For learning OpenClaw, experimenting with agent workflows, or handling simple tasks like file organization and basic code generation, free models are genuinely useful. For production coding assistance or complex multi-step tasks, you will eventually want to upgrade to a paid model — but starting with free models means you can learn the entire OpenClaw workflow without any financial commitment.

You can also combine free and paid models using the multi-model routing strategy. Configure a free model as your primary and add a paid model to the fallback chain: the paid model takes over automatically when the free one fails or hits a rate limit, and when a task genuinely needs stronger reasoning you can switch to it manually with the /model command. This way, the majority of your interactions cost nothing, and you only pay when the task genuinely requires a more capable model. The configuration for this approach is covered in the next section.

Another path to zero-cost OpenClaw usage is through provider free tiers and trial credits. Some providers offer limited free API access for new accounts (Google's Gemini API, for example, has a free tier), which can be enough to experiment with OpenClaw before committing to paid usage. Availability and limits differ by provider and change frequently, so check each provider's current terms before relying on them.

The strategic approach to free models is to use them as your learning environment. When you are first configuring OpenClaw, experimenting with different agent prompts, or testing new workflows, there is no reason to burn expensive tokens on Claude Opus or GPT-5.2. Start with a free model, get your workflow dialed in, and then upgrade to a paid model only when you have confirmed that the free model is genuinely the bottleneck. Many users discover that a well-configured agent with a 7B parameter model handles 60-70% of their daily tasks acceptably — they only need cloud models for the remaining complex interactions that require stronger reasoning or larger context windows.

Cost Optimization and Multi-Model Routing

Running OpenClaw with cloud models can get expensive quickly. An active agentic session might consume 500,000 to 2,000,000 tokens per hour depending on the complexity of the task and how much context the agent needs to maintain. At Claude Sonnet 4.5 rates of $3/$15 per million input/output tokens, a heavy day of usage could easily reach $20-50. Multi-model routing is the single most effective strategy for reducing these costs, with real-world savings of 50-80% reported by users who configure it properly (velvetshark.com, February 2026).
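To see how the input/output split drives that figure, here is the arithmetic as a small function. The 6 million tokens per day (about four hours at 1.5M tokens per hour, within the range quoted above) and the 80/20 input-to-output split are illustrative assumptions; agentic sessions tend to be input-heavy because the growing conversation context is sent again with every request.

```python
def session_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of a session, with prices quoted per million tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


# Claude Sonnet 4.5 rates quoted above: $3 input / $15 output per million tokens
SONNET_IN, SONNET_OUT = 3.0, 15.0

# Assumed heavy day: 6M tokens, 80% input (context) and 20% output
daily = session_cost(4_800_000, 1_200_000, SONNET_IN, SONNET_OUT)
print(f"${daily:.2f} per day")  # $32.40
```

Output tokens cost five times as much as input here, so a session that generates a lot of code moves toward the top of the $20-50 range faster than one that mostly reads files.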

The core idea behind multi-model routing is simple: not every interaction with your agent requires the most expensive model. When OpenClaw asks a clarifying question, generates a simple file, or executes a routine command, a $0.40/M token model like Xiaomi MiMo-V2-Flash usually handles the job about as well as Claude Opus at $75/M output tokens. By routing simple tasks to cheap models and reserving expensive models for complex reasoning, you dramatically reduce your average cost per interaction without meaningfully impacting quality.

OpenClaw supports this through its fallback chain configuration in agents.defaults.model.fallbacks. The primary model handles every request first, and if it fails, encounters rate limits, or is unavailable, the agent automatically falls back to the next model in the chain. You can structure this as a cost optimization strategy by making your cheapest adequate model the primary and your most capable model the fallback:

```yaml
# Cost-optimized configuration example
agents:
  defaults:
    model:
      primary: "openrouter/deepseek/deepseek-v3.2"    # $0.53/M - daily driver
      fallbacks:
        - "openrouter/anthropic/claude-sonnet-4.5"   # $3/$15/M - complex tasks
        - "ollama/qwen2.5-coder:7b"                  # $0 - offline fallback
```

This configuration means your agent uses DeepSeek V3.2 for most interactions at $0.53 per million tokens. Claude Sonnet takes over automatically if DeepSeek fails or is rate-limited, and when a task needs stronger reasoning you can switch to it manually using the /model command in the OpenClaw interface. If both cloud providers are down, the agent falls back to your local Ollama model, so it never stops working entirely.
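The fallback behavior described above can be pictured as a loop over the chain. This is a minimal sketch, not OpenClaw's internal code: call_model and ProviderError are hypothetical stand-ins for a real provider call and its failure modes (errors, rate limits, outages). It moves down the chain only when a call fails and never judges how hard a task is, which matches the description of fallbacks as a failure mechanism rather than a difficulty router.

```python
from typing import Callable, Sequence, Tuple


class ProviderError(Exception):
    """Hypothetical error for a failed, rate-limited, or unreachable provider."""


def complete_with_fallbacks(prompt: str, chain: Sequence[str],
                            call_model: Callable[[str, str], str]) -> Tuple[str, str]:
    """Try each model in order and return (model_used, reply) from the first success."""
    errors = []
    for model in chain:
        try:
            return model, call_model(model, prompt)
        except ProviderError as exc:
            errors.append(f"{model}: {exc}")  # record the failure, then try the next link
    raise RuntimeError("every model in the chain failed:\n" + "\n".join(errors))


# Same order as the YAML example above
CHAIN = [
    "openrouter/deepseek/deepseek-v3.2",       # primary
    "openrouter/anthropic/claude-sonnet-4.5",  # first fallback
    "ollama/qwen2.5-coder:7b",                 # offline fallback
]
```

Passing a call_model that raises ProviderError for both OpenRouter models returns the Ollama reply, mirroring the case where both cloud providers are down.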

For more granular cost control, you can track your token usage through the OpenClaw dashboard at http://127.0.0.1:18789/ and set spending alerts through your provider's billing dashboard. OpenRouter is particularly useful here because it provides unified billing across all models, making it easy to see exactly where your money is going. For comprehensive strategies on managing your API spending, our guide on token management and cost optimization strategies goes deeper into budgeting, usage patterns, and alert configuration.

Some API aggregation services like laozhang.ai offer access to multiple model providers through a single endpoint with competitive pricing, which can further simplify your cost management. You can find their API documentation at docs.laozhang.ai for integration details.

The fallback chain also serves as a reliability mechanism. If Anthropic experiences an outage, your agent automatically switches to the next available model rather than stopping entirely. For teams that depend on OpenClaw for production workflows, configuring at least two providers in the fallback chain is strongly recommended — the few minutes of setup time pays for itself the first time a provider goes down during a critical task.

To put the savings in concrete terms, consider a typical developer using OpenClaw for 4 hours of active coding per day. With Claude Sonnet 4.5 as the only model, that is roughly 2-4 million tokens per day at an average blended cost of around $9/M tokens (equivalent to an even split of its $3 input and $15 output rates), resulting in $18-36 per day or roughly $400-800 per month over about 22 working days. With multi-model routing, using DeepSeek V3.2 at $0.53/M for 70% of interactions and Claude Sonnet for the remaining 30%, the blended rate falls to about $3.07/M, so the same usage pattern drops to approximately $6-12 per day or $135-270 per month. That is a reduction of roughly two thirds (about 66%) with minimal impact on output quality, because the majority of agent interactions (file reads, simple edits, command execution, status checks) do not benefit from a premium model's reasoning capabilities.
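The arithmetic behind these figures is easy to check. This sketch uses the same assumptions as the paragraph above: a blended $9/M for Claude Sonnet 4.5, $0.53/M for DeepSeek V3.2, a 70/30 traffic split, and 2-4 million tokens per day. Swap in your own prices and split to estimate your own savings.

```python
def blended_price(mix: dict, prices: dict) -> float:
    """Weighted average $/M tokens; mix maps model name -> share of traffic (shares sum to 1)."""
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("traffic shares must sum to 1")
    return sum(share * prices[model] for model, share in mix.items())


# $/M figures used in the paragraph above
PRICES = {"claude-sonnet-4.5": 9.0, "deepseek-v3.2": 0.53}

sonnet_only = blended_price({"claude-sonnet-4.5": 1.0}, PRICES)
routed = blended_price({"deepseek-v3.2": 0.7, "claude-sonnet-4.5": 0.3}, PRICES)

for millions_per_day in (2, 4):
    print(f"{millions_per_day}M tokens/day: ${millions_per_day * sonnet_only:.2f} "
          f"Sonnet only vs ${millions_per_day * routed:.2f} routed")
print(f"savings: {1 - routed / sonnet_only:.0%}")
```

It prints $18.00 vs $6.14 and $36.00 vs $12.28 per day, and a savings figure of 66%.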

Troubleshooting Common OpenClaw LLM Issues

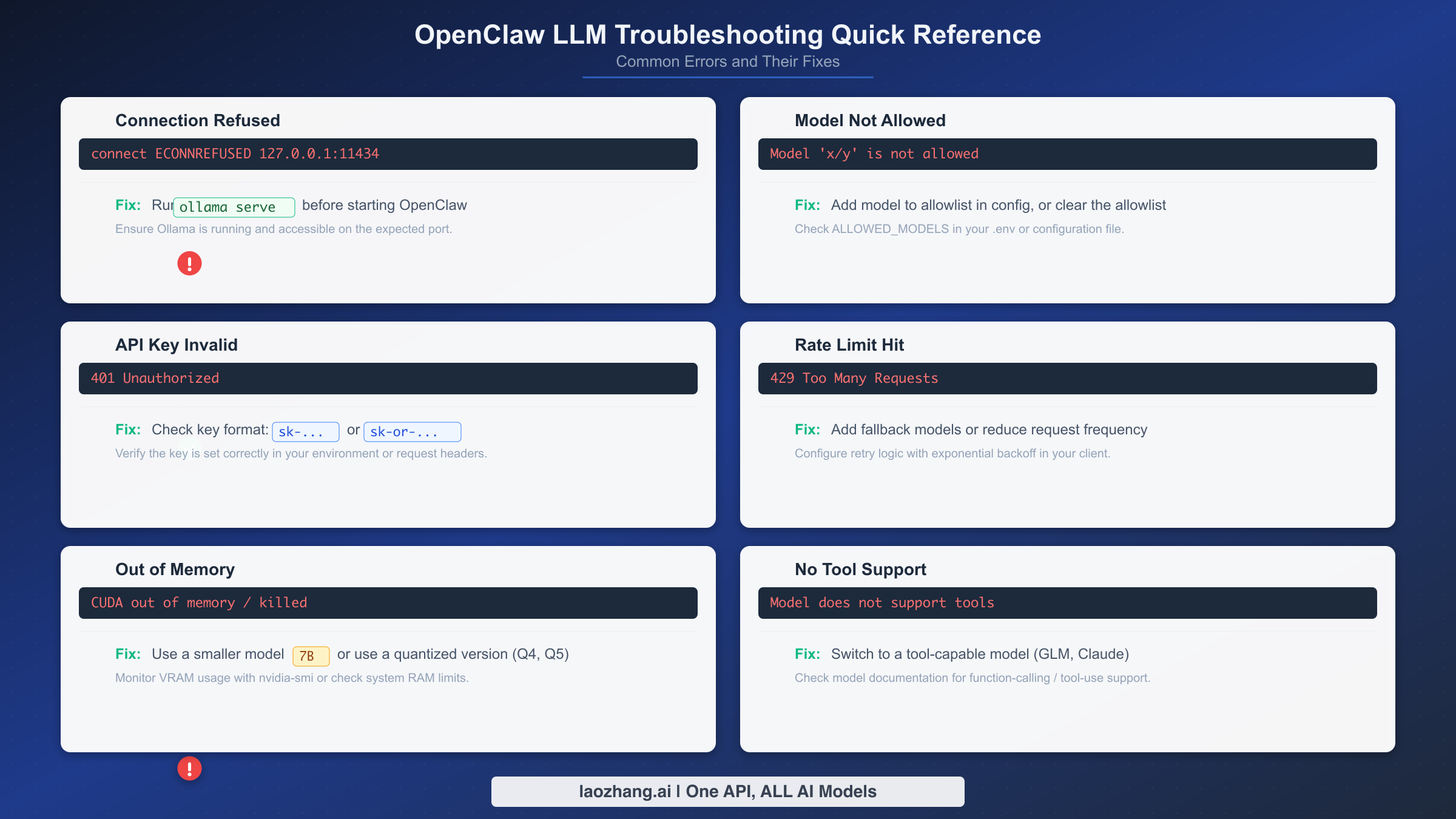

Every OpenClaw user hits at least one of these errors during setup. The frustration is real — you have spent time configuring everything, and then a cryptic error message blocks you. This section covers the ten most common LLM-related errors with their exact error messages and verified solutions, so you can get back to productive work in minutes rather than hours.

Error 1: "Connection refused" when using Ollama. This means OpenClaw cannot reach the Ollama server at http://127.0.0.1:11434. The fix is almost always that the Ollama server is not running. On macOS, open the Ollama desktop app. On Linux, run ollama serve in a separate terminal. Verify the server is accessible with curl http://127.0.0.1:11434/api/tags. If that returns a JSON response listing your models but OpenClaw still reports connection refused, the server is running correctly and the problem lies between OpenClaw and Ollama: typically a custom host or port that OpenClaw's configuration does not match, or a firewall or proxy blocking localhost connections.

```bash
# Check if Ollama is running
curl http://127.0.0.1:11434/api/tags

# Start Ollama server (Linux)
ollama serve

# If using a custom port, update OpenClaw config
export OLLAMA_BASE_URL="http://127.0.0.1:YOUR_PORT"
```
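The same check can be scripted, which is handy in a setup script or a health check. A minimal sketch using only the Python standard library; it assumes the default address and that /api/tags returns a JSON object with a models list whose entries have a name field, as Ollama's API does.

```python
import json
import urllib.error
import urllib.request


def parse_tags(body: str) -> list:
    """Extract model names from an Ollama /api/tags response body."""
    return [model["name"] for model in json.loads(body).get("models", [])]


def check_ollama(base_url: str = "http://127.0.0.1:11434") -> list:
    """Return the installed model names, or raise with a hint if the server is unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
            return parse_tags(resp.read().decode("utf-8"))
    except urllib.error.URLError as exc:
        raise RuntimeError(
            f"Ollama is not reachable at {base_url} ({exc.reason}); is the server running?"
        ) from exc
```

An empty list means the server is up but no models have been pulled yet, which points to Error 2 rather than a connection problem.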

Error 2: "Model not allowed" or "Model not found." This occurs when you request a model that either does not exist on your provider or is not available for your API tier. For Ollama, it means you have not pulled the model yet — run ollama pull MODEL_NAME. For cloud providers, double-check the exact model identifier: OpenClaw uses the format provider/model-name, and a typo in either part triggers this error. Use openclaw models list to see all available models for your configured providers.
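Because the provider is the first path segment and the model name can itself contain slashes (as with OpenRouter models), a typo in either half is easy to miss. The sketch below splits a reference at the first slash and suggests the closest known provider. The provider list is a hypothetical subset written for illustration, not OpenClaw's registry, and openclaw models list remains the authoritative check.

```python
import difflib
from typing import Tuple

# Illustrative subset of provider prefixes used in this guide (not OpenClaw's full list)
KNOWN_PROVIDERS = ["anthropic", "ollama", "openai", "openrouter"]


def split_model_ref(ref: str) -> Tuple[str, str]:
    """Split 'provider/model-name' at the first slash; the model part may contain more slashes."""
    provider, sep, model = ref.strip().partition("/")
    if not sep or not provider or not model:
        raise ValueError(f"expected provider/model-name, got {ref!r}")
    return provider, model


def check_model_ref(ref: str) -> Tuple[str, str]:
    """Validate the provider half and suggest the closest known provider on a typo."""
    provider, model = split_model_ref(ref)
    if provider not in KNOWN_PROVIDERS:
        guess = difflib.get_close_matches(provider, KNOWN_PROVIDERS, n=1)
        hint = f"; did you mean {guess[0]!r}?" if guess else ""
        raise ValueError(f"unknown provider {provider!r}{hint}")
    return provider, model
```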

Error 3: "Invalid API key" or authentication failures. The API key is either missing, malformed, or expired. For Anthropic, keys start with sk-ant-. For OpenAI, keys start with sk-proj- (newer format) or sk- (legacy). For OpenRouter, keys start with sk-or-. Check that you have set the correct environment variable (ANTHROPIC_API_KEY, OPENAI_API_KEY, or OPENROUTER_API_KEY) and that it does not contain trailing whitespace or newline characters. A common mistake is copying the key with invisible characters from a password manager.
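A quick local check can catch the usual culprits before you start digging through provider dashboards. This is a rough sketch: the prefixes are the ones listed above, prefix matching is only a plausibility test (the legacy OpenAI sk- prefix also matches Anthropic and OpenRouter keys), and the script reports problems without ever printing the key itself.

```python
import os
from typing import Optional

# Expected key prefixes, taken from the list above
EXPECTED_PREFIXES = {
    "ANTHROPIC_API_KEY": ("sk-ant-",),
    "OPENAI_API_KEY": ("sk-proj-", "sk-"),
    "OPENROUTER_API_KEY": ("sk-or-",),
}


def key_problems(name: str, value: Optional[str]) -> list:
    """Return likely problems with an API key; an empty list means it looks plausible."""
    if not value:
        return [f"{name} is not set"]
    problems = []
    key = value.strip()
    if key != value:
        problems.append(f"{name} has leading/trailing whitespace or a newline")
    if any(not ch.isprintable() for ch in key):
        # catches zero-width spaces and other invisible characters from password managers
        problems.append(f"{name} contains invisible or control characters")
    prefixes = EXPECTED_PREFIXES.get(name)
    if prefixes and not key.startswith(prefixes):
        problems.append(f"{name} should start with {' or '.join(prefixes)}")
    return problems


def check_environment() -> dict:
    """Check every provider key listed above in the current environment."""
    return {name: key_problems(name, os.environ.get(name)) for name in EXPECTED_PREFIXES}
```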

Error 4: "Rate limit exceeded" (HTTP 429). You are sending too many requests too quickly. This is especially common with free-tier API accounts that have low rate limits. The immediate fix is to wait 30-60 seconds and retry. The long-term fix is to configure multi-model routing with fallbacks so that when one provider rate-limits you, the agent automatically switches to another. For persistent rate limit issues, our detailed guide on rate limit exceeded errors covers provider-specific limits and mitigation strategies.
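If you call a provider API from your own scripts (outside OpenClaw), the "wait and retry" advice translates into exponential backoff. A minimal sketch: RateLimited is a hypothetical exception standing in for however your HTTP client surfaces a 429, and the 15-second base delay doubling up to a 60-second cap is chosen to roughly match the 30-60 second wait suggested above. Each wait is randomly scaled to between half and all of that value so that parallel scripts do not retry in lockstep.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for however your HTTP client reports a 429 response."""


def with_backoff(call: Callable[[], T], max_attempts: int = 5, base_delay: float = 15.0,
                 max_delay: float = 60.0, sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry call() on RateLimited, doubling the delay each time (capped, with jitter)."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts:
                raise  # out of retries: let the caller see the rate limit
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(delay * random.uniform(0.5, 1.0))  # jitter spreads out concurrent retries
    raise RuntimeError("unreachable")
```

The sleep parameter exists so the function can be tested without actually waiting.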

Error 5: "Out of memory" when running local models. You are trying to run a model that exceeds your available RAM. The fix is simple: switch to a smaller model. If you have 16GB of RAM and pulled a 30B parameter model, switch to the 7B variant. Use ollama list to see which models you have installed, and refer to the hardware-to-model table in the Ollama setup section above to find the right fit for your machine.

Error 6: "Tool calling not supported" or agent cannot execute commands. Some models — particularly smaller open-source models — do not support function calling, which OpenClaw relies on for agent actions like file editing and command execution. The fix is to switch to a model that supports tool calling. On the cloud side, Claude Sonnet 4.5, GPT-5.2, and Gemini 3 Pro all have robust tool calling support. For local models, Qwen2.5-Coder and DeepSeek-Coder-V2 include tool calling capabilities.

Error 7: "Invalid beta flag" errors. This is a version-related issue that occurs when OpenClaw sends API headers that the provider does not recognize. The most common cause is running an outdated version of OpenClaw. Update to the latest version with npm install -g openclaw@latest (requires Node.js v24+). For a detailed walkthrough of this specific error, see our guide on the invalid beta flag error and how to fix it.

Error 8: Context window exceeded. Long conversations or large files can push the total token count beyond the model's context window. Symptoms include truncated responses or errors about maximum context length. Start a new conversation with /clear, or switch to a model with a larger context window. Claude Sonnet 4.5 supports 200K tokens, which is sufficient for most agent workflows.
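To tell in advance whether a set of files is likely to overflow the window, a character-count estimate is often enough. This sketch uses the common rule of thumb of about four characters per token for English text and code, which is only an approximation because real tokenizers vary by model; the 8,000-token reserve for the model's reply is an arbitrary assumption.

```python
from pathlib import Path
from typing import Iterable

CHARS_PER_TOKEN = 4       # rough heuristic; actual tokenizers differ by model
CONTEXT_LIMIT = 200_000   # Claude Sonnet 4.5 window quoted above
REPLY_RESERVE = 8_000     # assumed room left for the model's response


def estimated_tokens(text: str) -> int:
    """Approximate token count, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_context(texts: Iterable[str], limit: int = CONTEXT_LIMIT,
                    reserve: int = REPLY_RESERVE) -> bool:
    """True if the combined estimate plus the reply reserve stays within the limit."""
    return sum(estimated_tokens(t) for t in texts) + reserve <= limit


def files_fit(paths: Iterable[str], limit: int = CONTEXT_LIMIT) -> bool:
    """Estimate whether these files, read as UTF-8, fit in one context window."""
    texts = (Path(p).read_text(encoding="utf-8", errors="replace") for p in paths)
    return fits_in_context(texts, limit)
```

Treat a near-limit result as a signal to start a fresh conversation or pass fewer files, not as a guarantee either way.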

Error 9: Slow response times with local models. If your local model is responding but taking 30+ seconds per response, you are likely running a model too large for your hardware. Check ollama ps to see current model memory usage, and refer to the hardware table to right-size your model. Also ensure no other memory-intensive applications are running while using OpenClaw with local models.

Error 10: OpenClaw not recognizing provider after configuration. After running openclaw onboard, the agent still defaults to the wrong provider. Use openclaw models status to check the current configuration, and openclaw models set PROVIDER/MODEL to explicitly set your desired model. The /model command within an active OpenClaw session also lets you switch models on the fly without restarting.

What to Do After Setup — Getting Productive

With your LLM provider configured and verified, you are ready to start using OpenClaw as a genuine productivity tool rather than a toy to experiment with. The difference between users who get real value from OpenClaw and those who abandon it after a week usually comes down to three habits that you should establish right now while the setup is fresh.

Start by testing your configuration with a real task, not a "hello world" prompt. Ask OpenClaw to analyze a file in your current project, refactor a function, or write a test for existing code. This immediately validates that tool calling works correctly, that the model has sufficient capability for your work, and that response times are acceptable. If anything feels off — slow responses, incomplete code, or tool calling failures — revisit the provider choice section and consider whether a different model would serve you better.

Next, configure your fallback chain even if you are happy with your primary model. Provider outages happen, rate limits get hit during crunch time, and having a fallback means your workflow never stops completely. At minimum, add one cloud alternative and one local model to your fallback configuration. The five minutes you spend now will save you hours of frustration during the inevitable provider downtime.

Finally, establish a cost monitoring routine if you are using cloud models. Check your API usage dashboard weekly, set billing alerts at thresholds you are comfortable with, and track whether your actual spending matches your budget expectations. If costs are higher than expected, the multi-model routing strategy from the previous section is your most effective lever — route routine tasks to cheaper models and reserve premium models for complex work that genuinely benefits from stronger reasoning.

OpenClaw is a tool that rewards investment in configuration. The users who get the most value are those who treat the initial setup not as a one-time chore but as the foundation of an evolving workflow. Your model choice, fallback chain, and cost strategy will change as your needs evolve and new models become available. The openclaw onboard wizard and /model command make it easy to adapt — the key is to keep iterating rather than settling for a configuration that works "well enough."

The OpenClaw ecosystem is evolving rapidly. New model providers are added regularly, pricing changes as competition intensifies, and local model capabilities improve with each generation. Bookmark this guide and revisit the provider decision framework whenever you consider changing your setup. The principles — match model capability to task complexity, configure fallbacks for reliability, and monitor costs actively — remain constant even as the specific model recommendations evolve. Your initial five minutes of setup is just the beginning of a workflow that will grow more powerful as you refine it over weeks and months of daily use.

Frequently Asked Questions

Can I use multiple LLM providers simultaneously with OpenClaw?

Yes. OpenClaw's fallback chain configuration in agents.defaults.model.fallbacks supports multiple providers. Your primary model handles requests first, and if it fails or is rate-limited, the agent automatically tries the next model in the chain. You can also switch providers mid-session using the /model command. Many users configure a local Ollama model as the final fallback to ensure the agent works even when all cloud providers are unreachable.

What is the minimum hardware required to run OpenClaw with a local LLM?

The absolute minimum is 8GB of RAM, which supports 3B parameter models at usable speeds. For a productive experience with local models, 16GB of RAM running a 7B parameter model like Qwen2.5-Coder is the practical starting point. You also need Node.js v24 or later and sufficient disk space for model files (typically 4-8GB per model). Apple Silicon Macs with unified memory offer the best local inference performance per dollar.

How do I switch models without restarting OpenClaw?

Use the /model command within an active OpenClaw session. Type /model followed by the provider and model name (for example, /model anthropic/claude-sonnet-4.5). This takes effect immediately for the next interaction. You can also use openclaw models set from the terminal to change your default model for all future sessions. Use openclaw models list to see all available models for your configured providers.

Is my data safe when using cloud LLM providers with OpenClaw?

When using cloud providers (OpenAI, Anthropic, OpenRouter), your prompts and code context are sent to the provider's servers for processing. Each provider has its own data retention and privacy policies. If data privacy is a hard requirement, use Ollama — all inference happens locally and no data leaves your machine. For a middle ground, consider using local models for sensitive tasks and cloud models for non-sensitive work, configured through the multi-model routing system.

How much does it cost to run OpenClaw with cloud models?

Costs vary widely based on usage intensity and model choice. Light usage (a few interactions per day) with Claude Sonnet 4.5 costs $5-15 per month. Heavy usage (continuous agentic sessions) can reach $20-50 per day with premium models. Budget models through OpenRouter (DeepSeek V3.2 at $0.53/M tokens, MiMo-V2-Flash at $0.40/M) reduce costs by 80-90% compared to premium models. Multi-model routing with a cost-optimized fallback chain is the most effective way to control spending while maintaining quality.