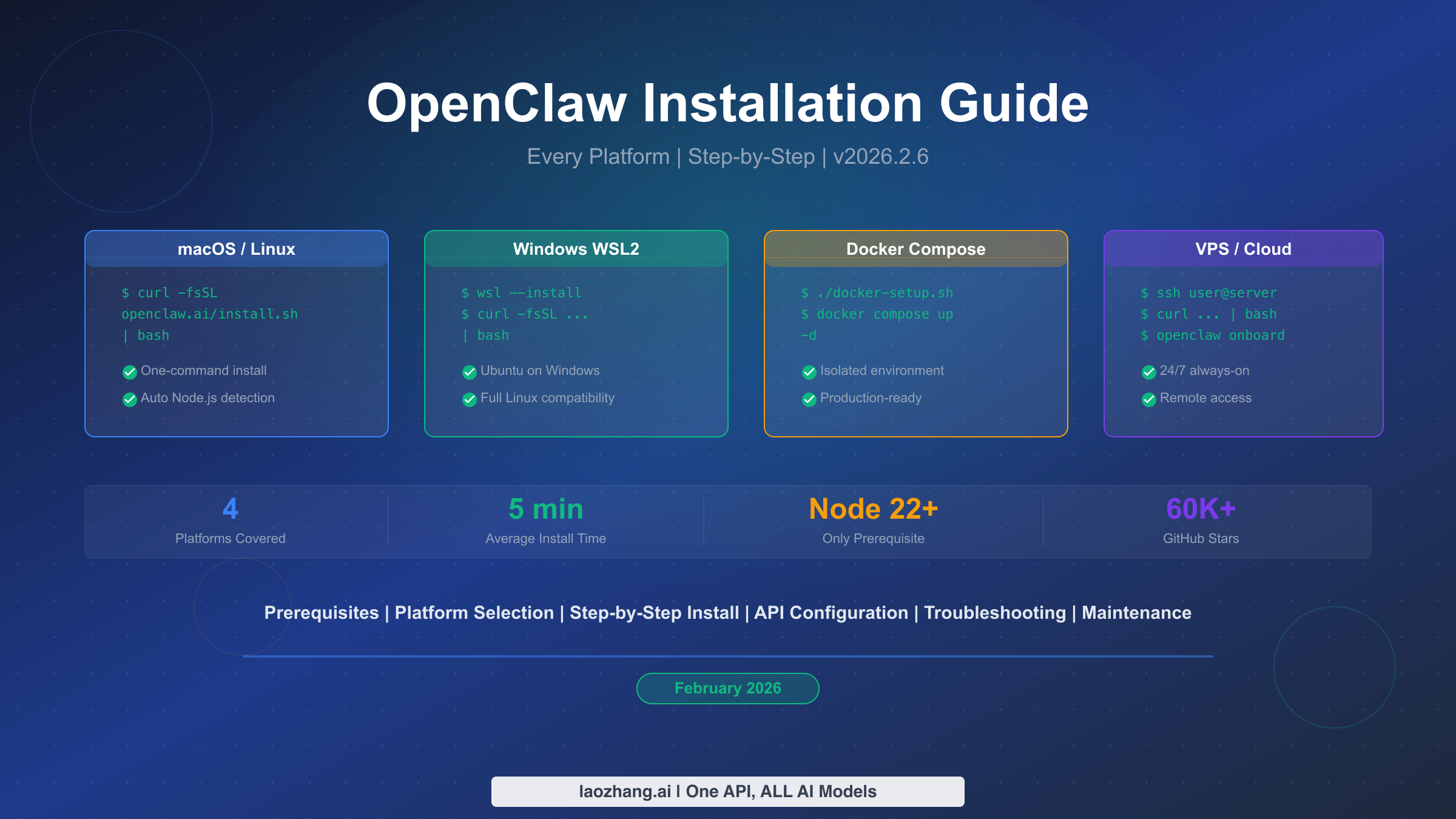

OpenClaw (v2026.2.6, February 2026) installs in under five minutes on macOS, Linux, or Windows WSL2 with a single command: curl -fsSL https://openclaw.ai/install.sh | bash. The only prerequisite is Node.js 22 or later, which the installer handles automatically. For always-on deployment, Docker Compose or a 2-vCPU VPS with 4 GB RAM is recommended. This guide covers every platform, from local machines and Raspberry Pi to cloud servers, with step-by-step commands, security hardening, and maintenance workflows.

TL;DR

OpenClaw is an open-source personal AI assistant (MIT license, 60,000+ GitHub stars as of January 2026) that connects your favorite LLM — Claude, GPT, Gemini, or a local Ollama model — to messaging platforms like WhatsApp, Telegram, Slack, Discord, Signal, iMessage, Microsoft Teams, and Google Chat. Originally created by Peter Steinberger (under the names ClawdBot and MoltBot before the open-source rebrand), OpenClaw has grown into one of the most actively maintained personal AI assistant projects in the Node.js ecosystem. Here is the fastest path to a working installation:

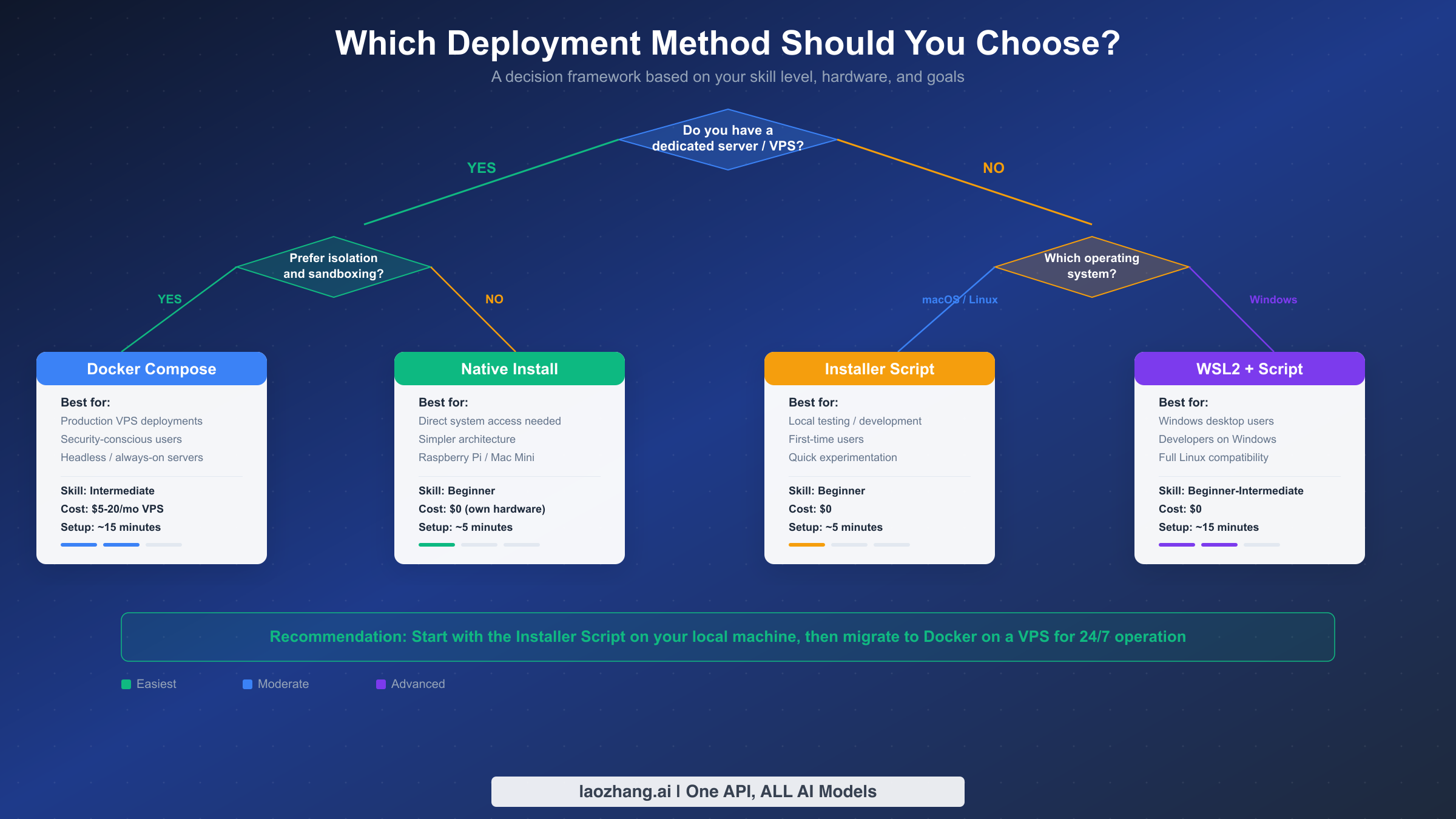

Quick decision: Run it locally first to test, then move to Docker on a VPS for 24/7 availability.

Essential commands:

```bash
curl -fsSL https://openclaw.ai/install.sh | bash
# First-time setup
openclaw onboard
# Verify health
openclaw doctor
# Launch web dashboard
openclaw dashboard
```

Minimum requirements: Node.js 22+, 2 GB RAM, 20 GB disk. The installer script handles Node.js detection and installation automatically on all supported platforms. For always-on VPS deployment, budget $5-20 per month depending on provider and traffic volume.

How to Choose Your OpenClaw Deployment Method

Most installation guides jump straight into commands without addressing the most important question first: which method should you actually use? OpenClaw offers eight installation methods (installer script, npm, pnpm, source, Docker, Nix, Ansible, and experimental Bun support), but most users only need to consider three. The right choice depends on your hardware situation, your comfort level with the terminal, and whether you plan to leave the assistant running around the clock.

The Installer Script is the recommended starting point for anyone installing OpenClaw for the first time. It works on macOS, Linux, and Windows via WSL2, automatically detects your Node.js version (and installs it if missing), and completes setup in under five minutes. This method installs OpenClaw directly on your operating system without any containerization layer, which means lower resource overhead and simpler debugging. The trade-off is that you share your system's Node.js environment with other applications, and keeping OpenClaw running 24/7 requires a process manager like pm2 or a systemd service. Choose this method if you want to experiment locally, test on a Raspberry Pi, or prefer a minimal setup on your Mac Mini.

Docker Compose provides process isolation, reproducible environments, and painless upgrades at the cost of slightly more complexity. The Docker deployment bundles OpenClaw with its own Node.js runtime inside a container, maps the gateway to 127.0.0.1:18789, and persists your configuration through Docker volumes. Updates become a matter of pulling the latest image and restarting the container rather than managing Node.js versions manually. This method is ideal for production VPS deployments, security-conscious operators who want container-level sandboxing, and teams running multiple services on the same server. You will need Docker Engine and Docker Compose installed beforehand, and a basic understanding of port mapping and volume mounts.

Native npm/pnpm Install is a middle ground for developers who already manage Node.js environments professionally. Running npm install -g openclaw (or the pnpm equivalent) gives you complete control over the version, update cadence, and global package location. This approach is best suited for developers who maintain multiple Node.js tools on the same machine and want OpenClaw managed through the same package system. For most users, the installer script is preferable because it handles environment detection automatically.

The following comparison captures the key trade-offs. If you are optimizing for cost, keep in mind that the deployment method itself has minimal impact on API expenses; the real cost driver is which LLM you connect and how frequently the assistant processes messages. For more on that topic, see the detailed strategies for managing OpenClaw API costs.

| Criteria | Installer Script | Docker Compose | npm/pnpm Global |
|---|---|---|---|
| Skill level | Beginner | Intermediate | Developer |
| Setup time | ~5 minutes | ~15 minutes | ~10 minutes |
| Node.js isolation | Shared | Containerized | Shared |
| Always-on support | Requires pm2/systemd | Built-in restart | Requires pm2/systemd |
| Update method | openclaw update | docker compose pull | npm update -g openclaw |
| Best for | Local testing, Raspberry Pi | Production VPS, teams | Developers with existing Node.js workflow |
| Monthly cost | $0 (own hardware) | $5-20 (VPS) | $0-20 (depends on hosting) |

Our recommendation: Start with the installer script on your local machine to confirm everything works. Once you are satisfied, migrate to Docker Compose on a VPS (Hetzner, Fly.io, or a comparable provider) for reliable 24/7 operation. This two-step approach lets you validate your configuration risk-free before committing to a hosted environment.

Installing OpenClaw on macOS and Linux

Installing OpenClaw on macOS or a Linux distribution is the most straightforward path because both operating systems natively support the installer script and the underlying Node.js runtime. The installer detects your platform, checks for Node.js 22 or later, installs it through your system's package manager if necessary, and configures the OpenClaw binary in your PATH. The entire process typically completes in under three minutes on a modern machine with a broadband connection.

Step 1: Verify your Node.js version. Open a terminal and run:

```bash
node --version
```

You need v22.0.0 or later. If the command returns an older version or "command not found," the installer will attempt to install Node.js automatically. However, if you prefer to manage Node.js manually, install it through your platform's recommended method first. On macOS, brew install node@22 works cleanly. On Ubuntu or Debian, the NodeSource repository provides the latest LTS builds:

```bash
curl -fsSL https://deb.nodesource.com/setup_22.x | sudo -E bash -
sudo apt-get install -y nodejs
```
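The version requirement from Step 1 can also be checked from a script, for example before running the installer unattended. The following is a minimal sketch in POSIX shell; the function name `node_version_ok` is made up for this illustration and is not part of the OpenClaw CLI.

```bash
# node_version_ok VERSION [MIN_MAJOR]
# Succeeds when VERSION (e.g. "v22.11.0") has a major number >= MIN_MAJOR (default 22).
# Hypothetical helper for illustration; not part of the OpenClaw CLI.
node_version_ok() {
  min_major="${2:-22}"
  major="${1#v}"          # strip the leading "v"
  major="${major%%.*}"    # keep only the text before the first dot
  case "$major" in
    ''|*[!0-9]*) return 1 ;;   # empty or non-numeric input counts as a failure
  esac
  [ "$major" -ge "$min_major" ]
}

# Report on the Node.js found in PATH, if any.
if command -v node >/dev/null 2>&1; then
  if node_version_ok "$(node --version)"; then
    echo "Node.js $(node --version) meets the v22 minimum"
  else
    echo "Node.js $(node --version) is older than v22"
  fi
else
  echo "Node.js not found"
fi
```

Comparing only the major number is enough here because the stated minimum is v22.0.0.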

Step 2: Run the installer script. This single command downloads and executes the official installer:

```bash
curl -fsSL https://openclaw.ai/install.sh | bash
```

The script performs several actions automatically: it verifies system requirements, downloads the latest OpenClaw release (currently v2026.2.6, released February 7, 2026), installs the openclaw binary, and creates a default configuration directory at ~/.openclaw. On macOS, the installer places the binary in /usr/local/bin/openclaw; on Linux, it uses /usr/local/bin/openclaw or ~/.local/bin/openclaw depending on permissions.
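If you would rather read the installer before it runs, one common alternative to piping `curl` straight into `bash` is to download the script to a file first. The sketch below assumes nothing about the installer's contents; `fetch_installer` and the output filename are illustrative names, not something the OpenClaw project provides.

```bash
# fetch_installer [URL] [OUTPUT_FILE]
# Downloads the installer to a local file instead of executing it directly.
# Hypothetical helper for illustration; the defaults mirror the URL used in this guide.
fetch_installer() {
  url="${1:-https://openclaw.ai/install.sh}"
  out="${2:-./openclaw-install.sh}"
  curl -fsSL "$url" -o "$out" || return 1
  echo "Saved $url to $out"
}

# Typical use (commented out so that nothing is downloaded or executed implicitly):
#   fetch_installer
#   less ./openclaw-install.sh     # review what the script does
#   bash ./openclaw-install.sh     # run it once you are satisfied
```

The end result should match the one-line install; the only difference is the chance to inspect the script in between.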

Step 3: Run the onboarding wizard. After installation completes, the onboarding command walks you through initial configuration:

```bash
openclaw onboard
```

The wizard prompts you for three critical pieces of information: which LLM provider to use (Anthropic Claude, OpenAI GPT, Google Gemini, or a local Ollama model), the API key for that provider, and which messaging platform to connect first (WhatsApp, Telegram, Slack, Discord, Signal, iMessage, Microsoft Teams, or Google Chat). Each selection generates the appropriate environment variables in ~/.openclaw/.env.

Step 4: Verify the installation. Run the built-in diagnostic tool to confirm everything is connected properly:

```bash
openclaw doctor
```

A healthy installation produces output confirming that Node.js meets the minimum version, the configuration file is valid, API keys are reachable, and the messaging bridge is operational. If any check fails, the output includes a specific error message and suggested fix. The most common failure at this stage is an invalid or expired API key — double-check that your key is active in the provider's dashboard before troubleshooting further.

macOS-specific note: On recent versions of macOS (Sequoia and later), the system may prompt you to allow network access for the OpenClaw process. Accept this prompt; OpenClaw needs outbound HTTPS access to reach LLM APIs and messaging platform webhooks. If you are running macOS with a third-party firewall like Little Snitch, create a rule allowing node to access api.anthropic.com, api.openai.com, and generativelanguage.googleapis.com on port 443.

Linux daemon setup: If you want OpenClaw to start automatically on boot and restart after crashes, create a systemd service. This is particularly important for headless servers and Raspberry Pi deployments where you cannot rely on a terminal session staying open:

```bash
sudo tee /etc/systemd/system/openclaw.service > /dev/null <<EOF
[Unit]
Description=OpenClaw AI Assistant
After=network.target

[Service]
Type=simple
User=$USER
ExecStart=/usr/local/bin/openclaw start
Restart=on-failure
RestartSec=10
Environment=HOME=/home/$USER

[Install]
WantedBy=multi-user.target
EOF
sudo systemctl enable openclaw
sudo systemctl start openclaw
```

After enabling the service, verify it is running with systemctl status openclaw. The service logs are accessible through journalctl -u openclaw -f for real-time monitoring.

Installing OpenClaw on Windows WSL2 and via Docker

Windows does not natively support OpenClaw because the underlying Node.js ecosystem and process management tools assume a Unix-like environment. However, Windows Subsystem for Linux version 2 (WSL2) provides a fully functional Linux kernel inside Windows, making OpenClaw installation nearly identical to a native Linux setup. Docker Compose offers an alternative for users who prefer containerized deployments or need to avoid WSL2 entirely.

Windows WSL2 Installation

WSL2 has matured significantly since its introduction and is now the recommended approach for running OpenClaw on Windows machines. If you do not already have WSL2 enabled, the setup requires a single PowerShell command with administrator privileges followed by a system restart.

Step 1: Install WSL2. Open PowerShell as Administrator and run:

```powershell
wsl --install
```

This command enables the WSL2 feature, downloads and installs the default Ubuntu distribution, and configures the virtual machine. Restart your computer when prompted. After rebooting, Ubuntu will launch automatically to complete its initial setup, asking you to create a Linux username and password.

Step 2: Update packages and install Node.js. Inside the WSL2 Ubuntu terminal:

```bash
sudo apt update && sudo apt upgrade -y
curl -fsSL https://deb.nodesource.com/setup_22.x | sudo -E bash -
sudo apt-get install -y nodejs
```

Step 3: Install OpenClaw. The same installer script used for native Linux works inside WSL2 without modification:

```bash
curl -fsSL https://openclaw.ai/install.sh | bash
openclaw onboard
```

The onboarding wizard behaves identically to the macOS/Linux version described in the previous section. One WSL2-specific consideration is that if you need to access the OpenClaw dashboard from your Windows browser, the WSL2 networking layer automatically maps localhost ports, so navigating to http://localhost:3000 in Edge or Chrome will reach the dashboard running inside WSL2.

Docker Compose Installation

Docker Compose is the preferred deployment method for production environments, teams managing multiple services, and anyone who values container-level isolation. The OpenClaw project provides an official Docker image that bundles the correct Node.js version and all dependencies, eliminating version conflicts and simplifying upgrades to a single docker compose pull command.

Step 1: Install Docker. If Docker is not already present on your system, install it using the official convenience script (for Linux and WSL2) or Docker Desktop (for macOS and Windows):

```bash
# Linux / WSL2
curl -fsSL https://get.docker.com | sh
sudo usermod -aG docker $USER
# Log out and back in for group changes to take effect
```

Step 2: Create a project directory and configuration file. OpenClaw's Docker setup uses a docker-compose.yml file that defines the container, port mappings, volume mounts, and restart policy:

```bash
mkdir -p ~/openclaw-docker && cd ~/openclaw-docker
cat > docker-compose.yml <<EOF
version: '3.8'
services:
  openclaw:
    image: openclaw/openclaw:latest
    container_name: openclaw
    restart: unless-stopped
    ports:
      - "127.0.0.1:18789:18789"
    volumes:
      - ./data:/app/data
      - ./.env:/app/.env
    environment:
      - NODE_ENV=production
EOF
```

The port mapping 127.0.0.1:18789:18789 restricts the gateway to localhost access only, which is a security best practice for production deployments. The ./data volume persists conversation history, configuration, and logs outside the container, ensuring nothing is lost when you update or recreate the container.

Step 3: Create your environment file. Before starting the container, you need a .env file with your API keys and messaging platform tokens. The environment variable reference in the next section covers all available options; at minimum, you need:

```bash
cat > .env <<EOF
OPENAI_API_KEY=sk-your-key-here
TELEGRAM_BOT_TOKEN=your-bot-token-here
EOF
```

Step 4: Start the container. Launch OpenClaw in detached mode:

```bash
docker compose up -d
```

Verify the container is running and healthy:

```bash
docker compose ps
docker compose logs --tail 50
```

The logs should show OpenClaw initializing, connecting to your LLM provider, and registering with the messaging platform. If you see connection errors, check your API keys and ensure outbound HTTPS access is not blocked by a firewall.

Docker networking note: If you run other services behind a reverse proxy like Nginx or Caddy, you can route external traffic to OpenClaw's gateway port. However, for most personal deployments, the localhost binding is sufficient, since platforms such as Telegram and Discord are reached through connections that the container opens outbound (long polling or WebSockets) rather than through inbound requests. If you do need external access (for example, to expose the dashboard to other devices on your network), change the port mapping to 0.0.0.0:18789:18789, but be aware that this makes the gateway accessible to anyone who can reach your server's IP address, so protect it with a reverse proxy that enforces authentication.
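To confirm which address the gateway is actually published on, `docker compose port openclaw 18789` prints the host binding for the service defined earlier. The sketch below classifies such a binding string as loopback-only or not; `is_loopback_binding` is an illustrative helper name, not an OpenClaw or Docker command.

```bash
# is_loopback_binding HOST:PORT
# Succeeds when the host part of a published binding is a loopback address.
# Hypothetical helper for illustration.
is_loopback_binding() {
  case "$1" in
    127.*:*|localhost:*|"[::1]":*) return 0 ;;   # reachable only from the host itself
    *) return 1 ;;                               # e.g. 0.0.0.0:18789 is reachable externally
  esac
}

# Typical use from the directory that holds docker-compose.yml
# (commented out because it needs a running container):
#   binding=$(docker compose port openclaw 18789)
#   if is_loopback_binding "$binding"; then
#     echo "Gateway is bound to localhost only ($binding)"
#   else
#     echo "Warning: gateway is exposed on $binding"
#   fi
```

Running a check like this after each change to the port mapping is a cheap way to catch an accidental 0.0.0.0 exposure.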

Docker resource limits: On VPS instances with limited RAM, you can constrain the container's memory usage to prevent OpenClaw from competing with other services:

```yaml
services:
  openclaw:
    # ... other settings
    deploy:
      resources:
        limits:
          memory: 512M
          cpus: '1.0'
```

This configuration caps OpenClaw at 512 MB of RAM and one CPU core, which is more than sufficient for personal use handling up to 200-300 messages per day across all connected platforms.

Hardware Requirements and Performance Optimization

OpenClaw itself is lightweight — the Node.js process typically uses 150-300 MB of RAM and negligible CPU during idle periods. The real hardware question is not "can my machine run OpenClaw" but rather "can my machine run it reliably around the clock?" The answer depends on your deployment scenario, traffic volume, and whether you run additional services alongside the assistant.

The following hardware comparison covers the four most common deployment targets based on real-world usage patterns reported across OpenClaw community forums and deployment guides. Each option represents a different balance of cost, performance, and reliability.

| Specification | Raspberry Pi 5 (8 GB) | Mac Mini M2 | VPS Basic (2 vCPU) | VPS Recommended (4 vCPU) |
|---|---|---|---|---|
| RAM | 8 GB | 8-16 GB | 2-4 GB | 4-8 GB |
| CPU | Cortex-A76 (4 cores) | Apple M2 (8 cores) | 2 shared vCPU | 4 dedicated vCPU |
| Storage | microSD/NVMe | 256+ GB SSD | 20-40 GB SSD | 40-80 GB SSD |
| Monthly cost | $0 (one-time ~$80) | $0 (one-time ~$600) | $4-7/mo | $12-24/mo |
| Always-on | Yes (with UPS) | Yes (with UPS) | Yes (99.9% SLA) | Yes (99.9% SLA) |
| Network | Home internet (variable) | Home internet (variable) | Datacenter (stable) | Datacenter (stable) |
| Best for | Hobbyists, home lab | Power users, multi-service | Budget always-on | Production, heavy traffic |

Raspberry Pi 5 has become a popular choice since OpenClaw's Node.js process runs comfortably within 300 MB of RAM even under sustained messaging load. The 8 GB model provides ample headroom for running OpenClaw alongside Pi-hole, Home Assistant, or other lightweight services. The primary risk with Raspberry Pi deployment is power reliability — an unexpected outage can corrupt the SD card. Investing in a USB-C UPS (approximately $25-40) and using an NVMe SSD via the M.2 HAT instead of a microSD card significantly improves reliability.

Mac Mini M2 is arguably the most cost-effective option for users who already own one or are considering a dedicated home server. The Apple Silicon chip delivers exceptional single-threaded performance for Node.js workloads while consuming minimal power (idle draw under 7 watts). Running OpenClaw on a Mac Mini combined with a local Ollama model means you can have a completely self-hosted AI assistant with zero ongoing API costs. The downside is that home internet connections may have variable upload speeds and occasional outages that affect webhook reliability.

VPS hosting eliminates hardware management entirely and provides datacenter-grade network reliability. For most personal users, a basic 2 vCPU / 4 GB RAM instance from Hetzner (~$5/month), Fly.io, or a comparable provider handles OpenClaw's workload comfortably. The 4 GB RAM recommendation accounts for OpenClaw plus the operating system overhead plus a small buffer for log processing and temporary spikes. For teams or heavy-traffic deployments (multiple messaging platforms, frequent conversations), upgrade to 4 dedicated vCPUs and 8 GB RAM.

Cost-performance sweet spot: Based on the deployment patterns observed across the OpenClaw community, the most popular production configuration is a Hetzner CX22 instance (2 shared vCPU, 4 GB RAM, 40 GB SSD) running Docker Compose, which costs approximately EUR 4.35 per month ($4.70 USD). This setup handles the workload of a personal assistant serving one to three active messaging platforms comfortably, and the European datacenter location provides excellent latency for users across Europe, Africa, and western Asia. For US-based users, Fly.io's free tier or DigitalOcean's $6/month droplet offers comparable performance with North American proximity. When evaluating VPS providers, prioritize consistent network throughput over raw CPU speed — OpenClaw's performance bottleneck is almost always the round-trip time to LLM APIs rather than local computation.

Performance tuning tips: Regardless of your hardware choice, three configuration adjustments can improve OpenClaw's responsiveness. First, set the NODE_OPTIONS environment variable to --max-old-space-size=512 to cap Node.js heap usage and prevent memory leaks from degrading system performance over time. Second, enable response streaming in your LLM provider configuration so that the assistant begins delivering messages before the full response generates — this dramatically improves perceived latency for long responses. Third, configure log rotation (either through the built-in openclaw gateway logs command with the --rotate flag or via logrotate on Linux) to prevent log files from consuming disk space over weeks of continuous operation. A standard log rotation policy that keeps seven days of compressed logs adds minimal disk overhead while providing enough history for debugging any issues that arise.
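As a rough illustration of the first tip, the heap cap can be exported in the shell that launches OpenClaw. `NODE_OPTIONS` and `--max-old-space-size` are standard Node.js settings; where the variable should live for your deployment (a systemd `Environment=` line, the Compose `environment` list, or the `.env` file) is an assumption to verify against your own setup.

```bash
# Cap the V8 old-generation heap at 512 MB for any Node.js process started from this shell.
export NODE_OPTIONS="--max-old-space-size=512"

# Optional sanity check: ask Node.js for the heap limit it actually applied.
# The reported figure is usually somewhat above the configured value.
if command -v node >/dev/null 2>&1; then
  node -e 'console.log(Math.round(require("v8").getHeapStatistics().heap_size_limit / 1048576) + " MB heap limit")'
fi
```

If the process is started by systemd or Docker rather than an interactive shell, the export has to be placed in that service's environment instead.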

Environment Variables and API Key Configuration

OpenClaw's behavior is controlled almost entirely through environment variables stored in ~/.openclaw/.env (for native installations) or the .env file mounted into your Docker container. Understanding these variables is essential because they determine which LLM powers your assistant, which messaging platforms it connects to, and how it handles security and rate limiting.

LLM Provider Configuration

OpenClaw supports multiple LLM providers simultaneously, meaning you can configure Claude as your primary model and GPT as a fallback, or route different conversation types to different models. At minimum, you need one provider key set.

```bash
# Anthropic Claude (recommended for conversational quality)
ANTHROPIC_API_KEY=sk-ant-your-key-here
# OpenAI GPT
OPENAI_API_KEY=sk-your-key-here
# Google Gemini
GOOGLE_AI_API_KEY=your-google-key-here
# Local Ollama (no API key needed, just the endpoint)
OLLAMA_BASE_URL=http://localhost:11434
```
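Since at least one provider entry must be set, a quick pre-flight check on the `.env` file can catch an empty configuration before the assistant starts. This is a minimal sketch that looks only for the four variable names listed above; `has_provider_key` and the `OPENCLAW_ENV_FILE` override are illustrative names, not OpenClaw features.

```bash
# has_provider_key ENV_FILE
# Succeeds when ENV_FILE defines at least one LLM provider variable with a non-empty value.
# Hypothetical helper for illustration; it checks only the variable names shown in this guide.
has_provider_key() {
  grep -Eq '^(ANTHROPIC_API_KEY|OPENAI_API_KEY|GOOGLE_AI_API_KEY|OLLAMA_BASE_URL)=.+' "$1"
}

# OPENCLAW_ENV_FILE is a made-up override for this example; the default is the native install path.
env_file="${OPENCLAW_ENV_FILE:-$HOME/.openclaw/.env}"
if [ -f "$env_file" ]; then
  if has_provider_key "$env_file"; then
    echo "At least one LLM provider is configured in $env_file"
  else
    echo "No LLM provider configured in $env_file"
  fi
fi
```

The check only confirms that a value is present; `openclaw doctor` remains the way to confirm that a key is actually valid.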

For a deep walkthrough of configuring each provider, including model selection and parameter tuning, see the comprehensive guide to configuring LLM providers in OpenClaw. If you want to connect custom or self-hosted models, the configuring custom models in OpenClaw guide covers endpoint configuration and model aliasing.

API Gateway Alternative: If you prefer accessing multiple LLM providers through a single unified endpoint rather than managing separate API keys, services like laozhang.ai offer an API gateway that consolidates Claude, GPT, Gemini, and other models behind one key. This simplifies your .env file to a single OPENAI_API_KEY pointed at the gateway URL and can reduce costs through volume pricing.

Messaging Platform Tokens

Each messaging platform requires its own authentication token. OpenClaw supports eight platforms, and you can enable as many as you need simultaneously:

```bash
# Telegram (most common for personal use)
TELEGRAM_BOT_TOKEN=123456:ABC-your-bot-token
# WhatsApp (requires Meta Business API)
WHATSAPP_TOKEN=your-whatsapp-token
WHATSAPP_PHONE_NUMBER_ID=your-phone-id
# Slack
SLACK_BOT_TOKEN=xoxb-your-slack-token
SLACK_SIGNING_SECRET=your-signing-secret
# Discord
DISCORD_BOT_TOKEN=your-discord-token
```

For Telegram, create a bot through BotFather (@BotFather on Telegram), copy the token, and paste it into the TELEGRAM_BOT_TOKEN variable. For Slack and Discord, you need to create an application in each platform's developer portal, configure OAuth scopes, and extract the bot token. The OpenClaw documentation provides platform-specific setup guides linked from the openclaw onboard wizard.

Security Best Practices for Credentials

Your .env file contains sensitive credentials that, if exposed, grant access to your LLM accounts and messaging platforms. Treat this file with the same care as SSH private keys or database passwords. On Linux servers, restrict file permissions immediately after creation:

```bash
chmod 600 ~/.openclaw/.env
```

Never commit .env files to version control. If you use a configuration management tool like Ansible for multi-server deployments, store secrets in an encrypted vault rather than plaintext playbooks. For Docker deployments, consider using Docker secrets or a secrets manager rather than bind-mounting a plaintext .env file, especially in team environments where multiple people have access to the Docker host.
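The permission rule above is easy to verify automatically, for instance from a cron job or a deployment script. The sketch below uses the POSIX `find -perm` test to check for exactly mode 600; `env_file_is_private` is an illustrative helper name.

```bash
# env_file_is_private FILE
# Succeeds only when FILE is a regular file whose permission bits are exactly 600
# (read and write for the owner, nothing for group or others).
# Hypothetical helper for illustration.
env_file_is_private() {
  [ -n "$(find "$1" -prune -type f -perm 600 -print 2>/dev/null)" ]
}

# Typical use:
#   env_file_is_private ~/.openclaw/.env || echo "Tighten permissions: chmod 600 ~/.openclaw/.env"
```

An exact match on 600 is deliberately strict; relax the test if your setup legitimately needs a different mode.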

Rate Limiting and Cost Control

OpenClaw processes every incoming message through your LLM provider, which means a busy Telegram group or an active Discord channel can generate significant API costs quickly. Without rate limits, a single active user sending 100 messages per day at an average of 500 tokens per response could generate $3-5 per day in API costs with Claude or GPT-4-class models. Configure rate limits in your environment to prevent runaway spending:

```bash
# Maximum messages per user per hour
RATE_LIMIT_PER_USER=30
# Maximum total API spend per day (in dollars)
DAILY_COST_LIMIT=5.00
# Model selection (controls cost per message)
# Use cheaper models for casual chat, premium for complex tasks
DEFAULT_MODEL=claude-3-haiku
```

These limits provide a safety net while you calibrate expected usage. Monitoring actual costs over the first week of operation gives you the data to set permanent limits that balance accessibility with budget control. A practical strategy is to start with a conservative daily limit ($2-3), observe actual usage patterns through the OpenClaw dashboard, and adjust upward based on real data rather than estimates.
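To pick a sensible starting value for the daily limit, it can help to do the arithmetic explicitly. The sketch below estimates daily spend from message volume and token counts. The per-token prices and the 8,000 input tokens per message (conversation history resent with each request) are placeholder assumptions for illustration, not figures from any provider's price list.

```bash
# estimate_daily_cost MESSAGES IN_TOKENS OUT_TOKENS IN_PRICE OUT_PRICE
# IN_TOKENS and OUT_TOKENS are averages per message; prices are dollars per million tokens.
# Hypothetical helper for illustration.
estimate_daily_cost() {
  awk -v n="$1" -v tin="$2" -v tout="$3" -v pin="$4" -v pout="$5" \
    'BEGIN { printf "%.2f\n", n * (tin * pin + tout * pout) / 1000000 }'
}

# 100 messages/day, 8000 input and 500 output tokens each,
# at placeholder prices of $3 (input) and $15 (output) per million tokens.
estimate_daily_cost 100 8000 500 3 15   # prints 3.15
```

With these placeholder inputs, input tokens account for most of the total, so the amount of conversation history sent with each request matters at least as much as response length.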

Advanced configuration tips: OpenClaw supports model routing, which lets you assign different LLM models to different conversation types or users. For example, you can route simple Q&A to a cheaper model like Claude Haiku while directing complex analysis requests to Claude Sonnet or GPT-4o. This hybrid approach can reduce API costs by 60-70% compared to using a premium model for every message, without noticeably degrading the experience for routine conversations.

First Run, Testing, and Troubleshooting

After installation and configuration, the first run is where you confirm that every component — Node.js runtime, LLM provider, messaging bridge, and web dashboard — functions correctly as an integrated system. A methodical verification process saves hours of debugging later.

Step 1: Run the diagnostic suite. The openclaw doctor command performs a comprehensive health check:

```bash
openclaw doctor
```

A fully healthy system produces output resembling:

```
[OK] Node.js v22.11.0 (minimum: v22.0.0)
[OK] OpenClaw v2026.2.6
[OK] Configuration file found at ~/.openclaw/.env
[OK] Anthropic API key valid (Claude model accessible)
[OK] Telegram bot connected (username: @YourBotName)
[OK] Gateway listening on 127.0.0.1:18789
```

If any check fails, the output identifies the specific issue and suggests a resolution. Address failures in order from top to bottom, since later checks often depend on earlier ones (for example, the Telegram connection check will fail if the configuration file cannot be read).
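Because failures are best handled from the top down, a small filter that surfaces only the first failing check can be handy in scripts. This sketch assumes that failing lines carry a bracketed uppercase tag other than `[OK]` (for example `[FAIL]`); the guide only shows the healthy output, so the exact tag is an assumption, and `first_failed_check` is an illustrative name.

```bash
# first_failed_check
# Reads doctor-style output on stdin, prints the first bracket-tagged line whose tag
# is not [OK], and returns 1 if such a line exists (0 if every tagged line is [OK]).
# Hypothetical helper; the non-OK tag format is an assumption.
first_failed_check() {
  failed=$(grep -E '^\[[A-Z]+\]' | grep -v '^\[OK\]' | head -n 1)
  if [ -n "$failed" ]; then
    printf '%s\n' "$failed"
    return 1
  fi
  return 0
}

# Typical use (commented out because it needs an OpenClaw installation):
#   openclaw doctor | first_failed_check || echo "Fix the check above first, then rerun"
```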

Step 2: Send a test message. Open your configured messaging platform (Telegram, WhatsApp, Discord, or whichever you chose during onboarding) and send a simple message to your bot — something like "Hello, are you working?" A working installation responds within 2-5 seconds with an LLM-generated reply. The response time depends primarily on your LLM provider's latency: Claude and GPT typically respond in 1-3 seconds for short messages, while local Ollama models vary based on your hardware. If the message is delivered but no response appears within 30 seconds, the issue is almost always the LLM API connection rather than the messaging bridge — check your API key validity and network connectivity before investigating the messaging platform configuration.

Step 2.5: Test multi-turn conversation. Send two or three follow-up messages to verify that OpenClaw maintains conversation context. Ask it to remember something from your first message, then reference it in a later message. This confirms that the conversation history system is working correctly and that the LLM is receiving the full context window rather than treating each message as an isolated query.

Step 3: Open the web dashboard. The dashboard provides a visual overview of system status, recent conversations, and configuration:

```bash
openclaw dashboard
```

This opens your default browser to the local dashboard interface. From the dashboard, you can monitor active conversations, adjust model parameters, and review usage statistics.

Common errors and their solutions:

Error: "ANTHROPIC_API_KEY is invalid or expired" — This is the single most common issue reported by new users. Verify that your key starts with sk-ant- and has not been revoked in the Anthropic console. If you recently generated the key, wait 60 seconds for propagation before retrying. For detailed troubleshooting of Anthropic key issues, see troubleshooting Anthropic API key configuration errors.

Error: "EACCES permission denied" during installation — The installer tried to write to a directory that requires elevated permissions. On Linux, either run the installer with sudo or change ownership of the target directory. On macOS, the Homebrew prefix (/usr/local/) usually has correct permissions, but if you installed Node.js through a different method, ~/.local/bin/ may need creation.

Error: "Port 18789 already in use" — Another process occupies the gateway port. Identify and stop it with:

```bash
lsof -i :18789
kill -9 <PID>
```

Then restart OpenClaw. If the conflict is persistent (another service legitimately uses that port), configure OpenClaw to use a different port through the GATEWAY_PORT environment variable.
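Since `kill -9` gives the other process no chance to clean up, a gentler variant is to send SIGTERM first and escalate only if the process is still alive after a grace period. The following is a generic shell sketch of that pattern; `stop_gracefully` is an illustrative name and is unrelated to the OpenClaw CLI.

```bash
# stop_gracefully PID [TIMEOUT_SECONDS]
# Sends SIGTERM, polls for up to TIMEOUT_SECONDS (default 5), then sends SIGKILL
# if the process still exists. Hypothetical helper for illustration.
stop_gracefully() {
  pid="$1"
  timeout="${2:-5}"
  kill "$pid" 2>/dev/null || return 0        # already gone, or not ours to signal
  while [ "$timeout" -gt 0 ]; do
    kill -0 "$pid" 2>/dev/null || return 0   # process has exited
    sleep 1
    timeout=$((timeout - 1))
  done
  kill -9 "$pid" 2>/dev/null                 # last resort
  return 0
}

# Typical use, with the PID reported by lsof:
#   stop_gracefully "$(lsof -t -i :18789)"
```

The `lsof -t` form prints bare PIDs, which makes it convenient to feed into a helper like this when only one process holds the port.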

Error: "429 Too Many Requests" — Your LLM provider is rate-limiting your API calls. This typically happens during initial testing when you send many messages in quick succession. Wait a few minutes and retry. For persistent rate limit issues, see how to resolve rate limit errors in OpenClaw.

Error: "WebSocket connection failed" (Telegram/Discord) — This usually indicates a network-level block on outbound WebSocket connections. Corporate firewalls, restrictive VPN configurations, and some ISPs in certain regions block WebSocket traffic. Test from a different network or configure OpenClaw to use long-polling mode as a fallback.

Diagnostic commands reference: When standard troubleshooting does not resolve an issue, these commands provide deeper visibility:

```bash
# Real-time log output
openclaw gateway logs
# System status summary
openclaw status
# Check connectivity to all configured providers
openclaw doctor --verbose
# Reset configuration (last resort)
openclaw reset-config
```

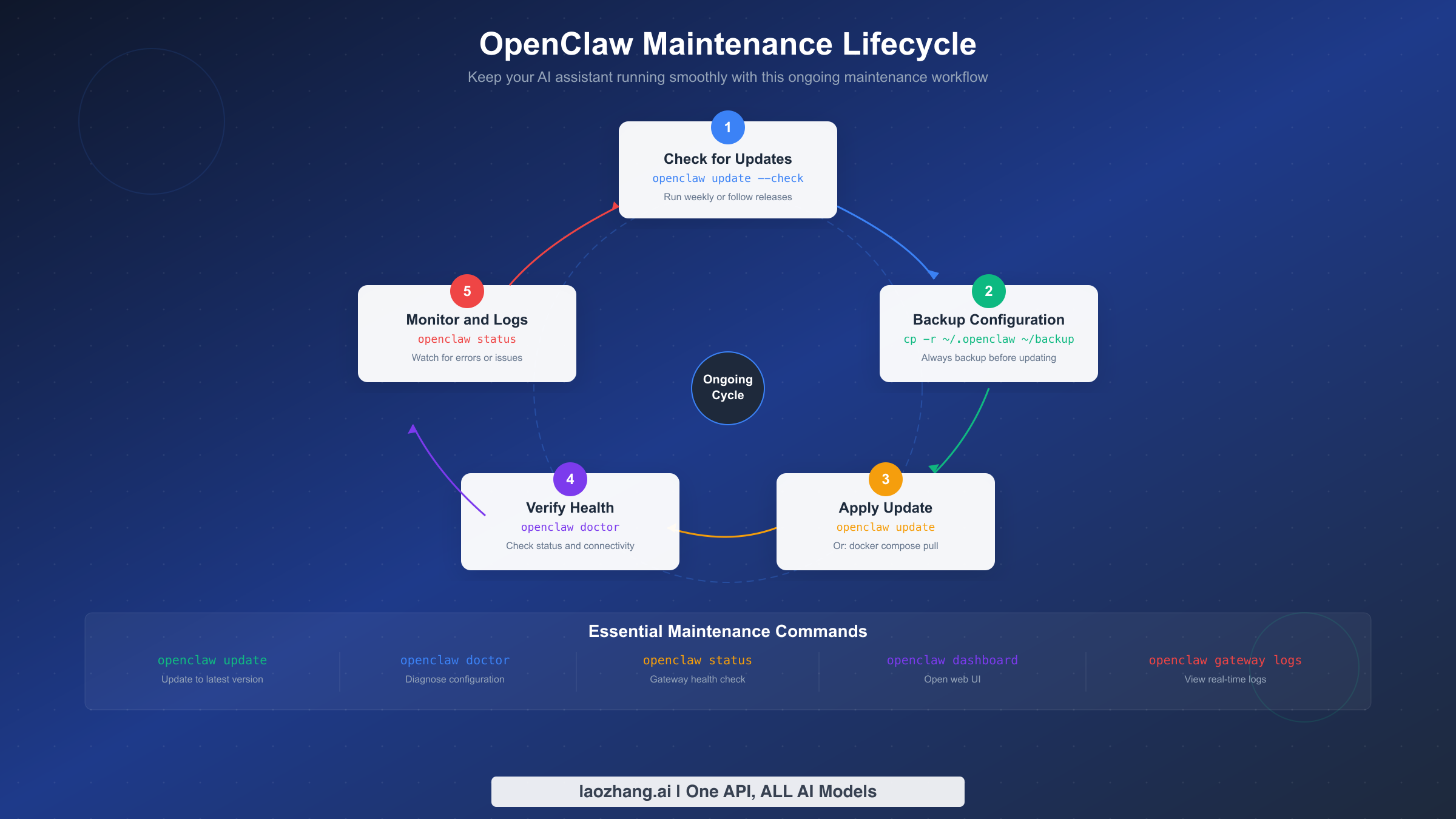

Upgrading, Backup, and Long-Term Maintenance

Installation is a one-time event; maintenance is ongoing. OpenClaw releases updates frequently (the project shipped v2026.2.6 on February 7, 2026, just one day before this guide), and keeping your installation current ensures you receive security patches, performance improvements, and new features. Equally important is establishing a backup routine before any update so that a failed upgrade never results in lost configuration or conversation history.

Checking for updates: OpenClaw includes a built-in update checker that compares your installed version against the latest release:

```bash
openclaw update --check
```

This command queries the GitHub releases API and reports whether a newer version is available. Running this weekly, or subscribing to the OpenClaw GitHub releases feed, keeps you informed without requiring manual checks.

Backup procedure: Before applying any update, back up your configuration and data. The critical files live in two locations:

```bash
# Native installation backup
cp -r ~/.openclaw ~/openclaw-backup-$(date +%Y%m%d)
# Docker installation backup
cp -r ~/openclaw-docker/data ~/openclaw-data-backup-$(date +%Y%m%d)
cp ~/openclaw-docker/.env ~/openclaw-env-backup-$(date +%Y%m%d)
```

The backup captures your .env file (API keys and tokens), conversation history, custom configurations, and any plugins or extensions you have installed. Store at least one backup generation off-machine — a USB drive, cloud storage, or a different server — so that a hardware failure does not take both your installation and its backup.
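For off-machine storage, a single compressed archive is often easier to move around than a copied directory. The sketch below packs a directory into a timestamped `tar.gz` and restricts its permissions, since the archive contains the `.env` file; `make_backup` and the destination path in the usage comment are illustrative names.

```bash
# make_backup SOURCE_DIR DEST_DIR
# Creates DEST_DIR/openclaw-backup-<timestamp>.tar.gz from SOURCE_DIR and prints its path.
# Hypothetical helper for illustration.
make_backup() {
  src="$1"
  dest="$2"
  archive="$dest/openclaw-backup-$(date +%Y%m%d-%H%M%S).tar.gz"
  mkdir -p "$dest" || return 1
  # -C switches to the parent directory so the archive stores a relative path.
  tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
  chmod 600 "$archive"   # the archive holds API keys, so keep it owner-only
  printf '%s\n' "$archive"
}

# Typical use:
#   make_backup "$HOME/.openclaw" "$HOME/backups"
```

The printed path can then be handed to whatever tool copies the file to a USB drive, cloud storage, or another server.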

Applying updates: The update command varies by installation method:

```bash
# Installer script method
openclaw update
# npm global method
npm update -g openclaw
# Docker Compose method
cd ~/openclaw-docker
docker compose pull
docker compose up -d
```

For Docker deployments, the pull command downloads the latest image, and up -d recreates the container with the new image while preserving your data volume. This makes Docker the smoothest upgrade path because there is zero risk of Node.js version conflicts or dependency issues — the new image bundles everything it needs.

Rollback procedure: If an update introduces problems, restoring your backup is straightforward:

bash# Native installation rollback openclaw stop rm -rf ~/.openclaw mv ~/openclaw-backup-YYYYMMDD ~/.openclaw openclaw start # Docker rollback (to previous image) docker compose down docker compose pull openclaw/openclaw:v2026.2.5 # specify previous version docker compose up -d

For Docker, you can also pin a specific version in your docker-compose.yml by changing image: openclaw/openclaw:latest to image: openclaw/openclaw:v2026.2.5, which prevents accidental upgrades until you are ready.
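Editing the image tag by hand works, but the change can also be scripted. The sketch below assumes the compose file contains a line of the form `image: openclaw/openclaw:<tag>` as described above; the function name `pin_image` is hypothetical, and the original file is kept as a `.bak` copy.

```bash
#!/usr/bin/env bash
# Hypothetical helper: rewrite the openclaw/openclaw image tag in a compose file.

# pin_image COMPOSE_FILE TAG
pin_image() {
  local file="$1" tag="$2" tmp
  [ -f "$file" ] || return 1
  tmp="$(mktemp)" || return 1
  # Replace whatever tag currently follows openclaw/openclaw: with TAG.
  # Writing to a temp file avoids the GNU/BSD differences of sed -i.
  sed -E "s|(image:[[:space:]]*openclaw/openclaw):[^[:space:]]+|\1:${tag}|" \
      "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cp "$file" "$file.bak"      # keep the previous version for reference
  cat "$tmp" > "$file"        # overwrite in place, preserving file permissions
  rm -f "$tmp"
}
```

Running `pin_image ~/openclaw-docker/docker-compose.yml v2026.2.5` and then `docker compose pull && docker compose up -d` would move the deployment to that version; passing `latest` reverses the pin.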

Ongoing monitoring: A healthy maintenance routine includes periodic checks beyond just updating. The following commands form a lightweight monitoring workflow that catches issues before they become outages:

```bash
# Quick health check (run daily or via cron)
openclaw status

# Check gateway connectivity and API key validity
openclaw doctor

# Review recent logs for errors or warnings
openclaw gateway logs --tail 100

# Open the dashboard for a visual status overview
openclaw dashboard
```

For headless servers without a desktop browser, you can forward the dashboard port through SSH (ssh -L 3000:localhost:3000 user@your-server) and access it from your local machine.
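To run the daily health check from cron, a thin wrapper that records each result makes failures easy to spot later. This is a sketch under assumptions: the function name `run_check` and the log format are invented here, and it presumes the wrapped command signals failure through a non-zero exit code, which has not been verified for `openclaw status`.

```bash
#!/usr/bin/env bash
# Hypothetical cron wrapper: run a command, append OK/FAIL to a log,
# and return the command's exit code.

# run_check LOGFILE NAME COMMAND [ARGS...]
run_check() {
  local log="$1" name="$2" rc=0
  shift 2
  # Capture the exit code without aborting under `set -e`.
  "$@" >/dev/null 2>&1 || rc=$?
  if [ "$rc" -eq 0 ]; then
    printf '%s OK %s\n' "$(date '+%Y-%m-%dT%H:%M:%S')" "$name" >> "$log"
  else
    printf '%s FAIL %s (exit %s)\n' "$(date '+%Y-%m-%dT%H:%M:%S')" "$name" "$rc" >> "$log"
  fi
  return "$rc"
}
```

A script that sources this function could call `run_check "$HOME/openclaw-health.log" status openclaw status` and be scheduled with a crontab line such as `0 8 * * * /path/to/health.sh`, where both the log path and the script path are placeholders.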

Log management: OpenClaw generates logs continuously during operation. Without rotation, these logs can consume significant disk space over months. On Linux, configure logrotate to manage OpenClaw's log files automatically:

```bash
sudo tee /etc/logrotate.d/openclaw > /dev/null <<EOF
/var/log/openclaw/*.log {
    daily
    rotate 7
    compress
    missingok
    notifempty
}
EOF
```

For Docker deployments, Docker's built-in log driver handles rotation. Add the following to your docker-compose.yml under the OpenClaw service to limit log size:

```yaml
logging:
  driver: json-file
  options:
    max-size: "10m"
    max-file: "3"
```
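On a native installation, it can be worth confirming that rotation is actually keeping the log directory small. The check below is a rough sketch: the function name `logs_within_limit` and the idea of a megabyte threshold are assumptions for illustration, not an OpenClaw feature.

```bash
#!/usr/bin/env bash
# Hypothetical check: succeed if a directory uses no more than LIMIT_MB megabytes.

# logs_within_limit DIR LIMIT_MB
# Returns 0 if within the limit, 1 if over it, 2 if DIR cannot be measured.
logs_within_limit() {
  local dir="$1" limit_mb="$2" used_kb
  # du -sk prints the total size in kilobytes followed by the path.
  used_kb="$(du -sk "$dir" 2>/dev/null | awk '{print $1}')"
  [ -n "$used_kb" ] || return 2
  [ "$used_kb" -le $((limit_mb * 1024)) ]
}
```

For example, `logs_within_limit /var/log/openclaw 200 || echo "OpenClaw logs exceed 200 MB"` could run alongside the other periodic checks. To preview what logrotate would do without changing any files, `logrotate -d /etc/logrotate.d/openclaw` runs it in debug mode.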

Security maintenance: Periodically rotate your API keys and messaging tokens, especially if you suspect any may have been exposed. Update the keys in your .env file and restart OpenClaw. Additionally, keep your host operating system and Docker engine updated to receive security patches that protect the platform underlying OpenClaw itself.
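Replacing a rotated key in the .env file can be done without opening an editor. The following is a minimal sketch assuming the file uses plain `KEY=value` lines; the function name `set_env_var` and the variable name `EXAMPLE_API_KEY` are placeholders, so substitute the variable names your own .env file uses.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set KEY=value in an env file, replacing any existing
# assignment for KEY, and restrict the file to owner read/write.

# set_env_var FILE KEY VALUE
set_env_var() {
  local file="$1" key="$2" value="$3" tmp
  [ -f "$file" ] || : > "$file"          # create the file if it is missing
  tmp="$(mktemp)" || return 1
  # Copy every line except an existing assignment for KEY.
  # grep exits 1 when nothing is left to print, which is not an error here.
  grep -v "^${key}=" "$file" > "$tmp" || true
  printf '%s=%s\n' "$key" "$value" >> "$tmp"
  cat "$tmp" > "$file"
  rm -f "$tmp"
  chmod 600 "$file"                      # secrets should not be world-readable
}
```

After `set_env_var ~/openclaw-docker/.env EXAMPLE_API_KEY "new-value"`, restart OpenClaw so the new key is picked up. Note that the value passed on the command line may end up in shell history.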

Summary and Next Steps

You now have a complete roadmap for deploying OpenClaw on any platform, from a five-minute local install to a production-grade Docker deployment on a VPS. The key decisions come down to three factors: your hardware situation (own machine vs. rented VPS), your comfort level with containerization (native install vs. Docker), and your availability requirements (occasional use vs. always-on).

For most users, the optimal path is to start locally with the installer script, validate your API keys and messaging platform connections, and then migrate to a Docker Compose deployment on an affordable VPS like Hetzner or Fly.io once you have confirmed the assistant works the way you want. This two-phase approach minimizes wasted time and money while giving you a production-quality setup.

What to do next:

After your installation is running, the natural next steps involve deepening your LLM configuration and optimizing costs. The guide to configuring LLM providers in OpenClaw walks you through switching between Claude, GPT, Gemini, and local models based on task type and budget constraints. For users operating on a tight API budget, the guide to managing OpenClaw API costs covers rate limiting, model routing, and cost monitoring techniques that keep spending predictable.

Recommended exploration path: Once the basics are working, consider these enhancements in order of impact. First, connect a second messaging platform: if you started with Telegram, adding WhatsApp or Discord takes only a few minutes and makes the assistant available across more of your communication channels. Second, experiment with model routing by assigning a cheaper model to casual conversations and a premium model to complex tasks; depending on your traffic mix, this optimization alone can cut API costs by more than half. Third, explore the plugin ecosystem through the OpenClaw dashboard; community-built plugins add capabilities like web search, image generation, document analysis, and calendar integration without requiring code changes.

Keeping up with the project: OpenClaw's development pace is remarkable — the project shipped over 40 releases in 2025 alone, and the v2026.x series continues that cadence. Subscribe to the GitHub releases feed (github.com/openclaw/openclaw/releases) to receive notifications when new versions drop. Major version bumps occasionally introduce breaking changes, so reading the release notes before updating is a habit worth building. The OpenClaw Discord server and GitHub Discussions are the best venues for getting help from other users and the core development team.

OpenClaw's open-source community is active and welcoming. Whether you are automating customer support, building a personal research assistant, or experimenting with multi-model conversations, the foundation you have built today scales to meet those ambitions. The hardest part — choosing a method and getting the first message through — is behind you.