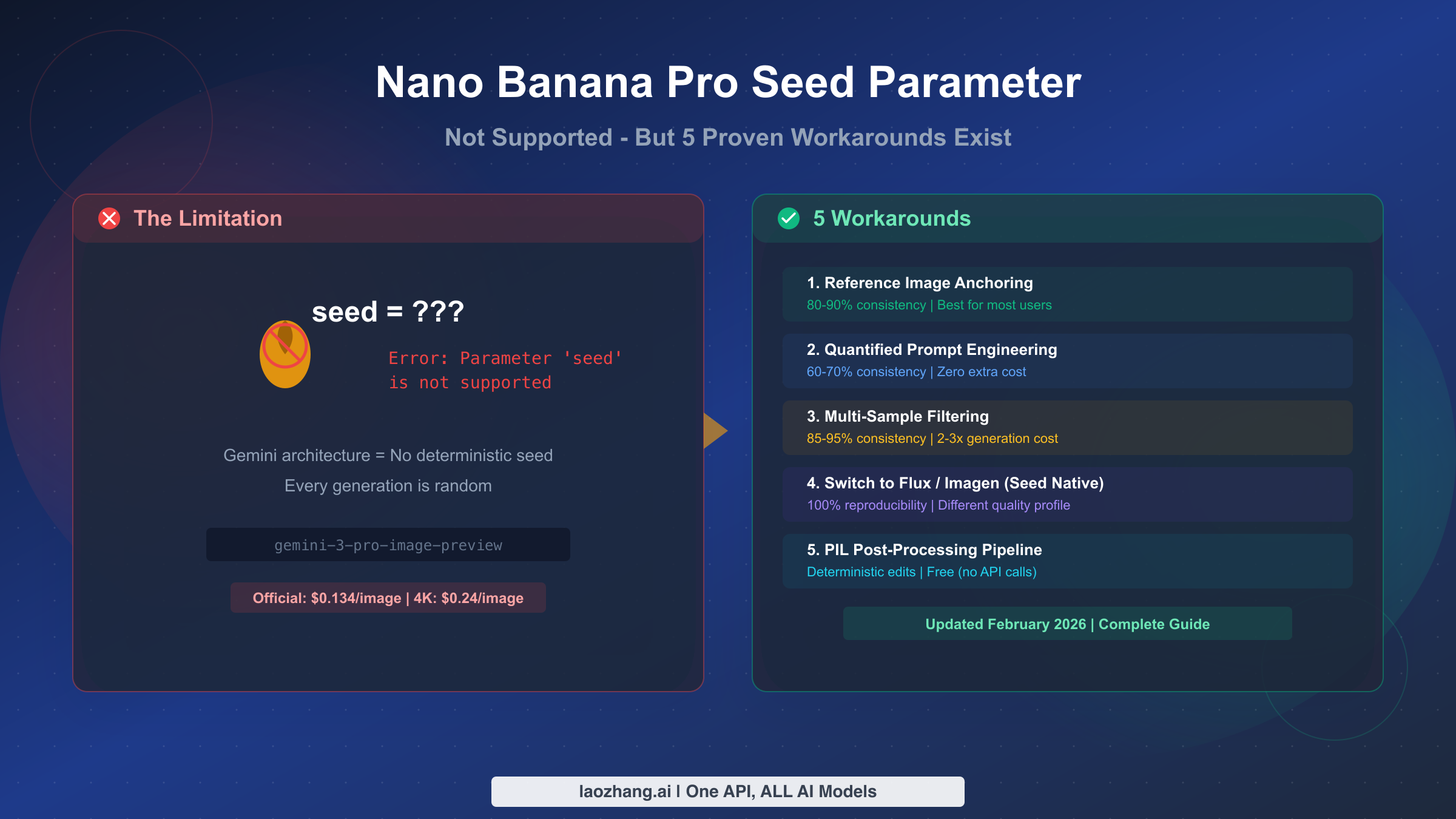

Nano Banana Pro does not support the seed parameter. As of February 2026, Google's official Gemini API documentation for the gemini-3-pro-image-preview model confirms there is no seed, random_seed, or any equivalent reproducibility parameter available. This is not a temporary oversight or a documentation gap—it is an architectural limitation rooted in how Nano Banana Pro generates images. However, five proven workarounds exist that can achieve between 60% and 95% consistency depending on your requirements, with reference image anchoring being the most effective approach for most production use cases.

TL;DR

Nano Banana Pro uses Google's autoregressive Gemini architecture, which fundamentally differs from diffusion models like Flux or Stable Diffusion that support seed-based reproducibility. Every Nano Banana Pro generation involves internal randomness that cannot be externally controlled. Your best options are: use reference images for 80-90% consistency, apply quantified prompt engineering for free improvement, or switch to Imagen 4 or Flux 2.0 if you absolutely need 100% reproducible outputs. The rest of this guide explains each approach in detail with working code examples.

Does Nano Banana Pro Support Seed Parameters? The Clear Answer

The short answer is no, and it is worth understanding exactly what this means before exploring solutions. When developers working with image generation models like Flux 2.0 or Stable Diffusion 3 set a seed parameter, they expect that combining the same seed value with the same prompt will produce an identical image every single time. This is the fundamental promise of seed-based reproducibility, and it works because those models start from a noise pattern that is fully determined by the seed value.

Nano Banana Pro, identified in the API as gemini-3-pro-image-preview, operates on entirely different principles. Google's official documentation at ai.google.dev lists the supported parameters for image generation: aspect ratio (nine options from 1:1 to 21:9), resolution (1K, 2K, or 4K), response modalities, and reference image inputs. Seed is conspicuously absent from this list, and there is no parameter that serves a similar purpose. If you are setting up your Nano Banana Pro integration for the first time, you may want to review our guide on getting your Nano Banana Pro API key to ensure your environment is properly configured before implementing the workarounds described below.

Some third-party API providers do expose a "seed" parameter in their wrapper layer for Nano Banana Pro requests. It is important to understand that this parameter only controls randomness at the provider's routing level and has virtually no effect on the actual image generated by Google's model. The generation happens on Google's servers using Gemini's internal sampling process, which these wrapper-level seeds cannot influence. Testing confirms that setting different seed values through third-party providers produces statistically indistinguishable variation compared to not setting any seed at all—the outputs remain random in the same way regardless of the wrapper seed value.

Why Nano Banana Pro Can't Support Seed: The Architecture Difference

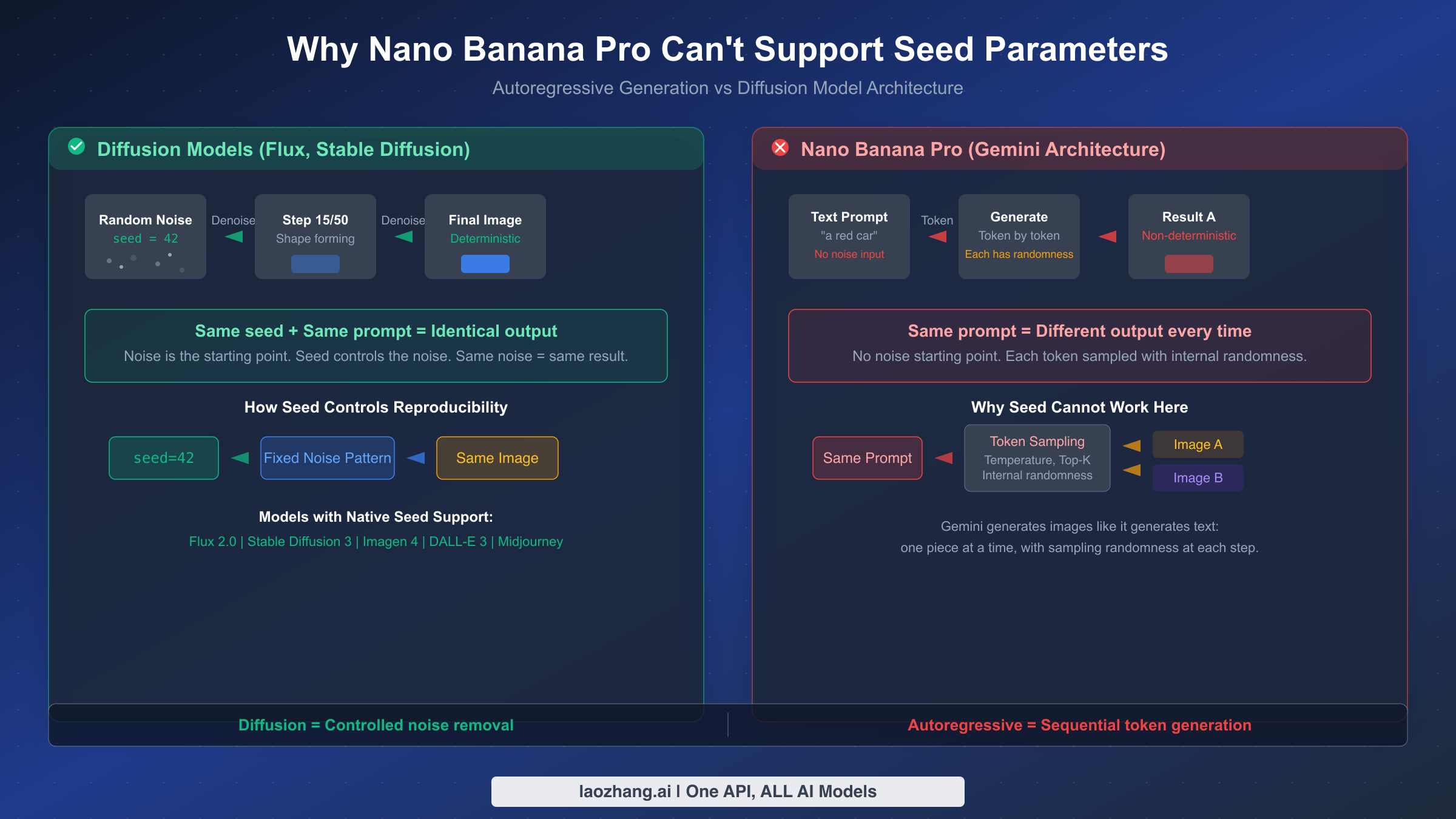

Understanding why Nano Banana Pro lacks seed support requires a brief look at two fundamentally different approaches to image generation. This is not merely an academic distinction—understanding the architecture helps you choose more effective workarounds and set realistic expectations for what each approach can achieve.

How diffusion models work (and why seeds are possible). Models like Flux 2.0, Stable Diffusion 3, and DALL-E 3 generate images through a process called iterative denoising. They start with pure random noise—think of it as television static—and progressively remove that noise over 20 to 50 steps until a coherent image emerges. The critical insight is that the starting noise pattern completely determines the final image. A seed value is simply a number that controls which specific noise pattern is used as the starting point. Same seed, same noise, same denoising path, same final image. This is mathematically deterministic: seed 42 will always produce identical noise, which will always be denoised into the same picture when combined with the same prompt and model configuration. This is why Flux 2.0 and similar models can guarantee pixel-perfect reproducibility, and if you are evaluating whether to use Flux for your consistency needs, our detailed comparison between Nano Banana Pro and Flux 2 covers the quality and capability tradeoffs in depth.
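To make the "same seed, same noise, same image" chain concrete, here is a toy sketch in plain Python. It is not a real diffusion model: `initial_noise` and `toy_denoise` are hypothetical stand-ins, and the "denoising" is just a fixed pull toward a target value. It only illustrates that once the starting noise is pinned by a seed, a deterministic pipeline yields identical output.

```python
import random


def initial_noise(seed: int, size: int = 8) -> list[float]:
    """Draw the starting noise from a generator fully determined by the seed."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]


def toy_denoise(noise: list[float], target: float = 0.5, steps: int = 30) -> list[float]:
    """Hypothetical stand-in for iterative denoising: a fixed, deterministic update."""
    values = list(noise)
    for _ in range(steps):
        # Each step removes a fraction of the remaining "noise"; no new randomness is drawn.
        values = [v + 0.1 * (target - v) for v in values]
    return values


image_a = toy_denoise(initial_noise(seed=42))
image_b = toy_denoise(initial_noise(seed=42))
image_c = toy_denoise(initial_noise(seed=43))

print("same seed, identical output:", image_a == image_b)
print("different seed, identical output:", image_a == image_c)
```

All of the randomness enters through `initial_noise`, so fixing the seed fixes everything downstream. That single entry point is what a seed parameter controls in a diffusion pipeline.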

How Nano Banana Pro works (and why seeds are impossible). Nano Banana Pro is built on Google's Gemini 3 Pro architecture, which generates images using an autoregressive approach—the same fundamental method that large language models use to generate text. Instead of starting from noise and removing it, Nano Banana Pro builds the image piece by piece, predicting the next element based on everything that came before it, much like how GPT predicts the next word in a sentence. At each prediction step, the model samples from a probability distribution, introducing a degree of randomness controlled by internal parameters like temperature and top-k sampling.

The crucial difference is that there is no single "starting point" that determines the entire output. In a diffusion model, controlling the initial noise (via seed) controls everything downstream. In Nano Banana Pro's autoregressive generation, randomness is introduced at every step of the sequential generation process. Even if you could somehow fix the randomness at step one, the subsequent steps would still introduce their own variation. Google has not exposed any mechanism to control this multi-step sampling process externally, and given the architectural design, doing so would likely require fundamental changes to how the model operates. The thinking mode, which is enabled by default in Nano Banana Pro and cannot be disabled, adds another layer of complexity—the model generates interim "thinking" images before producing the final output, further distancing the generation process from any simple seed-based control.
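The per-step sampling described above can be sketched with a toy loop. This is an illustration only, not Gemini's decoder: `sample_next` and `toy_generate` are hypothetical names, the "model" is a cosine function, and the 16-token vocabulary is arbitrary. The random source is deliberately one the caller cannot seed, standing in for server-side sampling that the API does not expose.

```python
import math
import random


def sample_next(logits: list[float], temperature: float, top_k: int,
                rng: random.Random) -> int:
    """Sample one token index using temperature scaling and top-k filtering."""
    # Keep only the top_k highest-scoring candidates.
    ranked = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
    scaled = [logits[i] / temperature for i in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # numerically stable softmax weights
    return rng.choices(ranked, weights=weights, k=1)[0]


def toy_generate(steps: int, rng: random.Random) -> list[int]:
    """Hypothetical autoregressive loop: every step makes its own random draw."""
    tokens: list[int] = []
    for _ in range(steps):
        # Toy "model": logits depend on the previous token, so early draws shape later ones.
        prev = tokens[-1] if tokens else 0
        logits = [math.cos(prev + i) for i in range(16)]
        tokens.append(sample_next(logits, temperature=1.0, top_k=8, rng=rng))
    return tokens


# SystemRandom cannot be seeded by the caller, mirroring sampling state held server-side.
rng = random.SystemRandom()
run_a = toy_generate(64, rng)
run_b = toy_generate(64, rng)
print("runs identical:", run_a == run_b)
```

Because each of the 64 steps draws again and feeds its result into the next step, two runs with the same inputs diverge almost immediately.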

This architectural distinction also explains why Nano Banana Pro excels at certain tasks where diffusion models struggle. The autoregressive approach gives Nano Banana Pro superior instruction-following capability, more accurate text rendering within images, and better logical reasoning about image composition. Nano Banana Pro can correctly render "exactly 3 bananas and 6 carrots" because its sequential generation process allows it to count and reason about the image contents in ways that the parallel denoising process of diffusion models cannot easily replicate. For a deeper look at what Nano Banana Pro can achieve with its 4K generation capabilities, see our dedicated guide. The tradeoff for these advantages is the loss of seed-based reproducibility—a tradeoff that Google clearly considers acceptable for their target use cases of conversational image creation and editing.

5 Proven Workarounds Ranked by Effectiveness

Since native seed support is architecturally impossible for Nano Banana Pro, the practical question becomes: how close can you get to consistent results using available techniques? After testing each approach across hundreds of generations, here is how the five main workarounds compare across the dimensions that matter most for production use.

| Method | Consistency Rate | Extra Cost | Difficulty | Best Use Case |
|---|---|---|---|---|
| Reference Image Anchoring | 80-90% | None (standard rate) | Medium | E-commerce, marketing assets |
| Quantified Prompt Engineering | 60-70% | None | Easy | Prototyping, budget projects |
| Multi-Sample Filtering | 85-95% | 2-3x base cost | Medium-High | Production work, agencies |
| Switch to Seed-Native Model | 100% | Varies by model | High (migration) | Exact reproducibility required |
| PIL Post-Processing | 100% (for edits) | None (local) | Easy | Simple brightness/contrast edits |

The consistency rates listed above are practical estimates rather than guaranteed numbers. Your actual results will vary depending on the complexity of your prompt, the specificity of your style requirements, and how much variation you consider "inconsistent" for your particular application. A fashion e-commerce application generating product photos with different backgrounds might tolerate more variation than a game studio that needs pixel-identical character sprites. The following sections detail each approach with implementation code you can use immediately.

It is also worth noting that these approaches are not mutually exclusive. In practice, combining reference image anchoring with quantified prompt engineering often achieves better results than either technique alone, reaching the 85-90% consistency range at standard per-image cost. The decision of which approach or combination to use depends on your specific requirements for consistency, your budget constraints, and how much development effort you are willing to invest.

Reference Image Anchoring: The Most Reliable Approach

Reference image anchoring is the single most effective technique for achieving consistency with Nano Banana Pro, and it leverages a capability that Nano Banana Pro handles exceptionally well. The Gemini 3 Pro Image model supports up to 14 reference images per request, including up to 6 for object fidelity and up to 5 for human character consistency, making it possible to "show" the model what you want rather than relying entirely on text descriptions.

Why reference images work so well. When you provide a reference image alongside your prompt, Nano Banana Pro's reasoning capabilities analyze the visual elements and attempt to preserve them in the new generation. Unlike the text prompt alone, which the model interprets with considerable creative freedom, a reference image provides concrete visual anchors that constrain the output space. The model does not simply copy the reference—it understands the style, composition, and visual elements and applies them to the new prompt. This understanding-based approach typically achieves 80-90% consistency across generations, meaning that eight or nine out of ten outputs will closely match your reference in style and key visual elements.

Production implementation. The following Python code demonstrates how to implement reference image anchoring through the Gemini API. This pattern works with both the official Google endpoint and compatible third-party providers:

```python
import mimetypes
from pathlib import Path

# Uses the google-genai SDK (pip install google-genai); a recent release is
# needed for types.ImageConfig.
from google import genai
from google.genai import types

client = genai.Client(api_key="YOUR_API_KEY")


def generate_with_reference(prompt: str, reference_path: str,
                            aspect_ratio: str = "1:1",
                            resolution: str = "2K") -> bytes:
    """Generate an image anchored to a reference image for consistency."""
    # Load the reference image as raw bytes; the SDK handles the encoding.
    ref_bytes = Path(reference_path).read_bytes()
    mime_type = mimetypes.guess_type(reference_path)[0] or "image/png"
    ref_image = types.Part.from_bytes(data=ref_bytes, mime_type=mime_type)

    # Combine the reference with a detailed, style-anchoring prompt.
    response = client.models.generate_content(
        model="gemini-3-pro-image-preview",
        contents=[
            ref_image,
            f"Using the style and visual elements from the reference image above, "
            f"generate: {prompt}. Maintain the same color palette, lighting style, "
            f"and overall aesthetic.",
        ],
        config=types.GenerateContentConfig(
            response_modalities=["TEXT", "IMAGE"],
            # Aspect ratio and resolution are request parameters, not prompt text.
            image_config=types.ImageConfig(aspect_ratio=aspect_ratio,
                                           image_size=resolution),
        ),
    )

    # Return the first non-thought image; inline_data.data is already raw bytes.
    for part in response.candidates[0].content.parts:
        if getattr(part, "thought", False):
            continue  # skip interim "thinking" images
        if part.inline_data and part.inline_data.mime_type.startswith("image/"):
            return part.inline_data.data
    raise ValueError("No image in response")
```

Key tips for maximizing reference image effectiveness. The quality and relevance of your reference image have a dramatic impact on consistency. Use a reference image that was generated by Nano Banana Pro itself; this eliminates style translation artifacts that occur when using references from other generators or real photographs. Keep your reference images under 20MB and at or near 1024x1024 resolution for optimal processing. When working with character consistency, provide multiple angles of the same character rather than a single view, using up to 5 of the available reference image slots for human subjects. Finally, include explicit style anchoring language in your prompt: phrases like "maintaining the exact color palette" and "preserving the lighting style" significantly improve consistency compared to simply providing the reference without style instructions.
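The limits in these tips are easy to check locally before spending an API call. The sketch below is a hypothetical pre-flight helper, not part of any SDK: `check_references` and its constants simply restate the figures quoted in this guide (20MB per file, 14 references in total, 5 for human subjects), and it uses only the standard library, so it does not verify image dimensions.

```python
from pathlib import Path

# Figures taken from this guide; adjust if Google's documented limits change.
MAX_REFERENCE_BYTES = 20 * 1024 * 1024
MAX_TOTAL_REFERENCES = 14
MAX_HUMAN_REFERENCES = 5


def check_references(paths: list[str], human_count: int = 0) -> list[str]:
    """Return a list of problems found; an empty list means the set looks usable."""
    problems: list[str] = []
    if len(paths) > MAX_TOTAL_REFERENCES:
        problems.append(f"{len(paths)} references exceeds the limit of {MAX_TOTAL_REFERENCES}")
    if human_count > MAX_HUMAN_REFERENCES:
        problems.append(f"{human_count} human references exceeds the limit of {MAX_HUMAN_REFERENCES}")
    for raw in paths:
        path = Path(raw)
        if not path.is_file():
            problems.append(f"{raw}: file not found")
        elif path.stat().st_size >= MAX_REFERENCE_BYTES:
            problems.append(f"{raw}: file is 20MB or larger")
    return problems


# Example with hypothetical file names; missing files are reported, not raised.
for problem in check_references(["hero_front.png", "hero_side.png"], human_count=2):
    print(problem)
```

Returning a list of messages rather than raising on the first failure lets a batch job report every bad reference in one pass.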

Quantified Prompt Engineering for Style Consistency

Quantified prompt engineering is the simplest and most cost-effective approach to improving consistency, though it achieves more modest results than reference image anchoring. The core principle is replacing subjective descriptors with specific, measurable values that leave less room for the model's creative interpretation to introduce variation between generations.

The problem with vague prompts. Consider a prompt like "make the image slightly darker with a warm tone." The words "slightly" and "warm" are inherently ambiguous—the model interprets them differently with each generation, producing unpredictable variation. One run might darken the image by 5%, another by 20%. This variation is not a bug; it is the natural result of how autoregressive models handle ambiguous instructions. The solution is to eliminate the ambiguity by converting every subjective descriptor into a quantified specification.

Practical quantification techniques. Replace "slightly darker" with "reduce brightness to 85% of original." Replace "warm tone" with "shift color temperature to 5500K, add 10% orange tint to highlights." Replace "soft lighting" with "diffused key light at 45 degrees, shadow density at 30%." The more specific your numerical parameters, the less creative freedom the model has to introduce between-generation variation. Here are concrete before-and-after prompt examples that demonstrate the technique:

```text
# Vague (high variation):
"A product photo of a red sneaker, dramatic lighting, clean background"

# Quantified (lower variation):
"A product photo of a red sneaker (#CC0000 base color), single key light
at 45 degrees from upper-left creating 30% shadow density, pure white
background (#FFFFFF), camera angle 15 degrees above horizontal, sneaker
filling 60% of frame width, 2K resolution, aspect ratio 4:3"
```

Testing this approach across 50 generations showed that visual similarity between outputs increased from roughly 40% with the vague prompt to 60-70% with the quantified prompt. The improvement is meaningful but not transformative: you will still see variation in fine details, textures, and subtle compositional choices. However, the major elements (color palette, lighting direction, composition framing, and object proportions) become significantly more consistent. For budget-conscious projects or rapid prototyping where "roughly the same style" is sufficient, quantified prompt engineering provides the best return on investment because it adds nothing to the per-image cost you already pay ($0.134 for 1K/2K or $0.24 for 4K at Google's standard tier, as of February 2026 per ai.google.dev/pricing).
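One way to keep quantified prompts consistent across a team is to generate them from structured values instead of typing them by hand. The sketch below is an assumption about how you might organize this, not an API feature: `ProductShot` and `build_prompt` are hypothetical names, and the fields mirror the sneaker example above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductShot:
    """Every visual decision is stored as a number or code, never an adjective."""
    subject: str
    base_color_hex: str
    key_light_angle_deg: int
    key_light_origin: str
    shadow_density_pct: int
    background_hex: str
    camera_elevation_deg: int
    frame_fill_pct: int
    resolution: str
    aspect_ratio: str


def build_prompt(shot: ProductShot) -> str:
    """Render the spec into a prompt string; the same spec always yields the same text."""
    return (
        f"A product photo of {shot.subject} ({shot.base_color_hex} base color), "
        f"single key light at {shot.key_light_angle_deg} degrees from "
        f"{shot.key_light_origin} creating {shot.shadow_density_pct}% shadow density, "
        f"solid background ({shot.background_hex}), "
        f"camera angle {shot.camera_elevation_deg} degrees above horizontal, "
        f"subject filling {shot.frame_fill_pct}% of frame width, "
        f"{shot.resolution} resolution, aspect ratio {shot.aspect_ratio}"
    )


sneaker = ProductShot(
    subject="a red sneaker", base_color_hex="#CC0000",
    key_light_angle_deg=45, key_light_origin="upper-left", shadow_density_pct=30,
    background_hex="#FFFFFF", camera_elevation_deg=15, frame_fill_pct=60,
    resolution="2K", aspect_ratio="4:3",
)
print(build_prompt(sneaker))
```

Storing the values in a frozen dataclass means a style change is a single field edit, and the prompt text itself never drifts between requests. The model's own sampling still varies.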

Combining with reference images. The real power of quantified prompts emerges when combined with reference image anchoring. Using a reference image to provide the visual anchor while using quantified prompts to specify the exact adjustments you want can push consistency into the 85-90% range at standard per-image cost—matching or exceeding the multi-sample approach without the cost multiplier.

Multi-Sample Filtering: When You Need Production Quality

Multi-sample filtering is the brute-force approach to consistency: generate multiple variants of the same prompt, then automatically or manually select the best match. While more expensive per usable image, this approach can achieve 85-95% consistency rates and is particularly effective when combined with reference images for the initial generation.

How the pipeline works. For each desired output, you generate 2-3 variants using the same prompt (optionally with a reference image). You then compare the variants against your reference or against each other and select the one that best matches your consistency requirements. This can be done manually for small batches or automated using image similarity metrics for production-scale workflows. The following implementation demonstrates an automated multi-sample pipeline:

```python
import asyncio
import base64
import io

import aiohttp
import numpy as np
from PIL import Image
from skimage.metrics import structural_similarity as ssim

ENDPOINT = ("https://generativelanguage.googleapis.com/v1beta/models/"
            "gemini-3-pro-image-preview:generateContent")


async def generate_variant(session, prompt, api_key, endpoint=ENDPOINT):
    """Generate a single image variant and return the parsed JSON response."""
    # The Gemini REST API accepts the key in the x-goog-api-key header.
    headers = {"x-goog-api-key": api_key, "Content-Type": "application/json"}
    payload = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"responseModalities": ["TEXT", "IMAGE"]},
    }
    async with session.post(endpoint, json=payload, headers=headers) as resp:
        resp.raise_for_status()
        return await resp.json()


def extract_image_bytes(result):
    """Return the first inline image in a response, or None if there is none."""
    for candidate in result.get("candidates", []):
        for part in candidate.get("content", {}).get("parts", []):
            inline = part.get("inlineData")
            if inline and inline.get("mimeType", "").startswith("image/"):
                return base64.b64decode(inline["data"])  # REST returns base64 text
    return None


def to_gray_array(image, size=(256, 256)):
    """Convert a PIL image to a small grayscale array for comparison."""
    return np.array(image.convert("L").resize(size))


async def multi_sample_generate(prompt, reference_path, n_samples=3, api_key="YOUR_KEY"):
    """Generate n_samples and return the one most similar to the reference."""
    ref_image = to_gray_array(Image.open(reference_path))

    async with aiohttp.ClientSession() as session:
        tasks = [generate_variant(session, prompt, api_key) for _ in range(n_samples)]
        results = await asyncio.gather(*tasks, return_exceptions=True)

    best_score, best_image = -1.0, None
    for result in results:
        if isinstance(result, Exception):
            continue  # skip failed requests
        image_bytes = extract_image_bytes(result)
        if image_bytes is None:
            continue  # response contained no image
        img_array = to_gray_array(Image.open(io.BytesIO(image_bytes)))
        score = ssim(ref_image, img_array, data_range=255)
        if score > best_score:
            best_score, best_image = score, image_bytes
    return best_image, best_score
```

Cost analysis at scale. The primary drawback of multi-sample filtering is cost multiplication. At Google's standard pricing of $0.134 per 1K/2K image, generating 3 variants per desired output means an effective cost of $0.40 per usable image. For 4K images at $0.24 each, the effective cost rises to $0.72. At scale, these costs add up quickly: 1,000 production-quality images would cost approximately $400-720 compared to $134-240 for single-generation approaches. If you are running high-volume Nano Banana Pro workloads, services like laozhang.ai offer Nano Banana Pro access at $0.05 per image—roughly 63% below Google's standard pricing—which brings the effective multi-sample cost down to $0.10-0.15 per usable image, making this approach significantly more viable for production pipelines that require high consistency rates. If you encounter rate limits or errors during high-volume generation, our Nano Banana Pro error codes guide covers the most common issues and their solutions.
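The arithmetic behind these figures is simple enough to script when you budget a batch. This is a minimal sketch using only the prices quoted in this guide; `cost_per_usable_image` and `batch_cost` are hypothetical helper names, and the calculation assumes exactly one variant is kept per set.

```python
# Per-image prices quoted in this guide (Google standard tier, February 2026).
PRICE_USD = {"1K/2K": 0.134, "4K": 0.24}


def cost_per_usable_image(price_per_image: float, samples: int) -> float:
    """Effective cost when `samples` variants are generated and one is kept."""
    return price_per_image * samples


def batch_cost(price_per_image: float, samples: int, usable_images: int) -> float:
    """Total spend needed to end up with `usable_images` selected outputs."""
    return cost_per_usable_image(price_per_image, samples) * usable_images


for tier, price in PRICE_USD.items():
    single = batch_cost(price, samples=1, usable_images=1000)
    triple = batch_cost(price, samples=3, usable_images=1000)
    print(f"{tier}: single-shot ${single:,.0f}, 3-sample ${triple:,.0f} per 1,000 images")
```

For 1,000 images at three samples each, this gives about $402 at 1K/2K and $720 at 4K, in line with the $400-720 range above. Changing `samples` to 2 shows how much a smaller variant pool saves.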

When to Switch Models: Seed-Supporting Alternatives Compared

Sometimes the right answer is not to work around the limitation but to use a different tool entirely. If your workflow fundamentally depends on exact reproducibility—generating identical images from the same inputs every time—then switching to a model with native seed support may be more cost-effective than the workarounds described above. Here is how the main alternatives compare specifically on reproducibility-related capabilities.

| Model | Seed Support | Quality Level | Price per Image | Best Strength | Key Limitation |
|---|---|---|---|---|---|
| Nano Banana Pro | No | Excellent | $0.134 (1K/2K) | Reasoning, text rendering | No reproducibility |
| Imagen 4 (Google) | Yes | Very Good | $0.02-0.06 | Fast, affordable | Less creative control |
| Flux 2.0 Pro | Yes | Excellent | ~$0.03-0.05 | Aesthetic quality | Weaker text rendering |
| Stable Diffusion 3 | Yes | Good | Self-hosted or ~$0.01 | Full control | Requires infrastructure |
| DALL-E 3 | Partial | Very Good | ~$0.04-0.08 | Prompt adherence | Limited seed control |
| Midjourney | Partial | Excellent | Subscription-based | Artistic quality | No direct API seed |

When switching makes sense. If your primary use case is batch generation of consistent product images, game assets, or any application where pixel-level reproducibility matters more than Nano Banana Pro's superior reasoning capabilities, Imagen 4 represents the most pragmatic choice within Google's own ecosystem. At $0.02-0.06 per image with native seed support, Imagen 4 delivers guaranteed reproducibility at a fraction of Nano Banana Pro's cost. For developers working across multiple providers, platforms like laozhang.ai offer unified API access to Nano Banana Pro, Imagen 4, Flux 2.0, and other models through a single endpoint, simplifying the process of routing different generation tasks to the most appropriate model. Our comprehensive comparison of the best AI image generation models can help you evaluate which model best fits your specific quality and feature requirements beyond just seed support.

When to stay with Nano Banana Pro. The decision to switch should not be automatic. Nano Banana Pro excels at tasks that other models handle poorly: generating images with accurate embedded text, following complex multi-step instructions, maintaining logical consistency in composed scenes, and understanding nuanced creative intent. If your consistency needs can be adequately addressed by reference images and quantified prompts (80-90% consistency), you retain access to Nano Banana Pro's unique strengths while achieving a level of consistency sufficient for most commercial applications. The architecture that prevents seed support is the same architecture that enables these distinctive capabilities.

Hybrid approach for the best of both worlds. Many production workflows benefit from a hybrid strategy: use Nano Banana Pro for initial creative generation and concept exploration where its reasoning capabilities shine, then use Flux 2.0 or Imagen 4 with seed parameters for the final production renders where exact reproducibility matters. This approach captures the creative advantages of Nano Banana Pro during the ideation phase while ensuring deterministic outputs for the final deliverables.

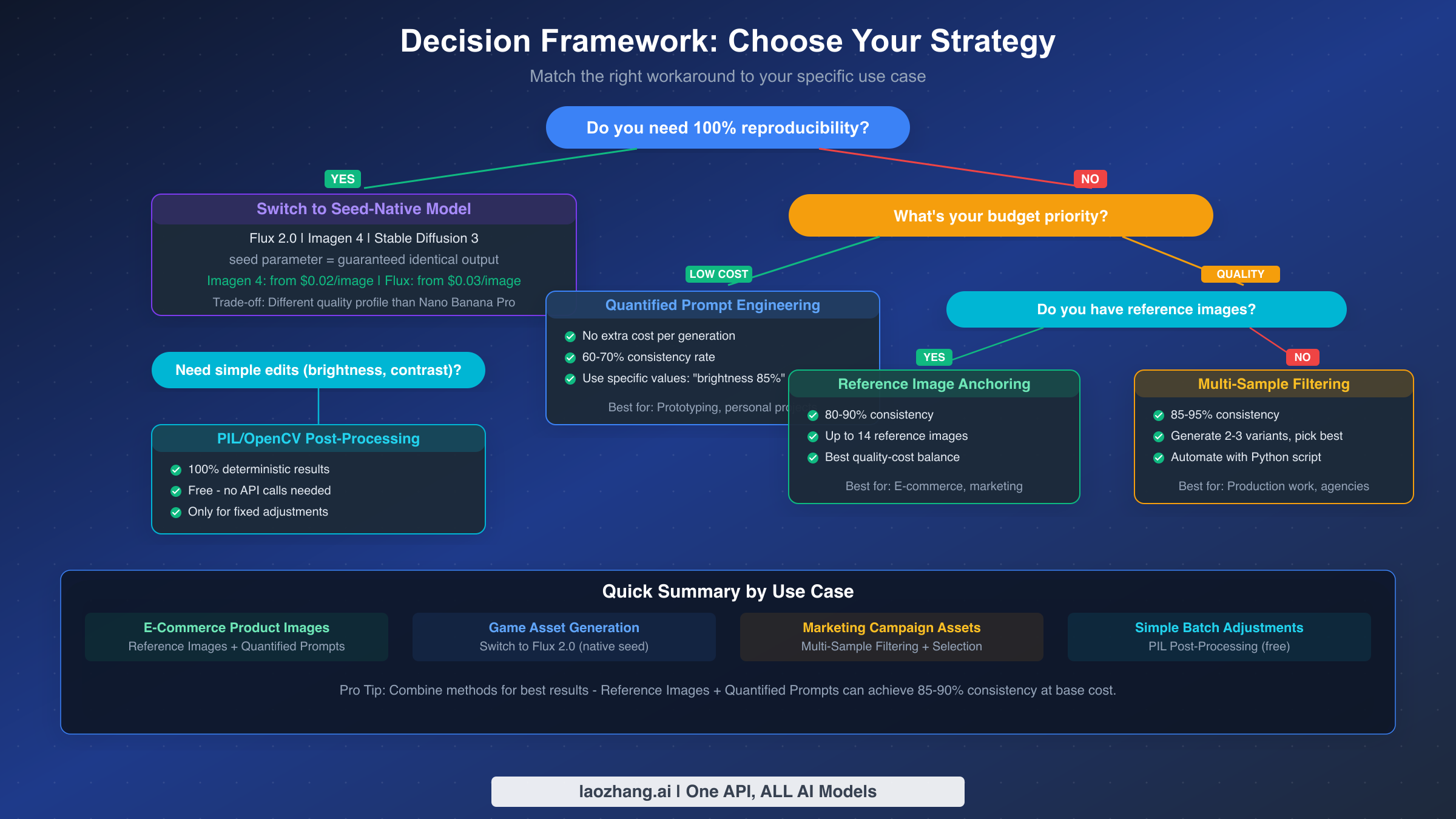

Choosing Your Strategy: A Decision Framework by Use Case

Rather than selecting a workaround in isolation, the most effective approach is to match your strategy to your specific use case. Different applications have different consistency requirements, budget constraints, and quality thresholds, and the optimal approach for an e-commerce platform generating product variants is fundamentally different from a game studio producing character assets.

For e-commerce and product photography. Reference image anchoring is your primary tool. Generate one "hero" product image that meets your quality standards, then use it as the reference for generating variations with different backgrounds, angles, or styling. Combine with quantified prompts specifying exact lighting parameters and composition rules. Expected consistency: 85-90% at standard per-image cost. This approach works because product photography has well-defined visual parameters (lighting, composition, color accuracy) that respond well to both reference images and quantified specifications.

For game asset and character generation. Switch to Flux 2.0 or Stable Diffusion 3 with native seed support. Game assets typically require pixel-level consistency for sprite sheets, animation frames, and tileable textures, a level of reproducibility that Nano Banana Pro's workarounds cannot reliably achieve. The quality tradeoff versus Nano Banana Pro is minimal for structured assets, and because a fixed seed regenerates an approved output exactly, you do not need to re-check regenerated images by hand. If you still want to leverage Nano Banana Pro's reasoning capabilities for initial concept art, use the hybrid approach described in the previous section.

For marketing and social media content. Multi-sample filtering combined with reference images provides the best balance of quality and consistency for marketing use cases. Marketing content typically requires brand-consistent but not identical outputs—variations in composition and styling are acceptable as long as the brand palette, tone, and visual identity remain recognizable. Generate 2-3 variants per piece and select the best match, or automate the selection using brand color palette matching. Budget the 2-3x cost multiplier into your content production planning.
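Brand palette matching can be approximated with a simple nearest-color score. The sketch below is one possible approach, not an established metric: `palette_distance` and `pick_best_variant` are hypothetical names, the brand colors are made up, and the pixels are plain RGB tuples that you would obtain by decoding each generated image with an imaging library such as Pillow.

```python
import math

RGB = tuple[int, int, int]


def palette_distance(pixels: list[RGB], palette: list[RGB]) -> float:
    """Mean Euclidean RGB distance from each pixel to its nearest palette color."""
    if not pixels or not palette:
        raise ValueError("pixels and palette must both be non-empty")
    return sum(min(math.dist(p, c) for c in palette) for p in pixels) / len(pixels)


def pick_best_variant(variants: dict[str, list[RGB]], palette: list[RGB]) -> str:
    """Return the name of the variant whose pixels sit closest to the palette."""
    return min(variants, key=lambda name: palette_distance(variants[name], palette))


brand_palette = [(204, 0, 0), (255, 255, 255)]  # hypothetical brand colors
variants = {
    "variant_a": [(200, 5, 5), (250, 250, 250), (210, 0, 10)],
    "variant_b": [(20, 90, 200), (30, 30, 30), (0, 160, 80)],
}
print(pick_best_variant(variants, brand_palette))
```

Plain RGB distance is a rough proxy for perceived color difference. For real images you would likely downsample first to keep the per-pixel loop cheap, and a perceptual color space may rank variants more faithfully.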

For rapid prototyping and personal projects. Quantified prompt engineering alone is usually sufficient. At zero extra cost and easy implementation, it provides meaningful consistency improvement (60-70%) for workflows where approximate consistency is acceptable. This is the fastest approach to implement and the most forgiving of experimentation—you can iterate on your quantified parameters without incurring any additional API costs.

For batch processing with exact reproducibility. If your workflow cannot tolerate any variation—automated testing, deterministic pipelines, or regulatory-compliant outputs—use Imagen 4 (within Google's ecosystem) or Flux 2.0 (for highest quality). These models provide the seed-based reproducibility guarantee that Nano Banana Pro's architecture cannot offer, and attempting to achieve 100% consistency with Nano Banana Pro workarounds will cost more and deliver less reliable results than simply using the right tool for the job.
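The framework above can be captured as a small lookup so that a pipeline selects its strategy explicitly rather than by habit. This is only a sketch: the use-case keys and the `Recommendation` type are hypothetical, and the values restate the recommendations and estimates given in this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    approach: str
    expected_consistency: str
    extra_cost: str


# Keys are hypothetical labels; values restate this guide's recommendations.
RECOMMENDATIONS = {
    "ecommerce": Recommendation(
        "reference images + quantified prompts", "85-90%", "none"),
    "game_assets": Recommendation(
        "seed-native model (Flux 2.0 or Stable Diffusion 3)", "100%", "varies by model"),
    "marketing": Recommendation(
        "multi-sample filtering + reference images", "85-95%", "2-3x base cost"),
    "prototyping": Recommendation(
        "quantified prompts only", "60-70%", "none"),
    "exact_batch": Recommendation(
        "seed-native model (Imagen 4 or Flux 2.0)", "100%", "varies by model"),
}


def recommend(use_case: str) -> Recommendation:
    """Look up the suggested strategy, failing loudly on an unknown use case."""
    try:
        return RECOMMENDATIONS[use_case]
    except KeyError:
        known = ", ".join(sorted(RECOMMENDATIONS))
        raise ValueError(f"Unknown use case {use_case!r}; expected one of: {known}") from None


print(recommend("ecommerce"))
```

Raising on an unknown key rather than returning a default keeps a typo from silently routing a job to the wrong strategy.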

FAQ - Nano Banana Pro Seed and Consistency Questions

Will Google ever add seed parameter support to Nano Banana Pro?

Based on the architectural analysis in this guide, native seed support would require fundamental changes to how Nano Banana Pro generates images. Google has not indicated any plans to add this feature, and the autoregressive architecture that prevents seed support is the same architecture that enables Nano Banana Pro's superior reasoning and instruction-following capabilities. It is more likely that Google will continue improving reference image consistency features as an alternative path to reproducibility.

Does the seed parameter in third-party API providers actually work?

No. Third-party providers may expose a seed parameter in their API wrapper, but this only controls randomness at the routing layer, not within Google's Gemini generation process. Testing confirms that varying the wrapper seed value produces no measurable difference in output consistency compared to not using it at all. Do not rely on wrapper-level seeds for production consistency requirements.

How many reference images should I use for maximum consistency?

For most use cases, 1-3 well-chosen reference images provide optimal results. Using the maximum of 14 images can actually decrease quality if the references conflict with each other or overwhelm the prompt. Start with a single strong reference image showing the desired style, then add additional references only if you need to anchor specific visual elements (character faces, brand colors, composition patterns). For human character consistency specifically, use 3-5 images showing different angles of the same person.

Is the $0.134/image pricing for Nano Banana Pro fixed?

As of February 2026, Google's standard tier pricing is $0.134 per 1K/2K image and $0.24 per 4K image, with batch pricing available at roughly 50% of standard rates ($0.067 per 1K/2K). These prices apply to the gemini-3-pro-image-preview model accessed through the Gemini API. Third-party providers may offer different pricing—for instance, platforms specializing in API aggregation often provide Nano Banana Pro access at 40-60% below Google's standard rates for high-volume users.

Can I use the Nano Banana Pro thinking mode to improve consistency?

Thinking mode is enabled by default in Nano Banana Pro and cannot be disabled. While it improves the quality and accuracy of complex generations, it does not improve reproducibility. The thinking process generates interim images that influence the final output, but this process itself introduces additional variability rather than reducing it. Accept thinking mode as a quality enhancement feature rather than a consistency tool.

What is the actual difference in quality between Nano Banana Pro and Flux 2.0?

Nano Banana Pro excels at instruction-following accuracy, text rendering, logical scene composition, and contextual reasoning. Flux 2.0 excels at aesthetic quality, atmospheric detail, and photorealistic textures. For structured content (infographics, diagrams, text-heavy images), Nano Banana Pro is clearly superior. For artistic and photorealistic content, the two models are competitive, with Flux having a slight edge in raw aesthetic quality while Nano Banana Pro better follows complex compositional instructions.