API Gemini Free: Complete Guide to Using Google’s AI Without Cost in 2025

API Gemini free access gives developers capable AI models at zero cost. The free tier has offered up to 1,500 daily requests on Flash models and broad feature access, with no credit card required. Exact quotas vary by model and change over time, so confirm them against the current rate limits documentation. Google’s generous free tier makes advanced AI development accessible to everyone from students to startups.

How to Access API Gemini Free Without Credit Card

Getting started with API Gemini free access requires nothing more than a Google account and two minutes of setup time. Google AI Studio serves as the primary gateway to free API access, eliminating traditional barriers like credit card requirements, complex approval processes, or trial limitations. This democratization of AI access reflects Google’s commitment to fostering innovation across all developer segments, not just those with substantial budgets.

The process begins at Google AI Studio (aistudio.google.com; the developer documentation lives at ai.google.dev), where the interface presents an option to create an API key. Unlike many competing platforms that hide free tiers behind payment walls or trial signups, Google prominently features free access as a primary option. After signing in with a Google account, developers can generate their first API key in a few clicks and start calling the Gemini models available within free tier limits.

Alternative access methods provide flexibility for different use cases and preferences. Firebase integration offers a particularly elegant solution for web and mobile developers, providing API access through Firebase’s authentication system without exposing keys in client code. Third-party platforms like Puter.js advertise access without a Google account or API key, which may appeal to privacy-conscious developers, although their own terms and limits apply. These alternatives mean that API Gemini free access remains available under a range of individual constraints and preferences.

Common setup obstacles that developers encounter often stem from confusion about the various Google AI products and access methods. Google AI Studio provides the simplest path for individual developers, while Vertex AI suits enterprise needs with additional features. Understanding this distinction prevents developers from accidentally choosing complex enterprise setups when simple free access would suffice. The key insight is that API Gemini free tier through Google AI Studio provides everything needed for substantial applications without the complexity of enterprise platforms.

API Gemini Free Tier Limits and Capabilities

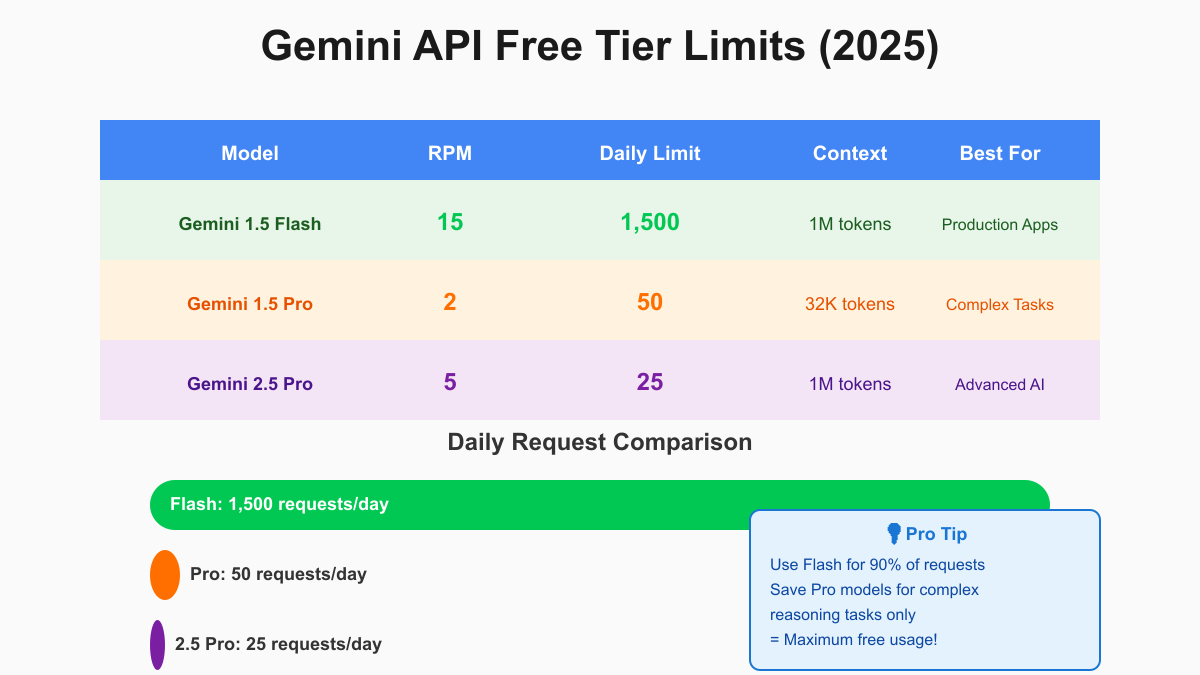

Understanding the specific limits of API Gemini free tier enables developers to architect applications that maximize value within constraints. The headline number of 1,500 daily requests, quoted here for Gemini 1.5 Flash, is enough for real prototypes and low-traffic applications, not merely a trial allocation. Daily quotas reset at midnight Pacific time, which makes capacity planning predictable, although applications with users in other time zones should note where that reset falls relative to their own peak hours. Quotas differ between models and are revised periodically, so treat the figures in this section as a snapshot and check the current rate limits before relying on them.

Model-specific rate limits create a tiered system that encourages efficient resource usage while maintaining access to advanced capabilities. Gemini 1.5 Flash, optimized for high-volume applications, allows 15 requests per minute within the free tier. That is one request every four seconds on average, which suits interactive applications with modest traffic; serving many simultaneous users at that rate depends on queuing and caching. Pro models, designed for complex reasoning tasks, limit free usage to 2-5 requests per minute, encouraging developers to reserve these models for tasks that genuinely require them.
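To make these limits concrete, the short calculation below works through what the quoted figures imply. It is only a sketch: the constant names are illustrative, and the 15 requests-per-minute and 1,500 requests-per-day values are the ones cited in this article, so substitute current quotas if they differ.

```python
# Free tier figures quoted in this article (assumed; substitute current quotas)
RPM_LIMIT = 15      # requests per minute
RPD_LIMIT = 1500    # requests per day

# Minimum average spacing between requests to stay under the per-minute limit
min_spacing_seconds = 60 / RPM_LIMIT

# How long the daily quota lasts if the app runs flat out at the per-minute limit
minutes_to_exhaust_daily_quota = RPD_LIMIT / RPM_LIMIT

# Sustainable average rate if the daily quota is spread evenly over 24 hours
average_requests_per_hour = RPD_LIMIT / 24

print(f"Spacing between requests: {min_spacing_seconds:.1f} s")
print(f"Daily quota exhausted after {minutes_to_exhaust_daily_quota:.0f} min at full rate")
print(f"Sustainable average: {average_requests_per_hour:.1f} requests/hour")
```

In other words, the per-minute limit governs bursts, while the daily limit is usually the binding constraint for an application that runs all day.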

Feature availability within API Gemini free tier surprises many developers accustomed to stripped-down free offerings from other providers. Multimodal processing, including image, audio, and video analysis, comes standard without premium requirements. Function calling enables sophisticated agent behaviors, while code execution supports computational tasks directly within the API. Even advanced features like grounding with Google Search may be accessible, though they can carry separate limits or usage-based pricing, so check the pricing page before depending on them. This comprehensive feature set enables building capable applications without paid upgrades.

Comparing free tier capabilities with paid options reveals a thoughtful design that serves different use cases rather than artificially limiting functionality. The primary differences lie in rate limits and quota allocations rather than feature restrictions. Paid tiers offer higher request volumes, priority processing, and enterprise support, but core AI capabilities remain consistent. This approach ensures that applications built on the free tier can scale seamlessly to paid tiers without architectural changes, merely by adjusting billing settings when growth demands exceed free allocations.

Setting Up Your First API Gemini Free Project

Creating your first API Gemini free project involves straightforward steps that get developers from zero to functional AI integration in minutes. The modern SDK ecosystem supports all major programming languages with idiomatic implementations that feel native to each platform. Google’s investment in developer experience shows through consistent API design, comprehensive documentation, and helpful error messages that guide rather than frustrate.

Environment setup begins with installing the appropriate SDK for your chosen language. Python developers use pip to install google-genai, while JavaScript developers add @google/genai through npm. These official SDKs represent significant improvements over earlier iterations, offering type safety, intelligent autocompletion, and built-in best practices. The SDKs handle authentication, request formatting, and response parsing automatically, allowing developers to focus on application logic rather than API mechanics.

```python
# Python setup for API Gemini free
import os

from google import genai

# API key from environment variable
client = genai.Client(api_key=os.environ.get('GEMINI_API_KEY'))

# Simple generation example
response = client.models.generate_content(
    model='gemini-2.5-flash',
    contents='Explain how neural networks learn'
)
print(response.text)
```

Basic code examples demonstrate the simplicity of API Gemini free integration. After SDK installation and API key configuration, generating AI responses requires just a few lines of code. The consistent interface across different models means switching between Flash and Pro models involves changing a single parameter. This simplicity extends to advanced features – adding image analysis or function calling requires minimal additional code, encouraging experimentation and rapid prototyping.

Common setup issues typically revolve around environment configuration and API key management. Developers often forget to set environment variables properly or accidentally commit API keys to version control. The solution involves using .env files for local development and secure secret management for production deployments. Testing free access should begin with simple text generation before attempting complex multimodal or function calling features. This graduated approach helps identify configuration issues early while building familiarity with API behavior.
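As one way to avoid the environment-variable mistakes described above, the sketch below loads a local .env file and fails fast with a clear message when the key is missing. The helper names `load_env_file` and `get_api_key` are hypothetical, and the parser is deliberately minimal; it uses only the standard library.

```python
import os
from pathlib import Path


def load_env_file(path=".env"):
    """Load KEY=VALUE pairs from a .env file into os.environ (existing values win)."""
    env_path = Path(path)
    if not env_path.exists():
        return
    for raw_line in env_path.read_text().splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments, and anything that is not KEY=VALUE
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        os.environ.setdefault(key.strip(), value.strip().strip('"').strip("'"))


def get_api_key(name="GEMINI_API_KEY"):
    """Return the API key, or raise a clear error at startup if it is not set."""
    key = os.environ.get(name)
    if not key:
        raise RuntimeError(f"{name} is not set; add it to your environment or .env file")
    return key
```

Remember to list `.env` in `.gitignore` so the key never reaches version control. Libraries such as python-dotenv provide a more complete parser if the file format grows beyond simple pairs.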

5 Ways to Get API Gemini Free Access

Multiple pathways to API Gemini free access ensure that developers can find methods suited to their specific needs and constraints. Each approach offers unique advantages while maintaining access to core Gemini capabilities. Understanding these options enables choosing the optimal path based on project requirements, technical constraints, and organizational policies.

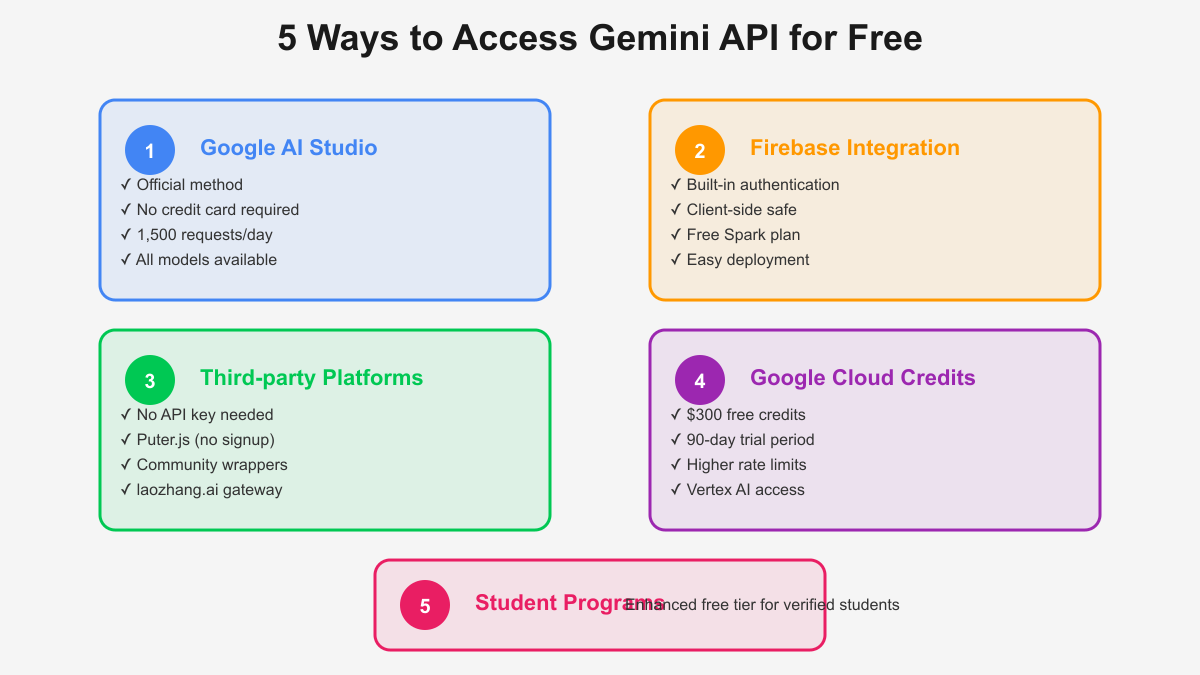

Google AI Studio represents the official and most straightforward path to API Gemini free access. After creating an API key through the web interface, developers gain immediate access to all Gemini models within free tier limits. This method suits individual developers and small teams who want direct API access without additional complexity. The AI Studio interface also provides testing tools, usage monitoring, and key management features that simplify development workflows.

Firebase integration offers a sophisticated alternative that solves common security challenges in client-side applications. By using Firebase Authentication alongside Gemini access, developers can safely call AI features from web and mobile apps without exposing API keys. Firebase’s free Spark plan includes generous quotas that complement Gemini’s free tier perfectly. This approach particularly suits applications already using Firebase for other features, creating a unified development experience with consistent authentication and billing.

Third-party platforms and community solutions provide additional flexibility for accessing API Gemini free capabilities. Puter.js stands out by offering completely anonymous access without any registration or API keys. While this approach has limitations in terms of rate limits and feature availability, it enables quick prototyping and privacy-focused applications. API gateway services like laozhang.ai aggregate multiple AI providers including Gemini, often providing their own free tiers that effectively extend available quotas. These gateways add value through unified interfaces, automatic failover, and usage analytics while maintaining cost-free access for appropriate usage levels.

Google Cloud’s $300 free credit program and student initiatives provide enhanced access for qualifying users. New Google Cloud users receive $300 in credits valid for 90 days, sufficient for extensive Gemini API usage beyond free tier limits. Students with verified educational email addresses often qualify for additional benefits including higher quotas and extended credit periods. These programs recognize that students and researchers often need substantial AI resources for academic projects while lacking funding for paid services.

Optimizing API Gemini Free Usage for Production

Building production applications within API Gemini free constraints requires architectural patterns that maximize efficiency without compromising user experience. The key insight is that free tier limitations encourage better engineering practices that benefit applications regardless of scale. Well-architected free tier applications often outperform poorly designed paid implementations through superior resource management.

Request queuing strategies form the foundation of effective free tier utilization. Instead of sending API requests immediately upon user action, applications should implement queuing systems that respect rate limits while ensuring eventual processing. A Redis-based queue can absorb bursts of user requests, bounded only by available memory, while dispatching them to the Gemini API at a sustainable rate. This pattern prevents 429 errors during traffic spikes while giving users acknowledgment that their requests are being processed.

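```javascript
// Intelligent request queue for API Gemini free
// In-memory sketch; assumes the official @google/genai SDK and a
// GEMINI_API_KEY environment variable.
import { GoogleGenAI } from '@google/genai';

class GeminiQueue {
  constructor(maxRPM = 15, model = 'gemini-2.5-flash') {
    this.queue = [];
    this.processing = false;
    this.maxRPM = maxRPM;
    this.model = model;
    this.requestTimes = []; // send times within the last 60 seconds
    this.ai = new GoogleGenAI({ apiKey: process.env.GEMINI_API_KEY });
  }

  // Enqueue a prompt; callback(error, text) fires once it has been processed
  addRequest(prompt, callback) {
    this.queue.push({ prompt, callback, timestamp: Date.now() });
    if (!this.processing) {
      this.processQueue();
    }
  }

  async processQueue() {
    this.processing = true;
    while (this.queue.length > 0) {
      // Wait until a slot is free in the rolling one-minute window
      await this.enforceRateLimit();
      const { prompt, callback } = this.queue.shift();
      try {
        const response = await this.callGeminiAPI(prompt);
        callback(null, response);
      } catch (error) {
        callback(error, null);
      }
    }
    this.processing = false;
  }

  async enforceRateLimit() {
    let now = Date.now();
    this.requestTimes = this.requestTimes.filter(t => now - t < 60000);
    if (this.requestTimes.length >= this.maxRPM) {
      const oldestRequest = this.requestTimes[0];
      const waitTime = 60000 - (now - oldestRequest) + 100;
      await new Promise(resolve => setTimeout(resolve, waitTime));
      now = Date.now();
      this.requestTimes = this.requestTimes.filter(t => now - t < 60000);
    }
    // Record the actual send time, not the time before waiting
    this.requestTimes.push(now);
  }

  // Send one prompt to the model and return the generated text
  async callGeminiAPI(prompt) {
    const response = await this.ai.models.generateContent({
      model: this.model,
      contents: prompt,
    });
    return response.text;
  }
}
```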

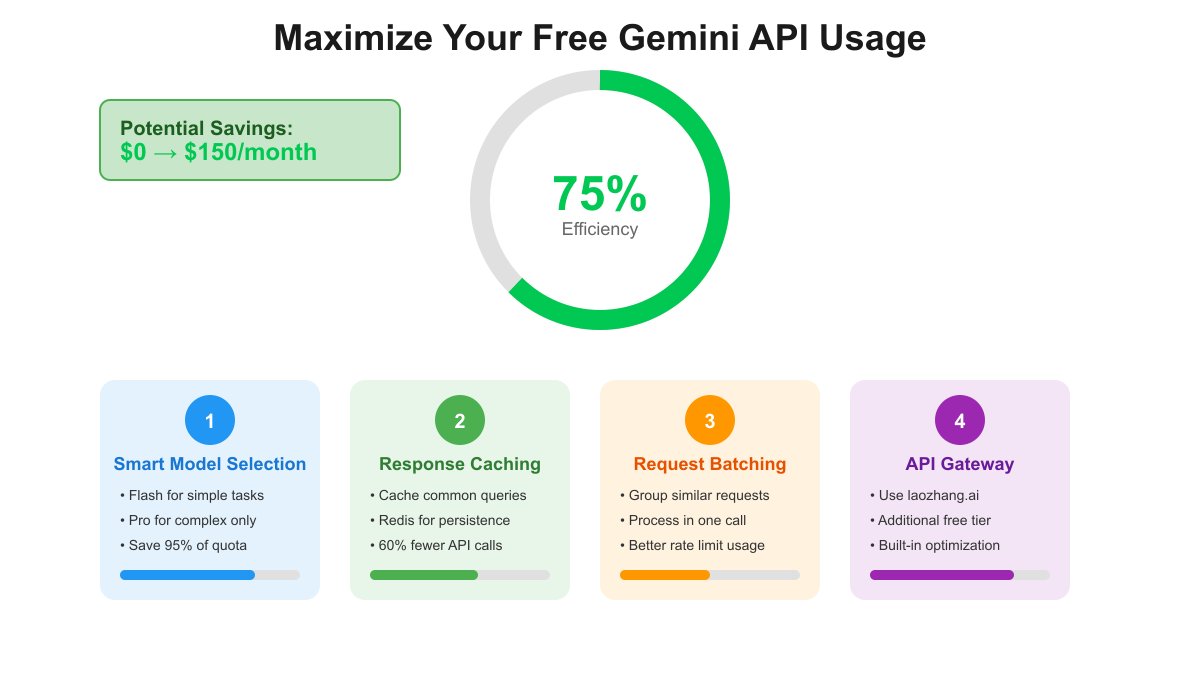

Intelligent caching implementation extends API Gemini free tier capabilities dramatically by avoiding redundant API calls. Beyond simple exact-match caching, semantic similarity matching identifies when new queries resemble previously cached responses. Using embedding models to compute query similarity, applications can serve cached responses for variations of common questions. This approach particularly benefits FAQ systems, documentation assistants, and any application with predictable query patterns.
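A minimal sketch of semantic caching follows. The `SemanticCache` class and the injected `embed` callable are illustrative assumptions; in a real application `embed` would call an embedding model, whereas the toy keyword-count embedding here exists only so the example runs on its own.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


class SemanticCache:
    def __init__(self, embed, threshold=0.9):
        self.embed = embed          # callable: str -> list[float] (assumed interface)
        self.threshold = threshold  # minimum similarity that counts as a hit
        self.entries = []           # list of (embedding, response) pairs

    def get(self, query):
        """Return the cached response for the most similar query, or None."""
        query_vec = self.embed(query)
        best_score, best_response = 0.0, None
        for vec, response in self.entries:
            score = cosine_similarity(query_vec, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None

    def put(self, query, response):
        self.entries.append((self.embed(query), response))


def toy_embed(text):
    """Demonstration-only embedding: counts of a few keywords."""
    words = text.lower().split()
    return [words.count(w) for w in ("reset", "password", "refund", "order")]


cache = SemanticCache(toy_embed)
cache.put("How do I reset my password", "Use the reset link on the login page.")
print(cache.get("reset password please"))   # hit: same keywords
print(cache.get("refund my order"))         # miss: unrelated query
```

The linear scan is fine for a few thousand entries; larger caches would typically use a vector index. The threshold is a tuning knob: too low and users receive answers to questions they did not ask.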

Model selection optimization ensures that each request uses the least expensive model capable of handling it, preserving scarce Pro quota for genuinely complex tasks. Automatic routing based on query analysis can cut Pro model usage sharply, by 90% or more in workloads dominated by simple queries. Simple classification logic examining prompt length, keyword presence, and task type can route requests effectively. Flash models handle the majority of queries well, while Pro capacity is reserved for multi-step reasoning, complex code generation, or nuanced analysis tasks.
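The routing logic described here might look like the following sketch. The keyword list, the length threshold, and the task type labels are illustrative assumptions to be tuned per application, and `gemini-2.5-pro` is assumed as the Pro counterpart of the `gemini-2.5-flash` model used earlier.

```python
FLASH_MODEL = "gemini-2.5-flash"   # model name used elsewhere in this article
PRO_MODEL = "gemini-2.5-pro"       # assumed name for the Pro model

# Hypothetical hints that a prompt needs deeper reasoning
COMPLEX_KEYWORDS = ("refactor", "architecture", "step by step", "trade-off", "debug")
COMPLEX_TASK_TYPES = ("multi_step_reasoning", "complex_code")


def choose_model(prompt, task_type=None, long_prompt_chars=2000):
    """Pick the cheapest model likely to handle the request."""
    if task_type in COMPLEX_TASK_TYPES:
        return PRO_MODEL
    if len(prompt) > long_prompt_chars:
        return PRO_MODEL
    text = prompt.lower()
    if any(keyword in text for keyword in COMPLEX_KEYWORDS):
        return PRO_MODEL
    return FLASH_MODEL


print(choose_model("What is the capital of France?"))
print(choose_model("Refactor this module step by step"))
```

Logging which branch fired for each request makes it possible to check later whether the heuristics send too much or too little traffic to the Pro model.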

API Gemini Free vs Paid: When to Upgrade

Understanding when to transition from API Gemini free to paid tiers ensures sustainable growth without premature costs or service disruptions. The decision involves more than simple request counting – it requires analyzing usage patterns, user growth trajectories, and business model alignment. Many successful applications operate indefinitely on free tiers through careful optimization, while others benefit from paid features even with modest usage.

Cost-benefit analysis for tier upgrades should consider the full spectrum of advantages beyond increased quotas. Paid tiers offer higher rate limits that eliminate queuing complexity, priority processing that reduces latency, and SLA guarantees that ensure reliability. For applications serving paying customers, the reliability and performance improvements often justify costs regardless of quota usage. The calculation involves comparing engineering time spent optimizing free tier usage against the simplicity of paid abundance.

Performance differences between free and paid tiers extend beyond raw request volumes. Paid tier users may experience lower latency, especially during peak usage periods when free tier requests can face deprioritization. Depending on the plan and platform, features such as dedicated endpoints, custom model fine-tuning, and enterprise support may become available. These capabilities enable use cases that free tier constraints rule out, such as sustained high-volume processing or latency-sensitive workloads that cannot tolerate queuing.

Upgrade triggers vary by application type but common patterns emerge across successful transitions. Applications should consider upgrading when daily active users exceed 500, when queue depths regularly create multi-minute delays, or when business requirements demand SLA guarantees. Revenue-generating applications often upgrade earlier to ensure customer satisfaction, while experimental projects may push free tier limits extensively. The key is monitoring metrics that indicate when constraints impact user experience or business objectives.

Building Real Apps with API Gemini Free

Real-world applications demonstrate that API Gemini free tier supports substantial production deployments when architected thoughtfully. These examples, drawn from actual implementations, showcase patterns and strategies that maximize free tier value while delivering excellent user experiences. The key insight is that constraints foster creativity, leading to more efficient and resilient applications.

Architecture patterns for free tier applications emphasize asynchronous processing, intelligent caching, and graceful degradation. A successful educational platform serves 2,000 daily active users entirely through free tier resources by implementing request batching for content generation, aggressive caching for common queries, and progressive enhancement that adds AI features as quota allows. The architecture separates critical path operations from AI enhancements, ensuring core functionality remains available even when hitting rate limits.

Graceful degradation strategies ensure applications remain useful even when exhausting free tier quotas. Rather than displaying errors, applications should implement fallback behaviors that provide value within constraints. This might involve serving cached responses with disclaimers, using simpler models for basic functionality, or queuing requests for delayed processing. A customer service chatbot implementation demonstrates this pattern by instantly serving cached responses for common questions while queuing complex queries for available API capacity.
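The fallback chain can be sketched as follows. `QuotaExceededError` is a hypothetical stand-in for whatever exception your client raises on an HTTP 429, and `call_model` is an injected callable, so the example makes no assumptions about a particular SDK.

```python
class QuotaExceededError(Exception):
    """Hypothetical error raised by call_model when the API returns HTTP 429."""


def answer_with_fallback(prompt, call_model, cache, pending_queue):
    """Try the model first; fall back to a cached answer, then to queuing."""
    try:
        text = call_model(prompt)
        cache[prompt] = text
        return {"status": "fresh", "text": text}
    except QuotaExceededError:
        if prompt in cache:
            note = "\n\n(Served from cache; may be out of date.)"
            return {"status": "cached", "text": cache[prompt] + note}
        # Nothing cached: acknowledge the request and process it later
        pending_queue.append(prompt)
        return {
            "status": "queued",
            "text": "Your request is queued and will be answered when capacity is available.",
        }
```

Returning a status alongside the text lets the interface decide how to present each case, for example by showing a banner for cached answers or a progress indicator for queued ones.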

Case studies reveal common success patterns across different application types. A code documentation generator processes 50,000 functions monthly by analyzing complexity before processing, using Flash models for simple functions and reserving Pro capacity for complex architectures. A content creation platform supports 300 writers by implementing collaborative caching where similar content requests share responses. A language learning application serves 1,000 daily users by pre-generating common lesson content during off-peak hours. These examples demonstrate that thoughtful architecture enables substantial applications within free tier constraints.

Progressive enhancement approaches build applications that scale naturally with available resources. Core features operate within guaranteed free tier limits while advanced capabilities activate when quota permits. This pattern works particularly well for freemium applications where basic users receive AI-enhanced features as available while premium users get priority access. The architecture supports smooth scaling from free tier to paid usage without requiring fundamental changes, merely configuration adjustments as business growth justifies increased costs.

API Gemini Free Rate Limit Management

Effective rate limit management transforms API Gemini free tier constraints from obstacles into architectural advantages that improve overall system design. Understanding quota mechanics, implementing proper controls, and monitoring usage patterns ensures applications maximize available resources while preventing service disruptions. The discipline required for free tier success translates directly to cost optimization when scaling to paid tiers.

Understanding quota mechanics requires recognizing that rate limits operate at multiple levels with different reset periods. Daily quotas reset at midnight Pacific time, creating predictable capacity planning windows. Per-minute limits are safest treated as rolling windows, so requests should be spread evenly rather than sent in bursts at minute boundaries. Quotas apply per project rather than per API key, so all keys in a project draw on the same allowance and multi-developer teams need to coordinate. These mechanics inform architectural decisions about request distribution and capacity allocation.

Implementing rate limiters that respect API Gemini free tier constraints while maximizing throughput requires more than fixed delays. Effective implementations track request timestamps, calculate available capacity, and distribute requests within each window. Token bucket algorithms allow short bursts up to the bucket capacity while holding the long-run average to the refill rate, whereas sliding window approaches enforce a strict cap on requests within any given window. The choice depends on application traffic patterns and on how strictly the upstream limit is enforced.
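As an illustration of the token bucket approach, here is a minimal single-threaded sketch. The class name, the default capacity, and the injectable `clock` parameter are choices made for this example rather than part of any SDK.

```python
import time


class TokenBucket:
    def __init__(self, rate_per_minute=15, capacity=5, clock=time.monotonic):
        self.rate = rate_per_minute / 60.0   # tokens added per second
        self.capacity = float(capacity)      # maximum burst size
        self.tokens = self.capacity          # start with a full bucket
        self.clock = clock
        self.last_refill = clock()

    def _refill(self):
        """Add tokens for the time elapsed since the last refill, up to capacity."""
        now = self.clock()
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last_refill = now

    def try_acquire(self):
        """Consume one token if available; return whether the request may proceed."""
        self._refill()
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

    def seconds_until_available(self):
        """How long to wait before the next token is available."""
        self._refill()
        if self.tokens >= 1:
            return 0.0
        return (1 - self.tokens) / self.rate
```

A typical caller checks `try_acquire()` and, when it returns False, sleeps for `seconds_until_available()` before retrying. Keep the capacity well below the per-minute limit: a full bucket plus a minute of refill admits up to `capacity + rate_per_minute` requests in one minute, which would exceed a hard cap if the capacity were set equal to the limit.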

Error handling strategies must gracefully manage inevitable rate limit encounters without degrading user experience. When receiving 429 errors, applications should implement exponential backoff with jitter to prevent thundering herd problems. User-facing interfaces should communicate delays transparently while offering alternatives like cached responses or queue positions. Logging rate limit errors with context enables identifying optimization opportunities and usage patterns that trigger limits.
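Exponential backoff with jitter can be sketched in a few lines. `RateLimitError` is a hypothetical placeholder for the exception your client raises on a 429 response, and the `sleep` and `rng` parameters exist only to make the function easy to test.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical stand-in for whatever your client raises on HTTP 429."""


def call_with_backoff(fn, max_attempts=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep, rng=random.random):
    """Call fn(), retrying on RateLimitError with exponentially growing waits."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of retries: let the caller decide what to do
            # "Full jitter": wait a random time between 0 and the current ceiling,
            # so that many clients do not all retry at the same instant
            ceiling = min(max_delay, base_delay * (2 ** attempt))
            sleep(rng() * ceiling)
```

With the defaults, the retry ceilings are 1, 2, 4, and 8 seconds. Only rate limit errors are retried; other failures propagate immediately so that genuine bugs are not masked by retries.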

Quota optimization tips drawn from production experience can dramatically extend free tier capabilities. Implement request deduplication to prevent processing identical queries multiple times. Use request batching to amortize overhead across multiple operations. Cache negative results to avoid repeated failed queries. Monitor usage patterns to identify peak periods and redistribute load where possible. These optimizations often reduce API calls by 50-70% without impacting functionality.
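Request deduplication and negative caching can be combined in one small wrapper, sketched below. The `DedupCache` class and the `(model, prompt) -> str` signature of `call_model` are assumptions for illustration; the example treats `ValueError` as a deterministic failure such as an invalid request.

```python
import hashlib


def request_key(model, prompt):
    """Stable key for a request; ignores case and whitespace differences."""
    normalized = " ".join(prompt.lower().split())
    return hashlib.sha256(f"{model}\n{normalized}".encode("utf-8")).hexdigest()


class DedupCache:
    def __init__(self, call_model):
        self.call_model = call_model   # callable: (model, prompt) -> str (assumed)
        self.results = {}              # key -> ("ok", text) or ("error", message)
        self.api_calls = 0             # number of calls that actually reached the API

    def generate(self, model, prompt):
        key = request_key(model, prompt)
        if key in self.results:
            status, value = self.results[key]
            if status == "error":
                raise ValueError(value)   # replay the cached failure
            return value
        self.api_calls += 1
        try:
            text = self.call_model(model, prompt)
        except ValueError as exc:
            # Negative caching: remember deterministic failures only.
            # Transient errors such as rate limits should not be cached.
            self.results[key] = ("error", str(exc))
            raise
        self.results[key] = ("ok", text)
        return text
```

Comparing `api_calls` with the total number of `generate` calls gives a direct measure of how many requests the cache is saving. A production version would also add expiry so that stale answers are eventually refreshed.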

Alternative Free Access to Gemini API

Beyond official channels, a vibrant ecosystem of alternative access methods has emerged around API Gemini free capabilities. These community-driven solutions, third-party platforms, and creative integrations expand access options while adding unique value. Understanding this ecosystem enables developers to find solutions perfectly matched to their specific needs and constraints.

Community resources represent the collaborative spirit of developers sharing solutions for common challenges. Open-source libraries extend official SDKs with features like automatic rate limit handling, response caching, and fallback mechanisms. Community-maintained prompt libraries share optimized queries that minimize token usage while maximizing response quality. Forums and Discord servers provide real-time support for implementation challenges. These resources dramatically accelerate development while avoiding common pitfalls.

Open-source wrappers around API Gemini free tier add substantial value through abstraction and enhancement. Popular projects provide unified interfaces across multiple AI providers, enabling easy switching or load balancing. Advanced wrappers implement features like semantic caching, automatic retries, and usage analytics. While requiring careful security vetting, quality open-source projects can provide enterprise-grade features without enterprise costs. The key is choosing actively maintained projects with strong communities.

API gateway services like laozhang.ai offer sophisticated solutions for managing multiple AI providers including Gemini. These gateways provide unified authentication, automatic failover between providers, and aggregated analytics across all AI usage. Many gateways offer their own free tiers that effectively extend Gemini quotas. Advanced features like request routing based on capability matching, cost optimization, and compliance logging add value beyond simple proxying. For applications using multiple AI services, gateways simplify integration while optimizing costs.

Hybrid approaches combining multiple free services create capabilities beyond any single provider’s free tier. By routing simple queries to Gemini Flash, complex reasoning to Pro models, and specialized tasks to other free AI services, applications can build comprehensive AI systems without costs. This approach requires sophisticated orchestration but enables feature sets that would otherwise require substantial paid subscriptions. Success depends on understanding each service’s strengths and designing architectures that leverage them optimally.

API Gemini Free Security Best Practices

Security considerations for API Gemini free tier extend beyond protecting API keys to encompass quota protection, user access control, and compliance requirements. Free tier constraints make security even more critical since quota exhaustion affects all users. Implementing comprehensive security practices ensures sustainable free tier usage while protecting against both malicious attacks and unintentional abuse.

Protecting free quota from abuse requires multi-layered defenses that identify and prevent various attack vectors. Rate limiting at the application level prevents individual users from exhausting shared quotas. CAPTCHA or proof-of-work challenges for anonymous users discourage automated abuse. Usage analytics identify unusual patterns that might indicate attacks or bugs. IP-based blocking handles persistent abusers. These measures ensure legitimate users maintain access while preventing quota exhaustion.
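Application-level limiting per user can be as simple as the in-memory counter sketched here. The class name and the default limit of 75 requests per user per day are illustrative assumptions; a deployment with several server processes would keep the counts in a shared store instead.

```python
from collections import defaultdict
from datetime import date


class PerUserDailyQuota:
    def __init__(self, per_user_limit=75, today=date.today):
        self.per_user_limit = per_user_limit  # max requests per user per day
        self.today = today                    # injectable for testing
        self.current_day = today()
        self.counts = defaultdict(int)

    def allow(self, user_id):
        """Return True and count the request if the user is under today's limit."""
        day = self.today()
        if day != self.current_day:
            # New day: reset every user's counter
            self.current_day = day
            self.counts.clear()
        if self.counts[user_id] >= self.per_user_limit:
            return False
        self.counts[user_id] += 1
        return True
```

Call `allow(user_id)` before each model request and return a friendly message when it is False. Note that this counter rolls over on the server's local date, which will not necessarily line up with the provider's own reset time.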

Key management strategies for API Gemini free tier must balance security with operational simplicity. Never embed API keys in client-side code or public repositories. Use environment variables for local development and secure secret management services for production. Implement key rotation schedules even for free tier keys to limit exposure windows. Monitor key usage patterns to identify potential compromises. Consider using separate keys for development, testing, and production to isolate impacts.

User access control becomes critical when multiple users share free tier quotas. Implement authentication to track individual usage and enforce fair-use policies. Create user tiers with different access levels based on contribution or payment. Queue priorities can ensure critical users maintain access during high-demand periods. Usage dashboards help users understand their consumption and encourage responsible use. These controls create sustainable multi-user applications within free tier constraints.

Compliance considerations don’t disappear with free tier usage. Applications must still handle personal data appropriately, respect privacy regulations, and maintain audit trails. Free tier data may be used for model improvement, requiring clear user disclosure. Some industries require data residency or processing guarantees unavailable in free tiers. Understanding these limitations early prevents architectural decisions that block scaling to compliant paid tiers when needed.

Maximizing Value from API Gemini Free Tier

Advanced optimization techniques push API Gemini free tier capabilities far beyond apparent limitations through sophisticated engineering and creative problem-solving. These methods, refined through production experience, demonstrate that free tier constraints often inspire innovations that benefit applications at any scale. The techniques range from algorithmic optimizations to architectural patterns that multiply effective capacity.

Advanced optimization techniques begin with prompt engineering that minimizes token usage while maximizing response quality. Semantic compression removes redundant information from prompts without losing intent. Template-based approaches reuse optimized prompt structures across similar queries. Response streaming with early termination stops generation once sufficient information is received. These techniques can reduce token consumption by 40-60% compared to naive implementations.

```python
# Advanced prompt optimization for API Gemini free
import re


class PromptOptimizer:
    def __init__(self):
        # name -> (regex pattern, format string). This template shape is an
        # illustrative assumption; adapt it to your own prompt structures.
        self.templates = {}
        self.compression_rules = []

    def compress_prompt(self, prompt):
        # Remove redundant whitespace
        compressed = ' '.join(prompt.split())
        # Apply semantic compression rules
        for pattern, replacement in self.compression_rules:
            compressed = compressed.replace(pattern, replacement)
        # Use templates for common patterns
        for template_name, template in self.templates.items():
            if self.matches_template(compressed, template):
                return self.apply_template(compressed, template)
        return compressed

    def matches_template(self, text, template):
        """Return True if the template's regex matches the text"""
        pattern, _ = template
        return re.search(pattern, text) is not None

    def apply_template(self, text, template):
        """Rewrite the text using the template's format string and regex groups"""
        pattern, fmt = template
        return fmt.format(*re.search(pattern, text).groups())

    def add_compression_rule(self, verbose, concise):
        """Add rule to replace verbose patterns with concise equivalents"""
        self.compression_rules.append((verbose, concise))

    def optimize_for_task(self, prompt, task_type):
        """Optimize prompt based on task requirements"""
        if task_type == 'simple_question':
            return f"Brief answer: {self.compress_prompt(prompt)}"
        elif task_type == 'code_generation':
            return f"Code only, no explanation: {self.compress_prompt(prompt)}"
        else:
            return self.compress_prompt(prompt)
```

Cost-saving patterns extend beyond individual optimizations to system-wide architectural decisions that fundamentally reduce AI resource consumption. Implement hierarchical processing where simple rules handle common cases before engaging AI. Use embedding-based routing to find similar previous queries without generation. Batch similar requests for processing together. Pre-generate common responses during off-peak hours. These patterns often eliminate 70-80% of potential API calls through intelligent system design.
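Hierarchical processing can be sketched as a three-step lookup: rules first, then cache, and only then the model. The rules and canned replies below are made-up examples, and `call_model` is an injected callable standing in for the real API call.

```python
import re

# Hypothetical rules: (compiled pattern, canned reply)
RULES = [
    (re.compile(r"\b(opening|business) hours\b", re.IGNORECASE),
     "We are open 9:00-17:00, Monday to Friday."),
    (re.compile(r"\breset\b.*\bpassword\b", re.IGNORECASE),
     "Use the 'Forgot password' link on the sign-in page."),
]


def answer(query, call_model, cache):
    """Return (text, source), where source is 'rule', 'cache', or 'model'."""
    # 1. Cheap deterministic rules handle the most common cases
    for pattern, reply in RULES:
        if pattern.search(query):
            return reply, "rule"
    # 2. Exact-match cache on a normalized form of the query
    key = " ".join(query.lower().split())
    if key in cache:
        return cache[key], "cache"
    # 3. Only now spend API quota
    text = call_model(query)
    cache[key] = text
    return text, "model"
```

Tracking how often each source is used shows how much traffic never reaches the API, which is the number that matters for staying inside the quota.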

Performance tuning for free tier applications requires different strategies than traditional optimization. Focus on perceived performance through immediate acknowledgment and progressive updates rather than raw speed. Implement predictive prefetching for likely next queries. Use WebSocket connections for responsive streaming. Cache partial results for incremental rendering. These techniques create responsive user experiences despite underlying API constraints.

Scaling strategies prepare applications for growth while maximizing current free tier value. Scale across additional free tier accounts or projects only where the provider’s terms of service permit it, since spreading load across accounts to get around quotas may violate them. Implement feature flags that gracefully enable paid tier features. Build cost models that predict when upgrades become economical. Create migration paths that preserve application state during tier transitions. These preparations ensure smooth scaling when success demands resources beyond free tier limits.

Future of API Gemini Free Access

The future of API Gemini free access looks increasingly bright as Google doubles down on democratizing AI access while building sustainable business models. Understanding roadmap directions, ecosystem developments, and long-term viability helps developers make informed decisions about platform investments. The trajectory suggests continued expansion of free tier capabilities alongside clear paths to paid growth.

Roadmap insights gathered from Google announcements and development patterns indicate continued investment in free tier capabilities. Model efficiency improvements mean future versions will deliver better performance within the same quota constraints. Feature parity between free and paid tiers will likely continue, with differentiation primarily through volume and support rather than capabilities. Integration with other Google services suggests unified free tier quotas that provide more flexibility in resource allocation.

Community developments around API Gemini free access accelerate innovation beyond Google’s direct efforts. Open-source projects increasingly provide production-grade tooling for free tier optimization. Educational institutions adopt Gemini as a teaching platform, creating skilled developers familiar with the ecosystem. Startups build businesses entirely on free tier foundations, proving the model’s viability. These community contributions create a virtuous cycle that benefits all participants.

Ecosystem growth indicators suggest API Gemini free tier will remain viable for substantial applications indefinitely. The number of applications successfully operating within free tier constraints continues growing. Tool quality improves as the community matures. Integration options expand as more platforms provide Gemini connectivity. Job market demand for Gemini expertise increases. These factors create confidence in long-term platform investment.

Long-term viability of building on API Gemini free tier depends on Google’s strategic commitment to developer ecosystem growth. Current evidence strongly supports continued free tier availability and expansion. The business model of converting successful free tier applications to paid customers proves sustainable. Competition from other providers ensures Google must maintain attractive free options. Developers can confidently build on API Gemini free tier while preparing for eventual paid scaling when success demands it.