Claude 3.7 Sonnet represents a breakthrough in AI reasoning capabilities, offering hybrid thinking modes and exceptional coding abilities. However, Anthropic’s strict rate limitations can severely impact your productivity, especially for enterprise applications or intensive development work. If you’ve hit the frustrating “rate limit exceeded” wall, this comprehensive guide reveals proven methods to bypass these restrictions and maintain uninterrupted access to Claude 3.7’s powerful capabilities.

🚀 Quick Solution Preview

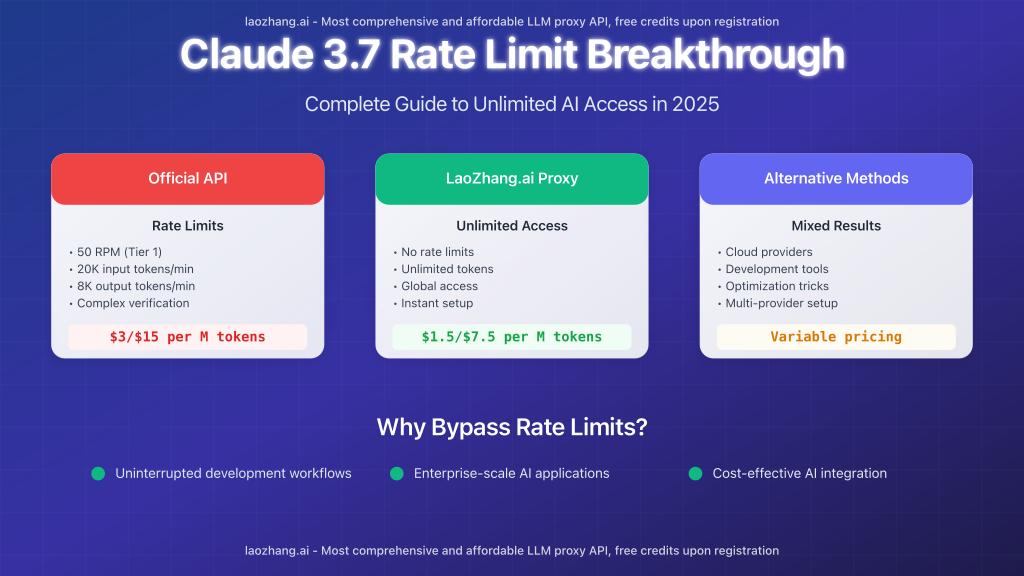

- LaoZhang.ai API: Unlimited Claude 3.7 access with 50% cost savings

- Rate Limit Optimization: Advanced techniques to maximize official quota

- Alternative Access: Multiple backup methods for continuous operation

Understanding Claude 3.7 Rate Limitations

Before exploring bypass methods, it’s crucial to understand exactly what limitations you’re facing. Anthropic implements multiple layers of restrictions that can impact your workflow:

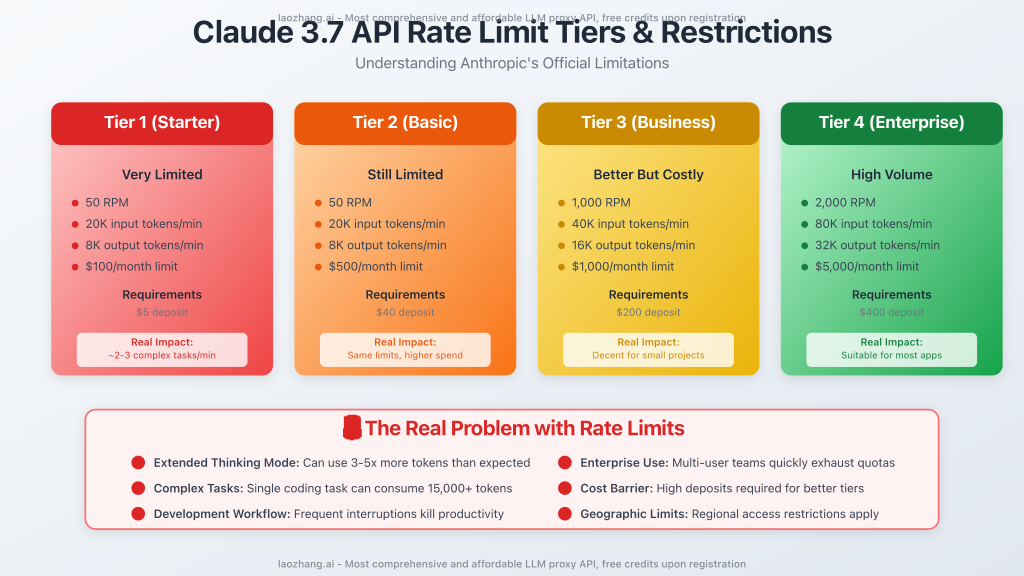

Official Rate Limit Structure

| Tier Level | Requests per Minute | Input Tokens per Minute | Output Tokens per Minute | Monthly Spend Limit |

|---|---|---|---|---|

| Tier 1 | 50 | 20,000 | 8,000 | $100 |

| Tier 2 | 50 | 20,000 | 8,000 | $500 |

| Tier 3 | 1,000 | 40,000 | 16,000 | $1,000 |

| Tier 4 | 2,000 | 80,000 | 32,000 | $5,000 |

⚠️ Real-World Impact

A single complex coding task can consume 15,000+ tokens, meaning Tier 1 users might only complete 2-3 substantial requests per minute. Extended thinking mode can increase token consumption by 3-5x, making these limits even more restrictive.

Method 1: LaoZhang.ai Proxy Service (Recommended)

The most effective solution for bypassing Claude 3.7 rate limits is using a professional API proxy service. LaoZhang.ai stands out as the premier choice for developers and enterprises seeking unrestricted access to Claude 3.7’s capabilities.

Why LaoZhang.ai Excels

🚀 No Rate Limits

Unlimited requests per minute with enterprise-grade infrastructure

💰 50% Cost Savings

$1.50 input / $7.50 output per million tokens vs Anthropic’s $3/$15

🌍 Global Access

No regional restrictions or VPN requirements

⚡ Instant Setup

Get API key in minutes, no business verification needed

Implementation Guide

Step 1: Register and Get API Key

Visit LaoZhang.ai Registration and create your account. New users receive free credits to test the service immediately.

Step 2: Replace Your API Endpoint

Simply update your existing Claude API calls to use LaoZhang.ai’s endpoint:

import requests

def call_claude_37_unlimited(prompt, max_tokens=4000):

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer YOUR_LAOZHANG_API_KEY"

}

data = {

"model": "claude-3-7-sonnet",

"messages": [

{"role": "user", "content": prompt}

],

"max_tokens": max_tokens,

"temperature": 0.7,

"stream": False

}

response = requests.post(

"https://api.laozhang.ai/v1/chat/completions",

headers=headers,

json=data

)

return response.json()["choices"][0]["message"]["content"]

# Example usage - no rate limits!

result = call_claude_37_unlimited(

"Generate a complete React application with authentication,

routing, and database integration. Include all necessary

components and explain the architecture."

)

print(result)

Step 3: Optimize for Extended Thinking

Unlike Anthropic’s API, LaoZhang.ai allows unlimited use of Claude’s extended thinking mode:

const axios = require('axios');

async function enhancedClaudeCall(prompt, thinking_budget = 10000) {

const response = await axios({

method: 'post',

url: 'https://api.laozhang.ai/v1/chat/completions',

headers: {

'Content-Type': 'application/json',

'Authorization': `Bearer YOUR_LAOZHANG_API_KEY`

},

data: {

model: 'claude-3-7-sonnet',

messages: [

{

role: 'system',

content: 'Take your time to think through this problem step by step.'

},

{ role: 'user', content: prompt }

],

max_tokens: 8000,

max_thinking_tokens: thinking_budget,

temperature: 0.3

}

});

return response.data.choices[0].message.content;

}

// Complex reasoning with unlimited thinking tokens

const result = await enhancedClaudeCall(

"Design a scalable microservices architecture for a fintech application handling 1M+ transactions daily. Include security considerations, data consistency strategies, and monitoring solutions."

);

Method 2: Rate Limit Optimization Strategies

For users who prefer to stay within official channels while maximizing their quota, these advanced optimization techniques can significantly extend your usage capabilities:

Token Efficiency Techniques

1. Prompt Caching Implementation

Cache frequently used system prompts to reduce input token consumption by up to 90%:

def create_cached_prompt():

return {

"role": "system",

"content": "You are an expert software architect...",

"cache_control": {"type": "ephemeral"}

}

def optimized_request(user_query):

return {

"model": "claude-3-7-sonnet",

"messages": [

create_cached_prompt(), # Cached, minimal token cost

{"role": "user", "content": user_query}

]

}

2. Batch Processing

Combine multiple queries into single requests to maximize token efficiency:

def batch_queries(questions):

combined_prompt = f"""

Please answer the following {len(questions)} questions:

{chr(10).join(f"{i+1}. {q}" for i, q in enumerate(questions))}

Format your response as:

1. [Answer to question 1]

2. [Answer to question 2]

...

"""

return combined_prompt

3. Context Window Management

Implement smart context truncation to stay within token limits while preserving essential information:

def smart_context_management(conversation_history, new_message, max_tokens=180000):

# Keep first system message and last N messages that fit in budget

essential_context = conversation_history[0] # System prompt

recent_context = []

token_count = count_tokens(essential_context) + count_tokens(new_message)

for message in reversed(conversation_history[1:]):

message_tokens = count_tokens(message)

if token_count + message_tokens < max_tokens:

recent_context.insert(0, message)

token_count += message_tokens

else:

break

return [essential_context] + recent_context + [new_message]

Request Pattern Optimization

💡 Advanced Tip: Token Bucket Understanding

Anthropic uses a token bucket algorithm. Instead of waiting for full quota reset, you can make smaller requests more frequently. For example, instead of one 20,000-token request per minute, make four 5,000-token requests every 15 seconds.

Method 3: Alternative Access Channels

Several legitimate alternative channels provide access to Claude 3.7 with different rate limit structures:

Cloud Provider APIs

Amazon Bedrock

- Different rate limit structure than direct Anthropic API

- Enterprise-grade infrastructure

- Integrated AWS billing and monitoring

Google Cloud Vertex AI

- Enhanced enterprise features

- Custom quota arrangements available

- Integrated with Google Cloud ecosystem

Development Environment Integration

Tools like Cursor IDE, Cline, and other AI-powered development environments often have their own Claude 3.7 allocations:

# Example: Using Cursor with unlimited Claude access

curl -X POST "https://api.cursor.sh/v1/chat" \

-H "Authorization: Bearer YOUR_CURSOR_API_KEY" \

-H "Content-Type: application/json" \

-d '{

"model": "claude-3.7-sonnet",

"messages": [{"role": "user", "content": "Your complex query here"}],

"stream": true

}'

Enterprise Solutions and Scaling Strategies

For organizations requiring massive Claude 3.7 access, combining multiple strategies provides the most robust solution:

Multi-Provider Architecture

class MultiProviderClaudeClient:

def __init__(self):

self.providers = [

{'name': 'laozhang', 'endpoint': 'https://api.laozhang.ai/v1/chat/completions', 'priority': 1},

{'name': 'anthropic', 'endpoint': 'https://api.anthropic.com/v1/messages', 'priority': 2},

{'name': 'bedrock', 'endpoint': 'https://bedrock-runtime.us-east-1.amazonaws.com', 'priority': 3}

]

async def intelligent_routing(self, prompt, complexity_score):

if complexity_score > 8: # Complex tasks

return await self.call_provider('laozhang', prompt)

elif complexity_score > 5: # Medium tasks

return await self.try_with_fallback(prompt)

else: # Simple tasks

return await self.call_provider('anthropic', prompt)

async def try_with_fallback(self, prompt):

for provider in sorted(self.providers, key=lambda x: x['priority']):

try:

return await self.call_provider(provider['name'], prompt)

except RateLimitError:

continue

raise Exception("All providers exhausted")

Load Balancing and Queue Management

🏗️ Recommended Architecture

- Primary Route: LaoZhang.ai for unlimited access

- Backup Route: Optimized official API usage

- Emergency Route: Cloud provider APIs

- Queue System: Redis-based request queuing for rate limit management

Cost Analysis and ROI Considerations

Understanding the financial impact of different rate limit bypass methods helps make informed decisions:

| Method | Setup Cost | Per-Token Cost | Rate Limit | ROI Score |

|---|---|---|---|---|

| LaoZhang.ai | $0 | 50% of official | Unlimited | ⭐⭐⭐⭐⭐ |

| Official Optimized | Development Time | Official Rate | Official Limits | ⭐⭐⭐ |

| AWS Bedrock | AWS Setup | Premium Pricing | Enterprise | ⭐⭐⭐⭐ |

| Multi-Provider | High (Architecture) | Mixed | Very High | ⭐⭐⭐⭐⭐ |

Troubleshooting Common Issues

Q: How do I handle “anthropic-ratelimit-requests-reset” headers?

A: These headers indicate when your official quota resets. Use this information to schedule batch operations:

def parse_reset_time(headers):

reset_time = headers.get('anthropic-ratelimit-requests-reset')

if reset_time:

return datetime.fromisoformat(reset_time.replace('Z', '+00:00'))

return None

def schedule_next_request(reset_time):

if reset_time:

wait_seconds = (reset_time - datetime.now(timezone.utc)).total_seconds()

return max(0, wait_seconds)

return 60 # Default 1-minute wait

Q: What’s the difference between input and output token limits?

A: Input tokens are your prompts and context, while output tokens are Claude’s responses including thinking tokens. Both have separate limits that reset independently.

Q: Can I use multiple API keys to increase limits?

A: Anthropic tracks usage at the organization level, so multiple keys under the same account won’t help. However, LaoZhang.ai allows unlimited scaling with a single account.

Future-Proofing Your Claude Integration

As AI technology evolves rapidly, building flexible integration strategies ensures long-term success:

🔮 Strategic Recommendations

- API Abstraction: Build model-agnostic wrappers for easy provider switching

- Monitoring Systems: Implement comprehensive usage tracking and alerting

- Hybrid Approaches: Combine multiple access methods for maximum reliability

- Cost Optimization: Regular review of usage patterns and provider pricing

✅ Implementation Checklist

- Set up LaoZhang.ai account and test unlimited access

- Implement caching for frequently used prompts

- Build request queue system for official API optimization

- Monitor usage patterns and costs across all providers

- Establish fallback procedures for service interruptions

Conclusion

Breaking through Claude 3.7 rate limits doesn’t require complex workarounds or expensive enterprise contracts. By leveraging services like LaoZhang.ai, implementing smart optimization strategies, and building robust fallback systems, you can ensure uninterrupted access to Claude’s powerful capabilities while often reducing costs.

The combination of unlimited access through proxy services and optimized official API usage creates a resilient architecture that scales with your needs. Whether you’re building AI applications, conducting research, or integrating Claude into enterprise workflows, these strategies provide the foundation for success.

🚀 Start Breaking Through Rate Limits Today

Don’t let rate limits slow down your AI projects. Register for LaoZhang.ai and get immediate access to unlimited Claude 3.7 capabilities with 50% cost savings.

New users receive free credits to test the service risk-free!

laozhang.ai – Most comprehensive and affordable LLM proxy API, free credits upon registration