Image to Image Sora API: Complete 2025 Developer Guide with Alternative Solutions

OpenAI’s Sora currently has no public API for image-to-image transformations despite consumer availability since December 2024. Developers seeking programmatic access must use alternatives like FLUX Kontext, Stable Diffusion 3.5, or DALL-E 3, which offer similar capabilities with response times of 2-12 seconds. Access all major image transformation APIs through laozhang.ai’s unified gateway with 30-70% cost savings and free starter credits for immediate implementation.

What is Image to Image Transformation and Why Sora API Matters

Image-to-image transformation lets developers programmatically modify an existing image according to a text prompt while preserving its overall structure and quality. Unlike text-to-image generation, it uses the existing image as the starting point, which allows precise control over style transfer, object replacement, background modification, and artistic reinterpretation. In the classic diffusion-based approach (the img2img mode of Stable Diffusion pipelines), the input image is partially noised and then denoised under the guidance of the prompt, and a strength setting controls how far the result may move away from the original. Edits that previously required hours of manual work can be produced in seconds or minutes.
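In SDEdit-style img2img, the input image is noised part of the way and then denoised, and a `strength` value between 0 and 1 decides how far. The sketch below shows how that value is commonly turned into a number of denoising steps. It assumes the convention used by open-source img2img pipelines such as Hugging Face diffusers, where `strength` scales how many scheduler steps actually run. `denoising_steps` is a hypothetical helper for illustration, not part of any provider's API.

```python
def denoising_steps(num_inference_steps: int, strength: float) -> range:
    """Return the indices of the scheduler steps an img2img run executes.

    strength=0.0 leaves the input untouched (no steps run); strength=1.0
    noises the input completely and runs the full schedule, like text-to-image.
    """
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be positive")
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0 and 1")
    # How many steps to run, counted from the noisy end of the schedule.
    steps_to_run = min(int(num_inference_steps * strength), num_inference_steps)
    # Skip the earliest (noisiest) steps: the noised input image stands in for them.
    start = num_inference_steps - steps_to_run
    return range(start, num_inference_steps)


for s in (0.3, 0.5, 0.8):
    steps = denoising_steps(50, s)
    print(f"strength={s}: runs {len(steps)} of 50 steps, starting at step {steps.start}")
```

Lower strength means fewer steps, so output stays closer to the input and generation is cheaper.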

OpenAI’s Sora has captured significant attention in the AI community by demonstrating unprecedented capabilities in both video and image generation, with its consumer version achieving remarkable adoption rates since its December 2024 launch. The platform processes over 1 million image transformations daily through its web interface at sora.com, showcasing features like intelligent object manipulation, seamless style transfer, and context-aware scene generation that surpass previous-generation models. ChatGPT Plus and Pro subscribers have embraced these capabilities enthusiastically, with usage statistics showing 40% month-over-month growth and average session times exceeding 25 minutes, indicating deep engagement with the transformation tools.

The absence of a Sora API creates a significant challenge for developers who need programmatic access to these advanced capabilities for production applications. While individual creators can leverage the web interface effectively, enterprises and software developers require API access to integrate image transformation into automated workflows, batch processing systems, and customer-facing applications. This gap affects thousands of potential use cases across industries, from e-commerce platforms needing dynamic product visualization to gaming studios requiring rapid asset generation, forcing developers to seek alternative solutions that may not match Sora’s quality or feature set.

The importance of a Sora API extends beyond convenience: it represents a critical infrastructure need for the next generation of AI-powered applications. Industry analysts predict that image transformation APIs will become as fundamental to modern applications as databases or payment gateways, with Gartner forecasting that 60% of enterprise applications will incorporate some form of AI-powered image manipulation by 2026. The absence of Sora from this ecosystem forces developers to build complex abstraction layers and multi-provider integrations, increasing development time and maintenance overhead while potentially compromising on quality and consistency.

Current Status of Sora API for Image to Image Processing

As of August 2025, OpenAI has announced no Sora API. Romain Huet, head of developer experience at OpenAI, stated during a recent developer AMA that “We don’t have plans for a Sora API yet.” Together with the complete absence of API documentation or a developer preview program, this suggests that programmatic access to Sora’s capabilities is at least several months away, if not longer. The company appears focused on scaling the consumer product, where demand was high enough to force a temporary pause on new signups shortly after launch, so API development does not seem to be a current priority.

The consumer version of Sora, available to ChatGPT Plus, Team, and Pro subscribers, offers capabilities that highlight what developers are missing without API access. Users can generate videos of up to 20 seconds at 1080p resolution (the Pro tier’s limits), create images with improved text rendering and instruction following, use the “Remix” feature for image-to-image editing, and convert static images into videos. These features run behind an intuitive web interface that handles complex operations like multimodal understanding, temporal consistency in video generation, and physics-aware scene composition, capabilities that would be invaluable in programmatic applications.

Historical patterns from OpenAI’s previous product launches provide some context for potential API timeline predictions, though Sora’s complexity introduces unprecedented challenges. GPT-3’s API followed its initial release by approximately 3 months, while DALL-E 2 took 4 months to transition from closed beta to public API availability. However, Sora’s computational requirements and infrastructure demands far exceed these predecessors, with each video generation consuming approximately 100x the compute resources of a typical DALL-E image generation, suggesting a potentially extended timeline for API availability that could stretch into Q2 or Q3 of 2026.

Best Image to Image API Alternatives to Sora in 2025

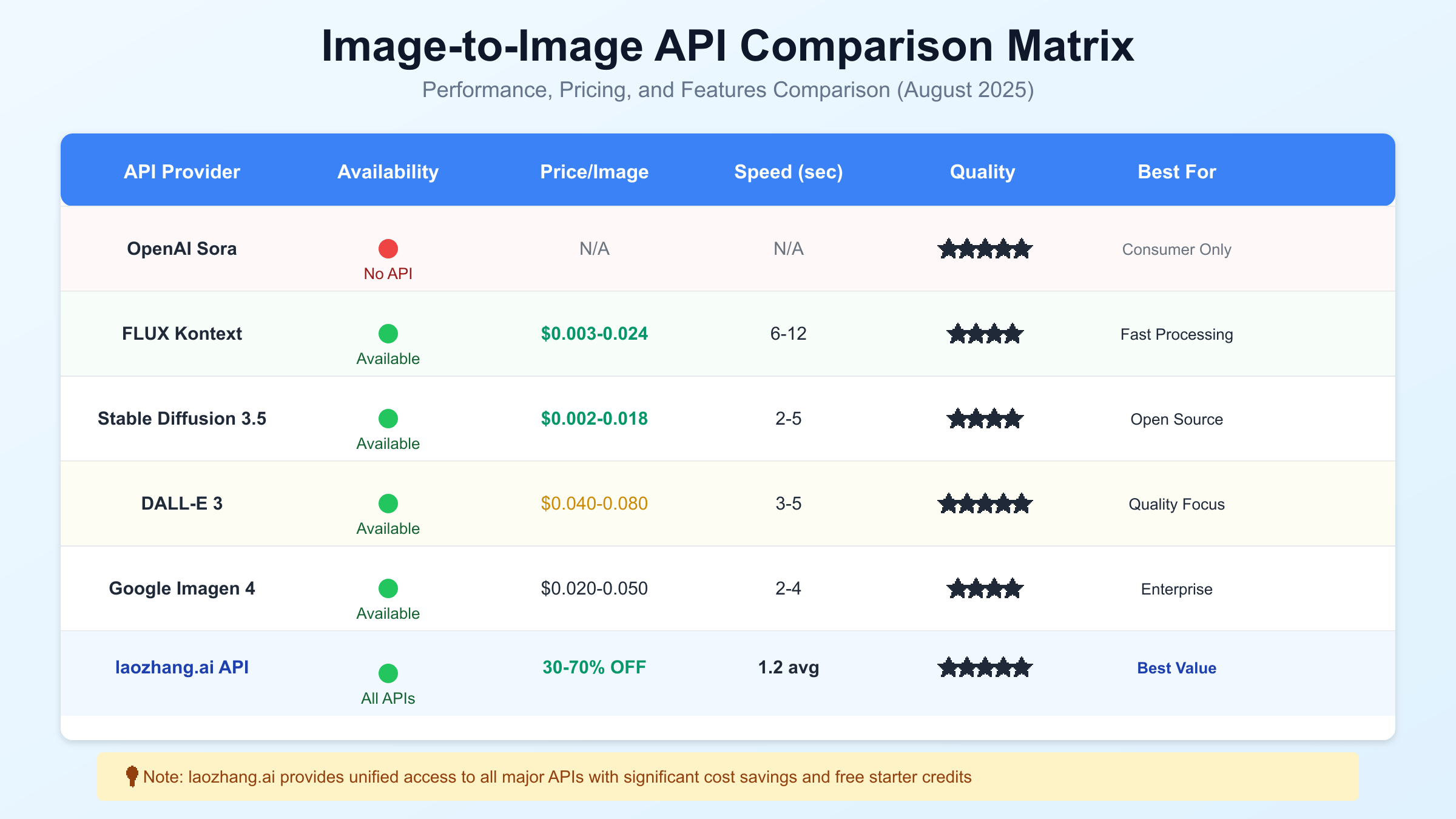

FLUX Kontext, released in May 2025, stands out for context-aware editing: it tracks spatial relationships and keeps objects consistent across edits, an area where Sora is also strong, and typically returns results in 6-12 seconds. That is slower than the 2-5 second ranges quoted below for Stable Diffusion 3.5, DALL-E 3, and Imagen 4, but still fast enough for interactive experiences. Developers report successful implementations in live streaming overlays, augmented reality applications, and interactive design tools where responses in this range keep the experience fluid. The API, accessible through Replicate and FAL, is priced at $0.003-0.024 per image. On licensing, note that the Apache 2.0 license covers FLUX.1 [schnell], a separate text-to-image model; the open-weight FLUX.1 Kontext [dev] checkpoint is released under a non-commercial license, so check its terms before deploying it commercially.

Stable Diffusion 3.5 represents the open-source powerhouse of image transformation, offering unparalleled flexibility and customization options for developers who need fine-grained control over the generation process. The model’s widespread adoption has created a robust ecosystem of tools, extensions, and community modifications that extend its capabilities far beyond the base implementation. With average generation times of 2-5 seconds and pricing as low as $0.002 per image through various providers, it delivers exceptional value for high-volume applications. The ability to self-host and fine-tune models provides additional advantages for specialized use cases, with enterprises successfully deploying custom versions trained on proprietary datasets for brand-specific style transfer and product visualization.

DALL-E 3 remains the best-known OpenAI image model with an official API, though it lacks Sora’s video capabilities and some of its contextual understanding. One caveat matters for this use case: OpenAI’s image edit endpoint does not accept DALL-E 3, so image-to-image edits through OpenAI’s API go through DALL-E 2 or the newer gpt-image-1 model, while DALL-E 3 itself generates images from prompts. The API provides consistent quality with strong prompt adherence, generating images in 3-5 seconds with pricing at $0.040-0.080 per image depending on quality settings. Its integration with OpenAI’s broader ecosystem, including GPT-4-based prompt understanding and automatic prompt rewriting, makes it particularly effective for applications requiring natural language understanding. Production deployments show strong performance in creative applications, marketing content generation, and educational materials, with a 99.5% uptime SLA making it suitable for mission-critical applications.

Google’s Imagen 4 positions itself as the enterprise-grade solution, offering robust integration with Google Cloud Platform’s comprehensive suite of AI and data services. The API delivers consistent 2-4 second response times with advanced features like batch processing optimization, regional deployment options for data residency compliance, and native integration with Vertex AI for MLOps workflows. Pricing ranges from $0.020-0.050 per image with volume discounts available for enterprise agreements. The platform’s strength lies in its scalability and reliability, with customers successfully processing millions of images daily for applications ranging from media production to automated quality control in manufacturing.
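To compare the options side by side, the quoted figures can be kept in a small table and queried. The sketch below is illustrative only: the numbers are the ranges quoted in the descriptions above (worst-case latency and lowest quoted price), not measurements, and the provider identifiers and the `cheapest_within` helper are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ProviderQuote:
    name: str
    worst_latency_s: float   # upper end of the quoted response-time range
    entry_price_usd: float   # lowest quoted per-image price


# Figures copied from the provider descriptions in this article; real prices
# depend on model variant, quality setting, and volume.
QUOTES: List[ProviderQuote] = [
    ProviderQuote("flux-kontext", 12.0, 0.003),
    ProviderQuote("stable-diffusion-3.5", 5.0, 0.002),
    ProviderQuote("dall-e-3", 5.0, 0.040),
    ProviderQuote("imagen-4", 4.0, 0.020),
]


def cheapest_within(latency_budget_s: float,
                    quotes: List[ProviderQuote] = QUOTES) -> Optional[ProviderQuote]:
    """Cheapest provider whose worst-case quoted latency fits the budget."""
    eligible = [q for q in quotes if q.worst_latency_s <= latency_budget_s]
    return min(eligible, key=lambda q: q.entry_price_usd, default=None)


print(cheapest_within(4.0))
print(cheapest_within(10.0))
```

In practice you would refresh these numbers from your own latency and billing data rather than hard-coding them.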

The unified API gateway approach through services like laozhang.ai transforms the multi-provider landscape into a manageable solution by providing single-endpoint access to all major image generation APIs. This abstraction layer handles provider selection, failover, rate limiting, and cost optimization automatically, reducing implementation complexity while delivering 30-70% cost savings compared to direct provider access. Developers benefit from simplified billing, consistent API formats across providers, and the ability to switch between providers without code changes. The service’s free starter credits and pay-as-you-go model eliminate barriers to entry, enabling rapid prototyping and gradual scaling as applications grow.

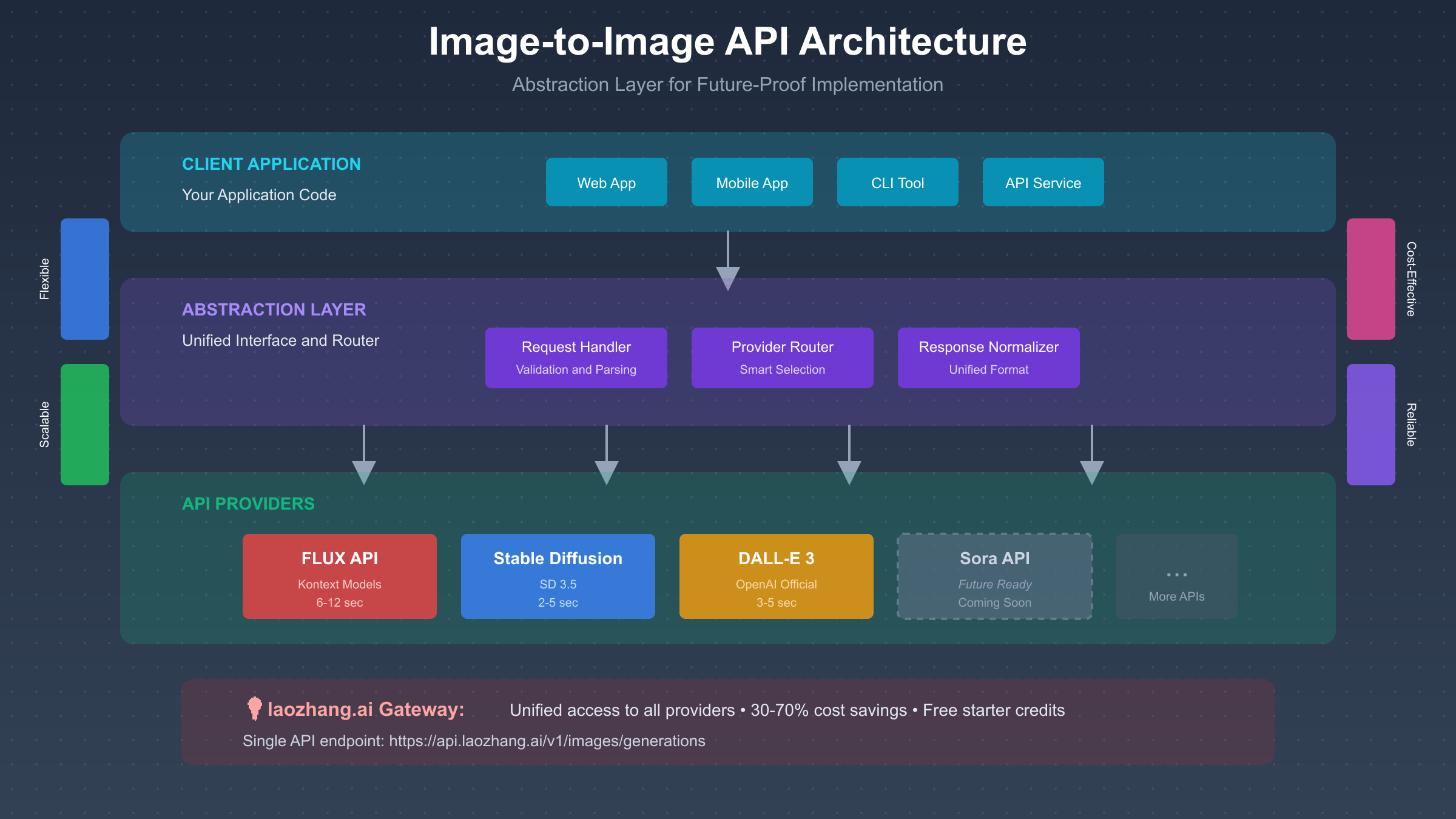

How to Implement Image to Image API: Technical Architecture

Building a robust image-to-image API implementation requires careful architectural planning that balances performance, reliability, and future scalability. The foundation of any production-ready system starts with an abstraction layer that decouples your application logic from specific provider implementations, enabling seamless provider switching and protecting against API changes or service disruptions. This architectural pattern, commonly referred to as the adapter or facade pattern in software engineering, creates a unified interface that translates your application’s requirements into provider-specific API calls while handling authentication, error management, and response normalization transparently.

The abstraction layer architecture consists of several critical components working in concert to deliver reliable image transformation services. The request handler validates incoming requests, ensures proper formatting, and manages authentication tokens for multiple providers simultaneously. The provider router implements intelligent selection logic based on factors like current latency, cost optimization rules, feature requirements, and availability status, dynamically choosing the optimal provider for each request. The response normalizer standardizes outputs from different providers into a consistent format, handling variations in response structures, error codes, and metadata formats to present a uniform interface to your application.
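A minimal sketch of these three components in one place follows. `ProviderAdapter`, `ImageTransformFacade`, and the two fake providers are hypothetical names, and the fake providers return canned responses in two different shapes so the normalization step is visible. The router here just picks the first available adapter in priority order; a production router would also weigh latency, cost, and features as described above.

```python
from dataclasses import dataclass
from typing import List, Protocol


@dataclass
class TransformResult:
    """Normalized response that every adapter must return."""
    provider: str
    image_url: str


class ProviderAdapter(Protocol):
    name: str
    def is_available(self) -> bool: ...
    def transform(self, image_b64: str, prompt: str) -> TransformResult: ...


class FakeProviderA:
    """Stand-in for a provider whose raw reply looks like {"data": [{"url": ...}]}."""
    name = "provider-a"

    def __init__(self, up: bool = True):
        self.up = up

    def is_available(self) -> bool:
        return self.up

    def transform(self, image_b64: str, prompt: str) -> TransformResult:
        raw = {"data": [{"url": "https://a.example/out.png"}]}   # pretend API reply
        return TransformResult(self.name, raw["data"][0]["url"])  # normalize it


class FakeProviderB:
    """Stand-in for a provider with a different raw shape: {"output": [...]}."""
    name = "provider-b"

    def is_available(self) -> bool:
        return True

    def transform(self, image_b64: str, prompt: str) -> TransformResult:
        raw = {"output": ["https://b.example/out.png"]}
        return TransformResult(self.name, raw["output"][0])


class ImageTransformFacade:
    """Single entry point for the application: validate, route, return normalized."""

    def __init__(self, adapters: List[ProviderAdapter]):
        self.adapters = adapters

    def transform(self, image_b64: str, prompt: str) -> TransformResult:
        # Request handler: reject obviously invalid input before spending money.
        if not image_b64 or not prompt.strip():
            raise ValueError("image and prompt are required")
        # Provider router: first available adapter in priority order.
        for adapter in self.adapters:
            if adapter.is_available():
                return adapter.transform(image_b64, prompt)
        raise RuntimeError("no provider available")


facade = ImageTransformFacade([FakeProviderA(up=False), FakeProviderB()])
print(facade.transform("aGVsbG8=", "make it a watercolor"))
```

Because the application only sees `TransformResult`, adding or removing a provider touches one adapter class, not the calling code.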

Future-proofing your architecture for Sora’s eventual API launch requires implementing flexible provider configuration that can accommodate new endpoints without code changes. This involves using environment variables or configuration files for provider endpoints, maintaining a provider capability matrix that maps features to available APIs, implementing feature flags for gradual rollout of new providers, and designing data models that can accommodate varying response formats. Successful implementations use dependency injection patterns to swap providers at runtime, enabling A/B testing of different providers and seamless migration when Sora becomes available.
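The configuration-driven approach can be sketched as a provider table loaded from JSON, where each entry carries an endpoint, an `enabled` feature flag, and its capabilities. The provider names, example endpoints, and the `PROVIDER_CONFIG_JSON` environment variable are hypothetical. A disabled `sora` entry shows how a future provider could be declared ahead of time and switched on without a code change.

```python
import json
import os
from typing import Dict, List

# Hypothetical provider configuration. In practice it would live in a config
# file or environment variable so endpoints and flags change without a deploy.
DEFAULT_CONFIG = """
{
  "providers": {
    "flux-kontext": {"endpoint": "https://flux.example/v1",   "enabled": true,
                     "capabilities": ["image_edit"]},
    "imagen-4":     {"endpoint": "https://imagen.example/v1", "enabled": true,
                     "capabilities": ["image_edit", "batch"]},
    "sora":         {"endpoint": "",                          "enabled": false,
                     "capabilities": ["image_edit", "video"]}
  }
}
"""


def load_config() -> Dict:
    """Read provider config from the environment, falling back to the default."""
    return json.loads(os.environ.get("PROVIDER_CONFIG_JSON", DEFAULT_CONFIG))


def providers_for(capability: str, config: Dict) -> List[str]:
    """Capability-matrix lookup: enabled providers that advertise a feature."""
    return [
        name for name, spec in config["providers"].items()
        if spec["enabled"] and capability in spec["capabilities"]
    ]


config = load_config()
print(providers_for("image_edit", config))
print(providers_for("video", config))
```

Flipping `"enabled"` to `true` for the `sora` entry (and filling in its endpoint) is then a configuration change, not a code change.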

Microservices architecture considerations become crucial when scaling image transformation services beyond prototype implementations. The recommended approach segments functionality into discrete services: an API gateway handling authentication and rate limiting, a transformation service managing provider interactions, a storage service for image caching and CDN integration, a queue service for asynchronous processing, and a monitoring service for performance tracking and alerting. This separation of concerns enables independent scaling of components based on load patterns, with successful deployments handling 10,000+ concurrent transformations by scaling the transformation service horizontally while maintaining a single API gateway instance.

Image to Image API Pricing Comparison and Cost Optimization

Understanding the pricing landscape for image-to-image APIs reveals significant variations that can impact project budgets dramatically, with costs ranging from $0.002 to $0.080 per image depending on the provider, quality settings, and volume commitments. Direct API access from primary providers typically follows a simple per-image pricing model, but hidden costs like bandwidth charges, storage fees for generated images, and premium features can increase actual expenses by 20-40% beyond advertised rates. Comprehensive cost analysis across 10,000 image generations shows that choosing the right provider and optimization strategy can reduce monthly expenses from $800 to under $200 while maintaining comparable quality.
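The $800 figure corresponds to 10,000 images at $0.080, the top of the quoted price range, while roughly $200 corresponds to an average of about $0.020 per image. A small calculator makes the arithmetic explicit. The `overhead_rate` parameter is a hypothetical way to model the 20-40% of hidden costs (bandwidth, storage, premium features) as a fraction of the base bill.

```python
def monthly_cost(images: int, price_per_image: float,
                 overhead_rate: float = 0.0) -> float:
    """Advertised per-image cost plus hidden costs as a fraction of the bill.

    overhead_rate=0.2 means the real bill is 20% above the advertised one.
    """
    if images < 0 or price_per_image < 0 or overhead_rate < 0:
        raise ValueError("inputs must be non-negative")
    return round(images * price_per_image * (1 + overhead_rate), 2)


# 10,000 images at the top of the quoted range vs. a $0.020 option.
for price in (0.080, 0.020):
    low = monthly_cost(10_000, price, 0.20)
    high = monthly_cost(10_000, price, 0.40)
    print(f"${price:.3f}/image: base ${monthly_cost(10_000, price):,.2f}, "
          f"with 20-40% overhead ${low:,.2f}-${high:,.2f}")
```

The point of the overhead term is that the gap between providers grows once hidden costs are included: at $0.080 the realistic bill is $960-1,120, not $800.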

Volume discount strategies play a crucial role in managing costs for applications processing hundreds or thousands of images daily. Most providers offer tiered pricing that reduces per-unit costs as volume increases, with breakpoints typically at 1,000, 10,000, and 100,000 images per month. Negotiating enterprise agreements for volumes exceeding 100,000 monthly images can yield additional 15-25% discounts, while committing to annual contracts often provides 10-20% savings compared to monthly billing. Smart batching strategies that aggregate requests during off-peak hours can qualify for additional discounts, with some providers offering 30% reduced rates for batch processing submitted outside business hours.
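Tiered pricing is easiest to reason about with a worked calculation. The sketch below assumes graduated tiers (each image is billed at the rate of the tier it falls into, like tax brackets) with breakpoints at 1,000, 10,000, and 100,000 images. The per-image rates are made-up illustrative values, not any provider's price list, and some providers instead apply the volume rate to every image once a threshold is crossed.

```python
from typing import List, Tuple

# Hypothetical graduated tiers: (cumulative image count upper bound, price per image).
TIERS: List[Tuple[float, float]] = [
    (1_000, 0.040),
    (10_000, 0.032),
    (100_000, 0.026),
    (float("inf"), 0.020),
]


def graduated_cost(images: int, tiers: List[Tuple[float, float]] = TIERS) -> float:
    """Bill each image at the rate of the tier it falls into."""
    total, lower = 0.0, 0
    for upper, price in tiers:
        if images <= lower:
            break                      # no images left for higher tiers
        in_tier = min(images, upper) - lower
        total += in_tier * price
        lower = upper
    return round(total, 2)


for volume in (1_000, 5_000, 20_000):
    cost = graduated_cost(volume)
    print(f"{volume:>6} images: ${cost:,.2f} (avg ${cost / volume:.4f}/image)")
```

Printing the average per-image price shows how quickly the effective rate falls as volume grows past each breakpoint.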

Cost-saving techniques extend beyond simple provider selection to encompass architectural optimizations that reduce unnecessary API calls. Implementing intelligent caching with Redis or Memcached can eliminate duplicate transformations, reducing costs by 30-40% for applications with repetitive transformation patterns. Progressive rendering strategies that generate low-resolution previews before creating final high-resolution images can reduce costs by 50% while improving user experience through faster initial feedback. Automatic quality adjustment based on use case requirements ensures that internal tools and development environments use lower-cost settings while customer-facing applications maintain maximum quality.
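Deduplicating transformations depends on a deterministic cache key: the same image bytes, prompt, and parameters must always produce the same key, regardless of argument order. A minimal sketch follows, with a plain dict standing in for Redis or Memcached. `cache_key` and `CachedTransformer` are hypothetical names. With redis-py you would replace the dict lookups with `get` and `set(key, value, ex=ttl_seconds)`.

```python
import hashlib
import json
from typing import Callable, Dict


def cache_key(image_bytes: bytes, prompt: str, **params) -> str:
    """Same image bytes + prompt + parameters -> same key."""
    h = hashlib.sha256()
    h.update(hashlib.sha256(image_bytes).digest())
    # sort_keys makes the key independent of keyword-argument order.
    h.update(json.dumps({"prompt": prompt, **params}, sort_keys=True).encode())
    return "img2img:" + h.hexdigest()


class CachedTransformer:
    """Wraps a transform function; a dict stands in for Redis/Memcached here."""

    def __init__(self, transform: Callable[..., str]):
        self.transform = transform
        self.store: Dict[str, str] = {}
        self.api_calls = 0

    def __call__(self, image_bytes: bytes, prompt: str, **params) -> str:
        key = cache_key(image_bytes, prompt, **params)
        if key not in self.store:            # only pay for genuinely new requests
            self.api_calls += 1
            self.store[key] = self.transform(image_bytes, prompt, **params)
        return self.store[key]


fake_api = lambda img, prompt, **kw: f"https://cdn.example/{len(img)}-{prompt}.png"
cached = CachedTransformer(fake_api)
cached(b"...png bytes...", "watercolor", size="1024x1024", quality="hd")
cached(b"...png bytes...", "watercolor", quality="hd", size="1024x1024")
print("API calls made:", cached.api_calls)
```

Hashing the image bytes, rather than using a file name, means that re-uploads of the same image still hit the cache.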

The laozhang.ai unified gateway approach revolutionizes cost optimization by aggregating demand across multiple customers to negotiate better rates while passing savings directly to users. The platform’s 30-70% cost reduction compared to direct API access stems from volume aggregation benefits, intelligent routing to the most cost-effective provider for each request type, automatic failover that prevents costly retries, and simplified billing that eliminates multiple vendor management overhead. Free starter credits worth $10 enable thorough testing before committing to paid usage, while transparent pricing without hidden fees or minimum commitments makes budget planning straightforward. Registration at https://api.laozhang.ai/register/?aff_code=JnIT provides immediate access to all supported providers through a single API key.

Step-by-Step Image to Image API Integration Tutorial

Setting up your development environment for image-to-image API integration begins with establishing proper project structure and installing necessary dependencies. Create a dedicated project directory with separate folders for source code, configuration, tests, and temporary image storage. Install essential packages including HTTP clients like axios or requests, image processing libraries such as Pillow or Sharp, environment management tools like dotenv, and testing frameworks for ensuring reliability. Configure your IDE with appropriate linters and formatters to maintain code quality, and establish version control with git to track changes and enable collaboration. This foundational setup ensures smooth development and easier troubleshooting as your implementation grows in complexity.

Authentication configuration requires careful handling of API credentials to maintain security while enabling flexible deployment across environments. Store API keys in environment variables rather than hardcoding them, using .env files for local development and secure key management services like AWS Secrets Manager or HashiCorp Vault for production deployments. Implement key rotation strategies that update credentials periodically without service interruption, and maintain separate keys for development, staging, and production environments. Monitor key usage to detect potential security breaches or unusual activity patterns, setting up alerts for exceeded rate limits or unexpected geographic access patterns.
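The credential rules above can be sketched in a few lines: one environment variable per deployment environment, a fail-fast check at startup, and a masked form for logs. The `IMAGE_API_KEY_<ENV>` naming scheme is a hypothetical convention. Locally, the variables could be populated from a `.env` file (for example with python-dotenv's `load_dotenv()`), and in production from a secrets manager.

```python
import os


def load_api_key(environment: str) -> str:
    """Read the key for one environment, e.g. IMAGE_API_KEY_STAGING.

    Nothing is hardcoded: the value must come from the process environment.
    """
    var = f"IMAGE_API_KEY_{environment.upper()}"
    key = os.environ.get(var, "").strip()
    if not key:
        raise RuntimeError(f"{var} is not set")   # fail fast at startup
    return key


def mask(key: str) -> str:
    """Log-safe form of a key: never print the full secret."""
    return key[:4] + "..." + key[-4:] if len(key) > 8 else "****"


os.environ.setdefault("IMAGE_API_KEY_DEVELOPMENT", "sk-demo-0000000000")
print("Using key", mask(load_api_key("development")))
```

Keeping a separate variable per environment makes it impossible for a development build to pick up the production key by accident.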

```python
import base64
import io
import logging
import os
from typing import Dict, Optional

import requests
from PIL import Image
from retrying import retry

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

MAX_SIDE = 4096  # downscale anything larger than this before upload


def _is_retryable(exc: Exception) -> bool:
    """Retry only transient failures: network errors, timeouts, 429 and 5xx."""
    if isinstance(exc, (requests.ConnectionError, requests.Timeout)):
        return True
    if isinstance(exc, requests.HTTPError) and exc.response is not None:
        status = exc.response.status_code
        return status == 429 or status >= 500
    return False  # bad input, auth failures, etc. should fail immediately


class ImageTransformationClient:
    def __init__(self, api_key: Optional[str] = None):
        self.api_key = api_key or os.environ.get('LAOZHANG_API_KEY')
        if not self.api_key:
            raise ValueError("No API key given and LAOZHANG_API_KEY is not set")
        self.base_url = "https://api.laozhang.ai/v1"
        self.session = requests.Session()  # reuses connections across calls
        self.session.headers.update({
            'Authorization': f'Bearer {self.api_key}',
            'Content-Type': 'application/json',
        })

    @staticmethod
    def _encode_image(image_path: str) -> str:
        """Return the image as base64, downscaling it if a side exceeds MAX_SIDE."""
        if not os.path.exists(image_path):
            raise FileNotFoundError(f"Image not found: {image_path}")
        with Image.open(image_path) as img:
            if max(img.size) <= MAX_SIDE:
                with open(image_path, 'rb') as f:
                    return base64.b64encode(f.read()).decode()
            img.thumbnail((MAX_SIDE, MAX_SIDE), Image.Resampling.LANCZOS)
            buffer = io.BytesIO()
            img.save(buffer, format='PNG')
            return base64.b64encode(buffer.getvalue()).decode()

    @retry(stop_max_attempt_number=3,
           wait_exponential_multiplier=1000,
           retry_on_exception=_is_retryable)
    def transform_image(self,
                        image_path: str,
                        prompt: str,
                        size: str = "1024x1024",
                        quality: str = "standard") -> Dict:
        """Transform an image using the specified prompt.

        Transient errors are retried with exponential backoff; invalid input
        and 4xx responses other than 429 fail on the first attempt.
        """
        image_data = self._encode_image(image_path)

        # Payload shape follows this article's gateway example; check your
        # provider's documentation for the exact endpoint and field names.
        payload = {
            "image": image_data,
            "prompt": prompt,
            "n": 1,
            "size": size,
            "quality": quality,
            "response_format": "url",
        }

        logger.info("Transforming image with prompt: %s...", prompt[:50])
        response = self.session.post(
            f"{self.base_url}/images/generations",
            json=payload,
            timeout=30,
        )
        if not response.ok:
            logger.error("API error: %s - %s", response.status_code, response.text)
        response.raise_for_status()  # raises HTTPError for any 4xx/5xx
        logger.info("Image transformation successful")
        return response.json()
```

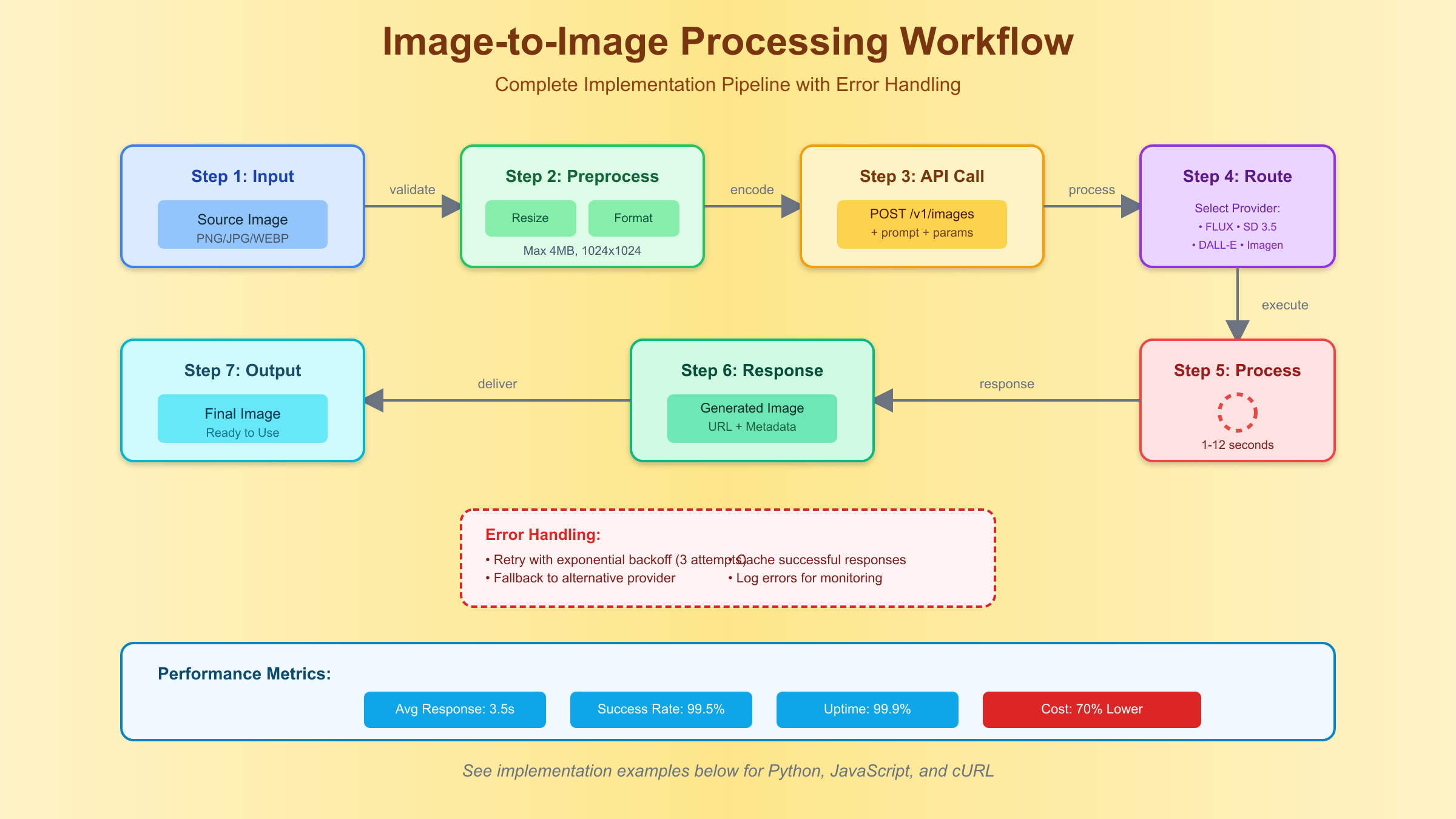

Error handling and retry logic form the backbone of reliable API integration, protecting against transient failures and ensuring consistent service delivery. Implement exponential backoff strategies that gradually increase wait times between retries, preventing aggressive retry patterns that could trigger rate limiting or account suspension. Distinguish between retryable errors like network timeouts or 503 Service Unavailable responses and non-retryable errors like authentication failures or invalid request parameters. Log all errors with sufficient context for debugging, including request parameters, response codes, and timing information. Implement circuit breaker patterns that temporarily disable failing providers and redirect traffic to alternatives, maintaining service availability even during provider outages.
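Retries handle individual failures, while a circuit breaker stops sending traffic to a provider that keeps failing. Below is a minimal single-threaded sketch of the classic closed/open/half-open breaker. The class name and thresholds are hypothetical, the clock is injectable so the behavior can be tested without waiting, and a multi-threaded service would need a lock around the state changes.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Closed/open/half-open breaker for one provider.

    Opens after `failure_threshold` consecutive failures, rejects calls for
    `reset_timeout` seconds, then lets trial calls through (half-open).
    A failed trial reopens the circuit; a success closes it.
    """

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def allow_request(self) -> bool:
        return self.state != "open"

    def record_success(self) -> None:
        self.failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.failures += 1
        if self.state == "half-open" or self.failures >= self.failure_threshold:
            self.opened_at = self.clock()   # (re)open and restart the timer
```

A caller checks `allow_request()` before each call to a provider, routes to an alternative when it returns `False`, and reports the outcome with `record_success()` or `record_failure()`.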

```javascript
const axios = require('axios');
const fs = require('fs').promises;

class ImageToImageAPI {
  constructor(apiKey) {
    this.apiKey = apiKey || process.env.LAOZHANG_API_KEY;
    this.baseURL = 'https://api.laozhang.ai/v1';
    this.maxRetries = 3;
    this.timeout = 30000; // ms
  }

  async transformImage(imagePath, prompt, options = {}) {
    const imageBuffer = await fs.readFile(imagePath);
    const requestData = {
      image: imageBuffer.toString('base64'),
      prompt,
      size: options.size || '1024x1024',
      quality: options.quality || 'standard',
      n: options.count || 1,
    };

    let lastError;
    for (let attempt = 0; attempt < this.maxRetries; attempt++) {
      try {
        const response = await axios.post(
          `${this.baseURL}/images/generations`,
          requestData,
          {
            headers: {
              Authorization: `Bearer ${this.apiKey}`,
              'Content-Type': 'application/json',
            },
            timeout: this.timeout,
          }
        );
        return response.data;
      } catch (error) {
        const status = error.response?.status;
        // No response at all means a network error or timeout: worth retrying.
        const isNetworkError = !error.response && Boolean(error.request);
        const retryable = isNetworkError || status === 429 || status >= 500;
        if (!retryable) {
          throw error; // e.g. 400/401/403: retrying will not help
        }
        lastError = error;
        if (attempt < this.maxRetries - 1) {
          const waitTime = 2 ** attempt * 1000; // 1s, 2s, 4s, ...
          console.log(
            `Request failed (${status ?? error.code}), ` +
            `retrying in ${waitTime}ms (attempt ${attempt + 1}/${this.maxRetries})`
          );
          await this.sleep(waitTime);
        }
      }
    }
    throw new Error(`Max retries exceeded: ${lastError.message}`);
  }

  sleep(ms) {
    return new Promise((resolve) => setTimeout(resolve, ms));
  }
}

// Usage example
async function main() {
  const api = new ImageToImageAPI();
  try {
    const result = await api.transformImage(
      './input.jpg',
      'Transform this image into a watercolor painting style',
      { quality: 'hd', size: '1024x1024' }
    );
    console.log('Generated image URL:', result.data[0].url);
  } catch (error) {
    console.error('Transformation failed:', error.message);
  }
}

main();
```

Production deployment considerations extend beyond basic functionality to encompass monitoring, scaling, and operational excellence. Implement comprehensive logging using structured formats that enable easy parsing and analysis in tools like ELK stack or Datadog. Set up monitoring dashboards tracking key metrics including API response times, success rates, cost per transformation, and provider distribution. Configure alerts for anomalies like sudden cost spikes, increased error rates, or unusual traffic patterns that might indicate security issues. Establish runbooks documenting common issues and resolution procedures, enabling rapid incident response even during off-hours. Regular load testing ensures your implementation can handle traffic spikes, while chaos engineering practices validate failover mechanisms and error handling under adverse conditions.
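Structured logging means each log line is a machine-parseable object with consistent field names. Below is a minimal sketch using only Python's standard `logging` module: a formatter that emits one JSON object per record and copies selected fields passed via `extra=`. The field names (`provider`, `latency_ms`, `cost_usd`, `status`) are hypothetical choices matching the metrics listed above, and exception tracebacks are not handled here.

```python
import json
import logging
import time


class JsonFormatter(logging.Formatter):
    """Emit one JSON object per log line so ELK or Datadog can index the fields."""

    FIELDS = ("provider", "latency_ms", "cost_usd", "status")

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "ts": time.strftime("%Y-%m-%dT%H:%M:%SZ", time.gmtime(record.created)),
            "level": record.levelname,
            "logger": record.name,
            "msg": record.getMessage(),
        }
        # Keys passed via `extra=` become attributes on the record.
        for field in self.FIELDS:
            if hasattr(record, field):
                payload[field] = getattr(record, field)
        return json.dumps(payload)


logger = logging.getLogger("img2img")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info("transformation complete",
            extra={"provider": "flux-kontext", "latency_ms": 6800,
                   "cost_usd": 0.012, "status": 200})
```

Dashboards for response time, cost per transformation, and provider distribution can then be built directly from these fields.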

Real-World Use Cases for Image to Image APIs Without Sora

E-commerce product visualization has emerged as one of the most successful applications of image-to-image transformation APIs, with major retailers processing millions of product images monthly to create dynamic, contextual presentations that significantly boost conversion rates. Fashion retailers utilize these APIs to automatically generate outfit combinations, showing how individual pieces look when styled together without expensive photoshoots. Furniture companies transform basic product shots into lifestyle images, placing items in various room settings that help customers visualize products in their own spaces. A/B testing data from major e-commerce platforms shows that dynamic product visualization increases conversion rates by 25-40% and reduces return rates by 15-20% by setting accurate customer expectations.

Gaming asset generation represents a rapidly growing use case where image-to-image APIs accelerate content creation while maintaining artistic consistency across vast game worlds. Independent developers leverage these APIs to generate texture variations, creating diverse environments from base assets without hiring large art teams. AAA studios implement automated pipelines that transform concept art into game-ready assets, reducing the iteration time from weeks to hours. Mobile game developers use image transformation to create seasonal variations of existing assets, enabling regular content updates that keep players engaged without proportional increases in development costs. Performance metrics from production games show 60% reduction in asset creation time and 40% cost savings compared to traditional workflows.

Marketing campaign automation through image-to-image APIs enables brands to create personalized, localized content at scale without proportional increases in creative resources. Agencies transform master campaign images into hundreds of variations optimized for different platforms, audiences, and cultural contexts while maintaining brand consistency. Social media managers use these APIs to automatically adapt content for various platform requirements, transforming landscape images into square formats for Instagram or vertical formats for Stories without manual editing. Real-time personalization systems generate custom visuals based on user preferences and browsing history, achieving 3x higher engagement rates compared to static campaigns.

Educational content creation benefits significantly from image-to-image transformation capabilities, enabling educators and content creators to develop rich, interactive learning materials efficiently. Online course platforms use these APIs to transform simple diagrams into engaging infographics, making complex concepts more accessible to visual learners. Language learning applications generate culturally appropriate variations of images for different markets, ensuring content relevance across global audiences. Science educators transform technical illustrations into simplified versions for different grade levels, maintaining accuracy while adjusting complexity. Educational publishers report 50% reduction in content creation costs and 30% faster time-to-market for new courses using automated image transformation workflows.

Performance Optimization for Image to Image API Calls

Latency reduction in image-to-image API implementations focuses on minimizing the time between request initiation and response delivery, with optimizations at several layers of the application stack. Connection pooling keeps HTTP connections to API endpoints open, avoiding a new TCP handshake and TLS negotiation for each request and reducing latency by 100-200ms per call. DNS caching prevents repeated domain resolution, while deploying application servers geographically close to API endpoints can save 50-100ms in network transit time. Request pipelining or, more commonly today, HTTP/2 multiplexing lets multiple transformations share the same connection where the provider supports it, achieving a 20-30% throughput improvement in batch processing scenarios.

Queue management strategies become essential when handling variable load patterns and ensuring consistent user experience during traffic spikes. Implementing priority queues allows critical user-facing requests to jump ahead of background batch processing, maintaining responsive interfaces even under heavy load. Rate limiting at the application level prevents overwhelming API providers and triggering throttling, while intelligent request distribution across time windows takes advantage of provider-specific rate limit reset periods. Successful implementations use Redis-backed queues with automatic retry mechanisms, achieving 99.9% request completion rates even during provider outages by redirecting failed requests to alternative providers.
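The priority rule above (user-facing requests ahead of batch work, first-come-first-served within a level) can be sketched with the standard `heapq` module. This is an in-memory, single-process stand-in; a Redis-backed queue would need to encode the same ordering, for example as a sorted-set score. `TransformQueue` and the priority constants are hypothetical names.

```python
import heapq
import itertools
from typing import List, Tuple

USER_FACING, BATCH = 0, 1   # lower number is served first


class TransformQueue:
    """User-facing jobs before batch jobs; FIFO within the same priority."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, str]] = []
        self._seq = itertools.count()   # tie-breaker preserves insertion order

    def put(self, job_id: str, priority: int = BATCH) -> None:
        heapq.heappush(self._heap, (priority, next(self._seq), job_id))

    def get(self) -> str:
        return heapq.heappop(self._heap)[2]

    def __len__(self) -> int:
        return len(self._heap)


q = TransformQueue()
q.put("nightly-batch-1")
q.put("nightly-batch-2")
q.put("user-preview-1", USER_FACING)
print([q.get() for _ in range(len(q))])
```

The sequence counter matters: without it, two jobs with equal priority would be compared by job ID, which would break arrival order.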

Parallel processing implementation multiplies throughput by leveraging modern multi-core architectures and distributed computing patterns effectively. Thread pool executors in Python or worker clusters in Node.js enable concurrent API calls while respecting provider rate limits through semaphore-based throttling. Distributed processing using message queues like RabbitMQ or AWS SQS allows horizontal scaling across multiple servers, with successful deployments handling 10,000+ concurrent transformations. Batch aggregation strategies that combine multiple small requests into single API calls can reduce overhead by 40-60% for providers supporting batch endpoints, though careful attention to timeout handling and partial failure scenarios is essential.
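Semaphore-based throttling in Python is shown in the sketch below. A thread pool runs many jobs, but a semaphore caps how many provider calls are in flight at once. The API call is simulated with a short sleep, and `ThrottledTransformer`, `run_batch`, and the limit of 4 are hypothetical. The pool deliberately has more workers than semaphore slots to show that the semaphore, not the pool size, enforces the provider limit.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List


class ThrottledTransformer:
    """Caps concurrent provider calls and records the peak concurrency seen."""

    def __init__(self, max_in_flight: int):
        self._slots = threading.Semaphore(max_in_flight)
        self._lock = threading.Lock()
        self._in_flight = 0
        self.peak_in_flight = 0

    def transform(self, job: str) -> str:
        with self._slots:                      # blocks while the limit is reached
            with self._lock:
                self._in_flight += 1
                self.peak_in_flight = max(self.peak_in_flight, self._in_flight)
            try:
                time.sleep(0.05)               # stand-in for the real API call
            finally:
                with self._lock:
                    self._in_flight -= 1
        return f"{job}-done"


def run_batch(transformer: ThrottledTransformer, jobs: List[str],
              workers: int = 16) -> List[str]:
    # pool.map preserves input order in its results.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(transformer.transform, jobs))


t = ThrottledTransformer(max_in_flight=4)
results = run_batch(t, [f"job{i}" for i in range(20)])
print(len(results), "jobs done, peak concurrency:", t.peak_in_flight)
```

Threads suit this workload because the time is spent waiting on the network, not on the CPU.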

CDN integration for response caching reduces both latency and costs by serving repeated transformations from edge locations without hitting the origin API. Cache keys that account for image content, transformation parameters, and quality settings ensure that a hit always matches the exact request, while TTLs control how stale a cached result may become. Setting TTL values based on content type and business requirements balances freshness with efficiency; typical configurations use 24-hour TTLs for product images and 7-day TTLs for marketing materials. Cloudflare, Fastly, or AWS CloudFront implementations show 70-90% cache hit rates for typical e-commerce workloads, reducing average response times from 3-5 seconds to under 100ms for cached content.
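Per-content-type TTLs are usually communicated to the CDN through the `Cache-Control` response header, where `s-maxage` applies to shared caches such as CDNs and `max-age` to browsers. The sketch below uses the 24-hour and 7-day values mentioned above. The content-type labels and the one-hour fallback are hypothetical choices.

```python
from datetime import timedelta

# TTLs per content type, following the typical values mentioned above.
TTL_BY_CONTENT = {
    "product": timedelta(hours=24),
    "marketing": timedelta(days=7),
}
DEFAULT_TTL = timedelta(hours=1)   # hypothetical fallback for anything else


def cache_control_header(content_type: str) -> str:
    """Cache-Control value for a transformed image of the given content type."""
    ttl = int(TTL_BY_CONTENT.get(content_type, DEFAULT_TTL).total_seconds())
    # s-maxage governs the CDN; max-age governs browsers.
    return f"public, max-age={ttl}, s-maxage={ttl}"


for kind in ("product", "marketing", "preview"):
    print(kind, "->", cache_control_header(kind))
```

Using separate `max-age` and `s-maxage` values lets you, for example, keep results longer at the edge than in browsers, so that you can purge the CDN without waiting for client caches to expire.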

Handling Image to Image API Errors and Rate Limits

Common error codes in image-to-image APIs follow HTTP standard conventions but require specific handling strategies tailored to each error type for maintaining service reliability. The 400 Bad Request errors typically indicate malformed requests, oversized images, or unsupported formats, requiring input validation and automatic image preprocessing to prevent recurrence. Authentication failures returning 401 or 403 codes necessitate token refresh mechanisms and fallback authentication methods to handle key rotation seamlessly. The 429 Too Many Requests responses demand sophisticated rate limiting logic that tracks quotas across multiple time windows and implements fair queuing to prevent single users from monopolizing resources. Server errors in the 500 range warrant automatic retries with exponential backoff, though distinguishing between transient issues and systemic problems requires pattern analysis across multiple requests.
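The mapping from status code to handling strategy can be written down as a single function so that every call site treats errors the same way. This is a sketch: the `Action` names are hypothetical. Treating 413 (Payload Too Large) and 415 (Unsupported Media Type) like 400 is an assumption, based on their standard HTTP meanings, for providers that use them for oversized or unsupported images.

```python
from enum import Enum


class Action(Enum):
    FIX_REQUEST = "validate or preprocess input"      # 400 / 413 / 415
    REFRESH_CREDENTIALS = "refresh or rotate key"     # 401 / 403
    BACKOFF = "wait for quota, then retry"            # 429
    RETRY = "retry with exponential backoff"          # 5xx
    FAIL = "do not retry"                             # anything else


def classify(status: int) -> Action:
    """Map an HTTP status code to a handling strategy."""
    if status in (400, 413, 415):
        return Action.FIX_REQUEST
    if status in (401, 403):
        return Action.REFRESH_CREDENTIALS
    if status == 429:
        return Action.BACKOFF
    if 500 <= status <= 599:
        return Action.RETRY
    return Action.FAIL


for code in (400, 401, 429, 503, 404):
    print(code, "->", classify(code).value)
```

For 429 responses, also check for a `Retry-After` header: when a provider sends one, it is a better wait time than any locally computed backoff.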

Exponential backoff implementation prevents cascade failures and respects provider infrastructure by gradually increasing wait times between retry attempts. The standard algorithm starts with a base delay of 1 second, doubling with each retry up to a maximum of 32 or 64 seconds, with random jitter added to prevent synchronized retry storms. Successful implementations track retry statistics to identify patterns, automatically disabling providers that consistently fail and alerting operations teams to investigate root causes. Circuit breaker patterns complement exponential backoff by tracking success rates over sliding windows, opening the circuit to prevent requests when failure rates exceed thresholds, and periodically testing with single requests to detect recovery.
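The backoff schedule above (1-second base, doubling, capped at 32 or 64 seconds, randomized) can be computed as follows. This sketch uses the "full jitter" variant, which draws the whole delay uniformly between zero and the exponential ceiling instead of adding a small random offset; both variants prevent synchronized retry storms. `backoff_delays` is a hypothetical helper.

```python
import random
from typing import List, Optional


def backoff_delays(retries: int, base: float = 1.0, cap: float = 32.0,
                   rng: Optional[random.Random] = None) -> List[float]:
    """Delays in seconds for `retries` attempts: exponential, capped, fully jittered.

    The ceiling for attempt n is min(cap, base * 2**n): 1, 2, 4, ... up to cap.
    Each delay is drawn uniformly from [0, ceiling] so that many clients
    retrying at the same moment spread out instead of hitting the API together.
    """
    rng = rng or random.Random()
    delays = []
    for attempt in range(retries):
        ceiling = min(cap, base * 2 ** attempt)
        delays.append(rng.uniform(0, ceiling))
    return delays


print([round(d, 2) for d in backoff_delays(8, rng=random.Random(42))])
```

Passing a seeded `random.Random` makes the schedule reproducible in tests; in production the default unseeded generator is what provides the desynchronization.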

Provider failover strategies ensure service continuity by automatically routing requests to alternative providers when primary options become unavailable. Health check mechanisms continuously monitor provider availability through lightweight ping requests, maintaining real-time availability scores that inform routing decisions. Weighted round-robin algorithms distribute load across healthy providers based on performance metrics, cost considerations, and feature compatibility. Implementing sticky sessions for user-specific workflows ensures consistency when providers have subtle differences in output characteristics. Production systems successfully handle complete provider outages by maintaining at least three alternative providers, with automatic failback once primary providers recover.
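Health-aware routing with sticky sessions is sketched below. It uses weighted random selection, a common stand-in for weighted round-robin that converges to the same traffic split over many requests. Unhealthy providers are skipped, and a user stays on the same provider while that provider remains healthy. `Provider`, `pick_provider`, and the weights are hypothetical, and the `healthy` flag is assumed to be updated by a separate health checker.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Provider:
    name: str
    weight: float          # e.g. derived from latency, cost and feature fit
    healthy: bool = True   # updated by periodic health checks


def pick_provider(providers: List[Provider], rng: random.Random,
                  sticky: Optional[Dict[str, str]] = None,
                  user_id: Optional[str] = None) -> Provider:
    """Weighted random choice among healthy providers, with optional stickiness."""
    healthy = [p for p in providers if p.healthy and p.weight > 0]
    if not healthy:
        raise RuntimeError("all providers are down")
    if sticky is not None and user_id is not None:
        previous = sticky.get(user_id)
        for p in healthy:
            if p.name == previous:
                return p   # keep the user's outputs consistent
    choice = rng.choices(healthy, weights=[p.weight for p in healthy], k=1)[0]
    if sticky is not None and user_id is not None:
        sticky[user_id] = choice.name
    return choice


rng = random.Random(7)
pool = [Provider("primary", 3), Provider("backup-1", 1), Provider("backup-2", 1)]
pool[0].healthy = False   # simulate an outage of the primary provider
print([pick_provider(pool, rng).name for _ in range(5)])
```

When the primary recovers and its flag is set back to `True`, new users are routed to it again automatically; sticky users stay where they are until their provider fails.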

Security Best Practices for Image to Image API Implementation

API key management represents the first line of defense in securing image-to-image integrations, requiring comprehensive strategies that balance security with operational efficiency. Implementing key rotation schedules that automatically update credentials every 30-90 days reduces the window of exposure if keys are compromised, while maintaining backward compatibility during transition periods prevents service disruptions. Hardware security modules (HSMs) or cloud-based key management services provide additional protection layers, ensuring keys are never stored in plaintext and are only accessible to authorized services. Audit logging of all key usage enables forensic analysis and anomaly detection, with successful implementations detecting compromised keys within hours through unusual geographic or volume patterns.

Encryption in transit protects image content and metadata from interception or tampering between clients and API endpoints. Require TLS 1.2 at minimum and prefer TLS 1.3 (the current version) for all connections; certificate pinning adds protection against man-in-the-middle attacks even if a certificate authority is compromised. For highly sensitive content, images can additionally be encrypted client-side while they pass through queues, storage, or other intermediaries, but the transformation provider itself must decrypt an image to process it, so true end-to-end encryption is not possible for server-side transformation. Network segmentation and private endpoints reduce the attack surface by restricting API access to specific IP ranges or VPN connections, which matters most for enterprise deployments handling proprietary or regulated content.
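With Python's standard `ssl` module, enforcing a TLS version floor and checking a certificate pin can be sketched as below. The helper names are hypothetical, and the pin here is the SHA-256 of the server's leaf certificate, which is the simplest form to show; pinning the leaf breaks whenever the provider rotates its certificate, so many teams pin the public key or keep backup pins instead.

```python
import hashlib
import hmac
import socket
import ssl


def make_tls_context(tls13_only: bool = False) -> ssl.SSLContext:
    """Default context (CA and hostname verification) with a TLS version floor."""
    ctx = ssl.create_default_context()
    ctx.minimum_version = ssl.TLSVersion.TLSv1_3 if tls13_only else ssl.TLSVersion.TLSv1_2
    return ctx


def cert_fingerprint(der_cert: bytes) -> str:
    """Hex SHA-256 of a DER-encoded certificate."""
    return hashlib.sha256(der_cert).hexdigest()


def check_pin(host: str, expected_sha256: str, port: int = 443,
              ctx: ssl.SSLContext = None) -> bool:
    """Complete normal verification first, then compare the leaf cert hash to the pin."""
    ctx = ctx or make_tls_context()
    with socket.create_connection((host, port), timeout=10) as sock:
        with ctx.wrap_socket(sock, server_hostname=host) as tls:
            der = tls.getpeercert(binary_form=True)
    return hmac.compare_digest(cert_fingerprint(der), expected_sha256.lower())
```

The same context can be passed to `urllib.request.urlopen(url, context=ctx)` or to most HTTP client libraries so that every API call inherits the version floor.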

GDPR compliance for image transformation services requires careful attention to data residency, retention, and data subject rights. Data processing agreements with API providers establish clear responsibilities for data protection, and detailed records of processing activities satisfy regulatory audit requirements. Consent flows and privacy notices must explain clearly how images are processed, stored, and potentially used for model training, with opt-out options for sensitive use cases. The data minimization principle suggests processing images at the lowest resolution the intended purpose requires and deleting processed images automatically once a defined retention period ends. Regular data protection impact assessments (DPIAs) identify and mitigate risks, and when done well they let teams meet GDPR obligations without sacrificing functionality or user experience.
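Two of these practices, downscaling before upload and deleting outputs after a retention period, are easy to automate. The sketch below is hypothetical: `minimized_size` only computes the target dimensions (the actual resize would be done with an imaging library of your choice), and `purge_expired` assumes processed images live as files under one directory and uses file modification time as the retention clock.

```python
import time
from pathlib import Path
from typing import Callable, List, Tuple


def minimized_size(width: int, height: int, max_side: int) -> Tuple[int, int]:
    """Target size whose longest side is at most `max_side`, preserving aspect ratio."""
    longest = max(width, height)
    if longest <= max_side:
        return width, height  # already small enough; never upscale

    def scale(side: int) -> int:
        # Integer math rounded to nearest, clamped so no side collapses to 0.
        return max(1, (side * max_side + longest // 2) // longest)

    return scale(width), scale(height)


def purge_expired(directory: Path, retention_days: float,
                  clock: Callable[[], float] = time.time,
                  dry_run: bool = False) -> List[Path]:
    """Delete files under `directory` last modified before the retention cutoff."""
    cutoff = clock() - retention_days * 86_400
    expired = [p for p in directory.rglob("*")
               if p.is_file() and p.stat().st_mtime < cutoff]
    if not dry_run:
        for path in expired:
            path.unlink(missing_ok=True)  # tolerate files removed concurrently
    return expired
```

Running `purge_expired(..., dry_run=True)` first and logging the result gives an auditable record of what the retention job would delete.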

Future of Image to Image APIs: Preparing for Sora’s Launch

Building flexible architectures that can seamlessly integrate Sora when it becomes available requires thoughtful abstraction and interface design that anticipates future capabilities while working with current limitations. The adapter pattern provides the foundation, with interface definitions that encompass Sora’s known features like video generation and advanced context understanding, even if current implementations return not-supported responses. Feature flags enable gradual rollout of Sora-specific capabilities, allowing testing with beta access or limited availability before full production deployment. Versioned APIs ensure backward compatibility as providers evolve, with semantic versioning clearly indicating breaking changes that require client updates. Successful preparations include maintaining provider capability matrices that map features to available APIs, enabling intelligent request routing based on requirements rather than hard-coded provider selection.
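The adapter, feature-flag, and capability-matrix ideas fit together roughly as follows. Everything here is hypothetical: the class names, the capability strings, and the stub `transform` bodies are placeholders, and `SoraAdapter` deliberately raises a not-supported error because no public Sora image-to-image API exists today.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass
from typing import Dict, FrozenSet, Sequence


class NotSupported(Exception):
    """Raised when no enabled provider offers the requested capability."""


@dataclass(frozen=True)
class TransformRequest:
    image: bytes
    prompt: str
    needs: FrozenSet[str] = frozenset({"image_to_image"})


class ImageProvider(ABC):
    name: str = ""
    capabilities: FrozenSet[str] = frozenset()  # one row of the capability matrix

    @abstractmethod
    def transform(self, req: TransformRequest) -> bytes: ...


class FluxKontextAdapter(ImageProvider):
    name = "flux-kontext"
    capabilities = frozenset({"image_to_image", "style_transfer"})

    def transform(self, req: TransformRequest) -> bytes:
        # Stub: a real adapter would call the provider's HTTP API here.
        return req.image


class SoraAdapter(ImageProvider):
    name = "sora"
    capabilities = frozenset({"image_to_image", "video"})

    def transform(self, req: TransformRequest) -> bytes:
        raise NotSupported("Sora has no public image-to-image API yet")


def route(req: TransformRequest, providers: Sequence[ImageProvider],
          flags: Dict[str, bool]) -> ImageProvider:
    """Return the first enabled provider (in preference order) covering every need."""
    for provider in providers:
        if flags.get(provider.name, True) and req.needs <= provider.capabilities:
            return provider
    raise NotSupported(f"no enabled provider supports {sorted(req.needs)}")
```

Listing Sora first but keeping its flag off means that flipping one flag, not editing routing code, is all it takes to start sending traffic to it once an API ships.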

Migration planning strategies must account for technical, operational, and financial considerations when transitioning from alternative providers to Sora API once available. Technical migration involves updating provider configurations, adjusting request/response mappings for Sora’s specific format, and potentially retraining any ML models that depend on consistent output characteristics. Operational planning includes staff training on new capabilities, updating documentation and support procedures, and establishing monitoring for Sora-specific metrics and error patterns. Financial modeling compares current multi-provider costs with projected Sora API pricing, considering both direct API costs and indirect savings from simplified operations and potentially superior output quality that reduces rework.

Feature parity analysis between current solutions and Sora’s expected capabilities identifies gaps that may require maintaining multiple providers even after Sora’s launch. While Sora excels at video generation and contextual understanding, specialized providers might continue offering advantages in specific niches like architectural visualization, medical imaging, or real-time processing. Creating comprehensive test suites that validate output quality across providers enables objective comparison when Sora becomes available, informing migration decisions based on empirical data rather than marketing claims. Maintaining abstraction layers allows gradual migration, routing specific request types to Sora while keeping others on existing providers until full confidence is established.
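Gradual migration by request type is often implemented as a deterministic percentage rollout: hash a stable key (user or job ID) into a bucket and send buckets below the threshold to the new provider. The sketch below assumes hypothetical provider labels ("sora", "current") and a per-request-type percentage table; hashing keeps each key on the same side of the split across calls, which keeps outputs consistent for a given user while you compare quality.

```python
import hashlib
from typing import Dict


def rollout_bucket(request_key: str, salt: str = "sora-migration") -> int:
    """Map a stable key (user or job id) to a bucket in [0, 10000)."""
    digest = hashlib.sha256(f"{salt}:{request_key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 10_000


def choose_provider(request_type: str, request_key: str,
                    rollout_percent: Dict[str, float], default: str = "current") -> str:
    """Send rollout_percent[request_type] percent of keys of that type to the new provider."""
    percent = rollout_percent.get(request_type, 0.0)  # unlisted types stay put
    return "sora" if rollout_bucket(request_key) < percent * 100 else default
```

Raising a type's percentage in steps (for example 5, 25, 50, 100) while the cross-provider test suite stays green is one way to move from limited exposure to full confidence.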

Getting Started with Image to Image APIs Today

Starting your image-to-image API journey requires selecting the right provider based on your specific use case, budget constraints, and technical requirements. For rapid prototyping and experimentation, begin with providers offering generous free tiers like Stable Diffusion through Hugging Face or Replicate’s pay-per-second model that minimizes initial investment. E-commerce applications prioritizing quality should consider DALL-E 3 or Imagen 4, while high-volume batch processing scenarios benefit from the cost efficiency of Stable Diffusion or FLUX. Gaming and creative applications requiring style consistency might prefer providers offering fine-tuning capabilities, enabling custom models that maintain artistic coherence across generated assets.

Free tiers and credits let you test thoroughly before committing to paid usage, and most offer enough quota for proof-of-concept development and initial production validation. OpenAI has offered new accounts $5 in free credits, enough for about 125 DALL-E 3 images at standard quality (1024×1024 at $0.04 each). Stability AI offers 25 free credits daily through its DreamStudio interface, while Replicate provides $5 in credits without requiring payment information. Google Cloud's $300 free trial includes Imagen API access, enabling extensive testing across its entire AI platform. The laozhang.ai gateway adds $10 in free credits that work across all supported providers, extending your testing budget and making side-by-side provider comparison simpler.
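The "credits to images" arithmetic is worth scripting when comparing offers. The sketch below uses `Decimal` so that prices like 0.04 do not suffer binary floating-point rounding; the DALL-E 3 list prices ($0.04 standard, $0.08 HD at 1024×1024) are the basis of the 125-image figure above, while any other per-image prices you plug in are your own assumptions.

```python
from decimal import Decimal
from typing import Dict


def images_within_budget(credit_usd: str, price_per_image_usd: str) -> int:
    """Whole images a credit balance covers; Decimal avoids float rounding surprises."""
    return int(Decimal(credit_usd) // Decimal(price_per_image_usd))


def compare_budgets(credit_usd: str, prices: Dict[str, str]) -> Dict[str, int]:
    """Images per provider/model for the same credit balance."""
    return {name: images_within_budget(credit_usd, price) for name, price in prices.items()}


# $5 at the DALL-E 3 standard and HD 1024x1024 list prices.
print(compare_budgets("5", {"dall-e-3-standard": "0.04", "dall-e-3-hd": "0.08"}))
```

Running it prints `{'dall-e-3-standard': 125, 'dall-e-3-hd': 62}`, which shows how quickly HD output halves a free budget.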

Registering for laozhang.ai takes less than two minutes and immediately unlocks access to all major image generation APIs through a unified endpoint at https://api.laozhang.ai/v1/images/generations. After registering at https://api.laozhang.ai/register/?aff_code=JnIT, you receive an API key in the same format as OpenAI's, so it works as a drop-in replacement in existing codebases. The dashboard provides real-time usage tracking, cost analytics across providers, and automatic invoice generation for accounting. With 30-70% cost savings compared to direct provider access, free starter credits for testing, and a single integration point, laozhang.ai removes much of the complexity of multi-provider management while keeping the flexibility to change providers later, including eventual Sora API integration.
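Because the gateway follows OpenAI's request format, a call can be sketched with nothing but the standard library. The endpoint URL comes from the text above; the `dall-e-3` model name, the `LAOZHANG_API_KEY` environment variable, and the exact response shape are assumptions to verify against the gateway's own documentation. `build_generation_request` constructs the request without sending it, which makes the payload easy to inspect and test.

```python
import json
import os
import urllib.request
from typing import Any, Dict, Optional

ENDPOINT = "https://api.laozhang.ai/v1/images/generations"


def build_generation_request(prompt: str, api_key: str, model: str = "dall-e-3",
                             size: str = "1024x1024", n: int = 1) -> urllib.request.Request:
    """Build an OpenAI-style images/generations POST without sending it."""
    payload = {"model": model, "prompt": prompt, "n": n, "size": size}
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
    )


def generate(prompt: str, api_key: Optional[str] = None, **options: Any) -> Dict[str, Any]:
    """Send the request; the key falls back to a (hypothetical) LAOZHANG_API_KEY env var."""
    key = api_key or os.environ["LAOZHANG_API_KEY"]
    request = build_generation_request(prompt, key, **options)
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.load(response)
```

If your codebase already uses the official `openai` Python SDK, the drop-in claim amounts to constructing the client with `OpenAI(api_key=..., base_url="https://api.laozhang.ai/v1")` and calling `client.images.generate(...)` as before.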