Claude Opus 4 Free Trial: Access Premium AI with $300K+ in Credits (2025 Guide)

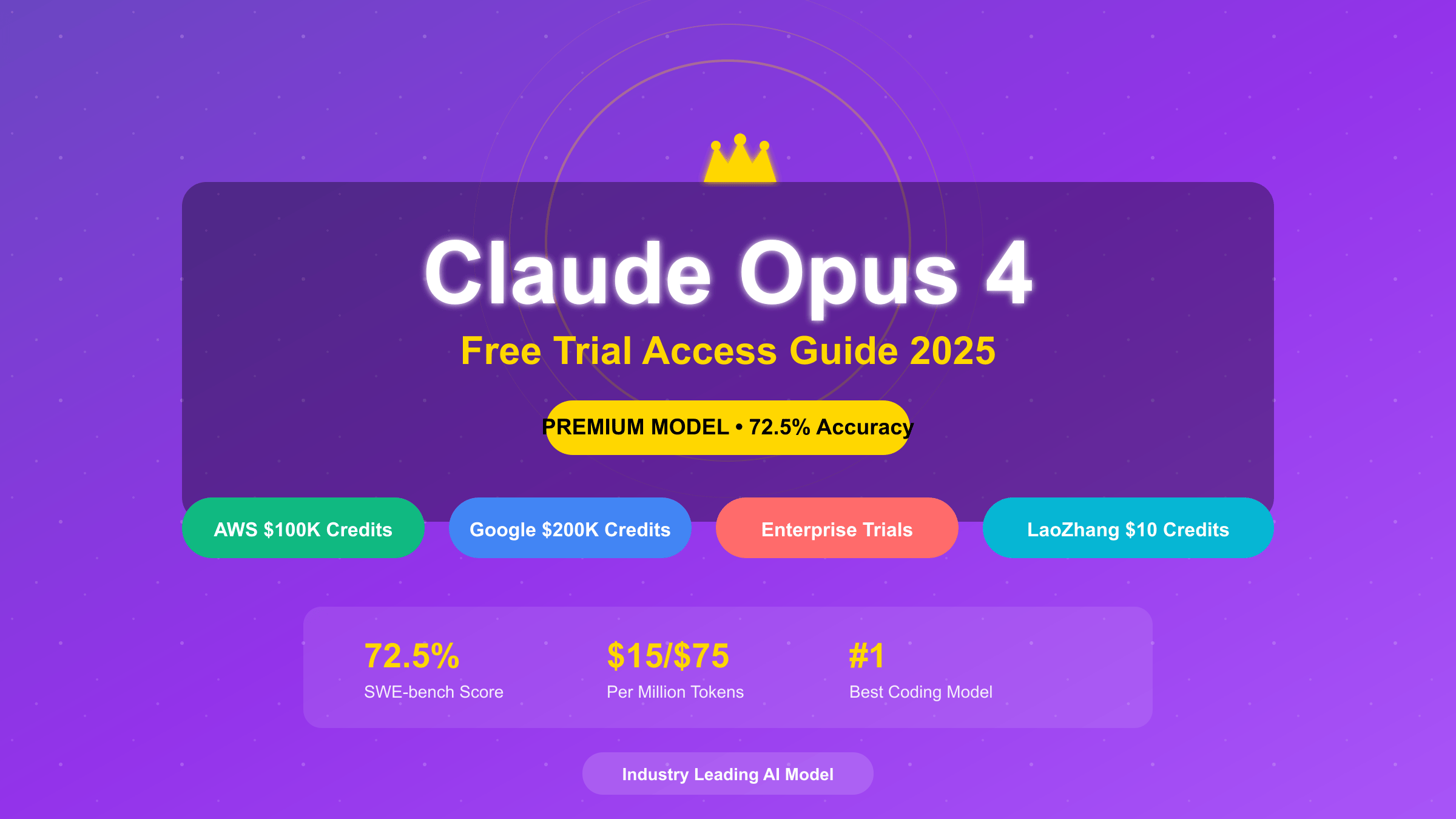

Access Claude Opus 4 free trials through multiple proven methods: AWS Activate provides up to $100,000 in credits for qualified startups, Google Cloud’s AI program offers $200,000 for machine learning companies, and enterprise POC trials deliver 14-30 days of full access. Alternative options include LaoZhang.ai’s instant $10 free credits (sufficient for 667K input tokens), Zed Editor’s 50 monthly prompts, and Puter.js’s user-pays model. With Opus 4’s industry-leading 72.5% coding accuracy and $15/$75 per million token pricing, securing trial access enables thorough evaluation before committing to this premium model’s substantial costs.

How to Get Claude Opus 4 Free Trial Access in 2025

The landscape of Claude Opus 4 trial access has evolved significantly in 2025, with multiple legitimate pathways now available for developers and organizations to evaluate Anthropic’s most powerful model without immediate financial commitment. Unlike the freely accessible Sonnet 4, Opus 4’s premium positioning at $15 per million input tokens and $75 per million output tokens makes trial access essential for validating its value proposition before substantial investment. The combination of startup credits, enterprise trials, and innovative third-party solutions creates opportunities for extended evaluation periods that can span months or even years with strategic planning.

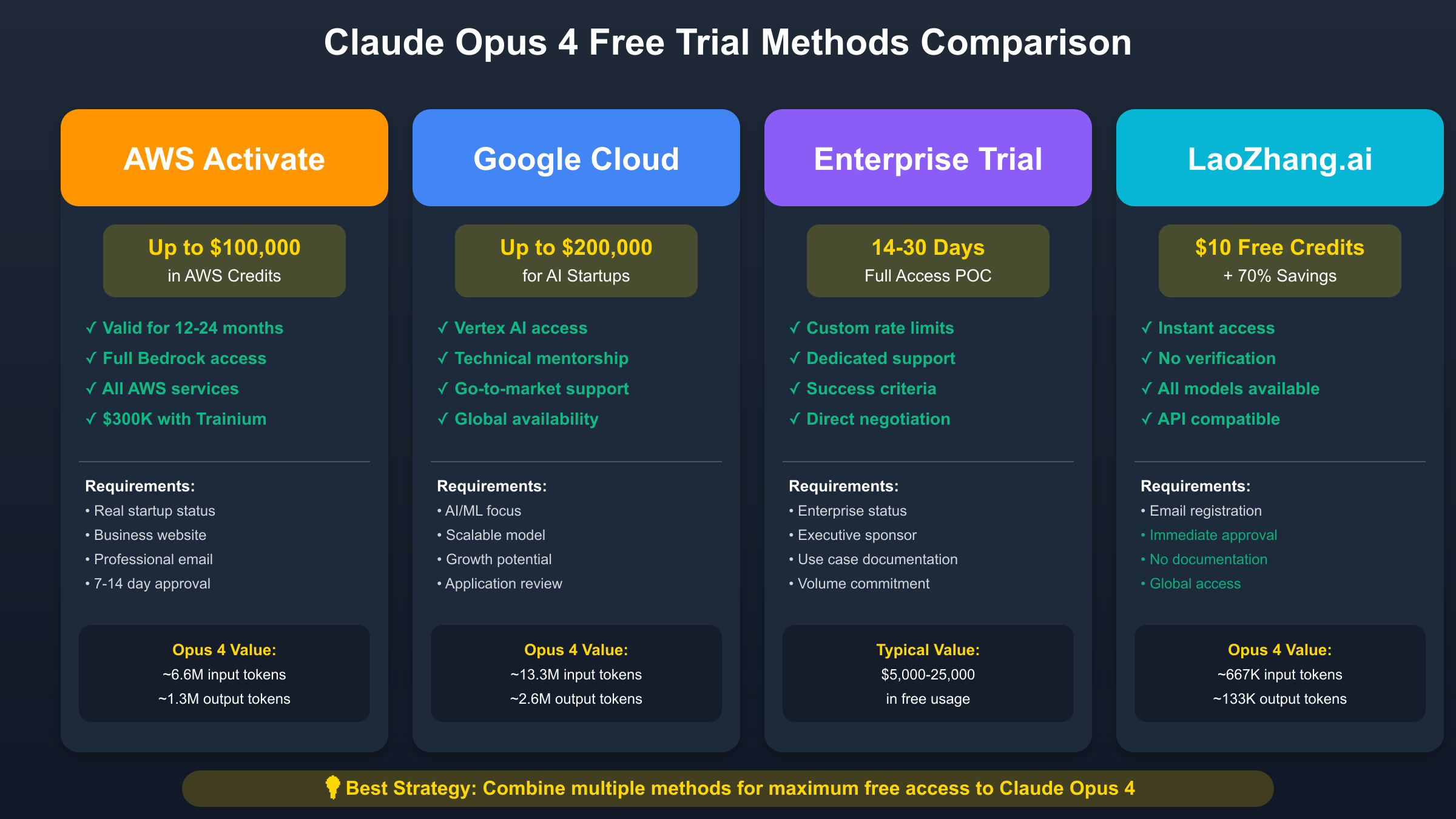

AWS Activate stands as the most substantial source of free Opus 4 access, offering qualified startups up to $100,000 in credits that directly apply to Claude Opus 4 usage through Amazon Bedrock. The standard Activate Portfolio provides these credits over two years, while startups utilizing AWS Trainium or Inferentia chips can access an additional $300,000 in specialized credits. At standard Opus 4 pricing, the $100,000 allocation translates to approximately 6.7 billion input tokens or 1.3 billion output tokens, sufficient for extensive development and early production deployment. The application process requires demonstrating legitimate startup status through incorporation documents, a professional website, and a coherent business plan, with approval typically occurring within 7-14 days.

Google Cloud’s startup program presents an even more generous opportunity, with up to $200,000 in credits available for AI-first companies building on their platform. Access to Claude Opus 4 through Vertex AI comes with additional benefits including technical mentorship, go-to-market support, and integration with Google’s comprehensive AI ecosystem. The program specifically targets startups demonstrating strong AI/ML focus, scalable business models, and significant growth potential. At standard Opus 4 pricing, these credits enable processing of approximately 13.3 billion input tokens or 2.7 billion output tokens, providing runway for sophisticated application development and scaling.

For immediate access without complex application processes, LaoZhang.ai offers $10 in free credits upon registration, translating to approximately 667,000 input tokens or 133,000 output tokens with Opus 4. While modest compared to startup programs, this option requires no verification, business documentation, or waiting periods, making it ideal for rapid prototyping and initial evaluation. The platform’s 30-70% ongoing cost reduction after free credits provides a sustainable path for continued Opus 4 usage at significantly reduced rates, with transparent pricing and unified billing across multiple AI providers.
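
The conversion from a credit balance to a token budget is plain division, and it is worth redoing whenever prices change. The sketch below assumes the $15 and $75 per-million-token list prices quoted in this guide; the function and constant names are illustrative only.

```python
# Per-million-token list prices quoted in this guide (assumed current).
OPUS4_INPUT_USD_PER_MTOK = 15.0
OPUS4_OUTPUT_USD_PER_MTOK = 75.0


def tokens_for_budget(budget_usd: float, usd_per_million_tokens: float) -> int:
    """Return how many tokens a budget buys at a flat per-million-token price."""
    if usd_per_million_tokens <= 0:
        raise ValueError("price must be positive")
    return int(budget_usd / usd_per_million_tokens * 1_000_000)


if __name__ == "__main__":
    programs = [("LaoZhang.ai", 10), ("AWS Activate", 100_000), ("Google Cloud", 200_000)]
    for label, budget in programs:
        inp = tokens_for_budget(budget, OPUS4_INPUT_USD_PER_MTOK)
        out = tokens_for_budget(budget, OPUS4_OUTPUT_USD_PER_MTOK)
        print(f"{label}: {inp:,} input tokens or {out:,} output tokens")
```

Real workloads mix input and output tokens, so the two figures are upper bounds for an all-input or all-output budget rather than a forecast.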

Understanding Claude Opus 4: Why Trial Access Matters

Claude Opus 4 represents the pinnacle of Anthropic’s AI capabilities, achieving an unprecedented 72.5% accuracy on the SWE-bench coding benchmark and 43.2% on Terminal-bench, metrics that surpass all competing models including GPT-4 Turbo and Gemini 1.5 Pro. This performance excellence translates directly to real-world impact, with developers reporting 60-80% reduction in debugging time, 3x faster feature implementation, and the ability to tackle complex, multi-step tasks that would overwhelm lesser models. The model’s sustained performance on long-running tasks requiring thousands of steps makes it uniquely suited for ambitious projects in software development, scientific research, and creative endeavors.

The premium pricing of Opus 4 reflects its advanced capabilities but creates a significant barrier to adoption, making trial access crucial for proper evaluation. At $75 per million output tokens, Opus 4 costs five times as much as Sonnet 4 ($15) and 2.5 times as much as GPT-4 Turbo ($30) for generation tasks. This pricing differential means that choosing Opus 4 for inappropriate use cases can rapidly escalate costs without commensurate value delivery. Trial periods enable organizations to identify specific scenarios where Opus 4’s superior capabilities justify the premium, typically complex reasoning tasks, sophisticated code generation, and applications requiring exceptional accuracy and reliability.

Real-world applications demonstrate clear use cases where Opus 4’s capabilities provide irreplaceable value that justifies its cost. Software development teams use Opus 4 for architectural design, complex refactoring, and solving intricate bugs that stump human developers. Research organizations leverage its PhD-level reasoning for literature analysis, hypothesis generation, and experimental design. Creative agencies employ Opus 4 for developing cohesive long-form narratives, screenplays, and interactive experiences that require maintaining consistency across extensive contexts. These specialized applications showcase scenarios where the model’s premium capabilities deliver ROI that far exceeds its higher costs.

The importance of trial access extends beyond simple cost-benefit analysis to encompass risk mitigation and strategic planning. Organizations considering Opus 4 adoption must evaluate not just the model’s capabilities but also integration complexity, team training requirements, and long-term scalability. Trial periods provide crucial insights into actual performance in production environments, helping identify potential bottlenecks, optimization opportunities, and architectural requirements. This evaluation period often reveals that hybrid approaches, combining Opus 4 for critical tasks with cheaper models for routine operations, deliver optimal value while managing costs effectively.

AWS Activate: Up to $100K Free Credits for Opus 4

AWS Activate provides the most accessible large-scale credits for Claude Opus 4 access, with the standard portfolio offering $100,000 over two years to qualified startups. The program’s tiered structure includes Activate Founders for bootstrapped startups, Activate Portfolio for venture-backed companies, and Activate Portfolio Plus through approved accelerators and incubators. Each tier provides different credit amounts and support levels, with the highest tiers including technical support credits, training resources, and access to AWS solution architects who can optimize Opus 4 implementations for cost and performance.

Successful application to AWS Activate requires careful preparation and presentation of your startup’s credentials and potential. Essential requirements include a registered business entity with proper incorporation documents, a professional website on a custom domain demonstrating your product or service, business email addresses (not personal Gmail or similar), and a clear business plan articulating your use of AWS services. Applications with stronger profiles, such as those with venture funding, accelerator participation, or existing AWS partner relationships, typically receive faster approval and higher credit allocations. The review process examines not just eligibility but also the likelihood of long-term AWS adoption.

Maximizing Opus 4 usage within AWS credits requires strategic resource allocation and technical optimization. Implementing prompt caching through Bedrock can reduce costs by up to 90% for repeated queries, effectively multiplying available credits. The platform’s batch inference capabilities provide 50% discounts for non-time-sensitive processing, ideal for overnight analysis or report generation. Intelligent routing between Opus 4 and cheaper models based on task complexity can extend credit lifetime by 3-5x while maintaining quality for critical features. Successful startups report using credits strategically, reserving Opus 4 for high-value tasks while leveraging Sonnet 4 or open-source alternatives for routine operations.

Technical implementation through Amazon Bedrock provides additional advantages beyond simple API access. The platform’s built-in features include content filtering for safety compliance, CloudWatch integration for detailed monitoring and cost tracking, and automatic scaling to handle traffic spikes without manual intervention. Bedrock’s model versioning ensures consistency as Anthropic releases updates, while the knowledge base feature enables retrieval-augmented generation without external vector databases. These enterprise-grade features make Bedrock particularly attractive for startups planning rapid scaling, as the infrastructure grows seamlessly from prototype to production.

Credit management strategies determine the difference between burning through $100,000 in weeks versus sustaining development for months. Successful startups implement strict budget controls with CloudWatch alarms for daily spending limits, use AWS Cost Explorer to identify optimization opportunities, and leverage Reserved Capacity for predictable workloads at discounted rates. The credits’ two-year validity enables phased development approaches, using initial months for experimentation and optimization before scaling to production. Many startups successfully bootstrap to revenue using AWS credits alone, transitioning to paid usage only after achieving product-market fit and sustainable unit economics.
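
A daily spending limit can be derived from the remaining balance and the days left before the credits expire. The following is a minimal sketch of that calculation in plain Python; it does not call CloudWatch or any AWS API, and the class and field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CreditBudget:
    total_usd: float      # size of the credit grant, e.g. 100_000
    spent_usd: float      # spend to date
    days_remaining: int   # days until the credits expire

    def daily_limit(self) -> float:
        """Even daily spend that uses the remaining credits exactly by expiry."""
        if self.days_remaining <= 0:
            return 0.0
        remaining = max(self.total_usd - self.spent_usd, 0.0)
        return remaining / self.days_remaining

    def over_limit(self, spent_today_usd: float) -> bool:
        """True when today's spend exceeds the even daily allowance."""
        return spent_today_usd > self.daily_limit()
```

The value returned by `daily_limit()` is the kind of threshold one would feed into a billing alarm; recomputing it weekly keeps the limit in step with actual burn.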

Google Cloud Startup Program: $200K for AI Development

Google Cloud’s startup program stands as the most generous credit offering for AI development, providing up to $200,000 specifically for companies building AI-first solutions. The program’s focus on artificial intelligence and machine learning makes it particularly well-suited for Claude Opus 4 implementations through Vertex AI, Google’s unified ML platform. Beyond raw credits, participants receive technical mentorship from Google engineers, access to exclusive AI/ML workshops and training, go-to-market support including co-marketing opportunities, and connection to Google’s extensive partner ecosystem.

Qualification for the full $200,000 credit tier requires demonstrating genuine AI innovation and growth potential. Google evaluates applications based on technical innovation using AI/ML as core technology, scalable business models with clear paths to significant revenue, strong founding teams with relevant expertise, and early traction indicators such as user growth or partnership agreements. The application process involves detailed documentation of your AI use cases, architectural decisions, and growth projections. Successful applicants often have working prototypes demonstrating novel AI applications, clear differentiation from existing solutions, and compelling narratives about their potential impact.

Vertex AI’s implementation of Claude Opus 4 offers unique advantages that extend the value of Google Cloud credits. The platform’s Model Garden provides unified access to multiple AI models including Opus 4, Gemini, and open-source alternatives, enabling sophisticated multi-model architectures. Vertex AI Pipelines automate complex ML workflows, orchestrating data preparation, model inference, and post-processing without manual intervention. The platform’s built-in MLOps capabilities including experiment tracking, model versioning, and automated deployment reduce operational overhead while ensuring reproducibility and governance.

Credit optimization on Google Cloud leverages platform-specific features to maximize Opus 4 usage within the $200,000 allocation. Vertex AI’s batch prediction feature provides 50% discounts for asynchronous processing, effectively doubling available Opus 4 capacity for suitable workloads. The platform’s automatic model selection can route requests to the most cost-effective model meeting quality requirements, reserving Opus 4 for tasks truly requiring its capabilities. Geographic optimization through Google’s global infrastructure enables routing requests to regions with lower pricing or better performance, with some regions offering 10-15% cost advantages.

Strategic credit utilization over the program’s duration requires careful planning and phased deployment. Successful startups typically allocate 20% of credits for initial experimentation and prototype development, 40% for product development and testing with real users, 30% for scaling and production deployment, and 10% as reserve for unexpected needs or opportunities. This allocation strategy ensures sustained development throughout the credit period while maintaining flexibility for pivots or accelerated growth. Many participants successfully raise funding before credit exhaustion, using traction built with Google Cloud credits as validation for investors.
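
The 20/40/30/10 split described above is easy to turn into concrete dollar figures. This sketch assumes those four shares; the phase names are labels chosen for illustration.

```python
# Shares taken from the allocation described in the text.
PHASE_SHARES = {
    "experimentation": 0.20,
    "product_development": 0.40,
    "scaling": 0.30,
    "reserve": 0.10,
}


def allocate_credits(total_usd: float, shares: dict = PHASE_SHARES) -> dict:
    """Split a credit grant across phases; shares must sum to 1."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1.0")
    return {phase: round(total_usd * share, 2) for phase, share in shares.items()}


if __name__ == "__main__":
    for phase, amount in allocate_credits(200_000).items():
        print(f"{phase}: ${amount:,.2f}")
```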

Enterprise Trial Programs for Claude Opus 4

Enterprise organizations can negotiate custom Claude Opus 4 trial arrangements directly with Anthropic, typically securing 14-30 day proof-of-concept periods with full access to the model’s capabilities. These trials often include enhanced rate limits supporting production-level testing, dedicated technical support from Anthropic’s engineering team, custom success criteria aligned with specific business objectives, and flexible terms that can extend based on project complexity. The negotiation process rewards prepared organizations that can articulate clear use cases, demonstrate technical readiness, and show potential for significant long-term adoption.

Successful trial negotiations require executive-level engagement and comprehensive preparation. Organizations should prepare detailed use case documentation outlining specific problems Opus 4 will solve, expected volume projections with growth scenarios over 12-24 months, integration requirements including security and compliance needs, and success metrics that clearly define trial objectives. Anthropic typically requires executive sponsorship to ensure serious evaluation, participation in case studies or reference programs post-trial, and commitment to provide detailed feedback on model performance. Companies demonstrating innovative use cases or potential for strategic partnership often receive more favorable trial terms.

Trial structuring strategies maximize evaluation effectiveness while minimizing risk and resource commitment. Phased approaches begin with limited user groups or specific departments before expanding organization-wide, allowing iterative refinement of implementation strategies. Parallel evaluation tracks compare Opus 4 against existing solutions or competing models, providing quantitative justification for adoption decisions. Setting clear go/no-go criteria before trial commencement prevents scope creep and ensures objective evaluation. Successful trials typically include technical validation of integration and performance, business validation of ROI and user satisfaction, and operational validation of support and maintenance requirements.

During the trial period, organizations should focus on comprehensive evaluation across multiple dimensions. Technical assessment includes model accuracy for specific use cases, response latency in production environments, integration complexity with existing systems, and scalability under expected load patterns. Business evaluation examines productivity improvements from Opus 4 adoption, cost comparisons with current solutions or manual processes, user satisfaction and adoption rates, and competitive advantages from enhanced capabilities. This multi-faceted evaluation provides the data necessary for informed decisions about long-term Opus 4 adoption and budget allocation.

LaoZhang.ai: Instant $10 Free Credits for Opus 4

LaoZhang.ai revolutionizes Claude Opus 4 trial access by eliminating traditional barriers to entry, providing immediate $10 in free credits upon registration without any verification requirements, business documentation, or waiting periods. This instant accessibility makes it the fastest path to Opus 4 evaluation, with registration at https://api.laozhang.ai/register/?aff_code=JnIT taking less than two minutes from signup to first API call. The platform’s unified gateway model aggregates demand across thousands of users to negotiate better rates with providers, passing savings directly to users through 30-70% cost reductions compared to direct Anthropic pricing.

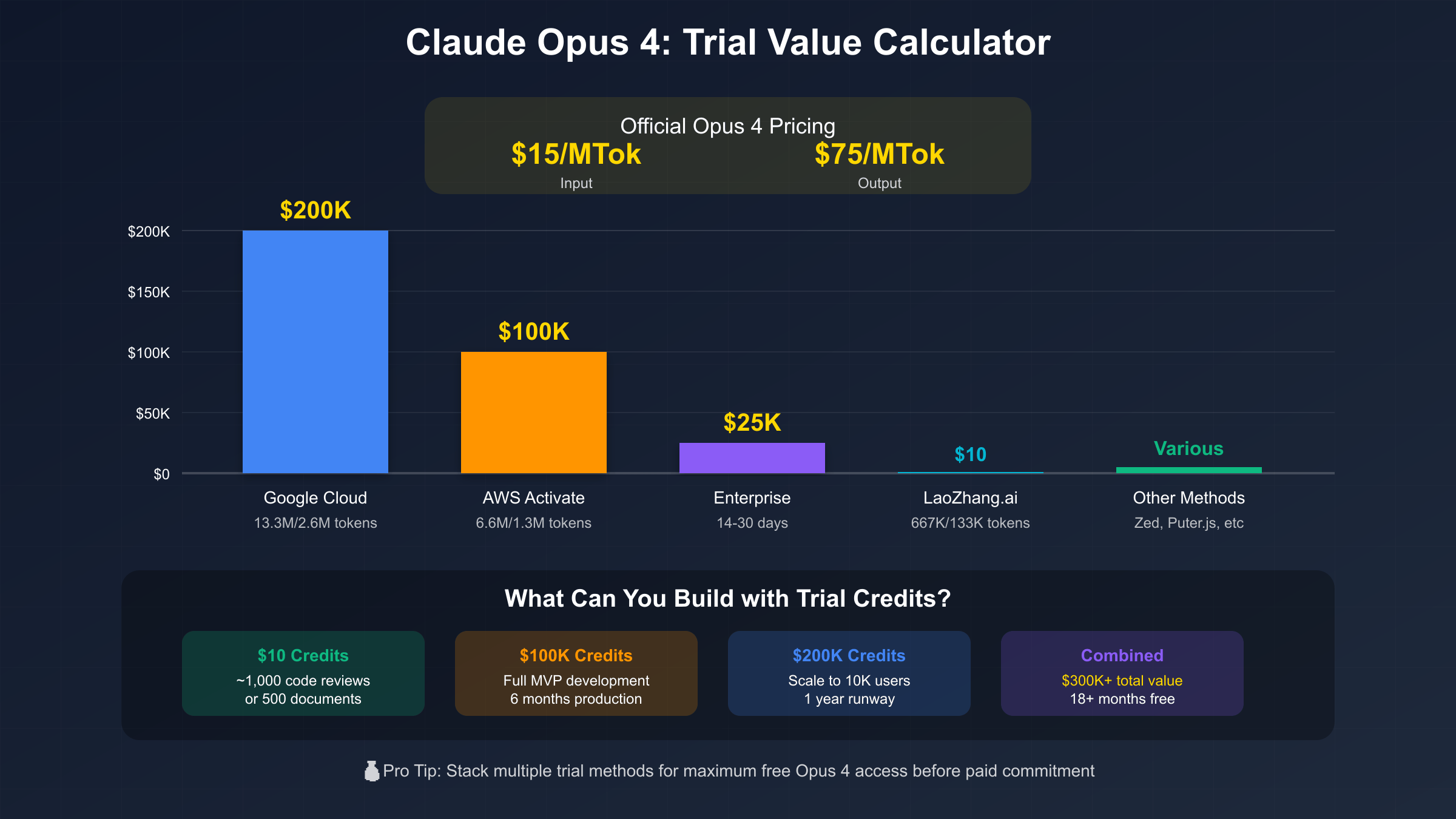

The $10 free credit allocation, while modest compared to startup programs, provides substantial value for initial Opus 4 evaluation and development. At standard Opus 4 list prices, these credits translate to approximately 667,000 input tokens or 133,000 output tokens (more if the platform’s discounted rates apply), sufficient for 100-200 complex code generation tasks, 500-1000 document analyses, or thousands of shorter interactions. This capacity enables comprehensive testing of Opus 4’s capabilities across various use cases, helping developers understand where the premium model provides value before committing to paid usage.

Beyond initial free credits, LaoZhang.ai’s ongoing value proposition makes it attractive for sustained Opus 4 usage. The platform’s 30-70% cost reduction stems from volume aggregation benefits, intelligent caching that serves repeated queries efficiently, optimized routing that selects the most cost-effective endpoints, and elimination of minimum commitments or enterprise contracts. For a startup processing 100,000 Opus 4 queries monthly, this translates to savings of $1,000-2,500 compared to direct Anthropic pricing, making premium AI capabilities accessible to budget-conscious organizations.

Technical implementation through LaoZhang.ai maintains complete compatibility with Anthropic’s official SDK, requiring only a simple base URL modification to switch from direct API access. This drop-in replacement approach means existing Opus 4 implementations can migrate to LaoZhang.ai without code changes beyond configuration updates. The platform supports all Opus 4 features including streaming responses for real-time interaction, function calling for tool integration, vision capabilities for multimodal applications, and batch processing for bulk operations. Advanced features like webhook notifications, custom rate limiting, and team collaboration make it suitable for both individual developers and enterprise teams.
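
Keeping the base URL in configuration rather than in code is what makes such a switch a one-line change. The sketch below is an assumption-laden illustration: `LLM_BASE_URL` and `LLM_API_KEY` are hypothetical environment variable names, and the actual gateway URL must be taken from the provider's documentation.

```python
import os
from dataclasses import dataclass

ANTHROPIC_DEFAULT_BASE_URL = "https://api.anthropic.com"


@dataclass(frozen=True)
class ApiEndpoint:
    base_url: str
    api_key: str


def load_endpoint(env=None) -> ApiEndpoint:
    """Build endpoint settings from environment variables.

    LLM_BASE_URL and LLM_API_KEY are hypothetical names used in this sketch.
    When LLM_BASE_URL is unset, the direct Anthropic endpoint is used.
    """
    env = os.environ if env is None else env
    base_url = env.get("LLM_BASE_URL", ANTHROPIC_DEFAULT_BASE_URL).rstrip("/")
    api_key = env.get("LLM_API_KEY", "")
    if not api_key:
        raise RuntimeError("LLM_API_KEY is not set")
    return ApiEndpoint(base_url=base_url, api_key=api_key)
```

The two fields map onto the `base_url` and `api_key` arguments of the official Python SDK client, so the rest of the application never needs to know which endpoint is in use.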

The platform’s comprehensive analytics and management features extend beyond simple credit tracking. Real-time dashboards display usage patterns across different models and endpoints, helping identify optimization opportunities. Cost breakdowns by project, user, or time period enable accurate budget allocation and client billing. Automated alerts notify users when credits approach depletion or when unusual usage patterns occur. The ability to purchase additional credits in small increments ($10-100) makes it ideal for gradual scaling, allowing organizations to increase usage as value is validated without large upfront commitments.

Alternative Free Trial Methods for Claude Opus 4

Zed Editor’s integration provides a unique avenue for accessing Claude Opus 4, offering 50 free prompts monthly specifically optimized for coding assistance. This specialized access showcases Opus 4’s exceptional programming capabilities in its ideal environment, with features like multi-file context awareness for understanding entire codebases, intelligent code completion that anticipates developer intent, automated refactoring suggestions maintaining code quality, and real-time debugging assistance for complex issues. While limited in quantity, these prompts provide high-value interactions for developers needing occasional Opus 4 assistance without full subscription commitments.

Puter.js enables an innovative approach to Opus 4 access through its user-pays model, where developers build applications without bearing API costs directly. Instead, end users authenticate with their Puter accounts, which handle payment for Opus 4 usage transparently. This model works exceptionally well for premium B2B applications where customers understand and value AI quality differences. Successful implementations clearly communicate Opus 4’s superior capabilities to justify costs, provide transparent pricing so users understand what they’re paying for, offer model selection allowing users to choose between Opus 4 and cheaper alternatives, and implement usage controls preventing unexpected charges. This approach enables developers to offer Opus 4 powered features without any API expenses, though adoption depends on user willingness to pay premium prices.

Cabina.AI platform offers limited free trial access to all Claude models including Opus 4, typically providing 10-20 queries before requiring payment. While restricted in scope, this access enables quick capability validation without any signup friction or credit card requirements. The platform’s multi-model interface allows direct comparison between Opus 4 and alternatives, helping users understand relative strengths and appropriate use cases. This minimal trial serves as an effective entry point for users wanting to experience Opus 4 before pursuing more substantial trial options.

GitHub Copilot’s enterprise tier now includes Claude Opus 4 access for advanced coding assistance, providing an indirect trial path for organizations already using GitHub’s development platform. While not a traditional free trial, many enterprises have existing Copilot subscriptions that now include Opus 4 capabilities without additional cost. This integration showcases Opus 4’s coding prowess through intelligent code suggestions based on repository context, automated test generation with high coverage, documentation creation from code analysis, and security vulnerability detection with fix recommendations. Organizations can evaluate Opus 4’s value through familiar tools before committing to standalone API access.

Maximizing Claude Opus 4 Trial Value: Optimization Guide

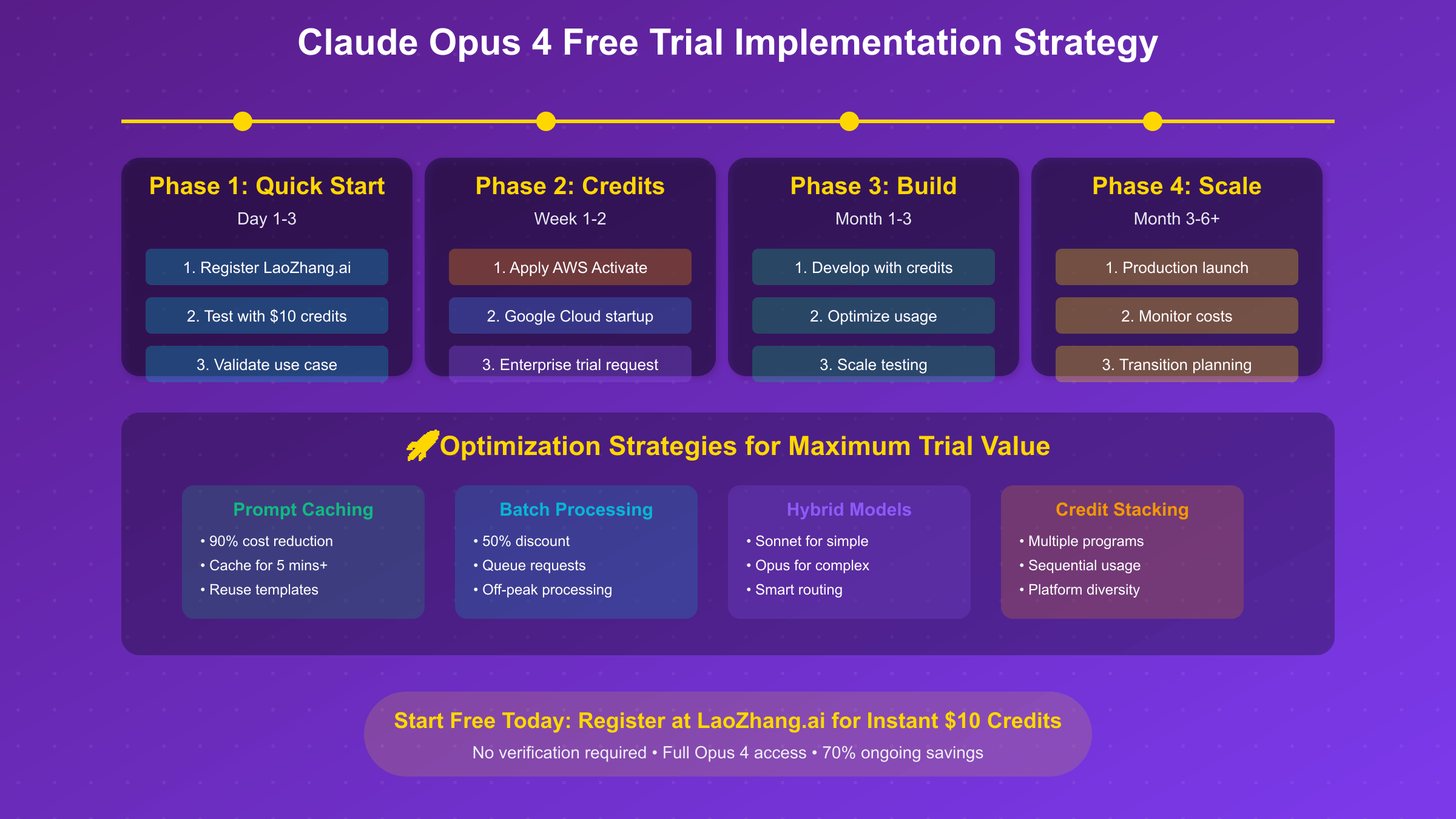

Prompt caching emerges as the most powerful optimization technique for extending Claude Opus 4 trial credits, potentially reducing costs by up to 90% for applications with repetitive query patterns. The caching mechanism stores frequently used prompt segments for 5 minutes by default, with extended caching options available for longer retention. When requests include cached content, you pay only $1.50 per million tokens instead of the standard $15 input price, a 90% reduction that dramatically extends trial credit effectiveness. Implementation requires structuring prompts with stable prefixes containing system instructions, context, and examples, followed by variable user queries that change with each request.
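
The stable-prefix structure can be sketched as a request body in which the system block carries a cache marker and only the user message varies. The example builds the payload without sending it; the model identifier is an assumption and should be checked against the current model list, and the API only caches prefixes above a minimum length.

```python
def build_cached_request(stable_prefix: str, user_query: str,
                         model: str = "claude-opus-4-20250514",
                         max_tokens: int = 1024) -> dict:
    """Return a Messages API request body with a cacheable system prefix."""
    return {
        "model": model,  # assumed identifier; verify before use
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": stable_prefix,
                # Marks the prompt up to and including this block as cacheable.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        # Only this part changes between requests.
        "messages": [{"role": "user", "content": user_query}],
    }
```

Because caching matches on the prefix, anything that changes per request (timestamps, user names) belongs in the user message, not in the system block.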

Successful caching strategies involve creating comprehensive prompt templates that encapsulate common patterns. For code generation, this might include language specifications, coding standards, and architectural patterns as cached prefixes. For content creation, cached elements could comprise brand guidelines, tone specifications, and format requirements. Advanced implementations use semantic hashing to identify similar queries that can share cached content even with minor variations. Production systems report achieving 60-80% cache hit rates; because cached reads cost 10% of the normal input price, that lowers input spend by roughly 55-70% and stretches the input portion of trial credits about 2-3.5x (output tokens are still billed at the full rate).
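
How far a given cache hit rate stretches credits follows from a weighted average of the cached and uncached input prices. This sketch assumes the $15 and $1.50 per-million-token prices quoted earlier and ignores cache-write surcharges and output tokens, so it gives a best case for input spend only.

```python
def effective_input_price(base_price: float, cached_price: float,
                          cached_fraction: float) -> float:
    """Blended per-million-token input price.

    cached_fraction is the share of input tokens served from cache (0 to 1).
    """
    if not 0.0 <= cached_fraction <= 1.0:
        raise ValueError("cached_fraction must be between 0 and 1")
    return cached_fraction * cached_price + (1.0 - cached_fraction) * base_price


def credit_multiplier(base_price: float, cached_price: float,
                      cached_fraction: float) -> float:
    """How many times further input credits go compared with no caching."""
    return base_price / effective_input_price(base_price, cached_price, cached_fraction)


if __name__ == "__main__":
    for rate in (0.6, 0.8):
        print(f"{rate:.0%} cached: {credit_multiplier(15.0, 1.5, rate):.2f}x")
```

Under these assumptions a 60% cached share gives about 2.2x and an 80% share about 3.6x on input spend.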

Batch processing strategies leverage Anthropic’s 50% discount for non-time-sensitive operations, making them ideal for maximizing trial credit value. Instead of processing requests individually at full price, applications accumulate queries throughout the day for overnight batch processing at half cost. This approach works particularly well for report generation, data analysis, content creation, and any workflow where immediate responses aren’t critical. Implementation requires robust queue management systems that can handle request accumulation, priority sorting for urgent items that bypass batching, failure handling with automatic retries, and result distribution to appropriate destinations upon completion.
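
The queue behaviour described above (accumulate, let urgent items bypass, retry failures) can be sketched in a few lines. This is an in-memory illustration only: `run_now` and `run_batch` are caller-supplied functions standing in for real API calls, and no actual batch endpoint is invoked.

```python
from dataclasses import dataclass, field


@dataclass
class Job:
    job_id: str
    prompt: str
    urgent: bool = False
    attempts: int = 0


@dataclass
class BatchQueue:
    max_attempts: int = 3
    pending: list = field(default_factory=list)

    def submit(self, job: Job, run_now):
        """Run urgent jobs immediately; hold the rest for the next batch."""
        if job.urgent:
            return run_now(job)
        self.pending.append(job)
        return None

    def flush(self, run_batch) -> dict:
        """Send all held jobs as one batch and re-queue failures.

        run_batch takes a list of jobs and returns {job_id: result}, with
        missing or None entries treated as failures.
        """
        jobs, self.pending = self.pending, []
        if not jobs:
            return {}
        results = run_batch(jobs)
        done = {}
        for job in jobs:
            result = results.get(job.job_id)
            if result is not None:
                done[job.job_id] = result
            else:
                job.attempts += 1
                if job.attempts < self.max_attempts:
                    self.pending.append(job)  # retried on the next flush
        return done
```

A scheduler would call `flush` once per batch window (for example nightly) and route the returned results to their destinations.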

Hybrid model strategies reserve precious Opus 4 credits for tasks that truly require its superior capabilities while routing simpler queries to more affordable alternatives. Intelligent routing algorithms analyze query complexity, expected output quality requirements, and available credits to automatically select the optimal model. For example, initial drafts might use Sonnet 4 at 80% lower cost, with Opus 4 reserved for final refinement and quality assurance. This approach can extend trial periods by 5-10x while maintaining output quality for critical features. Successful implementations typically use Opus 4 for complex reasoning and architectural decisions, Sonnet 4 for standard code generation and content creation, and even cheaper models such as Claude 3.5 Haiku for simple formatting or data extraction tasks.
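
A routing rule of this kind can start as a simple heuristic. The sketch below is deliberately crude and entirely hypothetical: the keyword list, the word-count threshold, and the tier labels are placeholders, and a production router would more likely use a classifier or measured quality data.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str                   # label only, not an API model identifier
    output_usd_per_mtok: float


PREMIUM = Tier("opus-4", 75.0)
STANDARD = Tier("sonnet-4", 15.0)

# Hypothetical signals that a request needs deeper reasoning.
COMPLEX_HINTS = frozenset({"architecture", "refactor", "prove", "debug", "design"})


def complexity_score(prompt: str) -> int:
    """Count hint words present, plus one point for very long prompts."""
    words = re.findall(r"[a-z]+", prompt.lower())
    score = len(COMPLEX_HINTS & set(words))
    if len(words) > 300:
        score += 1
    return score


def choose_tier(prompt: str, premium_budget_left_usd: float, threshold: int = 1) -> Tier:
    """Pick the premium tier only for complex prompts while budget remains."""
    if premium_budget_left_usd <= 0:
        return STANDARD
    return PREMIUM if complexity_score(prompt) >= threshold else STANDARD
```

Raising `threshold` as credits run down is one way to make the router more conservative over the life of a trial.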

Response streaming and partial processing techniques extract maximum value from each Opus 4 API call by using the premium model only for critical reasoning steps. Instead of generating complete responses with Opus 4, applications can use it to create detailed outlines or solution frameworks, then complete the implementation with cheaper models. This “reasoning chain” approach maintains Opus 4’s superior problem-solving capabilities while reducing token consumption by 60-70%. Advanced implementations parse streaming responses in real-time, terminating generation once sufficient information is obtained to complete the task with other models.
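
Early termination depends on recognising, mid-stream, that enough has been generated. One way to sketch it is to ask the premium model to wrap its outline in a closing marker and stop reading once that marker arrives. The `</outline>` marker is a hypothetical convention set in the prompt, and the chunk iterator stands in for a real streaming response, which would also need to be closed to stop billing for further output.

```python
from typing import Iterable


def take_until_marker(chunks: Iterable[str], marker: str = "</outline>") -> str:
    """Accumulate streamed text and stop as soon as the marker has appeared.

    Searching the whole buffer handles a marker split across two chunks.
    """
    buffer = ""
    for chunk in chunks:
        buffer += chunk
        end = buffer.find(marker)
        if end != -1:
            return buffer[: end + len(marker)]
    return buffer  # stream ended without the marker
```

The returned outline can then be handed to a cheaper model for the detailed implementation.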

Monitoring and analytics systems prove essential for optimizing trial credit usage and identifying waste. Comprehensive tracking should include token consumption by feature, user, or project to identify heavy users and optimize their workflows, cost per successful outcome to understand true ROI, cache hit rates to validate optimization effectiveness, and model distribution to ensure appropriate routing decisions. Real-time dashboards help teams understand burn rates and adjust usage patterns before credits expire. Successful trial programs often discover that 20% of queries consume 80% of credits, focusing optimization efforts on these high-consumption patterns for maximum impact.
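
Finding the small set of features responsible for most of the spend is a short aggregation. The sketch assumes usage is already logged as `(feature, cost_usd)` pairs; that record shape is an assumption made for illustration.

```python
from collections import defaultdict


def cost_by_feature(records) -> dict:
    """Sum cost per feature from an iterable of (feature, cost_usd) pairs."""
    totals = defaultdict(float)
    for feature, cost in records:
        totals[feature] += cost
    return dict(totals)


def top_spenders(totals: dict, share: float = 0.8) -> list:
    """Smallest set of features, largest first, covering `share` of total spend."""
    grand_total = sum(totals.values())
    picked, running = [], 0.0
    ranked = sorted(totals.items(), key=lambda kv: kv[1], reverse=True)
    for feature, cost in ranked:
        if grand_total == 0 or running >= share * grand_total:
            break
        picked.append(feature)
        running += cost
    return picked
```

Running `top_spenders` on a week of logs gives the shortlist of features where caching or routing changes are most likely to pay off.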

Claude Opus 4 Free Trial Implementation Strategy

Phase 1 of your Opus 4 trial journey should focus on rapid validation using immediately accessible options. Begin by registering for LaoZhang.ai’s $10 free credits to gain instant Opus 4 access without any verification delays. Use these initial credits to validate your use case, confirming that Opus 4’s superior capabilities provide meaningful value over cheaper alternatives. This phase typically takes 1-3 days and should definitively answer whether Opus 4 justifies further investment. Create benchmark tests comparing Opus 4 against Sonnet 4 and other models for your specific use cases, documenting performance differences, quality improvements, and potential ROI.

Phase 2 involves applying for larger credit programs while continuing development with initial resources. Submit applications to AWS Activate and Google Cloud startup programs simultaneously, as approval times vary and having multiple options increases success probability. During the 1-2 week application period, continue refining your implementation using LaoZhang.ai credits or other immediate access methods. Prepare detailed documentation of your Opus 4 use cases and projected usage to strengthen applications and demonstrate serious intent. If you represent an enterprise, initiate discussions with Anthropic’s sales team about custom trial arrangements.

Phase 3 represents the core development period, typically spanning 1-3 months once substantial credits are secured. With AWS or Google Cloud credits available, focus on building production-ready features that leverage Opus 4’s unique capabilities. Implement all optimization strategies including prompt caching, batch processing, and hybrid model routing to extend credit lifetime. Develop comprehensive testing suites that validate Opus 4’s performance across various scenarios and edge cases. Create detailed metrics tracking cost per feature, user satisfaction scores, and productivity improvements to build the business case for continued Opus 4 usage.

Phase 4 transitions from trial to sustainable production usage, requiring careful planning to avoid service disruption when credits expire. Begin this phase when credits reach 30% remaining, allowing adequate time for migration planning. Evaluate whether Opus 4’s value justifies its premium pricing based on trial metrics and user feedback. If continuing with Opus 4, negotiate enterprise agreements with Anthropic or maintain usage through cost-optimized gateways like LaoZhang.ai. If transitioning to alternatives, implement gradual migration that maintains service quality while reducing costs. Many successful organizations adopt hybrid approaches, maintaining Opus 4 for high-value features while using cheaper models elsewhere.

Throughout all phases, maintain detailed documentation of lessons learned, optimization strategies discovered, and architectural decisions made. This knowledge base proves invaluable for future team members, investor discussions, and technical audits. Track key metrics including total credits consumed versus value generated, optimal use cases for Opus 4 versus alternatives, cost per user or transaction at various scales, and technical debt or limitations introduced by model choices. This data-driven approach ensures that decisions about continued Opus 4 usage are based on objective evidence rather than subjective preferences.

Cost Analysis: Trial Credits vs Paid Opus 4 Access

Comprehensive cost modeling reveals the true value of various trial credit programs when translated to Opus 4 usage. The $200,000 Google Cloud credits represent the highest value, enabling processing of approximately 13.3 billion input tokens or 2.7 billion output tokens at standard Opus 4 pricing. For context, this capacity comfortably supports thousands of hours of intensive coding assistance, tens of thousands of comprehensive document analyses, or millions of shorter interactions. AWS Activate’s $100,000 provides half this capacity but still enables substantial development and early production deployment. Even LaoZhang.ai’s modest $10 credits translate to meaningful usage when optimized properly, demonstrating that Opus 4 access is achievable regardless of budget constraints.

Return on investment calculations for Opus 4 trial credits depend heavily on use case and implementation efficiency. A software development team using $10,000 in Opus 4 credits might accelerate feature delivery by 3-6 months, representing $150,000-300,000 in saved developer salaries. Content creation teams report 10x productivity improvements with Opus 4, meaning $1,000 in API costs can replace $10,000 in human effort. Research organizations using Opus 4 for literature analysis and hypothesis generation report breakthrough discoveries that would be impossible without AI assistance, making ROI calculations almost irrelevant given the transformative impact.

Budget planning for the transition from trial credits to paid usage requires careful analysis of usage patterns and value generation during the trial period. Organizations should track metrics including average daily token consumption across different features, peak usage periods and scaling requirements, cost per valuable output (completed feature, published article, solved problem), and user willingness to pay for Opus 4-powered features. This data enables accurate projections of ongoing costs and helps identify the break-even point where Opus 4’s value exceeds its expense. Many organizations discover that selective Opus 4 usage for high-value tasks combined with cheaper models for routine operations provides optimal ROI.
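
Two of the metrics above, projected monthly cost and cost per valuable output, reduce to short formulas. The sketch assumes the $15/$75 list prices used throughout this guide and a 30-day month; the function names are illustrative.

```python
def monthly_cost(daily_input_tokens: int, daily_output_tokens: int,
                 input_usd_per_mtok: float = 15.0,
                 output_usd_per_mtok: float = 75.0,
                 days: int = 30) -> float:
    """Project monthly spend from average daily token consumption."""
    daily = (daily_input_tokens / 1_000_000 * input_usd_per_mtok
             + daily_output_tokens / 1_000_000 * output_usd_per_mtok)
    return days * daily


def cost_per_outcome(total_cost_usd: float, outcomes: int) -> float:
    """Average spend per completed feature, article, or solved problem."""
    if outcomes <= 0:
        raise ValueError("outcomes must be positive")
    return total_cost_usd / outcomes


def breaks_even(total_cost_usd: float, outcomes: int,
                value_per_outcome_usd: float) -> bool:
    """True when each outcome is worth at least what it cost to produce."""
    return value_per_outcome_usd >= cost_per_outcome(total_cost_usd, outcomes)
```

For example, 2 million input tokens and 400,000 output tokens per day come to about $1,800 per month at list prices.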

Strategic considerations for long-term Opus 4 adoption extend beyond simple cost calculations to encompass competitive advantage and market positioning. Organizations that use Opus 4 effectively can offer capabilities that competitors relying on weaker models struggle to match, which may justify premium pricing for their products or services. The decision to continue with Opus 4 after trial credits run out should weigh not just current costs but also the opportunity cost of losing access to best-in-class AI capabilities, the competitive differentiation that Opus 4-powered features provide, the ability to pass costs on to customers through premium pricing, and the long-term trajectory of AI model pricing, which has generally trended downward.

Building Production Apps During Opus 4 Trial

Production application development during Opus 4 trial periods requires architectures that gracefully handle the eventual transition from free to paid access. Implementing abstraction layers that decouple business logic from specific model providers makes migration far easier when trials end. These architectural patterns include dependency injection for swappable model providers, feature flags controlling Opus 4 availability, graceful degradation when premium features are unavailable, and comprehensive logging to track feature usage and value generation. Successful production deployments built during trials often continue using Opus 4 selectively for high-value features while optimizing costs elsewhere.
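The patterns listed above can be combined in a small service class. This is a minimal sketch, not a definitive implementation: `ModelProvider`, `StubProvider`, `ProviderUnavailable`, and `CompletionService` are all hypothetical names, and the stub stands in for a real client (Bedrock, Vertex AI, or a direct API) that you would wrap behind the same interface.

```python
import logging
from typing import Protocol

logger = logging.getLogger("model_usage")


class ProviderUnavailable(Exception):
    """Raised by a provider when it cannot serve a request (e.g. credits exhausted)."""


class ModelProvider(Protocol):
    name: str

    def complete(self, prompt: str) -> str: ...


class StubProvider:
    """Stand-in for a real client; echoes the prompt with its own name."""

    def __init__(self, name: str, available: bool = True) -> None:
        self.name = name
        self.available = available

    def complete(self, prompt: str) -> str:
        if not self.available:
            raise ProviderUnavailable(self.name)
        return f"[{self.name}] {prompt}"


class CompletionService:
    """Business logic depends on this class, never on a concrete provider."""

    def __init__(self, premium: ModelProvider, fallback: ModelProvider,
                 premium_enabled: bool = True) -> None:
        self.premium = premium                  # injected, so it can be swapped
        self.fallback = fallback
        self.premium_enabled = premium_enabled  # feature flag

    def complete(self, prompt: str, feature: str) -> str:
        if self.premium_enabled:
            try:
                result = self.premium.complete(prompt)
                logger.info("feature=%s provider=%s", feature, self.premium.name)
                return result
            except ProviderUnavailable:
                # Graceful degradation: fall through to the cheaper model.
                logger.warning("feature=%s premium unavailable, degrading", feature)
        result = self.fallback.complete(prompt)
        logger.info("feature=%s provider=%s", feature, self.fallback.name)
        return result
```

When the trial ends, switching `premium_enabled` off or injecting a different provider changes behavior without touching calling code, and the per-feature log lines show which features actually relied on the premium model.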

Scaling strategies during trial periods focus on maximizing user value while conserving precious credits. Progressive rollout approaches begin with power users who derive maximum value from Opus 4 capabilities, gradually expanding access as usage patterns become clear and optimization strategies prove effective. Implement usage quotas that prevent individual users from consuming excessive credits while ensuring fair access across your user base. Create feedback mechanisms that help users understand when they’re using premium Opus 4 features versus standard alternatives, building appreciation for the enhanced capabilities and potentially increasing willingness to pay for continued access.
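A progressive rollout with per-user quotas can be sketched with an allowlist and a running spend total. The example below is illustrative only: `QuotaTracker` is a hypothetical in-memory class, the dollar limit is a placeholder, and a real deployment would persist spend in a database and reset it on a schedule.

```python
from typing import Dict, Iterable


class QuotaTracker:
    """Tracks per-user premium spend against a fixed limit per period."""

    def __init__(self, limit_usd: float, rollout_users: Iterable[str]) -> None:
        self.limit_usd = limit_usd
        self.rollout_users = set(rollout_users)  # users in the current rollout
        self.spent: Dict[str, float] = {}

    def can_use_premium(self, user_id: str, estimated_cost_usd: float) -> bool:
        """Allow premium only for rollout users who stay within their limit."""
        if user_id not in self.rollout_users:
            return False
        return self.spent.get(user_id, 0.0) + estimated_cost_usd <= self.limit_usd

    def record(self, user_id: str, cost_usd: float) -> None:
        """Add the actual cost of a completed request to the user's total."""
        self.spent[user_id] = self.spent.get(user_id, 0.0) + cost_usd

    def expand_rollout(self, user_ids: Iterable[str]) -> None:
        """Grant premium access to more users as usage patterns become clear."""
        self.rollout_users.update(user_ids)

    def reset_period(self) -> None:
        """Clear spend totals at the start of a new quota period."""
        self.spent.clear()
```

The return value of `can_use_premium` is also a natural hook for the feedback mechanism described above: the interface can label a response as premium or standard depending on which path was taken.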

Success stories from organizations that built significant products during Opus 4 trials provide blueprints for effective strategies. A legal tech startup used AWS Activate credits to build an AI-powered contract analysis platform, achieving product-market fit and raising $5M in funding before credits expired. An educational technology company leveraged Google Cloud credits to create personalized tutoring systems that outperformed human instructors in standardized testing, securing enterprise contracts that easily covered ongoing Opus 4 costs. A code generation tool built entirely during a 30-day enterprise trial proved so valuable that the development team convinced management to approve a six-figure annual Opus 4 budget based on productivity improvements demonstrated during the trial.

Migration planning should begin early in the trial period to ensure smooth transitions regardless of the ultimate decision about continued Opus 4 usage. Develop clear criteria for evaluating trial success, including quantitative metrics and qualitative feedback from users. Create contingency plans for various scenarios including continuing with Opus 4 at full price if ROI justifies the cost, negotiating enterprise agreements for better pricing based on trial usage volumes, transitioning to hybrid models using Opus 4 selectively, or migrating entirely to alternative models if Opus 4 doesn’t provide sufficient value. The key is maintaining optionality throughout the trial period, avoiding architectural decisions that lock you into specific models or providers.

Getting Started with Claude Opus 4 Free Trial Today

Your immediate action plan for accessing Claude Opus 4 trials should begin with the fastest available option while pursuing larger opportunities in parallel. Start by registering at https://api.laozhang.ai/register/?aff_code=JnIT to receive $10 in instant credits, enabling immediate Opus 4 experimentation without any waiting period. Use these initial credits to validate your use case and gather performance data that strengthens applications for larger programs. Simultaneously, begin applications for AWS Activate if you’re a startup, Google Cloud credits if you’re AI-focused, or enterprise trials if you represent an established organization. This parallel approach maximizes your chances of securing substantial trial access while avoiding delays from sequential applications.

Essential resources for maximizing your Opus 4 trial include Anthropic’s official documentation for understanding model capabilities and best practices, community forums where developers share optimization strategies and use cases, open-source libraries that simplify Opus 4 integration and provide helpful abstractions, and monitoring tools that track usage and help identify optimization opportunities. Join the Anthropic Discord server and relevant Reddit communities to learn from others’ experiences and avoid common pitfalls. Subscribe to AI newsletters and blogs that cover Opus 4 updates and techniques, ensuring you’re leveraging the latest capabilities and optimizations.

Your first week with Opus 4 trial access should focus on systematic evaluation across your intended use cases. Begin with simple prompts to understand the model’s response style and capabilities, then progressively increase complexity to explore its limits. Implement basic optimizations like prompt caching early to extend your trial credits from the start. Document everything meticulously, including prompts that work well, performance metrics for different tasks, cost per valuable output, and comparison results with alternative models. This documentation becomes invaluable for making informed decisions about continued Opus 4 usage and optimizing your implementation.
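Prompt caching in Anthropic’s Messages API is requested by marking a content block with a `cache_control` field. The sketch below only builds the request body as a plain dictionary so it runs without network access. The helper name `build_cached_request` is hypothetical, and the model ID is an assumption that you should check against your provider’s model list, since Bedrock, Vertex AI, and third-party gateways use their own identifiers.

```python
from typing import Any, Dict


def build_cached_request(system_prompt: str, user_message: str,
                         model: str = "claude-opus-4-20250514",  # assumed ID
                         max_tokens: int = 1024) -> Dict[str, Any]:
    """Build a Messages API request body with a cacheable system prompt."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": system_prompt,
                # Marks the prefix up to and including this block as cacheable.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [{"role": "user", "content": user_message}],
    }


if __name__ == "__main__":
    request = build_cached_request(
        system_prompt="You are a code reviewer. <long shared instructions here>",
        user_message="Review this function for bugs.",
    )
    print(request["system"][0]["cache_control"])
```

With the official `anthropic` Python SDK installed and an API key configured, a body like this can be passed as `client.messages.create(**request)`. Caching only pays off when the same long prefix is reused across requests, and very short prompts may not be cached at all, so put stable instructions and reference material in the system block and keep the varying part in the user message.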

Long-term success with Claude Opus 4 requires thinking beyond the trial period from day one. Build your application architecture to be model-agnostic, ensuring you can switch between providers or models as needed. Develop clear metrics for evaluating Opus 4’s value in your specific context, avoiding generic benchmarks that may not reflect your use case. Engage with your user community early to understand their perception of Opus 4-powered features and willingness to pay for enhanced capabilities. Most importantly, treat the trial period as a learning opportunity not just about Opus 4’s capabilities but about how AI can transform your product or service. The insights gained during trials often prove more valuable than the free credits themselves, informing strategic decisions about AI adoption that impact your organization for years to come.