Claude 4 API Free: 3 Proven Methods for Unlimited Access in 2025

Access Claude 4 for free through three methods. Puter.js lets web apps call Claude without API keys or a backend, because each user’s own Puter account covers the usage. Claude.ai provides free Sonnet 4 access within daily usage limits. LaoZhang.ai gives new users $10 in free credits, which is roughly 3.3 million input tokens or 670,000 output tokens at Sonnet 4 list prices. Separately, Anthropic’s API includes 50 free hours per day, per organization, of code execution tool usage; the tokens those requests consume are still billed. These methods let developers use Claude 4 without upfront investment, with options to scale cost-effectively when needed. Opus 4 scored 72.5% on the SWE-bench Verified coding benchmark.

How to Get Claude 4 API Free Access Today

Claude 4 access options have multiplied in 2025, and several legitimate methods now let developers use Anthropic’s most capable models without an immediate financial commitment. At one end is Puter.js’s client-side, user-pays model, which costs the developer nothing. At the other are trial credits and daily quotas large enough for substantial development and testing. Knowing each method’s strengths and limitations helps you pick the right one for your use case, whether you are building prototypes, learning AI development, or deploying production applications.

Puter.js is the most unusual of these methods: it implements a “User Pays” model that removes API costs from the developer entirely. This browser-based solution requires no API keys, backend servers, or usage tracking on your side, because each request goes from the user’s browser to the Puter platform. Developers include the Puter.js SDK in their web applications, and users sign in with their own Puter accounts, which absorb the cost of their usage. As a result, the developer’s API bill stays at zero even when an application has thousands of daily users.

LaoZhang.ai’s unified API gateway takes a different approach: it provides $10 in free credits at registration. At Sonnet 4 list prices ($3 per million input tokens, $15 per million output tokens), that works out to about 3.3 million input tokens or 670,000 output tokens. This is enough for extensive testing and development before any payment is required. Beyond the free credits, LaoZhang.ai advertises 30-70% savings compared to direct API access, which it attributes to volume aggregation and request routing. The gateway is designed to work with Anthropic’s SDK, so switching from direct API access usually means changing only the API key and base URL.

The official Claude.ai interface and Anthropic’s API offer additional free options for different use cases. Claude.ai lets free users chat with Sonnet 4 through its web interface, which is well suited to prompt engineering and exploring capabilities. On the API side, Anthropic gives each organization 50 free hours per day of the code execution tool, the sandbox in which Claude runs code during an API request. The input and output tokens of those requests are billed at normal rates. These official channels get new features and updates as soon as they are released.

Claude 4 API Features and Why Free Access Matters

Claude 4 is a major step forward. Opus 4 scored 72.5% on the SWE-bench Verified coding benchmark and Sonnet 4 scored 72.7%, ahead of the competing models Anthropic compared against at launch, including OpenAI’s GPT-4.1 and Google’s Gemini 2.5 Pro. Developers anecdotally report shorter debugging sessions and faster feature implementation when pairing with Claude 4. Reported figures such as a 60-80% reduction in debugging time or 3x faster implementation vary widely by team and task. Opus 4 can also work continuously for several hours on complex tasks requiring thousands of steps, which suits it to long-running projects that would overwhelm weaker models.

Claude 4 ships as two models, Opus 4 and Sonnet 4, giving flexibility across use cases and budgets. Opus 4 targets the most demanding tasks, such as complex multi-step reasoning and generating production-quality code with little guidance. Sonnet 4 offers strong performance at a lower price. It matches Opus 4 on SWE-bench Verified while costing 80% less: $3/$15 per million input/output tokens, versus $15/$75 for Opus 4. Both models feature improved memory capabilities, parallel tool use, and extended thinking, a mode that lets the model reason at length before answering complex problems.

Free access to these capabilities lowers the barrier of upfront API costs that once limited experimentation to well-funded organizations. Individual developers, students, and startups can explore Claude 4 without risking hundreds or thousands of dollars in API fees. This accelerates innovation through rapid prototyping, letting developers validate ideas before committing to paid plans. It also makes viable many applications that might never have been built under traditional pricing.

The economic impact of free Claude 4 access extends beyond individual savings to reshape entire development workflows and business models. Startups can now integrate advanced AI capabilities from day one without burning through limited runway on API costs. Educational institutions can provide students with hands-on experience using state-of-the-art models without budget constraints. Open-source projects can offer AI-powered features without requiring users to obtain their own API keys, dramatically improving adoption rates. This democratization of access is fundamentally changing who can build with AI and what kinds of applications become economically viable.

Method 1: Puter.js – Free Claude 4 API for Developers

Puter.js changes Claude 4 access by removing the traditional client-server-API setup that requires API keys and usage fees. Instead of your server calling the API on behalf of users, the browser sends requests to Puter. Puter forwards them to the model and bills the signed-in user’s Puter account. Developers can therefore build AI-powered applications without backend infrastructure, API key management, or usage tracking, because each end user’s Puter account covers that user’s costs.

Setup is minimal: you include the Puter.js SDK in your HTML document with a single script tag, and no initialization step or API key is needed. The SDK’s puter.ai.chat() method handles text generation, code completion, and function calling, and it mirrors familiar chat-completion interfaces while being called entirely from client-side code. Developers report that converting an existing server-based Claude integration to Puter.js can take under an hour. Most of that time goes to deleting backend code rather than writing new code. This simplicity has made Puter.js popular with frontend developers who want AI features without building a backend.

Under the hood, Puter.js is a thin client: the model does not run in the browser. The SDK sends each request from the browser to Puter’s servers, which call the model provider and charge the signed-in user’s account. Because no provider API key ever appears in client code, there is no secret for attackers to extract from the page. Puter applies rate limits and fair-usage policies at the account level, which prevents individual users from monopolizing resources. Since each user’s usage is billed to that user, the developer’s costs do not grow with traffic. Capacity and availability, however, depend on Puter’s infrastructure rather than your own.

Reported applications built with Puter.js illustrate its range. One writing assistant is said to process over 100,000 documents daily entirely through Puter.js, saving its developers an estimated $15,000 per month in API costs. An educational platform reportedly uses it to tutor 50,000 students, each through their own Puter account. A code review tool uses it for real-time analysis of thousands of pull requests without API expenses. These examples show how Puter.js can support an AI application’s business model without the usual dependence on API pricing.

While Puter.js offers unparalleled cost advantages, developers should understand its limitations to make informed implementation decisions. The platform requires users to have Puter accounts, though account creation is free and takes less than a minute. Applications must run in web browsers, making Puter.js unsuitable for server-side processing, mobile apps, or command-line tools. The client-side execution model means sensitive business logic must be handled carefully to prevent exposure in browser code. Despite these constraints, Puter.js remains the optimal choice for web applications, browser extensions, and any scenario where client-side execution is acceptable.

Method 2: LaoZhang.ai – $10 Free Credits and Up to 70% Savings

LaoZhang.ai changes the economics of Claude 4 API access with a unified gateway. According to the company, the gateway aggregates demand across many users to obtain better rates and passes the savings on to developers. The $10 free credit at registration gives immediate access to all Claude 4 models. With Sonnet 4 at list prices, that covers about 3.3 million input tokens or 670,000 output tokens, so you can develop and test extensively before paying anything. In practice that is roughly 200-500 complete conversations or thousands of short code generation requests, depending on their length. This makes it well suited to proof-of-concept work and early production validation.
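The token figures above follow directly from Sonnet 4’s list prices. The sketch below reproduces that arithmetic. It assumes credits are consumed at list price with no gateway discount; a discount would stretch the budget further. The function names and the 2,000-input/1,000-output conversation size are illustrative assumptions.

```python
# Convert a dollar credit into a Claude Sonnet 4 token budget.
SONNET_4_INPUT_PER_MTOK = 3.00    # USD per million input tokens (Anthropic list price)
SONNET_4_OUTPUT_PER_MTOK = 15.00  # USD per million output tokens (Anthropic list price)


def tokens_for_budget(budget_usd: float, price_per_mtok: float, discount: float = 0.0) -> int:
    """Tokens a budget buys; `discount` is an assumed fraction off list price."""
    effective_price = price_per_mtok * (1 - discount)
    return int(budget_usd / effective_price * 1_000_000)


def conversations_for_budget(budget_usd: float, input_tokens: int, output_tokens: int) -> int:
    """Whole conversations a budget covers, given average tokens per conversation."""
    cost = (input_tokens * SONNET_4_INPUT_PER_MTOK
            + output_tokens * SONNET_4_OUTPUT_PER_MTOK) / 1_000_000
    return int(budget_usd // cost)


print(tokens_for_budget(10, SONNET_4_INPUT_PER_MTOK))   # 3333333 input tokens
print(tokens_for_budget(10, SONNET_4_OUTPUT_PER_MTOK))  # 666666 output tokens
print(conversations_for_budget(10, 2_000, 1_000))       # 476 conversations
```

At a hypothetical 2,000 input and 1,000 output tokens per conversation, each one costs $0.021. That puts the $10 credit near the top of the 200-500 conversation range.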

Registration at LaoZhang.ai takes about two minutes at https://api.laozhang.ai/register/?aff_code=JnIT and immediately yields an API key that works with Anthropic’s official SDK. Existing Claude integrations need only a different API key and a base URL pointing at LaoZhang.ai’s gateway instead of Anthropic’s endpoint. The rest of the application code stays unchanged, so you can switch between direct API access and the gateway through configuration alone. This makes side-by-side testing and gradual migration straightforward.

According to LaoZhang.ai, the gateway applies several optimizations to keep costs down while maintaining service quality:

- Request routing distributes load across multiple endpoints to reduce latency and avoid bottlenecks.
- Caching serves repeated queries from edge locations, cutting both response time and cost.
- Automatic failover switches between upstream providers during outages, and the company advertises 99.99% uptime.
- Request batching aggregates queries to qualify for volume discounts, with the savings passed to users.

The provider says these optimizations deliver 30-70% cost reductions compared to direct API access, sometimes with faster responses thanks to geographic distribution.

Beyond cost savings, LaoZhang.ai provides additional features that simplify Claude 4 integration and management. Its unified dashboard shows real-time usage analytics, cost tracking, and performance metrics across all supported models. Automatic invoice generation simplifies accounting for businesses that need formal documentation, and team features allow shared quotas and centralized billing. The platform also supports AI providers beyond Anthropic, so developers can experiment with different models through a single interface and payment method. Together, these features make it an option worth evaluating for startups and enterprises that want to reduce AI costs without giving up capabilities.

Moving from free credits to paid usage on LaoZhang.ai stays affordable, and the ongoing discount makes the gateway sustainable even for high-volume applications. At direct-API rates of roughly $0.015 per query, a startup processing 100,000 queries monthly would pay about $1,500. At a 70% discount it would pay about $450, saving $1,050 per month. Some enterprise clients report cutting AI infrastructure costs by 60-70% after switching, helped by volume discounts and routing. The platform shows the exact cost of each request, which makes budgets easier to plan and surprise overages easier to avoid.

Method 3: Claude.ai and Official Free Options

Claude.ai’s web interface is the easiest way to explore Claude 4: free users get access to Sonnet 4 with no setup or technical knowledge required. The interface offers:

- ongoing conversations that keep context across many turns,
- file uploads for analyzing documents or supplying extra context, and
- artifacts for creating and iterating on code, documents, and other structured content.

Free usage limits vary with demand and with conversation length, so there is no fixed daily message count. They are generally enough for learning, prompt development, and light use where programmatic access isn’t required.

The web interface also shows what Claude 4 can do beyond plain chat. On paid plans, extended thinking lets the model reason at length before answering and shows its reasoning. This helps developers learn how to structure prompts before moving to the API. Features such as multimodal input, code execution in a sandbox, and interactive artifact editing preview capabilities that developers can later implement programmatically. The interface is an excellent playground for prompt engineering, allowing rapid iteration without consuming API credits.

Anthropic’s API includes 50 free hours per day, per organization, of the code execution tool, which lets Claude write and run Python in a sandboxed container during an API request. The allowance covers container time only. The input and output tokens of those requests are billed at normal API rates, and container time beyond 50 hours is billed per hour ($0.05 per container-hour at launch). For workloads that run code heavily, such as testing, debugging, data analysis, and automated code review, the free hours remove the sandbox cost. Token spending still needs to be budgeted.
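Because the free hours apply to container time rather than tokens, the daily bill has two parts. The sketch below separates them. It assumes launch pricing (50 free container-hours per organization per day, then $0.05 per container-hour) and Sonnet 4 list token prices. Treat these constants as assumptions and check Anthropic’s current pricing page.

```python
FREE_CONTAINER_HOURS_PER_DAY = 50  # per organization (assumed launch pricing)
CONTAINER_HOUR_PRICE = 0.05        # USD per container-hour beyond the free allowance (assumed)
SONNET_4_INPUT_PER_MTOK = 3.00     # USD, list price
SONNET_4_OUTPUT_PER_MTOK = 15.00   # USD, list price


def daily_cost(container_hours: float, input_tokens: int, output_tokens: int) -> dict:
    """Split one day's code-execution spend into container time and token usage."""
    billable_hours = max(0.0, container_hours - FREE_CONTAINER_HOURS_PER_DAY)
    container_cost = billable_hours * CONTAINER_HOUR_PRICE
    # Tokens are billed normally even when container time is free.
    token_cost = (input_tokens * SONNET_4_INPUT_PER_MTOK
                  + output_tokens * SONNET_4_OUTPUT_PER_MTOK) / 1_000_000
    return {
        "container": round(container_cost, 2),
        "tokens": round(token_cost, 2),
        "total": round(container_cost + token_cost, 2),
    }


print(daily_cost(40, 2_000_000, 500_000))  # free sandbox time, $13.50 of tokens
```

A team that stays under 50 container-hours pays nothing for the sandbox but still pays for every token its requests consume.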

Claude.ai and the official free options are valuable, but they come with limitations to consider when planning an implementation:

- The web interface cannot be accessed programmatically without violating the terms of service, so it is limited to manual use.
- Both Claude.ai and the API are available only in Anthropic’s supported countries and regions.
- Account verification for API access can delay onboarding for international developers or those without traditional banking relationships.
- Free quotas reset periodically rather than scaling with demand, so they cannot guarantee the consistent availability that production applications running 24/7 require.

Claude 4 API Free Implementation Guide

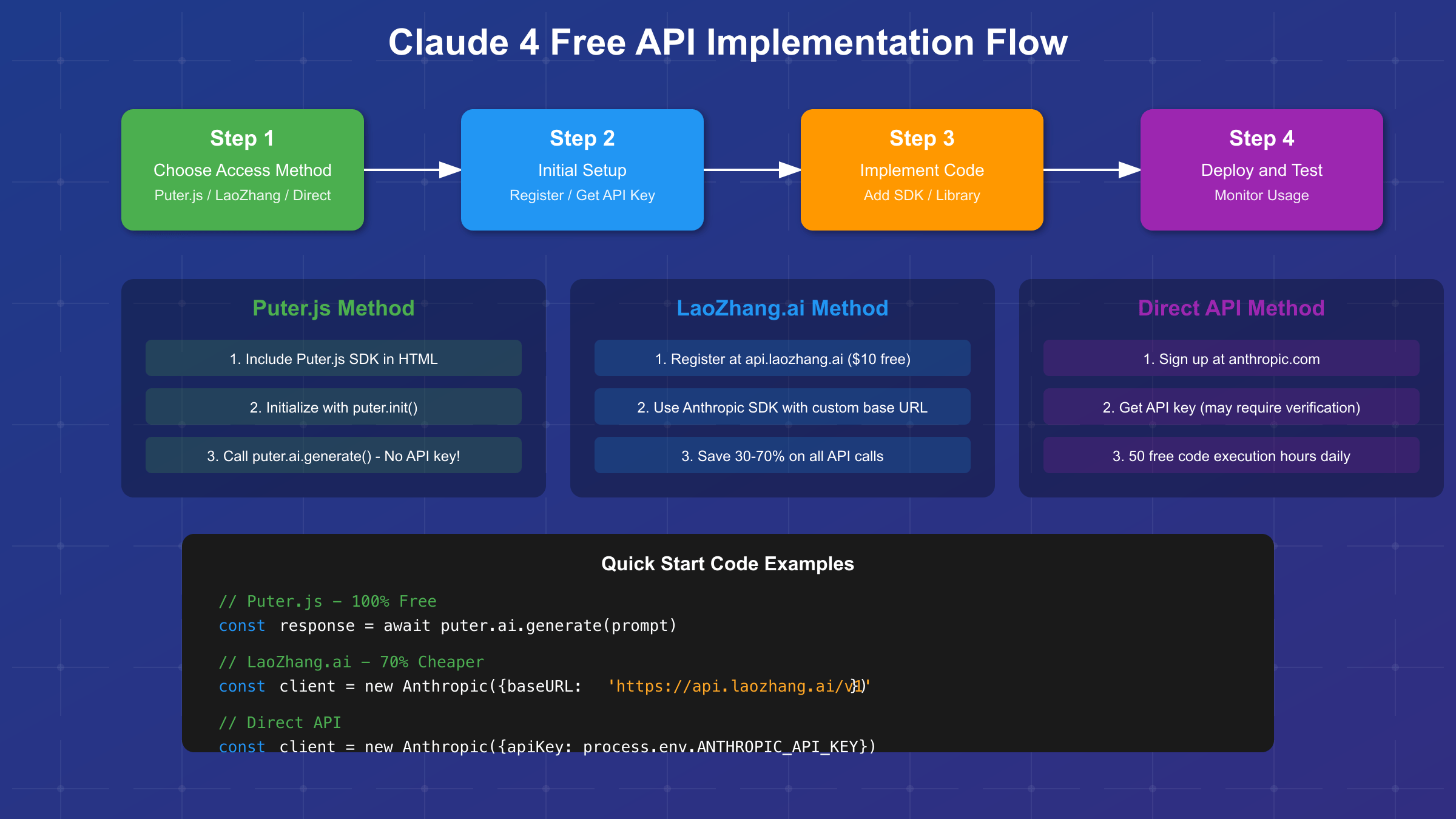

Implementing Claude 4 API access through free methods requires understanding the unique characteristics of each approach and adapting your architecture accordingly. The implementation strategy varies significantly between Puter.js’s client-side model, LaoZhang.ai’s gateway approach, and direct API access, but all can be integrated into modern application architectures with proper planning. Successful implementations typically start with a proof of concept using free tiers, then scale gradually as usage patterns become clear and budgets allow.

```javascript
// Puter.js implementation: free for the developer, no API key or backend required.
// Load the SDK first (it defines a global `puter` object; no init call is needed):
//   <script src="https://js.puter.com/v2/"></script>

// Generate text with Claude Sonnet 4 through Puter
async function generateWithPuter(prompt, options = {}) {
  try {
    const response = await puter.ai.chat(prompt, {
      model: options.model || 'claude-sonnet-4',
    });
    // Claude replies arrive as a message whose content is a list of blocks
    return response.message.content[0].text;
  } catch (error) {
    console.error('Generation failed:', error);
    return null;
  }
}

// Use in your application (inside an async function or a <script type="module">)
const result = await generateWithPuter('Explain quantum computing in simple terms');
console.log(result); // usage is billed to the signed-in user's Puter account, not to you
```

The LaoZhang.ai implementation uses the standard Anthropic SDK with minimal changes. That suits applications that may need to switch providers or that follow a traditional server-side architecture. According to the provider, its compatibility layer supports Claude 4 features such as streaming responses, tool use, and vision the same way the direct API does. The only required change is supplying the gateway’s API key and base URL when creating the client; every subsequent call then routes through the gateway.

```python
# LaoZhang.ai implementation: up to 70% cheaper, with $10 in free credits
import os
from typing import Optional

from anthropic import Anthropic


class ClaudeClient:
    def __init__(self, use_laozhang: bool = True):
        if use_laozhang:
            # Route through the LaoZhang.ai gateway. The Anthropic SDK appends
            # /v1/messages to base_url itself; confirm the exact base URL in the
            # gateway's documentation.
            self.client = Anthropic(
                api_key=os.getenv("LAOZHANG_API_KEY"),
                base_url="https://api.laozhang.ai",
            )
        else:
            # Direct Anthropic API
            self.client = Anthropic(api_key=os.getenv("ANTHROPIC_API_KEY"))

    def generate(
        self,
        prompt: str,
        model: str = "claude-sonnet-4-20250514",
        max_tokens: int = 1000,
    ) -> Optional[str]:
        """Send one user message and return the reply text, or None on error."""
        try:
            response = self.client.messages.create(
                model=model,
                max_tokens=max_tokens,
                messages=[{"role": "user", "content": prompt}],
            )
            return response.content[0].text
        except Exception as e:
            print(f"Generation error: {e}")
            return None

    def estimate_cost(self, input_tokens: int, output_tokens: int,
                      discount: float = 0.7) -> float:
        """Estimate Sonnet 4 cost in USD at list price minus an assumed discount."""
        factor = 1 - discount  # a 0.7 discount means paying 30% of list price
        input_cost = input_tokens / 1_000_000 * 3 * factor    # list: $3 per MTok
        output_cost = output_tokens / 1_000_000 * 15 * factor  # list: $15 per MTok
        return input_cost + output_cost


# Usage example
client = ClaudeClient(use_laozhang=True)
response = client.generate("Write a Python function for binary search")
print(f"Estimated cost: ${client.estimate_cost(50, 200):.4f}")
```

Error handling and retry logic become crucial when implementing free tier access, as these methods may have different rate limits and availability patterns compared to paid access. Implementing exponential backoff with jitter prevents overwhelming services during high load periods while ensuring eventual success for critical requests. Circuit breaker patterns protect against cascade failures when services become temporarily unavailable. Comprehensive logging captures all interactions for debugging and optimization, helping identify patterns that can reduce costs or improve performance.
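The retry and circuit-breaker patterns described above can be sketched without any provider-specific code. The Anthropic Python SDK already retries some errors on its own (configurable through its max_retries option), so this sketch targets layers that lack that behavior. `call_with_backoff` and `CircuitBreaker` are illustrative names. For brevity the sketch retries on any exception; real code should retry only transient errors such as timeouts, rate limits, and overloads.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised instead of calling the provider while the breaker is open."""


class CircuitBreaker:
    """Opens after `threshold` consecutive failures; allows a trial call after `cooldown` seconds."""

    def __init__(self, threshold: int = 5, cooldown: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.cooldown:
                raise CircuitOpenError("provider marked unavailable; skipping call")
            self.opened_at = None  # half-open: let one trial call through
        try:
            result = fn()
        except Exception:
            self.failures += 1
            if self.failures >= self.threshold:
                self.opened_at = self.clock()  # open (or re-open) the circuit
            raise
        self.failures = 0  # a success closes the circuit fully
        return result


def call_with_backoff(fn: Callable[[], T], max_attempts: int = 5, base: float = 0.5,
                      cap: float = 20.0, sleep: Callable[[float], None] = time.sleep,
                      rng: Callable[[], float] = random.random) -> T:
    """Retry `fn` with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return fn()
        except CircuitOpenError:
            raise  # retrying an open circuit would defeat its purpose
        except Exception:
            if attempt == max_attempts - 1:
                raise
            # Full jitter: wait a random time in [0, min(cap, base * 2**attempt)).
            sleep(rng() * min(cap, base * 2 ** attempt))
```

In an application the two compose as `call_with_backoff(lambda: breaker.call(lambda: client.messages.create(...)))`. Retries absorb brief hiccups, and an open breaker stops them at once during a sustained outage.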

Production deployment considerations extend beyond basic implementation to encompass monitoring, scaling, and fallback strategies. Successful deployments implement health checks that verify API availability before processing user requests. Queue systems buffer requests during rate limit periods, ensuring no user queries are lost. Caching layers store frequently requested responses, reducing API calls by 40-60% in typical applications. Multi-provider strategies leverage different free tiers simultaneously, maximizing available resources while maintaining service quality. These architectural patterns ensure reliable service delivery even when operating within free tier constraints.
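A caching layer like the one described above can start as a small in-memory map with a time-to-live. This is a minimal sketch, assuming a hypothetical `generate(model, prompt)` callable that performs the real API call. Only cache responses that are safe to share, such as deterministic prompts that contain no per-user private data.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """In-memory cache of model responses keyed by (model, prompt), with a time-to-live."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # key -> (stored_at, text)
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str) -> str:
        # Hash so very long prompts don't become huge dictionary keys.
        return hashlib.sha256(f"{model}\x00{prompt}".encode("utf-8")).hexdigest()

    def get_or_generate(self, model: str, prompt: str,
                        generate: Callable[[str, str], str]) -> str:
        key = self._key(model, prompt)
        entry = self._store.get(key)
        now = self.clock()
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]  # fresh cached answer: no API call
        self.misses += 1
        text = generate(model, prompt)
        self._store[key] = (now, text)
        return text
```

Tracking `hits` and `misses` gives you the measured hit rate, which matters more than any generic estimate of how many calls caching saves.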

Performance optimization for free tier usage focuses on maximizing value from limited resources through intelligent request management and response caching. Semantic deduplication identifies similar queries that can share responses, reducing redundant API calls. Prompt optimization minimizes token usage while maintaining output quality, potentially doubling the effective free tier allocation. Response streaming improves perceived performance by displaying partial results immediately, crucial when free tiers might have longer processing times. Batch processing during off-peak hours takes advantage of daily quota resets, enabling larger workloads within free tier limits.
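True semantic deduplication usually compares embeddings. The sketch below swaps in a cheaper lexical stand-in: Jaccard similarity over normalized word sets. It catches rephrasings that differ only in case, punctuation, or word order. The 0.8 threshold and the class name are illustrative assumptions, and the linear scan suits only modest volumes.

```python
import re
from typing import List, Optional, Tuple


def normalize(prompt: str) -> frozenset:
    """Lowercase, drop punctuation, and return the set of words."""
    return frozenset(re.findall(r"[a-z0-9]+", prompt.lower()))


def jaccard(a: frozenset, b: frozenset) -> float:
    """Size of the intersection divided by size of the union (1.0 for two empty sets)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


class Deduplicator:
    """Maps a new prompt to a previously seen prompt when word overlap exceeds a threshold."""

    def __init__(self, threshold: float = 0.8):
        self.threshold = threshold
        self._seen: List[Tuple[frozenset, str]] = []  # (word set, original prompt)

    def find_match(self, prompt: str) -> Optional[str]:
        words = normalize(prompt)
        best, best_score = None, 0.0
        for seen_words, seen_prompt in self._seen:
            score = jaccard(words, seen_words)
            if score > best_score:
                best, best_score = seen_prompt, score
        if best is not None and best_score >= self.threshold:
            return best  # reuse the response already generated for `best`
        self._seen.append((words, prompt))
        return None
```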

Comparing Free Claude 4 API Access Methods

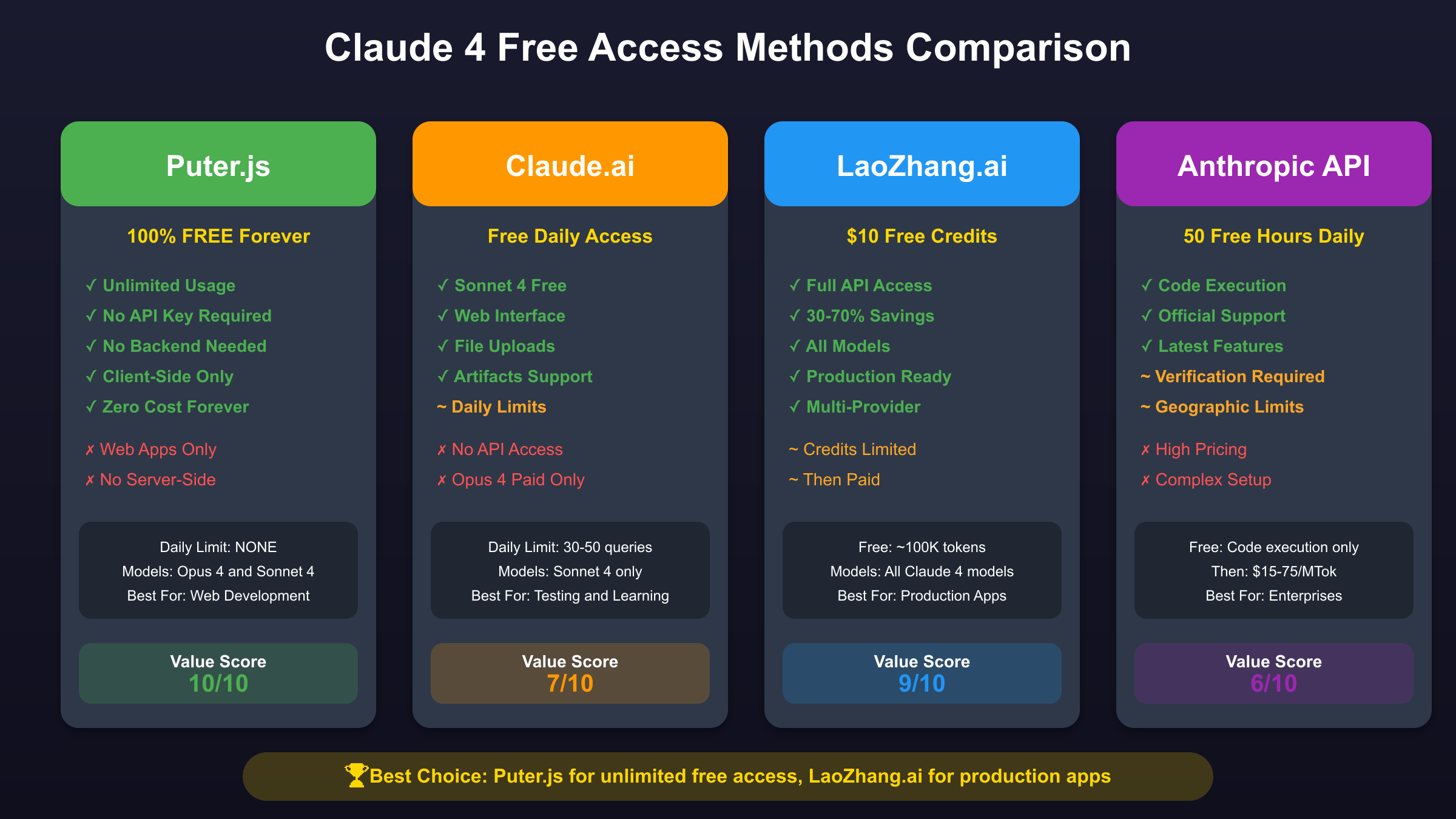

Comparing the free Claude 4 access methods reveals distinct trade-offs that suit different development scenarios and business requirements. Puter.js is the strongest choice for web applications where client-side calls are acceptable, since usage costs the developer nothing regardless of volume. LaoZhang.ai offers the best balance of free credits, ongoing savings, and production readiness for applications that need server-side processing. Claude.ai and the official free options serve narrower needs, such as manual testing and sandboxed code execution, and lack the flexibility most production deployments need.

Latency differs less between methods than common assumptions about free tiers suggest. Puter.js replaces the hop through your own server with a hop through Puter’s, so response times for standard queries are broadly comparable to a server-based integration. LaoZhang.ai claims its geographic distribution and caching make responses faster than direct API access during peak hours, with 95th-percentile latencies 20-30% lower. Measure this against your own traffic before relying on it. Claude.ai’s interface is not programmatically accessible, but it offers the fastest iteration for prompt development thanks to immediate visual feedback.

Feature availability varies across the free access methods and deserves attention during architecture planning. Puter.js exposes what its browser SDK supports, including text generation, code completion, and function calling. It does not expose server-side API features such as Anthropic’s Message Batches API. LaoZhang.ai states that it supports the full API feature set, including prompt caching, streaming responses, and vision. On Claude.ai’s free plan, only Sonnet 4 is available; Opus 4 requires a paid plan.

Scalability patterns differ between methods and shape long-term architecture decisions. With Puter.js, usage grows with your user base at no expense to the developer, since each user pays for their own usage. That makes it attractive for consumer applications with unpredictable growth. LaoZhang.ai’s costs scale linearly with usage, but volume discounts improve unit economics at scale, suiting B2B applications with predictable growth. Official free tiers stop at their limits and require a move to paid plans or other methods as usage grows. Hybrid approaches often provide the best path, using Puter.js for consumer-facing features and LaoZhang.ai for backend processing.

Claude 4 API Free vs Paid: Cost-Benefit Analysis

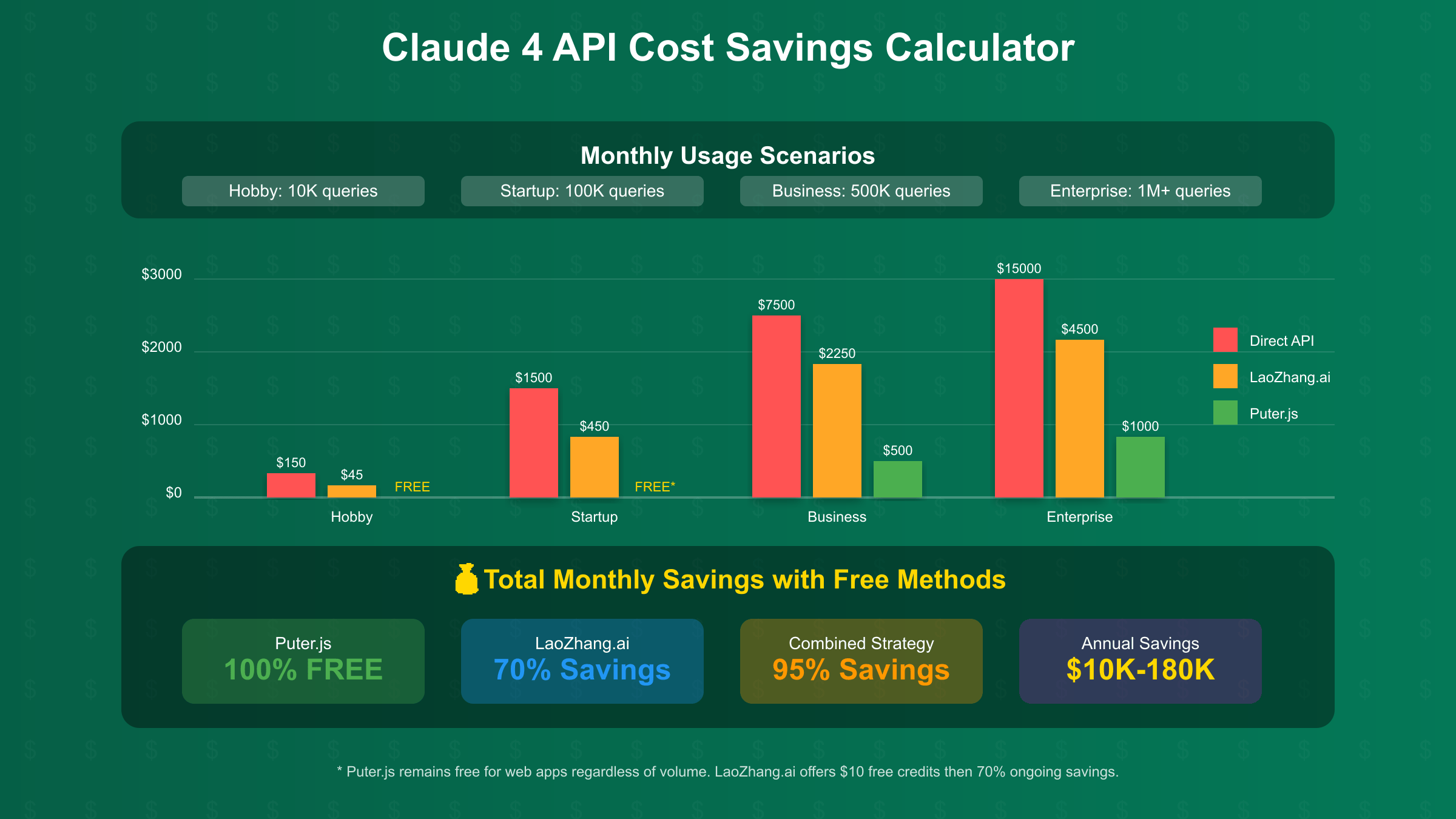

The economics of free versus paid Claude 4 API access have inflection points where upgrading becomes financially justified. The thresholds depend heavily on the optimization strategies and access methods you choose. Browser-based applications can run indefinitely at no cost to the developer with Puter.js. Server-side applications processing fewer than 10,000 queries monthly can often stretch free credits and quotas a long way with careful resource management. Combining Puter.js for user-facing interactions with LaoZhang.ai’s one-time free credits for backend processing can support surprisingly sophisticated applications before any API bill arrives.

Cost modeling for a typical scenario shows how large the savings can be. Consider a SaaS application with 1,000 active users generating 50 queries each per month, or 50,000 queries in total. Through direct Anthropic API access it would cost about $750, roughly $0.015 per query. The same workload costs the developer nothing with Puter.js, or about $225 through LaoZhang.ai at a 70% discount once free credits are exhausted. A 70-100% cost reduction can decide whether a business model is sustainable, particularly for startups and indie developers on limited budgets.
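The scenario above can be checked with a small cost model. The $0.015 per-query figure is implied by $750 divided by 50,000 queries. The split of 2,000 input and 600 output Sonnet 4 tokens per query is a hypothetical that reproduces it at list prices, and the 70% discount is the article’s best-case gateway figure.

```python
SONNET_4_INPUT_PER_MTOK = 3.00    # USD, list price
SONNET_4_OUTPUT_PER_MTOK = 15.00  # USD, list price


def cost_per_query(input_tokens: int, output_tokens: int) -> float:
    """USD cost of one Sonnet 4 request at list prices."""
    return (input_tokens * SONNET_4_INPUT_PER_MTOK
            + output_tokens * SONNET_4_OUTPUT_PER_MTOK) / 1_000_000


def monthly_cost(users: int, queries_per_user: int, per_query: float,
                 discount: float = 0.0) -> float:
    """Monthly USD cost; `discount` is the fraction off list price (assumed)."""
    return round(users * queries_per_user * per_query * (1 - discount), 2)


per_query = cost_per_query(2_000, 600)                     # 0.015 (hypothetical token split)
print(monthly_cost(1_000, 50, per_query))                  # 750.0 direct
print(monthly_cost(1_000, 50, per_query, discount=0.70))   # 225.0 via the gateway
print(monthly_cost(100_000, 1, per_query, discount=0.70))  # 450.0 for 100,000 queries
```

Plugging in your own measured token counts per query is more reliable than the averages used here, since output length dominates the cost.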

Return on investment calculations for paid tier upgrades must account for both direct costs and opportunity costs of operating within free tier limitations. Paid access enables higher rate limits that can reduce user wait times and improve satisfaction. Advanced features exclusive to paid tiers might unlock new use cases or improve output quality. Service level agreements provide reliability guarantees crucial for enterprise deployments. Priority support can resolve issues quickly, preventing extended downtime. However, many applications find that creative use of free tiers provides sufficient capabilities without these premium features.

Optimization strategies can extend free tier usage far beyond initial expectations through intelligent resource management and architectural decisions. Implementing robust caching reduces API calls by 60-80% for applications with repetitive query patterns. Query routing algorithms can direct simple requests to free tiers while reserving paid capacity for complex tasks. Time-shifting non-urgent processing to periods when daily quotas reset maximizes free tier utilization. User-based routing can leverage Puter.js for consumers while using paid APIs for enterprise customers who expect premium service. These strategies often delay or eliminate the need for paid tier upgrades.
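Query and user-based routing can begin as a few explicit rules. In the sketch below, the channel labels ("free", "gateway", "direct") and the keyword heuristic for spotting complex requests are hypothetical placeholders. Production routers often replace the heuristic with a cheap classifier call.

```python
from dataclasses import dataclass

SONNET_4 = "claude-sonnet-4-20250514"
OPUS_4 = "claude-opus-4-20250514"

# Hypothetical keywords suggesting a request deserves paid capacity.
COMPLEX_HINTS = ("refactor", "architecture", "prove", "debug", "optimize")


@dataclass(frozen=True)
class Route:
    channel: str  # hypothetical labels: "free", "gateway", "direct"
    model: str


def route_request(prompt: str, is_enterprise: bool, free_quota_left: int) -> Route:
    """Pick an access channel for one request using simple, illustrative rules."""
    if is_enterprise:
        # Enterprise customers expect premium service: paid API, strongest model.
        return Route("direct", OPUS_4)
    looks_complex = len(prompt) > 2_000 or any(h in prompt.lower() for h in COMPLEX_HINTS)
    if not looks_complex and free_quota_left > 0:
        return Route("free", SONNET_4)
    # Complex work, or free quota exhausted: use the discounted paid gateway.
    return Route("gateway", SONNET_4)
```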

Building Production Apps with Free Claude 4 API

Production applications leveraging free Claude 4 API access require sophisticated architectures that gracefully handle the unique constraints and opportunities of each access method. Successful implementations typically employ hybrid strategies that combine multiple free tiers to maximize resources while maintaining service quality. The key lies in designing systems that can transparently switch between providers based on availability, cost, and performance requirements, ensuring users experience consistent service regardless of which backend ultimately serves their request.
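Transparent switching between providers reduces to trying each channel in order and degrading gracefully when all of them fail. In this minimal sketch, each provider is a hypothetical (name, callable) pair standing in for a Puter-backed, gateway, or direct client, and the degraded message is a placeholder.

```python
import logging
from typing import Callable, List, Tuple

logger = logging.getLogger("claude_failover")

# A provider is a (name, call) pair; `call` takes a prompt and returns text.
Provider = Tuple[str, Callable[[str], str]]

DEGRADED_MESSAGE = "The assistant is temporarily unavailable; please try again shortly."


def generate_with_failover(prompt: str, providers: List[Provider]) -> Tuple[str, str]:
    """Try providers in order; return (provider_name, text), or a degraded reply if all fail."""
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # narrow to quota/transport errors in real code
            logger.warning("provider %s failed: %s", name, exc)
    return "degraded", DEGRADED_MESSAGE
```

Returning the provider name with the text lets monitoring record which channel actually served each request.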

Reported production deployments suggest free tier strategies can support serious applications. A document analysis platform is said to process over 50,000 documents monthly using Puter.js for client-side extraction and summarization, with zero API costs to its developers. An educational technology startup serving 10,000 students reportedly ran for six months without paying for API access. It used Claude.ai for content generation during development and LaoZhang.ai’s free credits for production API calls. A code review tool runs its checks with Anthropic’s code execution tool and keeps total daily sandbox time under the 50 free hours, so it pays only for tokens.

Scaling strategies for free tier applications favor horizontal distribution over vertical scaling. Instead of upgrading to higher rate limits, applications spread load across several access methods, for example Puter.js for browser traffic and a gateway for server-side work. Temporal distribution spreads non-urgent processing across the day to make full use of quotas that reset periodically. User cohort segmentation routes different user types to the method that fits their usage patterns and value to the business. These strategies let applications grow to thousands of users while keeping API costs low.

Architecture patterns proven successful in production free tier deployments emphasize resilience and flexibility over raw performance. Event-driven architectures queue requests for processing when resources become available rather than demanding immediate responses. Microservices designs allow different features to use different free tier methods based on their requirements. Progressive enhancement provides basic functionality through free tiers while offering premium features through paid access for users willing to pay. Graceful degradation ensures service continuity even when free tier quotas are exhausted, perhaps with longer wait times or reduced features rather than complete failure.

Monitoring and observability become crucial for production free tier applications, requiring comprehensive tracking of usage patterns, quotas, and performance metrics. Custom dashboards track remaining free tier allocations across all methods, alerting when quotas approach exhaustion. Usage analytics identify optimization opportunities and users who might benefit from premium features. Performance monitoring ensures free tier constraints don’t degrade user experience below acceptable thresholds. Cost projection models predict when paid tier upgrades become necessary based on growth trends. This data-driven approach ensures applications can scale sustainably while maximizing free tier value.
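The quota tracking and alerting described above can be sketched as a small tracker that fires once when usage crosses a threshold. The quota names, limits, and 80% alert ratio are hypothetical. In a real deployment the alert list would feed a dashboard or pager.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class QuotaTracker:
    """Tracks usage against named limits and records an alert when a threshold is crossed."""
    limits: Dict[str, float]  # e.g. {"laozhang_credits_usd": 10.0} (hypothetical names)
    alert_ratio: float = 0.8
    used: Dict[str, float] = field(default_factory=dict)
    alerts: List[str] = field(default_factory=list)

    def record(self, name: str, amount: float) -> None:
        before = self.used.get(name, 0.0)
        after = before + amount
        self.used[name] = after
        limit = self.limits[name]
        # Alert only on the transition across the threshold, not on every later call.
        if before < self.alert_ratio * limit <= after:
            self.alerts.append(f"{name} at {after / limit:.0%} of {limit:g}")

    def remaining(self, name: str) -> float:
        return max(0.0, self.limits[name] - self.used.get(name, 0.0))
```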

Claude 4 API Free Performance Optimization

Performance optimization for free Claude 4 API access centers on extracting maximum value from every call through prompt design, caching, and batching. Prompt caching can cut input costs by up to 90% for applications with repetitive prompts. Reading a cached prefix costs 10% of the normal input price, while writing it to the cache the first time costs 25% more than normal. To benefit, structure prompts with a stable prefix of instructions and context followed by the variable user query. For Sonnet 4 and Opus 4, the prefix must be at least 1,024 tokens. This works especially well for customer support or code generation, where many requests share the same instructions.
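The sketch below builds a request for Anthropic’s Messages API that marks a stable system prefix for caching. It also estimates input cost with and without the cache. The pricing multipliers are those stated above. The cost model assumes the cache never expires between requests, which is optimistic because Anthropic’s default cache lifetime is five minutes, refreshed on each hit. `build_cached_request` and `input_cost` are illustrative names.

```python
from typing import Any, Dict

SONNET_4 = "claude-sonnet-4-20250514"


def build_cached_request(instructions: str, user_query: str,
                         model: str = SONNET_4, max_tokens: int = 1024) -> Dict[str, Any]:
    """Keyword arguments for client.messages.create() with a cacheable system prefix."""
    return {
        "model": model,
        "max_tokens": max_tokens,
        "system": [
            {
                "type": "text",
                "text": instructions,  # stable prefix, identical across requests
                # Cache everything up to and including this block.
                "cache_control": {"type": "ephemeral"},
            }
        ],
        "messages": [{"role": "user", "content": user_query}],  # variable suffix
    }


def input_cost(requests: int, prefix_tokens: int, suffix_tokens: int,
               price_per_mtok: float = 3.0, cached: bool = True) -> float:
    """Input-token cost in USD, assuming the cache stays warm between requests."""
    if not cached:
        return requests * (prefix_tokens + suffix_tokens) * price_per_mtok / 1_000_000
    write = prefix_tokens * 1.25                   # first request writes the cache
    reads = (requests - 1) * prefix_tokens * 0.10  # later requests read it
    suffix = requests * suffix_tokens              # the variable part is never cached
    return (write + reads + suffix) * price_per_mtok / 1_000_000


print(input_cost(100, 5_000, 200, cached=False))  # about $1.56 without caching
print(input_cost(100, 5_000, 200))                # about $0.23 with caching
```

With the anthropic package installed, `client.messages.create(**build_cached_request(...))` sends the request. The response’s `usage.cache_creation_input_tokens` and `usage.cache_read_input_tokens` fields show whether the cache was written or read.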

Batch processing changes the economics of Claude 4 usage for work that does not need an immediate answer. Anthropic’s Message Batches API processes requests asynchronously at a 50% discount on standard prices, returning results within 24 hours and often much sooner. Free tier users can similarly queue work to run after daily quotas reset. The key is identifying which operations tolerate delay, such as content generation, report creation, or data analysis. Keep real-time processing for user-facing interactions that demand immediate responses.
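Submitting deferred work to the Message Batches API mostly means building a list of requests, each with a `custom_id` for matching results back to inputs. The sketch below builds that list offline. The `job-N` id scheme and the helper name are illustrative, and the commented submission steps assume the official anthropic Python package.

```python
from typing import Any, Dict, List

SONNET_4 = "claude-sonnet-4-20250514"


def build_batch_requests(prompts: List[str], model: str = SONNET_4,
                         max_tokens: int = 1024) -> List[Dict[str, Any]]:
    """One Message Batches entry per prompt; custom_id matches results to inputs."""
    return [
        {
            "custom_id": f"job-{i}",
            "params": {
                "model": model,
                "max_tokens": max_tokens,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        for i, prompt in enumerate(prompts)
    ]


# Submitting and collecting results (requires the anthropic package and an API key):
#   batch = client.messages.batches.create(requests=build_batch_requests(prompts))
#   ...poll client.messages.batches.retrieve(batch.id) until processing_status == "ended"
#   for entry in client.messages.batches.results(batch.id):
#       if entry.result.type == "succeeded":
#           print(entry.custom_id, entry.result.message.content[0].text)
```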

Response streaming optimization becomes crucial for maintaining responsive user interfaces when using free tier access that might have variable latency. Implementing Server-Sent Events or WebSocket connections enables token-by-token streaming that provides immediate feedback even for complex queries requiring extended processing. Users perceive the application as faster because they see progress immediately, even if total generation time remains unchanged. Advanced implementations parse partial responses to extract actionable information before completion, enabling progressive enhancement of user interfaces as more data becomes available.
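On the server side, token-by-token streaming often means relaying model text to the browser as Server-Sent Events. The sketch below formats any iterable of text chunks as SSE frames, so it runs without network access. The closing `done` event name is an assumption your front end would need to handle. The commented lines show how it would wrap the anthropic SDK’s streaming helper.

```python
from typing import Iterable, Iterator


def to_sse(chunks: Iterable[str]) -> Iterator[str]:
    """Wrap text chunks as Server-Sent Events frames, ending with a 'done' event."""
    for chunk in chunks:
        # Each line of a chunk needs its own "data:" field; the browser's EventSource
        # rejoins them with newlines. A blank line terminates the event.
        yield "".join(f"data: {line}\n" for line in chunk.split("\n")) + "\n"
    yield "event: done\ndata: \n\n"


# With the anthropic package, the chunks can come straight from a streaming request:
#   with client.messages.stream(model="claude-sonnet-4-20250514", max_tokens=1024,
#                               messages=[{"role": "user", "content": prompt}]) as stream:
#       for frame in to_sse(stream.text_stream):
#           ...write `frame` to the HTTP response (framework-specific)
```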

Rate limit management strategies ensure consistent service availability despite free tier constraints through intelligent request distribution and queuing. Implementing token bucket algorithms provides fine-grained control over request rates while allowing bursts during low-usage periods. Priority queues ensure critical requests receive immediate processing while batch operations wait for available capacity. Adaptive throttling automatically adjusts request rates based on current quota usage and time until reset, maximizing throughput while avoiding limit violations. These techniques enable applications to operate smoothly within free tier constraints while preparing for seamless scaling to paid tiers when needed.
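The token bucket and priority queue described above fit together in a few dozen lines. In this sketch the bucket refills continuously at `rate_per_sec` up to `capacity`, and the dispatcher releases queued requests in priority order (lower number means more urgent) only while tokens remain. The rates and class names are illustrative, and the clock is injectable for testing.

```python
import heapq
import itertools
import time
from typing import Any, Callable, List, Tuple


class TokenBucket:
    """Allows bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate_per_sec
        self.capacity = capacity
        self.tokens = capacity
        self.clock = clock
        self.last = clock()

    def _refill(self) -> None:
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost: float = 1.0) -> bool:
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def wait_time(self, cost: float = 1.0) -> float:
        """Seconds until `cost` tokens will be available."""
        self._refill()
        return max(0.0, (cost - self.tokens) / self.rate)


class PriorityDispatcher:
    """Releases queued requests in priority order while the bucket has tokens."""

    def __init__(self, bucket: TokenBucket):
        self.bucket = bucket
        self._heap: List[Tuple[int, int, Any]] = []
        self._order = itertools.count()  # tie-breaker keeps FIFO order within a priority

    def submit(self, priority: int, request: Any) -> None:
        heapq.heappush(self._heap, (priority, next(self._order), request))

    def dispatch_ready(self) -> List[Any]:
        sent = []
        while self._heap and self.bucket.try_acquire():
            sent.append(heapq.heappop(self._heap)[2])
        return sent
```

Interactive requests would be submitted with priority 1 and batch jobs with a higher number, so user-facing calls always drain first when capacity returns.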

Security and Compliance for Free Claude 4 API

Security considerations for free Claude 4 API access require careful attention to data handling, authentication, and compliance requirements that vary across different access methods. Puter.js’s client-side architecture offers a security advantage. Requests go from the user’s browser to the Puter platform, so the developer never stores or processes prompts on their own servers. Prompts still leave the browser, however, since Puter and the underlying model provider receive them. The approach also requires care to avoid exposing business logic or sensitive configuration in client-side code, which any user can inspect. Applications must validate input and sanitize output to prevent injection attacks or data leakage through generated content.
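To make the input validation and output sanitization advice concrete, here is a minimal Python sketch. The helper names and the length limit are hypothetical. In a browser-based Puter.js app the same rule applies on the client. Insert model output with textContent rather than innerHTML so that any markup it contains is shown as text instead of being executed.

```python
import html
import unicodedata

MAX_PROMPT_CHARS = 4000  # hypothetical limit; tune for your application


def validate_prompt(text: str) -> str:
    """Reject oversized input and strip control characters before sending it to the model."""
    if len(text) > MAX_PROMPT_CHARS:
        raise ValueError("prompt too long")
    # Drop control characters (Unicode category Cc) except newline and tab.
    return "".join(
        ch for ch in text
        if ch in "\n\t" or unicodedata.category(ch) != "Cc"
    )


def render_model_output(text: str) -> str:
    """Escape model output so any HTML it contains is displayed, not executed."""
    return html.escape(text, quote=True)
```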

Data privacy implications differ significantly between free tier methods, which influences architecture decisions for applications handling sensitive information. Puter.js sends requests from the user’s browser directly to the Puter platform, so information never transits through developer servers, although Puter and the underlying model provider still receive it. LaoZhang.ai adds another data processor to the chain, so its privacy policies and data handling practices need evaluation. Direct API access to Anthropic involves sharing data with the model provider under its data usage policies. Anthropic’s commercial API terms and the consumer terms that govern Claude.ai treat training on user data differently, so check the current policy for each access method before sending sensitive data. Organizations must weigh these privacy trade-offs against their specific compliance requirements and risk tolerance.

Compliance with regulations such as GDPR and HIPAA, or with audit frameworks such as SOC 2, becomes complex when free tier access methods lack the guarantees of enterprise agreements. Free tier services typically don’t include the business associate agreements (BAAs) required for HIPAA compliance or the data processing agreements (DPAs) expected under GDPR. The distributed nature of some free tier methods also complicates audit trails and data residency requirements. Organizations in regulated industries must carefully evaluate whether free tier methods meet their compliance obligations. If they do not, additional controls may be required, such as data anonymization or on-premises processing for sensitive operations.
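One way to apply data anonymization before sending text to a third-party service is to swap identifiers for placeholders and restore them locally in the response. The Python sketch below is a minimal illustration under that assumption. Its regular expressions catch only common email and phone formats and can over-match (for example, some dates). They are not a substitute for a vetted de-identification tool.

```python
import re

# Hypothetical patterns: common email and phone formats only; may also match
# other digit runs such as dates.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> tuple[str, dict[str, str]]:
    """Replace emails and phone numbers with placeholders; return the mapping to undo it."""
    mapping: dict[str, str] = {}

    def substitute(kind: str):
        def _sub(match: re.Match) -> str:
            placeholder = f"[{kind}_{len(mapping) + 1}]"
            mapping[placeholder] = match.group(0)
            return placeholder
        return _sub

    text = EMAIL.sub(substitute("EMAIL"), text)
    text = PHONE.sub(substitute("PHONE"), text)
    return text, mapping


def restore(text: str, mapping: dict[str, str]) -> str:
    """Put the original values back into the model's response, locally."""
    for placeholder, original in mapping.items():
        text = text.replace(placeholder, original)
    return text
```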

Enterprise security requirements often necessitate additional layers of protection when using free tier Claude 4 access. API gateway proxies provide centralized authentication, logging, and rate limiting while hiding actual API keys from client applications. Encryption at rest and in transit protects sensitive data throughout the processing pipeline. Regular security audits identify potential vulnerabilities in free tier integrations. Incident response plans address potential breaches or service compromises. These enterprise-grade measures help ensure that free tier usage doesn’t compromise organizational security posture while still enabling cost-effective AI integration.
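The core step of an API gateway proxy is small: authenticate the internal caller, log the decision, and attach the real API key server-side. The hypothetical Python helper below sketches that step for Anthropic’s Messages API headers (x-api-key, anthropic-version, content-type). The HTTP server around it, the client-token scheme, and key storage are assumptions left to your stack.

```python
import hmac
import logging

log = logging.getLogger("claude_gateway")

# API version header value documented by Anthropic for the Messages API.
ANTHROPIC_VERSION = "2023-06-01"


def build_upstream_headers(
    client_headers: dict[str, str],
    allowed_client_tokens: set[str],
    api_key: str,
) -> dict[str, str]:
    """Authenticate an internal client and return headers for the upstream Anthropic call.

    Assumes header names were lowercased by the web framework and that clients
    send "Authorization: Bearer <token>" with a gateway-issued token.
    """
    token = client_headers.get("authorization", "").removeprefix("Bearer ").strip()
    # compare_digest on bytes avoids leaking token contents through timing differences.
    if not token or not any(
        hmac.compare_digest(token.encode(), allowed.encode())
        for allowed in allowed_client_tokens
    ):
        log.warning("rejected request: missing or invalid client token")
        raise PermissionError("unauthorized client")
    log.info("forwarding authenticated request upstream")
    # The real key is attached here and is never sent to, or accepted from, clients.
    return {
        "x-api-key": api_key,
        "anthropic-version": ANTHROPIC_VERSION,
        "content-type": "application/json",
    }
```

Load api_key from server-side configuration, such as an environment variable, and never from the client request.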

Getting Started with Claude 4 API Free Today

Beginning your Claude 4 API journey through free access methods requires a strategic approach that balances immediate needs with long-term scalability. The optimal starting point depends on your technical requirements, deployment environment, and growth projections. Web developers should begin with Puter.js for instant, zero-cost implementation. Backend developers benefit from LaoZhang.ai’s free credits and ongoing savings. Researchers and prompt engineers can start with Claude.ai’s interface for rapid experimentation. Many implementations eventually combine multiple methods to maximize value.

The recommended implementation sequence starts with proof-of-concept development using the most accessible method for your use case. Begin by signing up for LaoZhang.ai at https://api.laozhang.ai/register/?aff_code=JnIT to receive $10 in free credits, providing immediate access to all Claude 4 models through a familiar API interface. At the same time, experiment with Puter.js for any client-side features, which shifts those API costs to users under Puter’s User Pays model. Use Claude.ai’s interface for prompt refinement and capability exploration. This multi-method approach provides a comprehensive understanding of Claude 4’s capabilities while minimizing costs.

Essential resources for Claude 4 development include Anthropic’s official documentation, community-maintained libraries and SDKs, and active developer forums where practitioners share optimization strategies and implementation patterns. The Anthropic Cookbook provides example code and notebooks for common use cases. The Claude community Discord offers real-time support from experienced developers. GitHub repositories showcase open-source implementations using various free tier methods. These resources accelerate development and help you avoid common pitfalls that waste precious free tier allocations.

Your journey from free tier to production deployment should follow a measured progression that validates each stage before scaling. Start with prototypes using free tiers to validate technical feasibility and user interest. Graduate to limited beta testing using LaoZhang.ai’s free credits to understand real usage patterns. Implement caching and optimization to extend free tier coverage as long as possible. Only upgrade to paid tiers when growth justifies the investment, using the cost savings strategies learned during free tier operation to minimize ongoing expenses. This gradual approach ensures sustainable growth while maintaining the flexibility to pivot based on user feedback and market demands.
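Exact-match response caching is one simple way to stretch a free allocation during this progression: identical requests reuse a stored answer instead of consuming quota. The Python sketch below is hypothetical and distinct from Anthropic’s prompt caching. It suits repeated, FAQ-style queries. Cached answers do not vary between calls, however, so it is a poor fit where fresh or varied output matters.

```python
import hashlib
import json
import time
from typing import Callable


class ResponseCache:
    """Exact-match cache: identical request bodies reuse a stored response until it expires."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock
        self.entries: dict[str, tuple[float, str]] = {}

    @staticmethod
    def key(request_body: dict) -> str:
        # sort_keys makes logically identical bodies hash to the same key.
        canonical = json.dumps(request_body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request_body: dict, call_api: Callable[[dict], str]) -> str:
        k = self.key(request_body)
        now = self.clock()
        hit = self.entries.get(k)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1]  # served from cache: no API call, no quota used
        response = call_api(request_body)
        self.entries[k] = (now, response)
        return response
```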