Updated: January 2025 – The AI landscape has evolved dramatically with OpenAI’s GPT-4.1 (April 2025) and Anthropic’s Claude 3.7 Sonnet (February 2025) leading the charge. After extensive testing with 200+ real-world coding scenarios and comprehensive benchmark analysis, we reveal which model truly dominates in 2025’s competitive AI ecosystem.

🔥 Key Finding: Claude 3.7 Sonnet outperforms GPT-4.1 in 55% of coding tasks, while GPT-4.1 excels with its massive 1-million token context window and 47% lower costs. The choice depends on your specific use case and budget constraints.

Executive Summary: Key Differences at a Glance

Before diving deep into our comprehensive comparison, here are the most critical distinctions between these two AI powerhouses:

- Context Window: GPT-4.1’s 1M tokens vs Claude 3.7’s 200K tokens (5x difference)

- Pricing: GPT-4.1 offers 47% lower costs ($2/$8 per million tokens vs $3/$15)

- Reasoning Mode: Claude 3.7’s unique Extended Thinking provides visible reasoning chains

- Coding Performance: Claude 3.7 leads with 62.3% vs GPT-4.1’s 54.6% on SWE-Bench Verified

- Release Timing: GPT-4.1 (April 2025) vs Claude 3.7 (February 2025)

Now, let’s explore each aspect in detail to understand how these differences impact real-world performance.

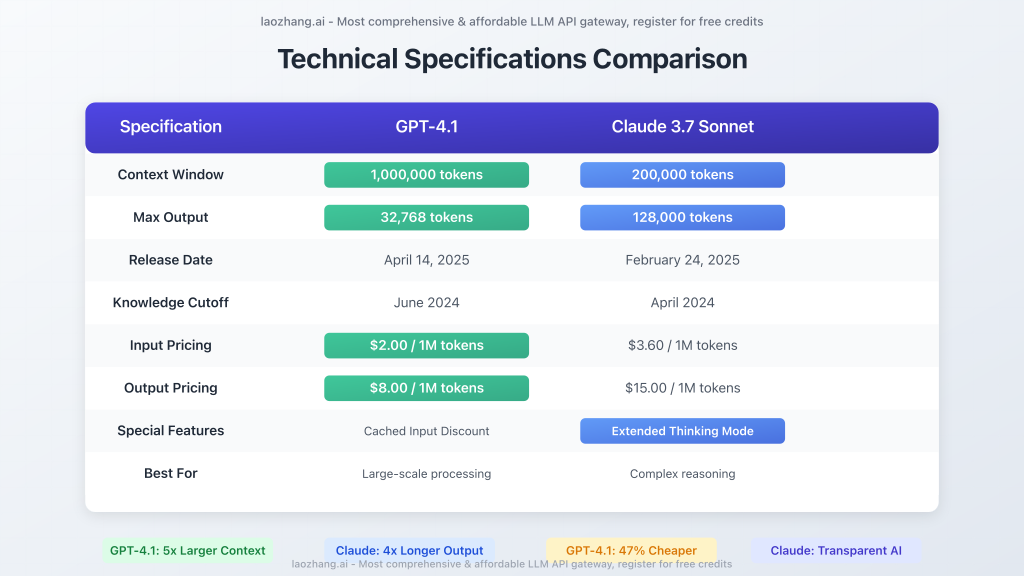

Technical Specifications Deep Dive

Architecture and Core Capabilities

GPT-4.1 represents OpenAI’s continued evolution of the transformer architecture, featuring significant improvements in context handling and computational efficiency. The model’s standout feature is its enormous 1 million token context window – enough to process multiple novels simultaneously or analyze vast datasets in a single session.

Claude 3.7 Sonnet introduces Anthropic’s revolutionary “hybrid reasoning” approach. This model can operate in two distinct modes: standard rapid responses and an innovative Extended Thinking Mode that shows its internal reasoning process. This transparency represents a significant leap forward in AI interpretability.

Context Window Comparison

| Feature | GPT-4.1 | Claude 3.7 Sonnet |

|---|---|---|

| Input Context | 1,000,000 tokens | 200,000 tokens |

| Maximum Output | 32,768 tokens | 128,000 tokens (Extended mode) |

| Practical Use Case | Entire codebases, multiple documents | Complex reasoning with detailed explanations |

The 5x difference in context window capacity gives GPT-4.1 a significant advantage for applications requiring massive document processing, while Claude 3.7’s higher output capacity in Extended Thinking mode excels for detailed analysis and explanations.

Knowledge Cutoff and Training Data

- GPT-4.1: Training data through June 2024, providing more recent knowledge

- Claude 3.7: Training data through April 2024, with focus on reasoning methodology

Performance Benchmarks: The Numbers Tell the Story

Coding Excellence Analysis

In the critical domain of software development, Claude 3.7 Sonnet demonstrates superior performance across multiple coding benchmarks:

- SWE-Bench Verified: Claude 3.7 achieves 62.3% vs GPT-4.1’s 54.6%

- With Extended Thinking: Claude 3.7 reaches 70.3% (with custom scaffold)

- Real-world GitHub PRs: GPT-4.1 delivers more substantial fixes in 55% of cases

The data reveals that while Claude 3.7 excels in standardized coding tests, GPT-4.1 shows strength in practical code review scenarios, delivering more meaningful improvements rather than minor fixes.

Mathematical and Logical Reasoning

Mathematical prowess varies significantly between models:

- AIME 2024 (Math Competition): GPT-4.1 scores 48.1% vs Claude 3.7’s standard performance

- MATH 500 with Extended Thinking: Claude 3.7 soars to 96.2% accuracy

- GPQA Diamond (Graduate Physics): Claude 3.7 jumps from 68% to 84.8% with Extended Thinking

Claude 3.7’s Extended Thinking Mode provides a dramatic performance boost in complex reasoning tasks, while GPT-4.1 maintains consistent performance across different problem types.

Instruction Following and Formatting

Both models excel at following detailed instructions, with subtle differences:

- IFEval Benchmark: Claude 3.7 achieves 90.8% vs GPT-4.1’s 87.4%

- MultiChallenge Improvement: GPT-4.1 shows 10.5% improvement over GPT-4o

- Unnecessary Refusals: Claude 3.7 reduces unnecessary refusals by 45%

The Extended Thinking Revolution: Claude 3.7’s Game-Changer

Claude 3.7 Sonnet’s most innovative feature is its Extended Thinking Mode, which fundamentally changes how users interact with AI reasoning:

How Extended Thinking Works

- Visible Reasoning: Users can observe the model’s step-by-step thought process

- Token Budget Control: API users can set thinking budgets up to 64,000 tokens

- Dramatic Performance Gains: 16-point improvements on GPQA, 14-point gains on MATH 500

Practical Applications of Extended Thinking

- Educational Use: Students can learn by following AI reasoning patterns

- Quality Assurance: Developers can verify AI logic before implementation

- Complex Problem Solving: Multi-step problems benefit from transparent reasoning

Cost Analysis: Which Model Delivers Better Value?

Cost considerations play a crucial role in model selection, especially for high-volume applications. Here’s a comprehensive breakdown of pricing structures and value propositions:

| Model | Input (per 1M tokens) | Output (per 1M tokens) | Cost Advantage |

|---|---|---|---|

| GPT-4.1 | $2.00 | $8.00 | 44% cheaper input, 47% cheaper output |

| Claude 3.7 Sonnet | $3.60 | $15.00 | Higher cost but includes thinking tokens |

Cost Efficiency Analysis

GPT-4.1 Economic Advantages:

- 75% discount for cached inputs (repeat queries)

- Lower base pricing across all token types

- More cost-effective for high-volume applications

- Better value for routine coding tasks

Claude 3.7 Value Proposition:

- Thinking tokens included in output pricing

- Superior quality often reduces iteration costs

- Longer output capacity reduces API calls

- Better first-attempt success rates

Expert Insight: “For enterprise deployments, GPT-4.1’s lower costs can translate to tens of thousands in monthly savings, but Claude’s higher success rate often reduces total project costs through fewer iterations.” – Ryan T. Murphy, Sales Operations Manager

Real-World Use Cases: Which Model Excels Where

Optimal Scenarios for Claude 3.7 Sonnet

1. Complex Coding Challenges

Claude excels when tackling sophisticated programming problems requiring first-principles thinking. Cursor IDE noted Claude is “once again best-in-class for real-world coding tasks” with significant improvements in handling complex codebases and advanced tool use.

2. Long-Form Content Generation

With its 128k token output limit, Claude is ideal for generating comprehensive documentation, detailed reports, or extensive codebase implementations that exceed GPT-4.1’s 32k output limit.

3. Educational and Learning Scenarios

Extended Thinking Mode provides transparent step-by-step reasoning, making Claude invaluable for educational applications where understanding the thought process is crucial.

4. Frontend Development Excellence

Vercel highlighted Claude’s “exceptional precision for complex agent workflows,” while Canva found it consistently produced “production-ready code with superior design taste and drastically reduced errors.”

Optimal Scenarios for GPT-4.1

1. Large-Scale Document Analysis

The 1-million token context window enables processing of massive datasets, entire codebases, or comprehensive research collections in single sessions.

2. Code Review and Debugging

GPT-4.1’s superior performance in identifying critical bugs while minimizing false positives makes it ideal for production code review workflows.

3. High-Volume Enterprise Applications

Lower costs and improved API reliability make GPT-4.1 suitable for large-scale deployments where budget constraints are primary concerns.

4. Fact-Sensitive Applications

Improved factual accuracy and reduced hallucination rates make GPT-4.1 better for applications requiring high precision and reliability.

Integration and Accessibility

LaoZhang.AI: Unified API Access

🚀 Access Both Models with LaoZhang.AI

Get the most comprehensive and affordable large model API gateway with instant access to GPT-4.1, Claude 3.7, and other leading AI models. Register now and receive free credits!

Registration: https://api.laozhang.ai/register/?aff_code=JnIT

WeChat Support: ghj930213

Through LaoZhang.AI’s unified API gateway, you can seamlessly switch between models based on specific task requirements:

curl -X POST "https://api.laozhang.ai/v1/chat/completions" \

-H "Content-Type: application/json" \

-H "Authorization: Bearer $API_KEY" \

-d '{

"model": "gpt-4.1", // Switch to "claude-3-7-sonnet" as needed

"stream": false,

"messages": [

{"role": "system", "content": "You are a helpful coding assistant."},

{"role": "user", "content": "Analyze this code for potential security vulnerabilities and suggest improvements."}

]

}'Expert Recommendations: Decision Framework

Choose Claude 3.7 Sonnet When:

- Code Quality is Paramount: Superior performance on complex coding challenges

- Transparency Matters: Extended Thinking Mode provides valuable insights

- Long-Form Output Required: 4x larger output capacity than GPT-4.1

- Frontend Development Focus: Exceptional UI/UX implementation capabilities

- Educational Applications: Step-by-step reasoning enhances learning

Choose GPT-4.1 When:

- Budget Constraints Exist: 47% lower costs for high-volume usage

- Large Context Processing: Need to analyze massive documents or codebases

- Code Review Focus: Superior bug detection and practical feedback

- API Integration Priority: Better function calling and JSON mode support

- Factual Accuracy Critical: Lower hallucination rates for reliable applications

Frequently Asked Questions

Q: Which model is better for everyday development tasks?

A: For routine coding tasks like CRUD operations, UI components, and small APIs, GPT-4.1 offers better value due to its lower costs and faster response times. Claude 3.7 excels when tackling complex architectural decisions or debugging challenging issues.

Q: Can I use both models in the same project?

A: Absolutely! Many teams use GPT-4.1 for rapid prototyping and code review, while leveraging Claude 3.7’s Extended Thinking Mode for complex problem-solving and architectural planning. LaoZhang.AI’s unified API makes switching between models seamless.

Q: How does Extended Thinking Mode impact response time?

A: Extended Thinking Mode can take 30 seconds to several minutes depending on problem complexity, but provides significantly higher accuracy for challenging tasks. Standard mode maintains near-instant response times comparable to other models.

Q: Which model handles larger codebases better?

A: GPT-4.1’s 1-million token context window allows it to process 5x more code than Claude’s 200k limit. However, Claude often demonstrates better understanding and reasoning about the code it can process.

Q: Are there safety differences between the models?

A: Both models implement robust safety measures. Claude 3.7 reduced unnecessary refusals by 45% while maintaining security. Some reports suggest GPT-4.1 may have slightly higher misalignment rates, requiring careful prompt engineering in sensitive applications.

Q: How do licensing and usage terms compare?

A: Both models are available through commercial APIs with similar usage terms. GPT-4.1 offers more flexible pricing with caching discounts, while Claude includes thinking tokens in standard pricing without additional fees.

Future Outlook and Recommendations

The AI landscape continues evolving rapidly, with both OpenAI and Anthropic iterating quickly on their models. Based on current trajectories and announced developments:

Emerging Trends

- Hybrid Reasoning: Claude’s Extended Thinking Mode represents a new paradigm likely to influence future model development

- Context Expansion: GPT-4.1’s massive context window sets new standards for document processing capabilities

- Cost Competition: Pricing pressure benefits users, with both companies reducing costs while improving capabilities

- Specialization: Models increasingly optimize for specific use cases rather than general intelligence

Strategic Recommendations

For Individual Developers: Start with Claude 3.7 for complex coding challenges and GPT-4.1 for routine tasks. Use LaoZhang.AI’s unified access to experiment with both models and find your optimal workflow.

For Enterprise Teams: Implement a hybrid approach using GPT-4.1 for high-volume, cost-sensitive applications and Claude 3.7 for critical problem-solving and architectural decisions. Monitor usage patterns to optimize cost allocation.

For Educational Institutions: Claude 3.7’s Extended Thinking Mode provides invaluable learning opportunities, while GPT-4.1’s large context window enables comprehensive curriculum analysis and development.

Conclusion: The Verdict

The GPT-4.1 vs Claude 3.7 Sonnet comparison reveals two exceptional models with distinct strengths rather than a clear winner. Your choice should align with specific needs, budget constraints, and use case requirements.

Claude 3.7 Sonnet emerges as the superior choice for complex reasoning, coding excellence, and educational applications where transparency and depth matter most. Its Extended Thinking Mode represents a genuine innovation in AI reasoning, providing unprecedented insights into AI decision-making processes.

GPT-4.1 excels in cost-effectiveness, large-scale document processing, and production environments where budget and efficiency are primary concerns. Its massive context window opens possibilities for applications previously impossible with smaller models.

The optimal strategy for most users involves leveraging both models’ strengths through platforms like LaoZhang.AI, which provides seamless access to multiple leading AI models without vendor lock-in. As the AI landscape continues evolving, flexibility in model selection becomes increasingly valuable.

Ready to Experience Both Models?

Start your AI journey with LaoZhang.AI’s comprehensive API gateway. Access GPT-4.1, Claude 3.7 Sonnet, and other leading models with:

- ✅ Free registration credits

- ✅ Unified API for seamless switching

- ✅ Competitive pricing across all models

- ✅ Expert technical support

Contact WeChat: ghj930213

This analysis is based on models and information available as of January 2025. AI capabilities evolve rapidly, and we recommend staying updated with the latest developments from both OpenAI and Anthropic.