The AI landscape has been reshaped by DeepSeek R1, a 671-billion-parameter reasoning model that rivals OpenAI’s o1 while being released as open weights under the MIT license. Unlike proprietary models that require paid subscriptions or per-token API billing, DeepSeek R1 can be accessed for free through several channels. This guide walks through the main legitimate ways to use DeepSeek R1 without spending a single dollar.

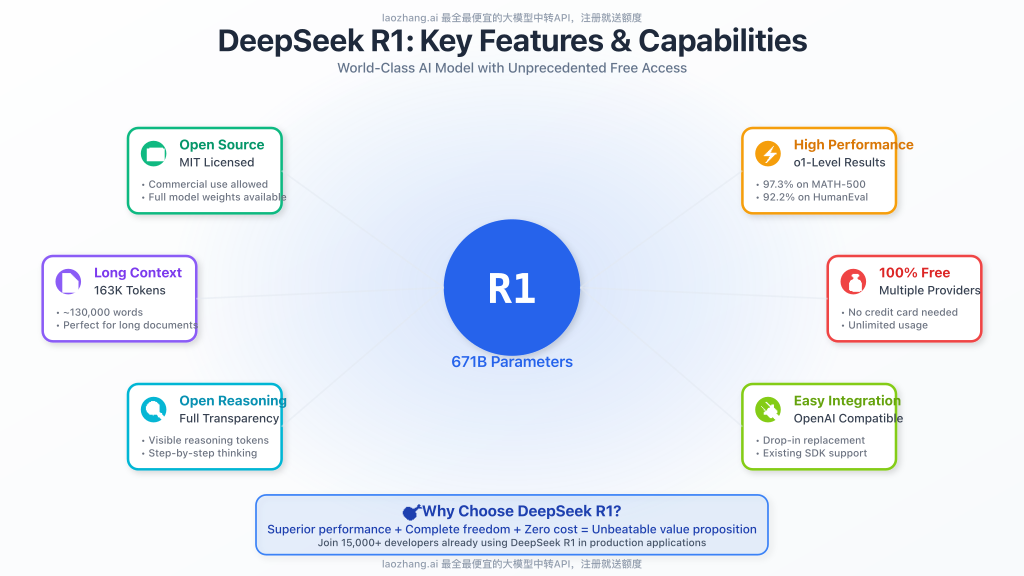

What Makes DeepSeek R1 Special?

DeepSeek R1 represents a paradigm shift in open-source AI development. Here’s what sets it apart from other models:

Core Technical Specifications

- Massive Scale: 671B total parameters in a Mixture-of-Experts (MoE) architecture, with 37B active per token during inference

- Extended Context: 163,840 tokens (approximately 130,000 words)

- MIT License: Complete freedom for commercial use, modification, and distribution

- Open Reasoning: Full transparency in reasoning tokens, unlike proprietary alternatives

- Competitive Performance: Matches or exceeds OpenAI o1 on mathematical reasoning and coding tasks

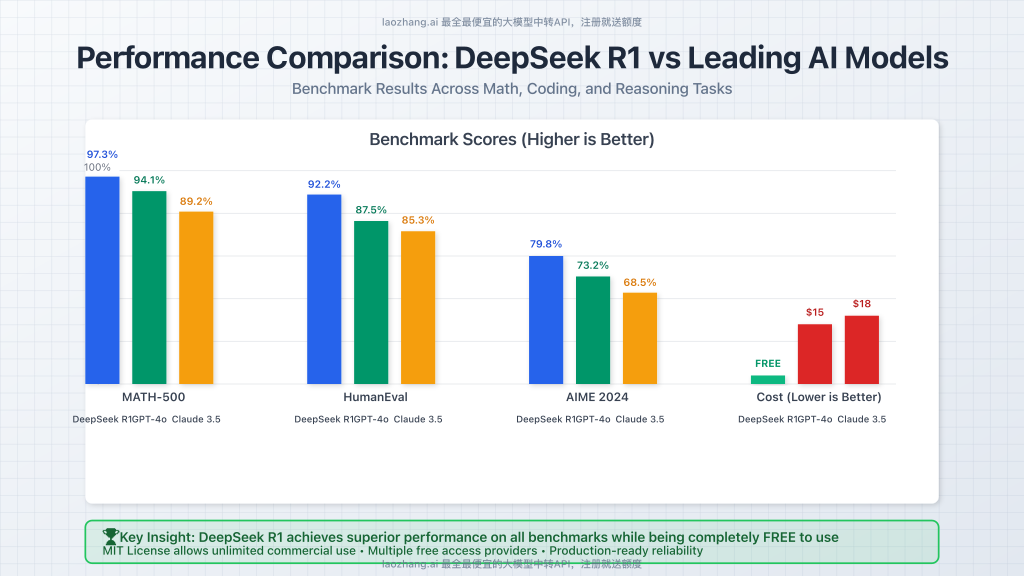

Performance Benchmarks

The figures below are widely cited for DeepSeek R1. The MATH-500 and AIME 2024 results come from DeepSeek’s own technical report rather than independent testing:

- MATH-500: 97.3% pass@1 (versus 74.6% for GPT-4o in the same report)

- AIME 2024: 79.8% pass@1 on competition-level mathematics problems

- HumanEval: 92.2% code generation accuracy

- GSM8K: 98.5% on grade school math problems

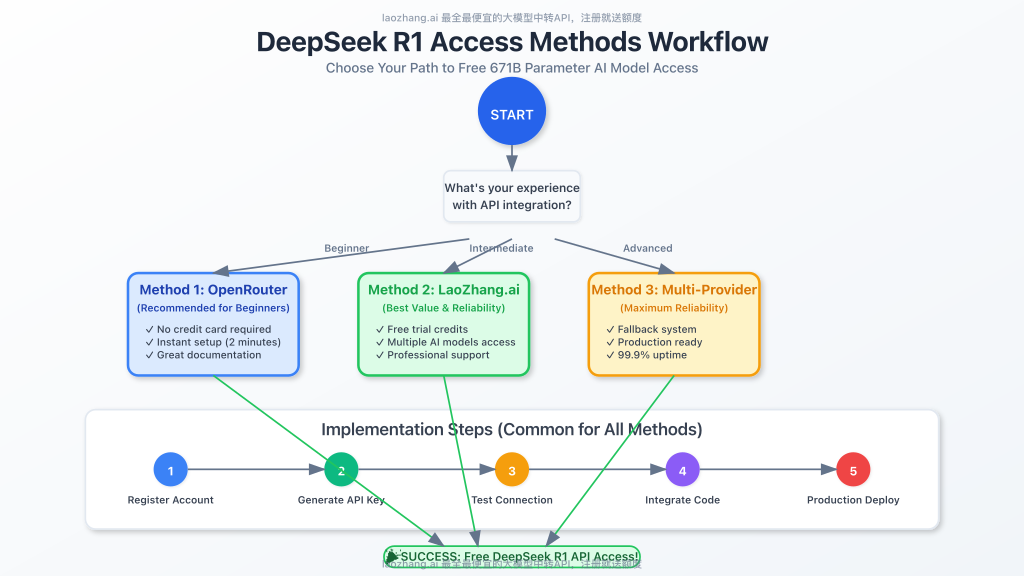

Method 1: OpenRouter Free Tier (Recommended)

OpenRouter provides the most straightforward access to DeepSeek R1 through its free model variant. Free usage carries no per-token charge, but it is subject to per-minute and daily request limits.

Step-by-Step Setup Guide

- Create an OpenRouter Account
  - Visit OpenRouter.ai
  - Sign up using your email or GitHub account
  - No credit card is required for the free tier

- Generate an API Key
  - Navigate to your dashboard
  - Click “API Keys” in the left sidebar
  - Generate a new key and store it securely

- Test API Access
  - Use the model identifier `deepseek/deepseek-r1:free`
  - Set the base URL to `https://openrouter.ai/api/v1`

Python Implementation Example

```python
from openai import OpenAI

# OpenRouter exposes an OpenAI-compatible endpoint, so the official openai SDK works
client = OpenAI(
    base_url="https://openrouter.ai/api/v1",
    api_key="your_openrouter_api_key",  # Replace with your actual key
)

response = client.chat.completions.create(
    extra_headers={
        "HTTP-Referer": "https://your-domain.com",  # Optional: your site URL
        "X-Title": "Your App Name",  # Optional: your app name
    },
    model="deepseek/deepseek-r1:free",
    messages=[
        {
            "role": "user",
            "content": "Solve this step-by-step: Find the derivative of f(x) = x³ × ln(x)",
        }
    ],
)

print(response.choices[0].message.content)
```
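To sanity-check the reply, here is the product rule worked by hand (our own calculation, not model output), which is the result the model should reach:

$$
f(x) = x^3 \ln x
\quad\Longrightarrow\quad
f'(x) = 3x^2 \ln x + x^3 \cdot \frac{1}{x} = 3x^2 \ln x + x^2 = x^2\,(3\ln x + 1)
$$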

JavaScript/Node.js Implementation

```javascript
import OpenAI from 'openai';

const openai = new OpenAI({
  baseURL: 'https://openrouter.ai/api/v1',
  apiKey: 'your_openrouter_api_key', // Replace with your actual key
});

async function callDeepSeekR1() {
  const completion = await openai.chat.completions.create({
    model: 'deepseek/deepseek-r1:free',
    messages: [
      {
        role: 'user',
        content: 'Write a Python function to implement binary search with detailed comments',
      },
    ],
    max_tokens: 2048, // Upper bound on generated tokens
  });

  console.log(completion.choices[0].message.content);
}

callDeepSeekR1();
```

Method 2: LaoZhang.ai API Transit Service

For users seeking enhanced reliability and access to other models, LaoZhang.ai offers an OpenAI-compatible API transit (relay) service with free trial credits on signup and paid pricing for heavier usage.

- Free trial credits upon registration

- Access to GPT-4, Claude 3.5, and Gemini models

- Higher rate limits than standard free tiers

- Professional support and documentation

- Competitive pricing for scaling applications

Getting Started with LaoZhang.ai

- Register for a Free Account
  - Visit: LaoZhang.ai Registration
  - Complete the registration process
  - Receive free credits automatically

- API Integration
  - Generate your API key from the dashboard
  - Use the standard OpenAI-compatible request format

LaoZhang.ai Implementation Example

```bash
curl https://api.laozhang.ai/v1/chat/completions \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "deepseek-r1",
    "stream": false,
    "messages": [
      {"role": "system", "content": "You are a helpful programming assistant."},
      {"role": "user", "content": "Explain the time complexity of quicksort algorithm with examples"}
    ]
  }'
```

Method 3: Nebius AI Studio Integration

Nebius AI Studio provides another pathway to DeepSeek R1, offering $1 in free credits and the option to use your Nebius key through OpenRouter.

Setup Process

- Create a Nebius Account
  - Register at Nebius.ai
  - Receive $1 in automatic free credits
  - Generate your Nebius API key

- Configure with OpenRouter
  - In OpenRouter, search for DeepSeek models
  - Select Nebius as your provider
  - Input your Nebius API key
  - Test the connection

Method 4: Direct DeepSeek API Access

DeepSeek also provides direct API access through its official platform. This route is billed per token, so it is free only to the extent that your account holds a granted or promotional balance.

Official API Setup

```python
from openai import OpenAI

client = OpenAI(
    api_key="your_deepseek_api_key",
    base_url="https://api.deepseek.com",
)

response = client.chat.completions.create(
    model="deepseek-reasoner",  # For R1
    # model="deepseek-chat",  # For V3
    messages=[
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Explain quantum computing in simple terms"},
    ],
    stream=False,
)

# deepseek-reasoner returns its chain of thought separately from the final answer
print(response.choices[0].message.reasoning_content)
print(response.choices[0].message.content)
```
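Not every provider separates the reasoning the way DeepSeek’s own API does. DeepSeek R1 wraps its chain of thought in `<think>...</think>` tags, and we assume some hosts pass those tags through inside `content` (check your provider). The sketch below uses a hypothetical helper, `split_reasoning`, to separate the reasoning from the final answer in that case:

```python
import re

# Matches a leading <think>...</think> block; DOTALL lets "." span newlines
THINK_RE = re.compile(r"^\s*<think>(.*?)</think>\s*", re.DOTALL)


def split_reasoning(content: str) -> tuple[str, str]:
    """Return (reasoning, answer). Hypothetical helper for providers that inline R1's <think> block."""
    match = THINK_RE.match(content)
    if match is None:
        # No inline reasoning block: treat the whole string as the answer
        return "", content.strip()
    return match.group(1).strip(), content[match.end():].strip()


reasoning, answer = split_reasoning("<think>\n2 + 2 is 4.\n</think>\n\nThe answer is 4.")
print(answer)  # The answer is 4.
```

If your provider already returns a separate reasoning field, you can skip this step and read that field directly.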

Advanced Integration Strategies

Building a Multi-Provider Fallback System

For production applications, implementing a robust fallback system ensures maximum uptime:

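```python
import asyncio

from openai import AsyncOpenAI


class DeepSeekR1Client:
    def __init__(self):
        # AsyncOpenAI (not OpenAI) so that create() can be awaited
        self.providers = [
            {
                "name": "OpenRouter",
                "client": AsyncOpenAI(
                    base_url="https://openrouter.ai/api/v1",
                    api_key="your_openrouter_key",
                ),
                "model": "deepseek/deepseek-r1:free",
            },
            {
                "name": "LaoZhang.ai",
                "client": AsyncOpenAI(
                    base_url="https://api.laozhang.ai/v1",
                    api_key="your_laozhang_key",
                ),
                "model": "deepseek-r1",
            },
        ]

    async def generate_response(self, messages, max_retries=3):
        # Try each provider in order, retrying up to max_retries times before falling back
        for provider in self.providers:
            for attempt in range(1, max_retries + 1):
                try:
                    response = await provider["client"].chat.completions.create(
                        model=provider["model"],
                        messages=messages,
                        timeout=30,  # seconds per request
                    )
                    return response.choices[0].message.content
                except Exception as e:
                    print(f"Provider {provider['name']} failed (attempt {attempt}): {e}")
                    if attempt < max_retries:
                        await asyncio.sleep(2 ** (attempt - 1))  # simple exponential backoff
        raise RuntimeError("All providers failed")


# Usage example: top-level await is not allowed in a script, so use asyncio.run
async def main():
    client = DeepSeekR1Client()
    result = await client.generate_response([
        {"role": "user", "content": "Solve this coding problem..."}
    ])
    print(result)


asyncio.run(main())
```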

Optimal Usage Patterns

Best Practices for Different Use Cases

Mathematical Problem Solving

```python
prompt = """
Problem: A particle moves along a curve defined by the parametric equations:
x(t) = 3cos(2t) + 2sin(t)
y(t) = 3sin(2t) - 2cos(t)
Find the velocity vector at t = π/4.

Please solve this step-by-step, showing all derivative calculations and simplifications.
"""
```
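To check the model’s work on this prompt, here is the differentiation done by hand (our own worked solution, not DeepSeek output):

$$
\begin{aligned}
x'(t) &= -6\sin(2t) + 2\cos t, & y'(t) &= 6\cos(2t) + 2\sin t,\\
x'(\pi/4) &= -6\sin(\pi/2) + 2\cos(\pi/4) = \sqrt{2} - 6, & y'(\pi/4) &= 6\cos(\pi/2) + 2\sin(\pi/4) = \sqrt{2},
\end{aligned}
$$

so $\mathbf{v}(\pi/4) = (\sqrt{2} - 6,\ \sqrt{2}) \approx (-4.586,\ 1.414)$.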

Code Generation and Debugging

```python
prompt = """
Create a Python class that implements a thread-safe LRU cache with the following requirements:
1. Maximum capacity configurable at initialization
2. Thread-safe operations using appropriate locking mechanisms
3. O(1) get and put operations
4. Automatic eviction of least recently used items
5. Include comprehensive docstrings and type hints

Please include unit tests demonstrating thread safety.
"""
```
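To judge what the model returns for this prompt, it helps to know the standard approach. The minimal sketch below is our own, not DeepSeek output. It pairs `collections.OrderedDict`, whose `move_to_end` and `popitem(last=False)` run in O(1), with a single `threading.Lock` that guards every access:

```python
import threading
from collections import OrderedDict
from typing import Generic, Hashable, Optional, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LRUCache(Generic[K, V]):
    """Thread-safe LRU cache: OrderedDict keeps recency order, a Lock serializes access."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._capacity = capacity
        self._data: "OrderedDict[K, V]" = OrderedDict()
        self._lock = threading.Lock()

    def get(self, key: K) -> Optional[V]:
        """Return the cached value (or None) and mark the key as most recently used."""
        with self._lock:
            if key not in self._data:
                return None
            self._data.move_to_end(key)  # O(1) recency update
            return self._data[key]

    def put(self, key: K, value: V) -> None:
        """Insert or update a value, evicting the least recently used item when over capacity."""
        with self._lock:
            if key in self._data:
                self._data.move_to_end(key)
            self._data[key] = value
            if len(self._data) > self._capacity:
                self._data.popitem(last=False)  # O(1) eviction of the oldest entry


cache = LRUCache(2)
cache.put("a", 1)
cache.put("b", 2)
cache.get("a")         # touch "a" so "b" becomes least recently used
cache.put("c", 3)      # evicts "b"
print(cache.get("b"))  # None
```

Returning `None` for a miss is a simplification; a model answer that raises `KeyError` or uses a sentinel instead is equally valid.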

Complex Reasoning Tasks

```python
prompt = """
Analyze the following business scenario and provide a detailed strategic recommendation:

Company X is a mid-size e-commerce platform facing increasing competition from both
large marketplaces and specialized niche players. Their current challenges include:
- Declining profit margins due to price competition
- Increasing customer acquisition costs
- Platform infrastructure requiring significant updates
- Limited resources for R&D compared to larger competitors

Provide a comprehensive strategic analysis including:
1. SWOT analysis
2. Three potential strategic options with pros/cons
3. Recommended approach with implementation timeline
4. Key performance indicators to track success

Use structured reasoning and consider both short-term and long-term implications.
"""
```

Rate Limits and Optimization Strategies

Understanding Provider Limitations

| Provider | Rate Limit | Queue Priority | Quota |
|---|---|---|---|
| OpenRouter Free | ~20 requests/minute | Lower | Daily request cap |
| LaoZhang.ai Free | Variable | Standard | Based on credits |
| Nebius Free | ~5 requests/minute | Standard | $1 equivalent |
| DeepSeek Direct | Variable | High | Pay-per-token (any granted balance) |

Optimization Techniques

- Request Batching
  - Combine multiple related queries into single requests
  - Use structured prompts to handle multiple tasks

- Caching Strategies
  - Implement local caching for frequently requested content
  - Use Redis or similar for distributed caching

- Exponential Backoff
  - Handle rate limiting gracefully with exponential backoff
  - Implement queue management for high-volume applications
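The local caching strategy above can be as simple as keying responses on the exact request. The sketch below is an in-memory version with hypothetical names (`ResponseCache`, `get_or_call`). Swapping the dict for Redis would give the distributed variant. Keep in mind that a cache returns the first answer for a repeated prompt rather than a fresh sample.

```python
import hashlib
import json
from typing import Any, Callable


class ResponseCache:
    """In-memory cache keyed on the full request (model, messages, and extra parameters)."""

    def __init__(self) -> None:
        self._store: dict[str, Any] = {}

    @staticmethod
    def make_key(model: str, messages: list, **params: Any) -> str:
        # sort_keys makes the JSON canonical, so equal requests hash identically
        payload = json.dumps(
            {"model": model, "messages": messages, "params": params},
            sort_keys=True,
            ensure_ascii=False,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get_or_call(self, call: Callable[..., Any], model: str, messages: list, **params: Any) -> Any:
        key = self.make_key(model, messages, **params)
        if key not in self._store:
            # Cache miss: forward the request to the real API wrapper
            self._store[key] = call(model=model, messages=messages, **params)
        return self._store[key]
```

In practice, `call` would wrap `client.chat.completions.create(**kwargs)` and return `.choices[0].message.content`, so only the text is stored.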

Real-World Application Examples

Building an AI-Powered Code Review Tool

````python
from openai import OpenAI


class CodeReviewAssistant:
    def __init__(self, api_key, provider="openrouter"):
        # Pick the endpoint and model identifier that match the chosen provider
        use_openrouter = provider == "openrouter"
        self.client = OpenAI(
            base_url=(
                "https://openrouter.ai/api/v1" if use_openrouter
                else "https://api.laozhang.ai/v1"
            ),
            api_key=api_key,
        )
        self.model = "deepseek/deepseek-r1:free" if use_openrouter else "deepseek-r1"

    def review_code(self, code, language="python"):
        prompt = f"""
Please review the following {language} code and provide:
1. **Security Issues**: Identify potential vulnerabilities
2. **Performance Optimizations**: Suggest improvements for speed/memory
3. **Code Quality**: Check for best practices, readability, maintainability
4. **Bug Detection**: Look for logical errors or edge cases
5. **Suggestions**: Provide specific, actionable recommendations

Code to review:
```{language}
{code}
```

Format your response with clear sections and include code examples for suggestions.
"""
        response = self.client.chat.completions.create(
            model=self.model,
            messages=[{"role": "user", "content": prompt}],
            temperature=0.3,
        )
        return response.choices[0].message.content


# Usage example
reviewer = CodeReviewAssistant("your_api_key")
review = reviewer.review_code("""
def fibonacci(n):
    if n <= 1:
        return n
    return fibonacci(n-1) + fibonacci(n-2)
""")
print(review)
````
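The sample function makes a good test input because its main flaw is well known: the naive double recursion recomputes the same subproblems and runs in exponential time. One fix a review should reasonably suggest (our sketch, not model output) is an iterative version that runs in O(n) time and O(1) space:

```python
def fibonacci(n: int) -> int:
    """Return the n-th Fibonacci number iteratively (F(0) = 0, F(1) = 1)."""
    if n < 0:
        raise ValueError("n must be non-negative")
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b  # advance the pair (F(i), F(i+1)) by one step
    return a


print(fibonacci(10))  # 55
```

The explicit check for negative `n` is also an edge case worth expecting in the review, since the original returns a negative number for negative input instead of failing.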

Educational Math Tutor Application

```python
from openai import OpenAI


class MathTutor:
    def __init__(self, api_key):
        self.client = OpenAI(
            base_url="https://api.laozhang.ai/v1",
            api_key=api_key,
        )

    def solve_step_by_step(self, problem, difficulty_level="high_school"):
        prompt = f"""
Act as an expert math tutor. Solve this {difficulty_level} mathematics problem:

{problem}

Requirements:
1. Show every step of your solution process
2. Explain the reasoning behind each step
3. Highlight key concepts and formulas used
4. Provide tips to avoid common mistakes
5. Include a final verification of the answer

Make your explanation clear and educational, suitable for a student learning this topic.
"""
        response = self.client.chat.completions.create(
            model="deepseek-r1",
            messages=[{"role": "user", "content": prompt}],
            temperature=0.2,
        )
        return response.choices[0].message.content


# Example usage
tutor = MathTutor("your_laozhang_api_key")
solution = tutor.solve_step_by_step(
    "Find the area under the curve y = x² + 3x - 2 from x = 0 to x = 4"
)
print(solution)
```
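This example problem is a useful test of the tutor, because “area under the curve” is ambiguous here: f(x) = x² + 3x − 2 is negative on part of [0, 4], since its positive root is (−3 + √17)/2 ≈ 0.56. The sketch below checks both readings by hand in plain Python, not through the model. The function names are our own, and we computed the expected values ourselves: the signed integral is exactly 112/3, while the total geometric area is about 38.52.

```python
import math
from fractions import Fraction


def antiderivative(x):
    """F(x) = x^3/3 + 3x^2/2 - 2x, an antiderivative of f(x) = x^2 + 3x - 2."""
    return x ** 3 / 3 + 3 * x ** 2 / 2 - 2 * x


def net_integral(a, b):
    """Signed integral of f from a to b via the Fundamental Theorem of Calculus."""
    return antiderivative(b) - antiderivative(a)


def geometric_area(a, b):
    """Total area between f and the x-axis on [a, b], counting parts below the axis as positive."""
    # Roots of x^2 + 3x - 2 = 0 from the quadratic formula, kept only if inside (a, b)
    disc = math.sqrt(3 ** 2 - 4 * 1 * (-2))
    roots = sorted(r for r in ((-3 - disc) / 2, (-3 + disc) / 2) if a < r < b)
    points = [a, *roots, b]
    # f keeps one sign on each sub-interval, so sum the absolute net integrals
    return sum(abs(net_integral(lo, hi)) for lo, hi in zip(points, points[1:]))


net = net_integral(Fraction(0), Fraction(4))
area = geometric_area(0, 4)
print(net)              # 112/3
print(round(area, 4))   # 38.5155
```

A good tutor answer should either compute the signed integral and say so, or split the interval at the root as `geometric_area` does.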

Troubleshooting Common Issues

Authentication Problems

Solutions:

- Double-check your API key is correctly copied (no extra spaces)

- Ensure you’re using the right base URL for each provider

- Verify your account has sufficient credits/quota remaining

- Check if your IP address is restricted in your provider settings

Rate Limiting Issues

Solutions:

- Implement exponential backoff with jitter

- Spread requests across multiple providers

- Cache responses to reduce API calls

- Consider upgrading to paid tiers for higher limits
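The first item above, exponential backoff with jitter, fits in a few lines. This sketch uses the “full jitter” variant, which waits a random time between zero and an exponentially growing cap. `RateLimitError` here is a hypothetical stand-in for your client’s HTTP 429 error (with the openai Python SDK you would catch `openai.RateLimitError` instead). The `sleep` parameter is injectable so the function is easy to test.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical placeholder for a provider's HTTP 429 error."""


def call_with_backoff(
    fn: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying on RateLimitError with exponentially growing, jittered waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts - 1):
        try:
            return fn()
        except RateLimitError:
            # Full jitter: wait uniformly in [0, min(max_delay, base_delay * 2**attempt)]
            cap = min(max_delay, base_delay * 2 ** attempt)
            sleep(random.uniform(0, cap))
    return fn()  # final attempt: let any RateLimitError propagate to the caller
```

Randomizing the whole wait, rather than adding a small jitter to a fixed delay, spreads out clients that were throttled at the same moment.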

Model Access Problems

Solutions:

- Verify correct model identifiers:
  - OpenRouter: `deepseek/deepseek-r1:free`
  - LaoZhang.ai: `deepseek-r1`
  - Direct API: `deepseek-reasoner`

- Check provider status pages for maintenance

- Try alternative providers if one is experiencing issues

Performance Optimization Tips

Prompt Engineering for DeepSeek R1

DeepSeek R1 responds well to structured prompts. Here are techniques that work well in practice:

- Step-by-Step Instructions: "Please solve this problem step-by-step: 1. First, identify the key variables and given information 2. Choose the appropriate mathematical approach or algorithm 3. Execute the solution showing all work 4. Verify your answer and explain why it's correct"

- Role-Based Prompting: "Act as a senior software engineer with 10 years of experience in Python. Review this code for production readiness, considering: - Performance implications - Security vulnerabilities - Maintainability concerns - Scalability issues"

- Output Format Specification: "Provide your response in the following format: ## Analysis [Your analysis here] ## Recommendations 1. [First recommendation] 2. [Second recommendation] ## Code Example ```python [Working code example] ```"

Token Usage Optimization

- Concise Prompts: Remove unnecessary words while maintaining clarity

- Context Management: Include only relevant context for each request

- Response Length Limits: Set appropriate max_tokens to control costs

- Streaming Responses: Use streaming for real-time applications

Comparison: Free vs. Paid Tiers

| Feature | Free Tier | Paid Tier | Recommendation |
|---|---|---|---|
| Model Capability | Full DeepSeek R1 | Full DeepSeek R1 | Free sufficient for most use cases |
| Response Quality | Identical | Identical | No quality difference |
| Rate Limits | 10-20 req/min | 100+ req/min | Paid for production apps |
| Queue Priority | Lower | Higher | Paid for time-sensitive tasks |
| Support | Community | Priority | Paid for business use |

Future Developments and Updates

The DeepSeek R1 ecosystem continues to evolve rapidly. Here’s what to expect:

Recent and Upcoming Developments

- DeepSeek R1-0528: An updated R1 release (May 2025) with enhanced reasoning and improved mathematical performance

- Distilled Models: Smaller 1.5B-70B parameter versions based on Qwen and Llama, released alongside R1, that retain much of its reasoning ability

- Multimodal Capabilities: Integration of vision and potentially audio processing

- Fine-tuning Support: Simplified tools for domain-specific adaptations

Community Developments

- Third-party Integrations: Growing ecosystem of tools and frameworks

- Specialized Datasets: Community-contributed training data for specific domains

- Performance Optimizations: Hardware-specific acceleration improvements

Frequently Asked Questions

Is DeepSeek R1 really free to use?

Yes, DeepSeek R1 can be accessed without charge through several channels, including OpenRouter’s free tier, LaoZhang.ai’s trial credits, and Nebius’s starter credits. The MIT license also allows commercial use of the model weights without licensing fees, although hosted APIs remain subject to each provider’s terms and limits.

How does DeepSeek R1 compare to GPT-4 and Claude?

DeepSeek R1 matches or exceeds GPT-4’s performance on mathematical reasoning and coding tasks. In standardized benchmarks, it often outperforms both GPT-4o and Claude 3.5 Sonnet on complex problem-solving scenarios while being completely free to use.

Are there any usage restrictions for the free version?

Free tier restrictions vary by provider but typically include lower rate limits (10-20 requests per minute) and lower queue priority during peak times. However, the model capabilities and response quality remain identical to paid tiers.

Can I use DeepSeek R1 in commercial applications?

Yes. DeepSeek R1’s code and model weights are released under the MIT license, which explicitly permits commercial use, modification, and distribution. If you call the model through a hosted API, that provider’s terms of service also apply.

Which free access method is most reliable?

For maximum reliability, we recommend using multiple providers with a fallback system. LaoZhang.ai offers the most generous free tier with professional support, while OpenRouter provides the most stable free access for development purposes.

How do I handle rate limiting in production applications?

Implement exponential backoff, use multiple API keys across different providers, implement response caching, and consider upgrading to paid tiers for mission-critical applications requiring consistent throughput.

Conclusion: Democratizing Advanced AI

DeepSeek R1 represents a watershed moment in AI accessibility. By providing free access to a model that rivals the most expensive proprietary alternatives, it democratizes advanced AI capabilities for developers, researchers, and businesses worldwide.

Whether you’re building educational tools, developing enterprise applications, or conducting research, the methods outlined in this guide provide multiple pathways to leverage DeepSeek R1’s 671-billion-parameter capabilities without financial barriers. To get started:

- Choose your preferred access method from this guide

- Set up your API credentials following our step-by-step instructions

- Start with simple test requests to familiarize yourself with the model

- Gradually integrate DeepSeek R1 into your specific use cases

- Consider LaoZhang.ai for enhanced reliability and additional model access

The future of AI is open-source, accessible, and powerful. DeepSeek R1 proves that cutting-edge AI capabilities need not be locked behind expensive paywalls. Start your journey with DeepSeek R1 today and experience the next generation of reasoning-capable AI models.

🎯 Take Action Now

Free tiers and promotional credits for models like DeepSeek R1 can change without notice, so it is worth getting started while they are available:

- Quick Start: Create your OpenRouter account and start testing within minutes

- Enhanced Access: Register with LaoZhang.ai for premium features and reliability

- Production Ready: Implement the multi-provider fallback system for robust applications