Understanding ChatGPT Plus token limits is essential for getting the most out of OpenAI’s premium service. This guide breaks down the context windows and message caps for each available model, how those caps reset, and practical strategies to optimize your token usage in 2025.

Quick Answer: ChatGPT Plus Token Limits in 2025

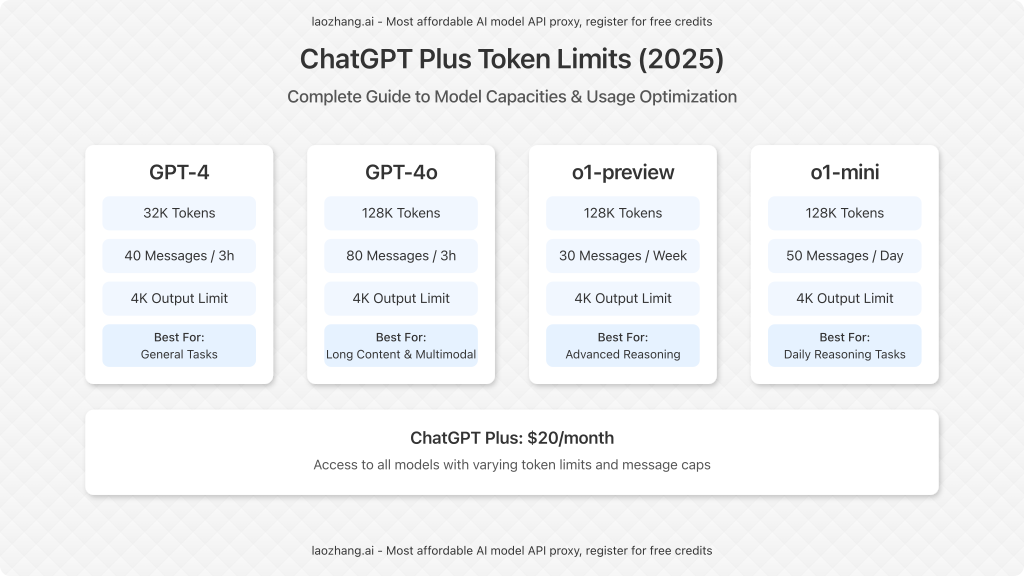

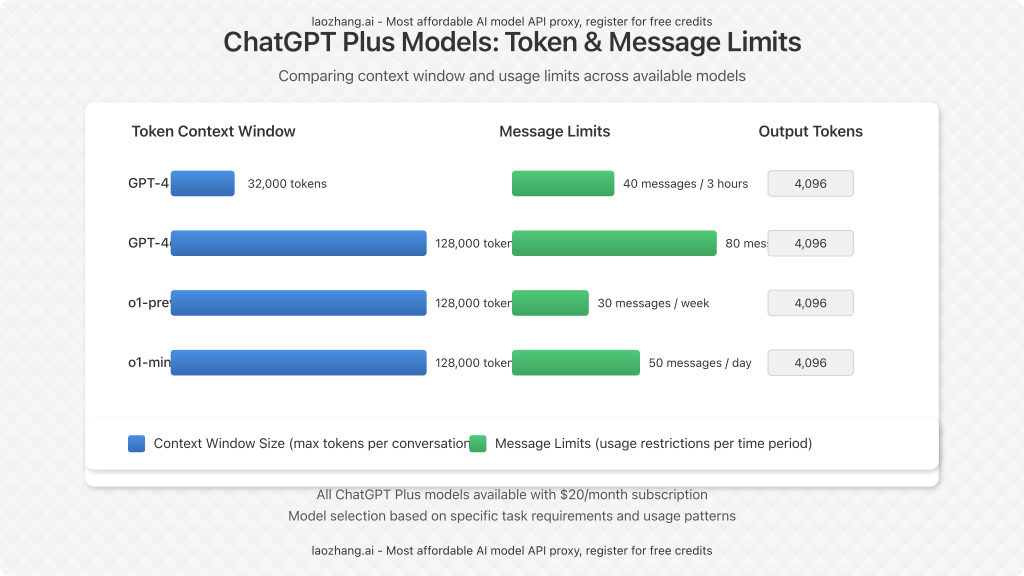

The ChatGPT Plus subscription ($20/month) provides access to multiple models, each with its own context window and message limit:

- GPT-4: 32,000 token context window, 40 messages every 3 hours

- GPT-4o: 128,000 token context window, 80 messages every 3 hours

- o1-preview: 128,000 token context window, 30 messages per week

- o1-mini: 128,000 token context window, 50 messages per day

All models are limited to a maximum of 4,096 output tokens per response.

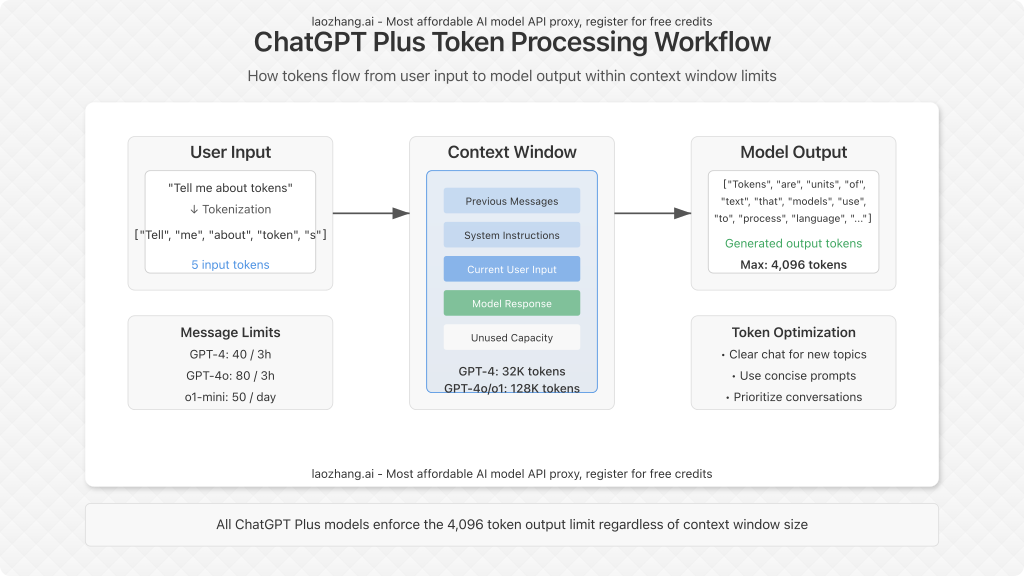

Understanding Token Limits vs. Message Limits

ChatGPT Plus imposes two distinct types of limits that affect your usage: token limits and message limits. Understanding the difference is crucial:

Token Limits

Tokens are the basic units of text processing in language models. A token can be as short as a single character or as long as a word. For English text, one token is roughly 0.75 words. Three terms matter here:

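- Context window: The maximum number of tokens the model can work with at once, counting both input (including earlier messages in the conversation) and output. When a conversation grows beyond it, the earliest content falls out of the model’s view.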

- Input tokens: The text you send to the model

- Output tokens: The text the model generates in response
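Because input and output share the context window, a long paste leaves less room for the reply. The sketch below does that arithmetic with the context windows and the 4,096-token output cap quoted in this guide. The dictionary and function names are hypothetical, and it assumes you reserve the full output cap for every reply.

```python
# Context windows and per-response output cap as stated in this guide (assumed figures).
CONTEXT_WINDOW = {
    "gpt-4": 32_000,
    "gpt-4o": 128_000,
    "o1-preview": 128_000,
    "o1-mini": 128_000,
}
MAX_OUTPUT = 4_096


def input_budget(model: str, reserved_output: int = MAX_OUTPUT) -> int:
    """Tokens left for input (prompt plus earlier conversation) after reserving room for the reply."""
    return CONTEXT_WINDOW[model] - reserved_output


def fits(model: str, input_tokens: int) -> bool:
    """True if the input still leaves room for a full-length response."""
    return input_tokens <= input_budget(model)


print(input_budget("gpt-4o"))  # 123904
print(input_budget("gpt-4"))   # 27904
print(fits("gpt-4", 30_000))   # False
```

In other words, a 30,000-token document technically fits inside GPT-4’s 32,000-token window, but it would not leave room for a full 4,096-token answer.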

Message Limits

Message limits restrict how frequently you can interact with each model:

- These are time-based restrictions measured in messages per period (a rolling 3-hour window, a day, or a week)

- Reaching a model’s limit temporarily blocks access to that specific model until its allowance resets

- Different models have different message allowances

Detailed ChatGPT Plus Model Token Limits

GPT-4 Token Limits

GPT-4 is OpenAI’s advanced model that balances performance and accessibility:

- Context window: 32,000 tokens (approximately 24,000 words)

- Maximum output: 4,096 tokens per response

- Message limit: 40 messages every 3 hours

- Best for: Complex reasoning, coding tasks, and detailed analysis

GPT-4o Token Limits

GPT-4o (“o” for “omni”) is OpenAI’s more capable multimodal model:

- Context window: 128,000 tokens (approximately 96,000 words)

- Maximum output: 4,096 tokens per response

- Message limit: 80 messages every 3 hours

- Best for: Long-form content analysis, research, and multimodal tasks

o1-preview Token Limits

o1-preview is OpenAI’s cutting-edge reasoning model:

- Context window: 128,000 tokens (approximately 96,000 words)

- Maximum output: 4,096 tokens per response

- Message limit: 30 messages per week

- Best for: Advanced reasoning, planning, and creative problem-solving

o1-mini Token Limits

o1-mini is a smaller, faster, and cheaper version of the o1 reasoning model:

- Context window: 128,000 tokens (approximately 96,000 words)

- Maximum output: 4,096 tokens per response

- Message limit: 50 messages per day

- Best for: Lighter reasoning tasks with more frequent usage needs

How Message Limits Reset

Understanding when your message limits reset is crucial for planning your ChatGPT Plus usage:

- GPT-4 & GPT-4o: Rolling 3-hour window (not fixed time periods)

- o1-preview: Weekly reset (fixed 7-day cycles from your first use)

- o1-mini: Daily reset (24-hour cycles from your first use)
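Fixed cycles work differently from a rolling window. A single timer starts with the first message, and the whole allowance comes back when that timer runs out. Below is a minimal sketch of that behavior. It assumes a new cycle begins with the first message sent after the previous cycle ended, because OpenAI does not document exactly how it tracks this. The class name is hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional


class FixedCycleLimit:
    """Hypothetical model of a cap that resets a fixed period after the first message of a cycle."""

    def __init__(self, cap: int, period: timedelta):
        self.cap = cap
        self.period = period
        self.cycle_start: Optional[datetime] = None  # set by the first message of each cycle
        self.used = 0

    def try_send(self, now: datetime) -> bool:
        # Begin a new cycle if none is active or the current one has run its full period.
        if self.cycle_start is None or now >= self.cycle_start + self.period:
            self.cycle_start = now
            self.used = 0
        if self.used >= self.cap:
            return False  # cap reached; wait for the cycle to end
        self.used += 1
        return True

    def resets_at(self) -> Optional[datetime]:
        return None if self.cycle_start is None else self.cycle_start + self.period


# o1-preview as described above: 30 messages per 7-day cycle.
weekly = FixedCycleLimit(cap=30, period=timedelta(days=7))
start = datetime(2025, 1, 6, 9, 0)
results = [weekly.try_send(start + timedelta(hours=i)) for i in range(31)]
print(results.count(True))  # 30 (the 31st message is refused)
print(weekly.resets_at())   # 2025-01-13 09:00:00
```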

For GPT-4 and GPT-4o, the 3-hour window rolls continuously. Each message counts against your limit for three hours after you send it. For example, if you send 10 messages at 1:00 PM, those 10 stop counting at 4:00 PM, and that frees 10 slots no matter what you sent in between.
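A rolling window can be modeled by keeping the send time of every message and discarding entries once they are three hours old. The sketch below is an illustration of that technique and is not OpenAI’s implementation. The class name and the example timestamps are hypothetical.

```python
from collections import deque
from datetime import datetime, timedelta


class RollingWindowLimit:
    """Sketch of a rolling cap: at most `cap` messages in any trailing `window`."""

    def __init__(self, cap: int, window: timedelta):
        self.cap = cap
        self.window = window
        self.sent = deque()  # send times still inside the window, oldest first

    def _expire(self, now: datetime) -> None:
        # A message stops counting once it is `window` old.
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()

    def remaining(self, now: datetime) -> int:
        self._expire(now)
        return self.cap - len(self.sent)

    def try_send(self, now: datetime) -> bool:
        # Calls are assumed to arrive in chronological order.
        if self.remaining(now) <= 0:
            return False
        self.sent.append(now)
        return True


# GPT-4o as described above: 80 messages per rolling 3 hours.
gpt4o = RollingWindowLimit(cap=80, window=timedelta(hours=3))
day = datetime(2025, 1, 6)
for _ in range(10):
    gpt4o.try_send(day.replace(hour=13))  # 10 messages at 1:00 PM
for _ in range(70):
    gpt4o.try_send(day.replace(hour=14))  # 70 messages at 2:00 PM
print(gpt4o.remaining(day.replace(hour=15, minute=59)))  # 0
print(gpt4o.remaining(day.replace(hour=16)))             # 10: the 1:00 PM messages expired
```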

Token Limit Testing Results

Some users report using up to 131,029 tokens in a single GPT-4o conversation after recent updates. That is slightly more than the official 128,000-token context window, and our testing is consistent with these reports. This small buffer is not officially documented, so don’t rely on it.

All models consistently enforce the 4,096 token limit for individual responses, regardless of the size of the context window. Even with a massive 128K context window, no single response will exceed this output limit.
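Because of the output cap, a long deliverable has to be produced over several responses. The arithmetic is a simple ceiling division, shown here with the 4,096-token cap from this guide. The function name is hypothetical.

```python
import math

MAX_OUTPUT = 4_096  # per-response output cap reported in this guide


def responses_needed(target_output_tokens: int, max_output: int = MAX_OUTPUT) -> int:
    """Minimum number of responses needed to produce `target_output_tokens` of generated text."""
    return math.ceil(target_output_tokens / max_output)


# A ~9,000-word report is about 12,000 tokens at ~0.75 words per token.
print(responses_needed(12_000))  # 3
print(responses_needed(4_096))   # 1
```

Every follow-up “continue” prompt is another message, so a three-part answer also uses three messages from that model’s cap.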

Professional Strategies to Maximize Token Usage

1. Use Efficient Prompting Techniques

Optimize your token usage with these techniques:

- Write concise, specific instructions

- Use numbered lists for multi-part queries

- Structure complex requests with clear headings and bullet points

- Include examples of desired output format
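The techniques above can be combined into a reusable template that puts a multi-part request into a single message. The helper below is hypothetical and is not a ChatGPT feature. It only assembles text that you would then paste into the chat.

```python
def build_prompt(task: str, questions: list[str], output_example: str) -> str:
    """Assemble one structured message: a task line, numbered sub-questions, and a sample output."""
    lines = ["Task:", task, "", "Questions:"]
    lines += [f"{i}. {q}" for i, q in enumerate(questions, start=1)]
    lines += ["", "Format each answer like this example:", output_example]
    return "\n".join(lines)


prompt = build_prompt(
    task="Review the attached product spec.",
    questions=["List the three biggest risks.", "Suggest one mitigation for each risk."],
    output_example="- Risk: ... | Mitigation: ...",
)
print(prompt)
```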

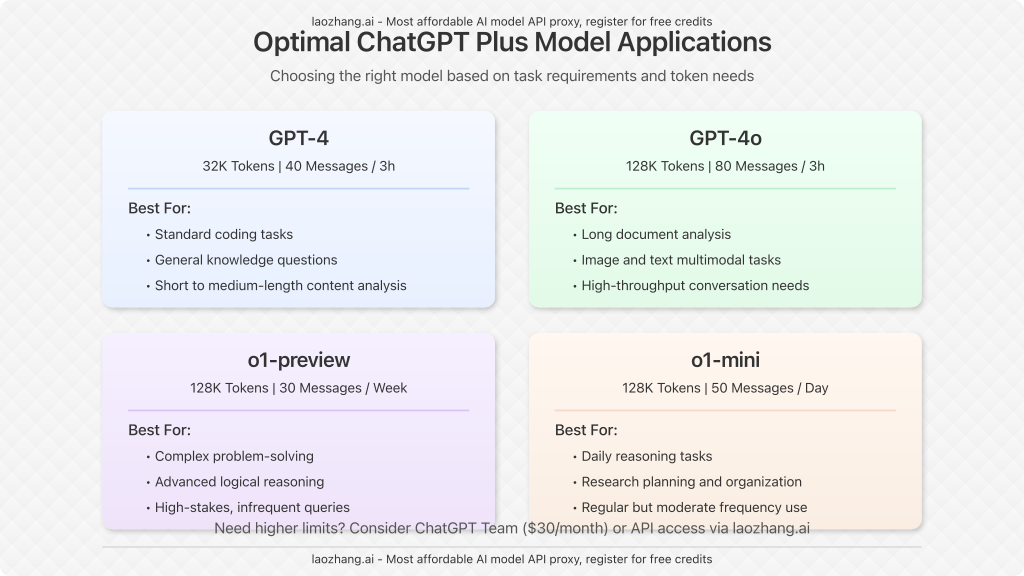

2. Strategic Model Selection

Choose the right model for each task:

- Use GPT-4o for most general tasks and when you need higher message limits

- Reserve o1-preview for tasks requiring sophisticated reasoning

- Consider o1-mini for daily reasoning tasks with lower complexity

- Keep GPT-4 as an overflow option once you reach your GPT-4o cap, since each model has its own message allowance
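Model selection can be framed as a preference list for each kind of task, where you fall through to the next model whenever one is out of messages. The sketch below shows one possible ordering. The task categories, the ordering, and the remaining-message counts are all hypothetical, and you would need to track those counts yourself.

```python
from typing import Optional

# One possible preference order per task type (hypothetical categories).
PREFERENCES = {
    "deep_reasoning": ["o1-preview", "o1-mini", "gpt-4o"],
    "light_reasoning": ["o1-mini", "gpt-4o", "gpt-4"],
    "general": ["gpt-4o", "gpt-4"],
}


def pick_model(task_type: str, remaining: dict[str, int]) -> Optional[str]:
    """Return the first preferred model with messages left, or None if all are used up."""
    for model in PREFERENCES[task_type]:
        if remaining.get(model, 0) > 0:
            return model
    return None


# Hypothetical messages left in each model's current window.
remaining = {"o1-preview": 0, "o1-mini": 12, "gpt-4o": 35, "gpt-4": 40}
print(pick_model("deep_reasoning", remaining))  # o1-mini (o1-preview is used up)
print(pick_model("general", remaining))         # gpt-4o
```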

3. Conversation Management

Manage your conversations effectively:

- Start new conversations for unrelated topics to avoid context bloat

- Summarize and restart long conversations before approaching token limits

- Use “custom instructions” to avoid repeating the same background context in every conversation

- Download chat history before clearing to preserve valuable information
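The “summarize and restart” advice needs a trigger point. The minimal sketch below assumes you keep rough per-message token estimates (for example, word count divided by 0.75) and that you restart once a conversation reaches 80% of the context window. The 80% threshold is an arbitrary choice and is not an OpenAI recommendation. The function name is hypothetical.

```python
def should_restart(message_tokens: list[int], context_window: int, threshold: float = 0.8) -> bool:
    """Flag a conversation for summarize-and-restart once it fills `threshold` of the context window."""
    return sum(message_tokens) >= threshold * context_window


history = [1_200, 3_500, 900, 22_000]   # hypothetical per-message token estimates (27,600 total)
print(should_restart(history, 32_000))   # True: past 80% of GPT-4's 32,000 tokens
print(should_restart(history, 128_000))  # False: well under 80% of 128,000
```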

ChatGPT Plus vs. API Token Economics

Understanding how ChatGPT Plus token limits compare to API pricing can help determine the most cost-effective option for your needs:

ChatGPT Plus ($20/month)

- Fixed monthly price with no per-token charges, regardless of volume

- Limited by message caps (e.g., 80 messages/3 hours for GPT-4o)

- Best for: Varied, intermittent usage across different models

API Pricing (Pay-per-token)

- GPT-4o: $5/million input tokens, $15/million output tokens at launch (the gpt-4o-2024-08-06 snapshot cut this to $2.50 and $10)

- o1-mini: $3/million input tokens, $12/million output tokens at launch

- o1-preview: $15/million input tokens, $60/million output tokens

- No message limits, but rate limits apply (e.g., 500 RPM for GPT-4o at Tier 1)

- Best for: High-volume, automated, or application-based usage
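To compare the two options, multiply your expected monthly token volume by the per-million prices and compare the result with the $20 subscription. The sketch below uses the GPT-4o prices listed above ($5 input, $15 output per million) as defaults. The token volumes are made-up examples, and actual bills depend on current rates.

```python
PLUS_MONTHLY_USD = 20.00


def api_monthly_cost(input_tokens: int, output_tokens: int,
                     usd_per_m_input: float = 5.00, usd_per_m_output: float = 15.00) -> float:
    """API cost in USD for one month's token volume, given per-million-token prices."""
    return (input_tokens * usd_per_m_input + output_tokens * usd_per_m_output) / 1_000_000


for monthly_in, monthly_out in [(1_000_000, 500_000), (3_000_000, 1_000_000)]:
    cost = api_monthly_cost(monthly_in, monthly_out)
    cheaper = "API" if cost < PLUS_MONTHLY_USD else "Plus"
    print(f"{monthly_in:,} in / {monthly_out:,} out: ${cost:.2f} -> {cheaper} is cheaper")
# 1,000,000 in / 500,000 out: $12.50 -> API is cheaper
# 3,000,000 in / 1,000,000 out: $30.00 -> Plus is cheaper
```

This compares cost only. It ignores the Plus message caps and the API rate limits, either of which may matter more for your workload.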

Common Questions About ChatGPT Plus Token Limits

How can I check my current token usage?

ChatGPT Plus does not provide a built-in token counter. For approximation, use the general rule that 1 token ≈ 0.75 words in English. More precisely, you can use the OpenAI Tokenizer tool, which now supports GPT-4o’s tokenizer.
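For a quick estimate without leaving your editor, the rules of thumb can be applied in code. The sketch below uses the 0.75-words-per-token rule from this guide and OpenAI’s other commonly cited rule of about 4 characters per token. Both are rough and apply to English text only. If you need exact counts in code, OpenAI’s open-source tiktoken library exposes GPT-4o’s `o200k_base` encoding. This sketch does not use it, so it runs without extra dependencies. The function name is hypothetical.

```python
import math


def estimate_tokens(text: str, method: str = "words") -> int:
    """Rough token estimate for English text using common rules of thumb."""
    if method == "words":
        return math.ceil(len(text.split()) / 0.75)  # ~0.75 words per token
    if method == "chars":
        return math.ceil(len(text) / 4)             # ~4 characters per token
    raise ValueError("method must be 'words' or 'chars'")


sample = "Understanding token limits helps you plan long conversations."
print(estimate_tokens(sample))           # 11
print(estimate_tokens(sample, "chars"))  # 16
```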

Do images count toward token limits?

Yes. Images consume tokens based on their size (and, in the API, the requested detail level), not on what they depict. A typical medium-resolution image might use 500-1,000 tokens, while larger, high-resolution images use more because they are processed as more tiles.
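For the API, OpenAI publishes how GPT-4o converts images to tokens. At low detail, every image costs a flat 85 tokens. At high detail, the image is first scaled to fit within 2048 x 2048. It is then scaled so its shortest side is 768 px, and it costs 85 tokens plus 170 for each 512-px tile. ChatGPT does not publish its own accounting, so treat the sketch below as an approximation. It also assumes images are only ever scaled down, never up.

```python
import math


def image_tokens(width: int, height: int, detail: str = "high") -> int:
    """Approximate GPT-4o image cost in tokens, following OpenAI's published API formula."""
    if detail == "low":
        return 85  # flat cost regardless of size
    # 1. Scale down (never up) to fit inside a 2048 x 2048 square.
    scale = min(1.0, 2048 / max(width, height))
    w, h = width * scale, height * scale
    # 2. Scale so the shortest side is 768 px (assumed: downscale only).
    scale = min(1.0, 768 / min(w, h))
    w, h = w * scale, h * scale
    # 3. Charge 170 tokens per 512 x 512 tile, plus a base of 85.
    tiles = math.ceil(w / 512) * math.ceil(h / 512)
    return 85 + 170 * tiles


print(image_tokens(1024, 1024))         # 765
print(image_tokens(2048, 4096))         # 1105
print(image_tokens(4000, 3000, "low"))  # 85
```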

Can I increase my ChatGPT Plus token limits?

Within ChatGPT, you can raise your limits by upgrading to one of three plans. ChatGPT Team ($30/user/month) doubles your GPT-4o message allowance to 160 messages per 3 hours. ChatGPT Pro ($200/month) is advertised by OpenAI as unlimited access to o1, o1-mini, and GPT-4o, subject to its usage policies. ChatGPT Enterprise (custom pricing) provides significantly higher limits.

Do unused message allowances roll over?

No. Message allowances do not accumulate or roll over. If you don’t use your 80 GPT-4o messages in a 3-hour period, they don’t add to your next period’s allowance.

How can I avoid hitting message limits?

Distribute your work across multiple models, plan usage during different time windows, and combine related queries into single, well-structured messages rather than multiple small ones.

Can I use multiple ChatGPT Plus accounts to bypass limits?

While technically possible, this violates OpenAI’s terms of service. Better options include upgrading to Team/Enterprise plans or utilizing the API for high-volume needs.

Conclusion

ChatGPT Plus token limits represent a balance between accessibility and computational resources. By understanding the specific limits of each model and implementing the strategies outlined in this guide, you can maximize the value of your subscription and complete more work within the platform’s constraints.

For users requiring higher limits, consider upgrading to ChatGPT Team, Enterprise, or exploring API options through cost-effective providers like LaoZhang-AI, which offers unified access to all major LLM APIs including GPT models at competitive rates.
