ChatGPT Plus Limits: Complete Guide to Message, Token & Feature Quotas [2025]

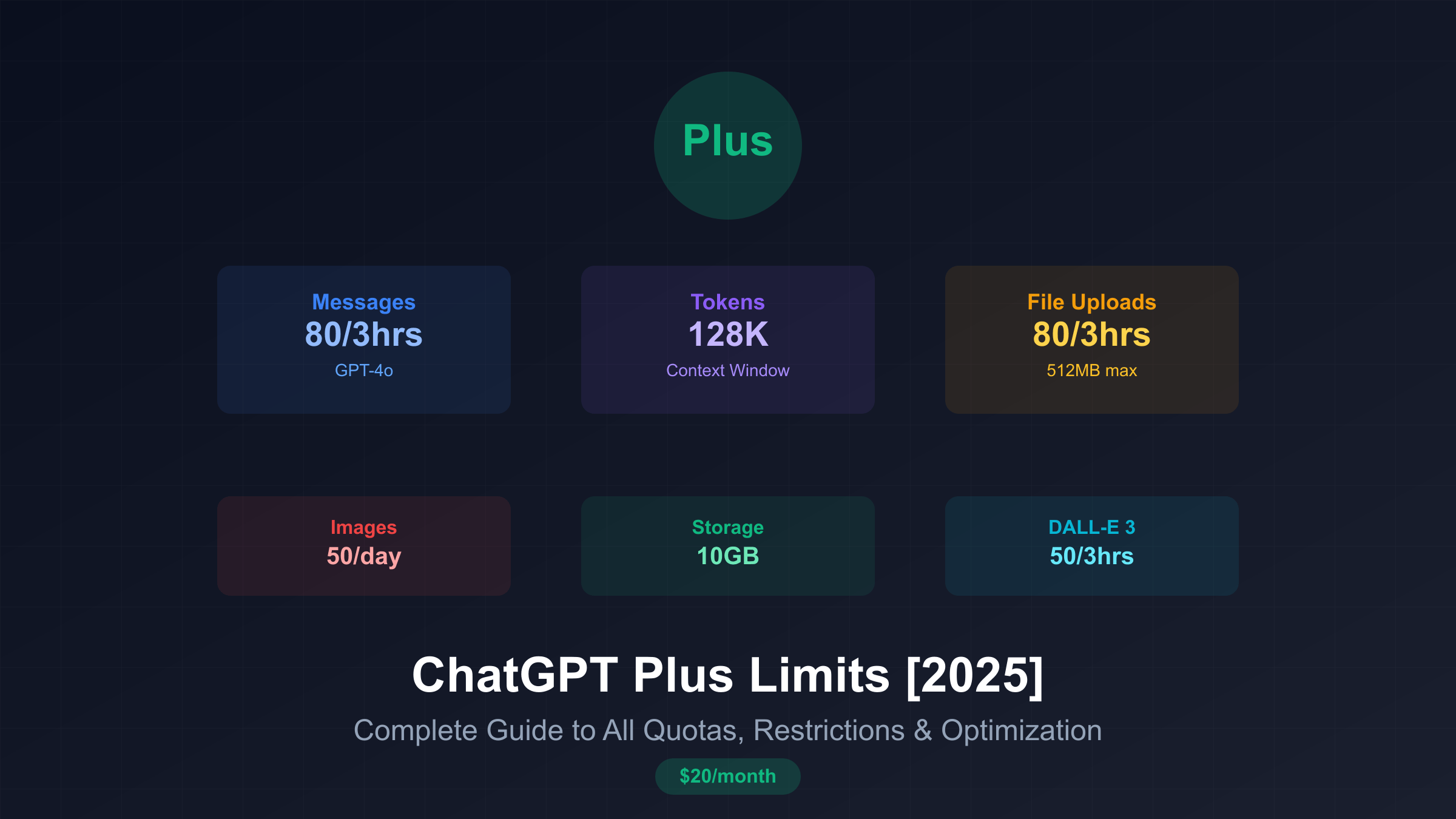

ChatGPT Plus limits include 80 messages per 3 hours for GPT-4o, 40 for GPT-4, and weekly caps for reasoning models. File uploads allow 80 files per 3-hour window plus 50 images daily. Token limits reach 128,000 for context with a 4,096-token output maximum. Additional restrictions cover DALL-E 3 generations, a 10GB storage cap, and feature access. The $20 monthly subscription provides significant capacity increases over the free tier.

What Are the ChatGPT Plus Limits in 2025?

ChatGPT Plus operates within a framework of interconnected limits designed to balance user experience with computational resources. At $20 monthly, subscribers encounter restrictions across message quotas, token constraints, file uploads, storage capacity, and feature access. Understanding these limits helps subscribers get full value from the plan and avoid running out of quota in the middle of a task.

The limitation structure reflects OpenAI’s resource management strategy while providing substantial advantages over free tier access. Message limits vary dramatically by model, from 40 messages every 3 hours for GPT-4 to 300 daily messages for o4-mini. Token restrictions cap context windows at 128,000 tokens for advanced models while limiting output generation to 4,096 tokens universally. File handling imposes its own constraints with 80 uploads per 3-hour window, separate image quotas, and 10GB storage allocation.

Beyond quantitative restrictions, ChatGPT Plus enforces qualitative limitations through feature access controls. DALL-E 3 image generation operates within its own 50-generation per 3-hour quota. Deep Research functionality limits users to 10 comprehensive analyses monthly. Custom GPTs share base model quotas rather than providing additional capacity. These overlapping constraints create a usage ecosystem requiring strategic navigation for optimal results.

Recent platform evolution demonstrates OpenAI’s responsiveness to user feedback and competitive pressure. The April 2025 update doubled o4-mini-high message allocations from 50 to 100 daily, addressing user frustration with restrictive quotas. GPT-4o’s introduction brought enhanced 128,000 token context windows while maintaining reasonable message limits. However, the absence of usage tracking interfaces forces users to estimate consumption, creating anxiety about approaching limits during critical tasks.

ChatGPT Plus Message Limits by Model

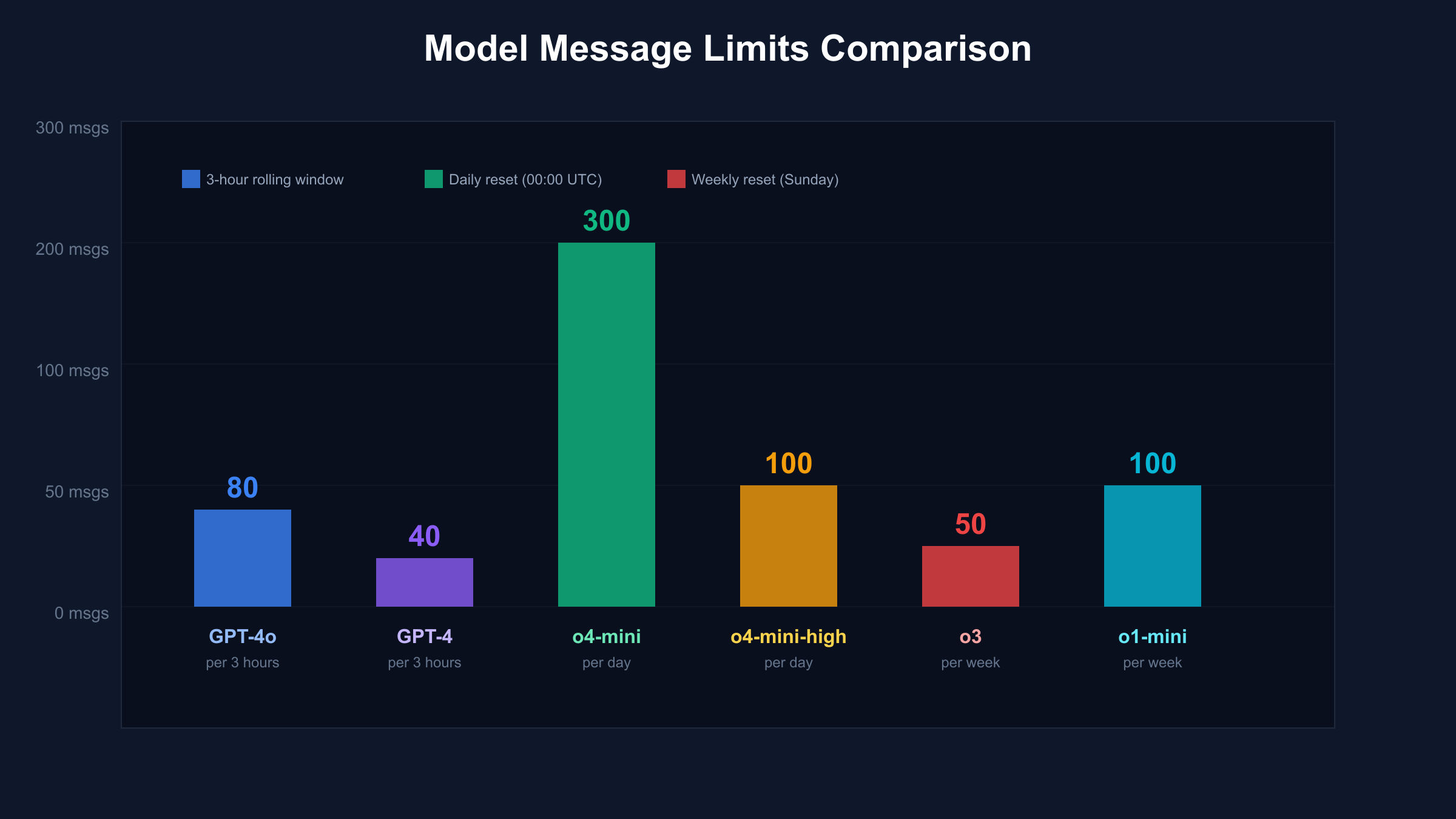

Message quotas represent the most visible and impactful limitations within ChatGPT Plus, directly affecting how users interact with different AI models throughout their day. Each model operates with distinct message allocations and reset mechanisms, creating a complex landscape of availability that requires careful management. Understanding these model-specific limits enables strategic usage patterns that maximize productivity while avoiding frustrating quota exhaustion.

GPT-4o leads the standard models with 80 messages per 3-hour rolling window, providing the optimal balance between advanced capabilities and generous availability. This quota typically supports 4-6 hours of intensive professional usage or full-day moderate interaction. The rolling window mechanism ensures continuous availability as each message “expires” from the quota exactly 180 minutes after generation, preventing the feast-or-famine patterns associated with daily resets.
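
The rolling-window behaviour described above can be illustrated with a small counter. This is a minimal sketch, assuming the 80-message and 3-hour figures quoted in this guide; the class and method names are hypothetical, and OpenAI's actual accounting is not public.

```python
from collections import deque
from datetime import datetime, timedelta, timezone


class RollingWindowQuota:
    """Tracks messages against a rolling-window limit (illustrative only)."""

    def __init__(self, limit=80, window=timedelta(hours=3)):
        self.limit = limit
        self.window = window
        self.sent = deque()  # timestamps of messages still inside the window

    def _expire(self, now):
        # Drop messages that are at least one full window old
        while self.sent and now - self.sent[0] >= self.window:
            self.sent.popleft()

    def remaining(self, now):
        self._expire(now)
        return self.limit - len(self.sent)

    def record(self, now):
        """Record a message; return False if the quota is exhausted."""
        if self.remaining(now) <= 0:
            return False
        self.sent.append(now)
        return True

    def next_free_slot(self, now):
        """Time at which the next message becomes available."""
        if self.remaining(now) > 0:
            return now
        return self.sent[0] + self.window


# Example: one message per minute starting at 09:00 UTC exhausts the quota
start = datetime(2025, 1, 1, 9, 0, tzinfo=timezone.utc)
quota = RollingWindowQuota()
for i in range(80):
    quota.record(start + timedelta(minutes=i))
```

In this example the quota is empty at 10:20, and the first slot frees at 12:00, exactly 180 minutes after the first message, rather than everything resetting at once.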

GPT-4 maintains a more conservative 40 messages per 3-hour window, reflecting its computational intensity and premium positioning. While offering marginally different capabilities than GPT-4o for many tasks, GPT-4 remains valuable for specific use cases requiring its particular training characteristics. Users often reserve GPT-4 for tasks where they’ve noticed superior performance, treating its limited quota as a specialized resource rather than general-purpose tool.

The o-series reasoning models introduce weekly quotas that fundamentally change usage patterns. The o3 model provides 50 messages per week, resetting Sunday at 00:00 UTC, forcing users to carefully consider each interaction. This scarcity drives selective usage for complex problems requiring deep reasoning: mathematical proofs, architectural design, multi-step logical analysis. The o1-preview model offers similar weekly limits, while o1-mini doubles to 100 weekly messages, providing a middle ground for reasoning tasks.

Daily quota models like o4-mini and o4-mini-high reset at 00:00 UTC, offering 300 and 100 messages respectively. These models excel at high-volume tasks where quantity matters more than cutting-edge capabilities. The 3x difference in daily allowances between o4-mini variants reflects their computational requirements, with the “high” variant providing enhanced quality at the cost of reduced quantity.
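
For the fixed-reset models, the next reset time can be computed directly. The sketch below assumes the reset schedule stated in this guide (daily at 00:00 UTC, weekly on Sunday at 00:00 UTC); the function names are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def next_daily_reset(now):
    """Next 00:00 UTC strictly after `now` (a timezone-aware datetime)."""
    midnight = now.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0)
    return midnight + timedelta(days=1)


def next_weekly_reset(now):
    """Next Sunday 00:00 UTC strictly after `now` (a timezone-aware datetime)."""
    midnight = now.astimezone(timezone.utc).replace(
        hour=0, minute=0, second=0, microsecond=0)
    # weekday(): Monday=0 ... Sunday=6; on a Sunday, jump a full week ahead
    days_ahead = (6 - midnight.weekday()) % 7 or 7
    return midnight + timedelta(days=days_ahead)
```

Working in UTC matters here: a user in a western timezone will see the daily reset in their local afternoon or evening, not at their own midnight.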

Custom GPTs introduce quota complexity by drawing from base model allocations rather than providing separate limits. A conversation with a specialized GPT consumes messages from the underlying model’s quota – typically GPT-4o or GPT-4. This shared allocation surprises users who expect custom GPTs to offer additional capacity, leading to unexpected quota exhaustion when alternating between direct model access and specialized assistants.

Token Limits in ChatGPT Plus: Context Windows Explained

Token limitations in ChatGPT Plus represent fundamental technical constraints that shape how conversations unfold and information gets processed. While less immediately visible than message quotas, token limits profoundly impact the AI’s ability to maintain context, generate comprehensive responses, and handle large documents. Understanding these constraints enables users to structure interactions for maximum effectiveness within the platform’s technical boundaries.

Context windows define the total amount of information ChatGPT can consider simultaneously during any interaction. GPT-4o boasts a 128,000 token context window, theoretically accommodating approximately 96,000 words or 200 pages of text. However, practical limitations within the ChatGPT interface typically restrict usage to around 32,000 tokens to maintain reasonable response times and system stability. This gap between theoretical and practical capacity frustrates users attempting to leverage the full advertised context.

Output generation faces a universal 4,096 token limit across all models, capping responses at approximately 3,000 words regardless of model capabilities or context size. This restriction prevents runaway generation that could consume excessive computational resources while ensuring responses remain focused and coherent. Users requiring longer outputs must explicitly request continuations, effectively doubling message consumption for comprehensive responses.
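
The message cost of a long output follows from the cap by simple arithmetic. This is a rough sketch, assuming the approximately 3,000 words per response quoted above; the function name is hypothetical.

```python
import math

WORDS_PER_RESPONSE = 3_000  # approximate word equivalent of the 4,096-token cap


def messages_needed(target_words):
    """Messages consumed to produce `target_words`, counting continuations."""
    return max(1, math.ceil(target_words / WORDS_PER_RESPONSE))
```

For instance, a 5,000-word report would take two messages and a 10,000-word one would take four, each drawn from the same model quota.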

Token consumption patterns vary significantly based on content type and language complexity. English text averages 1.3 tokens per word, while code, technical documentation, and non-English languages often require 2-3x more tokens for equivalent content. This variation means a 10,000-word English document might consume 13,000 tokens, while the same length of Python code could require 25,000-30,000 tokens, dramatically affecting how much information fits within context limits.
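
These ratios can be turned into a quick estimator. The sketch below uses only the averages quoted in this section and is not a real tokenizer; the function names and the `multiplier` parameter are assumptions for illustration.

```python
TOKENS_PER_WORD = 1.3      # English average quoted above
CONTEXT_WINDOW = 128_000   # GPT-4o context window quoted above


def estimate_tokens(word_count, multiplier=1.0):
    """Rough token estimate; use a multiplier of 2-3 for code or non-English text."""
    return round(word_count * TOKENS_PER_WORD * multiplier)


def fits_in_context(word_count, multiplier=1.0, window=CONTEXT_WINDOW):
    """True if the estimated token count fits inside the context window."""
    return estimate_tokens(word_count, multiplier) <= window
```

With these figures, `estimate_tokens(10_000)` gives 13,000 tokens for English prose and `estimate_tokens(10_000, multiplier=2)` gives 26,000 for code of the same length. For exact counts, a real tokenizer is needed.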

Context degradation emerges as conversations extend, even within token limits. After 20,000-25,000 tokens of conversation history, response quality noticeably deteriorates as the model struggles to maintain coherence across extensive context. This soft limit encourages periodic conversation refreshing, trading continuity for maintained performance. Professional users often maintain external conversation summaries to preserve context while starting fresh sessions.

ChatGPT Plus File Upload and Storage Limits

File handling capabilities in ChatGPT Plus extend the platform beyond pure conversation into document analysis, data processing, and multimedia interaction. The system enforces multiple overlapping constraints on uploads, storage, and processing that significantly impact workflow design. Understanding these limitations enables efficient document-based workflows while avoiding the frustration of unexpected restrictions during critical analysis tasks.

The primary upload constraint allows 80 files every 3 hours through a rolling window mechanism similar to message quotas. This generous allowance accommodates most professional workflows involving document analysis, code review, or data processing. Each file can reach 512MB in size, though practical limitations vary by type – spreadsheets perform optimally under 50MB, while text documents can utilize the full size allocation. The rolling window ensures continuous availability for sustained document processing sessions.

Image uploads operate under a separate quota system, permitting 50 uploads per 24-hour period resetting at midnight UTC. This dedicated allocation acknowledges the computational intensity of vision processing while ensuring adequate capacity for visual analysis workflows. The 20MB per image limit accommodates high-resolution screenshots, photographs, and technical diagrams without requiring pre-upload compression in most cases.
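
The upload rules above can be checked before starting a batch. This is a minimal sketch, assuming the size and quota figures quoted in this section; the `LIMITS` table and `check_upload` function are hypothetical helpers, not part of any OpenAI tooling.

```python
MB = 1024 * 1024

# Figures as quoted in this guide
LIMITS = {
    "file": {"max_bytes": 512 * MB, "quota": 80},   # per 3-hour rolling window
    "image": {"max_bytes": 20 * MB, "quota": 50},   # per UTC day
}


def check_upload(kind, size_bytes, used_in_window):
    """Return a list of reasons the upload would be rejected (empty if OK)."""
    limit = LIMITS[kind]
    problems = []
    if size_bytes > limit["max_bytes"]:
        problems.append(f"{kind} exceeds {limit['max_bytes'] // MB} MB")
    if used_in_window >= limit["quota"]:
        problems.append(f"{kind} quota of {limit['quota']} reached")
    return problems
```

Calling `check_upload("image", 25 * MB, 10)` flags the size limit, while a 100 MB document after 80 uploads in the window flags the quota instead.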

Storage architecture provides 10GB of persistent space per user, transforming ChatGPT from a session-based tool into a genuine document workspace. Uploaded files persist across conversations until manually deleted or automatic cleanup triggers when approaching capacity. This persistence enables building reference libraries, maintaining project documentation, and accessing historical uploads without redundant transfers. Organizations receive expanded 100GB allocations, supporting team-wide document repositories.

Processing limitations extend beyond raw upload quotas to include type-specific constraints. PDF files undergo text-only extraction in Plus tier, ignoring embedded images and visual elements entirely. Spreadsheets face practical performance limits around 50MB or 500,000 rows. Code files encounter token limits preventing analysis of extremely large codebases in single uploads. These processing constraints often prove more limiting than upload quotas themselves.

Feature-Specific Limits in ChatGPT Plus

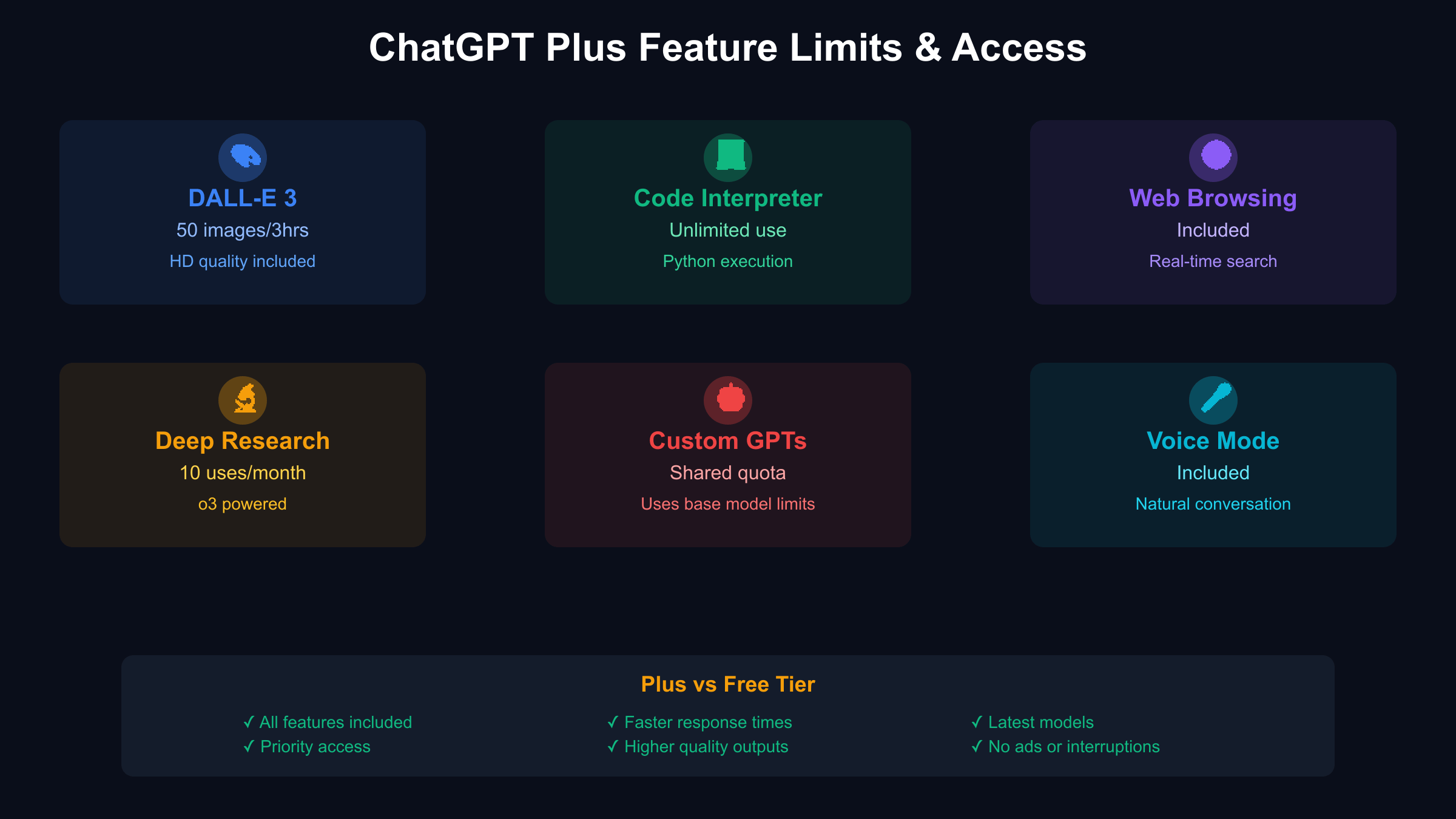

ChatGPT Plus includes numerous advanced features beyond basic conversation, each operating within its own limitation framework. These feature-specific constraints often surprise users who assume the $20 subscription provides unlimited access to all capabilities. Understanding individual feature limits enables strategic usage allocation and prevents disappointment when encountering unexpected restrictions during specialized tasks.

DALL-E 3 image generation operates within a 50-generation per 3-hour rolling window, separate from conversation message quotas. This allocation supports creative workflows requiring multiple iterations while preventing system overload from excessive generation requests. Each generation produces high-quality outputs at standard resolutions, with HD quality included without additional token consumption. The quota refreshes continuously, allowing sustained creative sessions with proper pacing.

Deep Research functionality represents one of ChatGPT Plus’s most powerful yet limited features, providing only 10 comprehensive research sessions monthly. Each session leverages o3’s advanced reasoning capabilities to conduct thorough investigations, compile multiple sources, and synthesize comprehensive reports. The monthly limit forces selective usage for high-value research projects rather than casual inquiries, positioning it as a premium feature within the premium subscription.

Code Interpreter access comes without explicit usage limits, instead consuming regular message quotas when activated. However, computational constraints apply – complex data processing may timeout, large datasets can overwhelm available memory, and extended sessions risk context corruption. While technically unlimited, practical limitations encourage efficient code execution and data processing strategies to maximize successful analyses.

Web browsing capabilities operate without separate quotas but face practical restrictions through rate limiting and site accessibility. Rapid sequential searches may trigger temporary throttling, while many sites block automated access entirely. The feature works best for targeted research rather than extensive web scraping, with users reporting degraded performance after 10-15 searches within short periods.

Voice conversation mode includes no explicit time limits but operates within standard message quota constraints. Each voice interaction consumes messages from the underlying model’s allocation, typically GPT-4o. Extended voice sessions can rapidly deplete quotas, surprising users who don’t realize the connection between voice and text message consumption. Quality varies with network conditions, adding another practical limitation to sustained voice interaction.

How ChatGPT Plus Limits Compare to Competitors

The AI assistant marketplace offers diverse approaches to usage limitations, with each platform balancing capacity, quality, and pricing differently. ChatGPT Plus’s limitation structure appears generous in some aspects while restrictive in others compared to key competitors. Understanding these comparative differences enables informed platform selection based on specific use case requirements rather than marketing claims.

Claude Pro adopts a radically different approach with ambiguous “5x more usage than free tier” messaging that frustrates users seeking concrete limits. Practical testing suggests approximately 200-300 messages daily, though Anthropic does not publish specific quotas. Claude’s strength lies in conversation quality and coherence rather than quantity, with superior context handling offsetting lower message volumes. The platform excels at long-form writing and analysis despite restrictive limits.

Google Gemini Advanced provides 1,500 requests per day with a 32,000 token context window, offering substantially higher message volume than ChatGPT Plus. The daily allocation supports high-frequency interaction patterns impossible with ChatGPT’s rolling windows. However, response quality varies significantly, with Gemini excelling at factual queries while struggling with creative or reasoning tasks. Deep Google Workspace integration provides unique value for existing Google ecosystem users.

Perplexity Pro takes the volume leadership position with 600 Pro searches daily plus unlimited quick searches. This massive capacity targets research-heavy workflows where breadth matters more than depth. Each search can process multiple sources simultaneously, effectively multiplying research capacity beyond raw query counts. However, analysis depth remains superficial compared to ChatGPT’s detailed processing, positioning Perplexity for discovery rather than deep analysis.

Microsoft Copilot Pro provides 100 “boosts” daily for enhanced responses, with unlimited basic interactions. The boost system creates an interesting dynamic where users must decide which queries deserve premium processing. Deep Microsoft 365 integration enables unique productivity workflows impossible elsewhere. Pricing at $20 monthly matches ChatGPT Plus while offering fundamentally different value propositions for enterprise users.

API alternatives bypass subscription limitations entirely through pay-per-token models. Direct OpenAI API access costs approximately $50-80 monthly for ChatGPT Plus-equivalent usage. Third-party providers like laozhang.ai advertise costs roughly 30% lower for the same underlying models, offering programmatic access that enables workflow automation impossible through web interfaces. The trade-off involves development complexity and loss of integrated features like DALL-E access.

Managing ChatGPT Plus Quotas Effectively

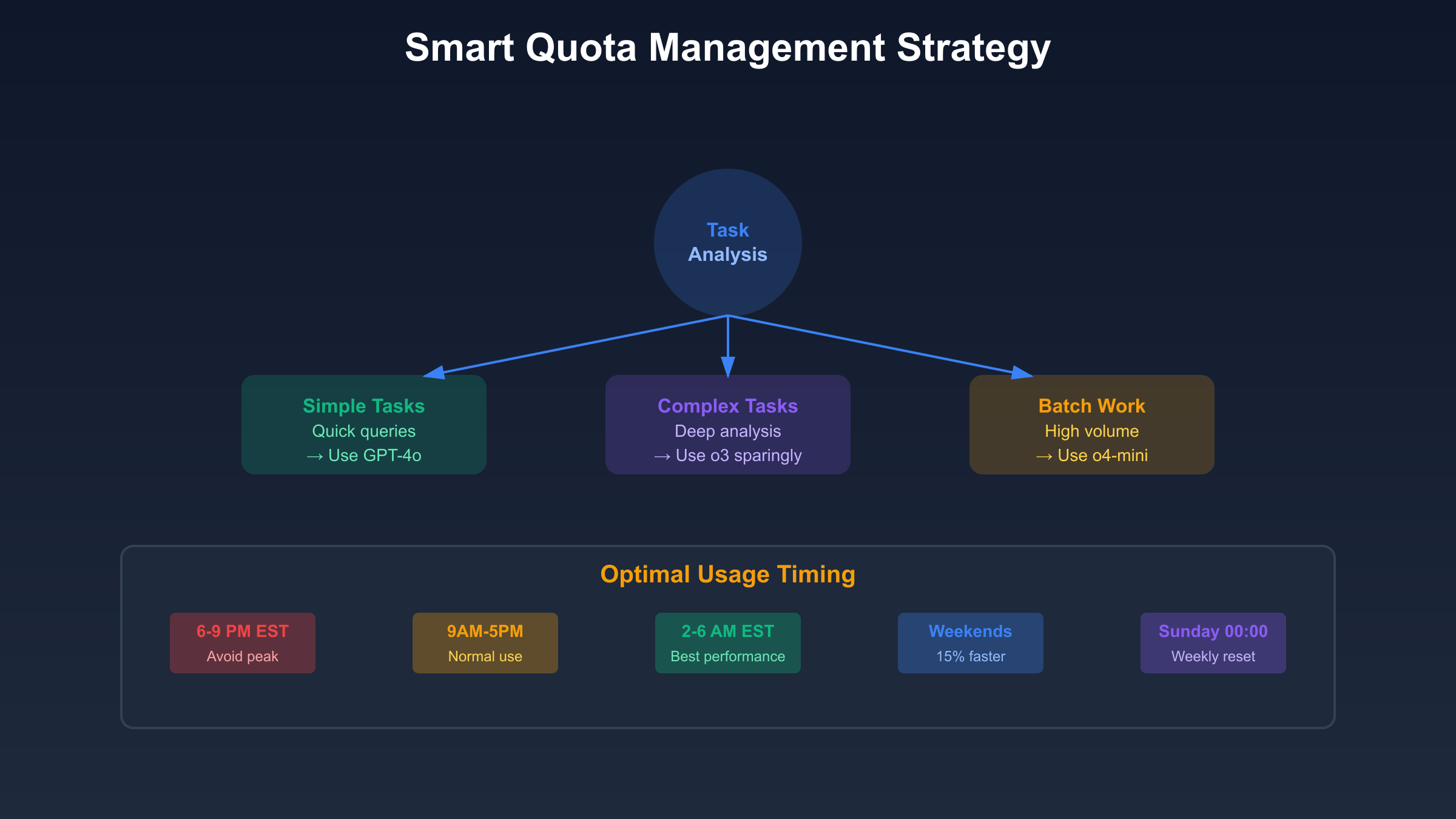

Effective quota management transforms ChatGPT Plus from an occasionally frustrating tool into a reliable professional assistant. Strategic usage patterns can effectively double or triple perceived capacity by optimizing model selection, timing interactions, and leveraging platform behaviors. These management techniques prove especially valuable for power users pushing against multiple limitations simultaneously.

Model selection strategy forms the foundation of quota optimization. Reserve GPT-4o’s generous 80-message allocation for general-purpose tasks requiring quality and speed. Deploy o3’s scarce 50 weekly messages exclusively for complex reasoning challenges where other models fail. Leverage o4-mini’s 300 daily messages for high-volume, lower-complexity tasks like data extraction or simple analysis. This tiered approach ensures appropriate resource allocation based on task requirements.
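
The tiered approach can be written down as a simple decision function. This is a sketch under stated assumptions: the fallback order within each tier is an illustrative choice, not something OpenAI prescribes, and `pick_model` is a hypothetical helper.

```python
def pick_model(needs_deep_reasoning, high_volume, remaining):
    """Pick a model per the tiers above; `remaining` maps model -> messages left."""
    if needs_deep_reasoning:
        preference = ["o3", "gpt-4o", "o4-mini"]
    elif high_volume:
        preference = ["o4-mini", "gpt-4o"]
    else:
        preference = ["gpt-4o", "o4-mini"]
    # Fall through the preference list until a model with quota left is found
    for model in preference:
        if remaining.get(model, 0) > 0:
            return model
    return None  # every candidate is exhausted
```

With `remaining = {"gpt-4o": 12, "o3": 0, "o4-mini": 250}`, a reasoning task falls back to GPT-4o because the o3 weekly quota is spent, while a bulk extraction task goes to o4-mini.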

Timing optimization exploits platform performance patterns to maximize successful interactions. Off-peak hours between 2 and 6 AM EST deliver 25-30% faster responses with minimal failures, effectively increasing usable capacity. Weekend usage shows a 15% performance improvement overall. Conversely, avoid the 6-9 PM EST peak hours, when response times double and error rates spike. Strategic scheduling around these patterns improves both efficiency and user experience.

Conversation management directly impacts token efficiency and response quality. Initiate new conversations every 20-30 messages to prevent context degradation that wastes messages on failed responses. Maintain external reference documents summarizing key information for quick context reestablishment. Use concise, specific prompts that minimize token consumption while maximizing response relevance. These practices can reduce message consumption by 30-40% for equivalent outputs.
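
The refresh rule can be automated with a rough check on message count and estimated tokens. The sketch below assumes the 30-message upper bound from this section and the 20,000-token degradation threshold and 1.3 tokens-per-word average quoted earlier in this guide; the function name is hypothetical.

```python
MAX_MESSAGES = 30        # upper end of the 20-30 message guideline above
MAX_TOKENS = 20_000      # point at which this guide reports degradation
TOKENS_PER_WORD = 1.3    # English average used in this guide


def should_start_new_conversation(messages):
    """`messages` is a list of message strings in the current conversation."""
    words = sum(len(m.split()) for m in messages)
    est_tokens = words * TOKENS_PER_WORD
    return len(messages) >= MAX_MESSAGES or est_tokens >= MAX_TOKENS
```

Either condition triggers a refresh, so a short conversation with a few very long pasted documents is caught as well as a long chat of brief exchanges.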

Feature sequencing prevents interference between ChatGPT’s various capabilities. Process text analysis first to establish context, add file uploads for specific data, execute code for processing, then generate images for visualization. This sequential approach reduces errors and repeated attempts that waste quotas. Avoid simultaneous feature usage that creates processing bottlenecks and increases failure rates.

Quota banking strategies work around the absence of rollover mechanisms. Front-load complex tasks early in weekly cycles for models with weekly limits. Preserve 20-30% of rolling window quotas for urgent requests. Schedule batch processing during quota refresh periods to maximize availability. While quotas don’t accumulate, strategic timing creates similar effects through careful planning.
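
The reserve rule reduces to a single subtraction. This is a minimal sketch, assuming a 25% reserve from the 20-30% range suggested above; `usable_quota` is a hypothetical helper.

```python
def usable_quota(limit, used, reserve_fraction=0.25):
    """Messages available for routine work after holding back a reserve."""
    reserve = round(limit * reserve_fraction)
    return max(0, limit - reserve - used)
```

For GPT-4o's 80-message window, a 25% reserve holds back 20 messages, so after 50 messages only 10 remain for routine work and the rest are kept for urgent requests.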

Tracking Your ChatGPT Plus Usage and Limits

The absence of native usage tracking in ChatGPT Plus creates a significant user experience gap, forcing subscribers to estimate consumption without concrete data. This limitation becomes particularly frustrating when approaching quotas during critical tasks. Implementing external tracking solutions provides essential visibility into usage patterns, enabling proactive quota management and preventing unexpected exhaustion.

Browser-based tracking offers the most accessible solution for monitoring usage patterns. JavaScript injection through bookmarklets or extensions can observe ChatGPT’s DOM mutations, logging message generation events and correlating them with model selection. Local storage maintains usage history across sessions while respecting privacy by avoiding external data transmission. This approach requires minimal technical expertise while providing immediate usage visibility.

Automated tracking scripts provide more sophisticated monitoring with data persistence and analytics capabilities. A Python tracker can be fed message events by hand or by a browser automation tool; the following example logs message timestamps per model and computes usage against each quota:

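```python
import datetime
import json
from collections import defaultdict
from pathlib import Path

UTC = datetime.timezone.utc


class ChatGPTUsageTracker:
    # Quotas as quoted in this guide; adjust if OpenAI changes them
    QUOTAS = {
        'gpt-4o': 80,
        'gpt-4': 40,
        'o3': 50,
        'o1-mini': 100,
        'o4-mini': 300,
        'o4-mini-high': 100,
    }

    def __init__(self, path='chatgpt_usage.json'):
        # `path` is a hypothetical default location for the local log file
        self.path = Path(path)
        self.usage_log = defaultdict(list)
        self.load_history()

    def load_history(self):
        # Restore previously logged timestamps, if a log file exists
        if self.path.exists():
            self.usage_log.update(json.loads(self.path.read_text()))

    def save_history(self):
        self.path.write_text(json.dumps(self.usage_log))

    def log_message(self, model, timestamp=None):
        if timestamp is None:
            timestamp = datetime.datetime.now(UTC)
        # Store in UTC; a naive timestamp is interpreted as local time
        self.usage_log[model].append(timestamp.astimezone(UTC).isoformat())
        self.save_history()

    def get_current_usage(self, model):
        now = datetime.datetime.now(UTC)
        midnight = now.replace(hour=0, minute=0, second=0, microsecond=0)
        if model in ['gpt-4o', 'gpt-4']:
            # 3-hour rolling window
            cutoff = now - datetime.timedelta(hours=3)
        elif model in ['o3', 'o1-preview', 'o1-mini']:
            # Weekly limit, resetting Sunday 00:00 UTC (weekday(): Monday=0)
            cutoff = midnight - datetime.timedelta(days=(now.weekday() + 1) % 7)
        else:
            # Daily limit, resetting 00:00 UTC (o4-mini and similar models)
            cutoff = midnight
        return sum(1 for t in self.usage_log[model]
                   if datetime.datetime.fromisoformat(t) >= cutoff)

    def get_remaining_quota(self, model):
        # Models without a known quota report 0 or less
        return self.QUOTAS.get(model, 0) - self.get_current_usage(model)
```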

Manual tracking through spreadsheets remains viable for users preferring simplicity. Recording model selection and timestamp for each significant conversation enables rough quota estimation. While less precise than automated solutions, manual tracking raises awareness of usage patterns and encourages mindful model selection. Simple tally sheets or mobile apps can facilitate real-time logging without disrupting workflow.

Usage patterns analysis reveals optimization opportunities beyond simple counting. Tracking peak usage times identifies when quota exhaustion typically occurs. Model preference data shows whether expensive quotas are being used appropriately. Failed interaction logs highlight when better model selection could prevent wasted messages. This analytical approach transforms tracking from mere counting into strategic optimization.
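
As one example of this kind of analysis, a logged list of timestamps can be reduced to the hours of day with the heaviest usage. The sketch assumes timestamps stored as ISO-8601 strings; `busiest_hours` is a hypothetical helper.

```python
from collections import Counter
from datetime import datetime


def busiest_hours(timestamps, top=3):
    """Return the `top` most frequent hours of day among ISO-8601 timestamps."""
    hours = Counter(datetime.fromisoformat(t).hour for t in timestamps)
    return [hour for hour, _ in hours.most_common(top)]
```

Hours that repeatedly appear at the top of this list are the ones where a rolling-window quota is most likely to run out, and where shifting batch work elsewhere helps most.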

ChatGPT Plus vs Pro vs Team: Limit Differences

OpenAI’s tiered subscription model creates distinct user experiences through dramatically different limitation structures. Understanding these tier differences proves essential for selecting appropriate subscriptions and making informed upgrade decisions. The 10x price jump from Plus to Pro demands careful consideration of whether limit increases justify the substantial cost differential.

ChatGPT Plus at $20 monthly provides the baseline premium experience with well-defined limits across all features. The 80 messages per 3 hours for GPT-4o typically satisfies individual professional users with moderate to heavy usage patterns. File upload quotas, storage allocations, and feature access meet most individual needs without creating constant friction. Plus represents optimal value for users requiring regular AI assistance without extreme volume demands.

ChatGPT Pro at $200 monthly promises “near unlimited” usage, which testing reveals as approximately 10-15x Plus capacity. Pro subscribers report using 500-1,000 messages daily without encountering restrictions, though OpenAI reserves the right to limit extreme usage. At 10x the price for 10-15x the capacity, the cost per message is no worse than Plus, but the $200 outlay only pays off if that capacity is actually used. This makes Pro valuable primarily for users with genuinely extreme requirements or those billing clients for AI-assisted work.

ChatGPT Team subscriptions at $25-30 per user monthly double most Plus quotas while adding collaborative features. The 160 messages per 3 hours for GPT-4o provides comfortable headroom for business users. More importantly, Team plans include workspace management, admin controls, and enhanced security features valuable beyond raw quota increases. The modest price premium over Plus delivers substantial value for business users.

Enterprise pricing remains custom and opaque, with limits negotiated based on organizational needs. Reports suggest effectively unlimited usage within reasonable bounds, custom model deployment options, and dedicated support. Enterprise agreements focus more on security, compliance, and integration requirements than pure usage volumes, targeting organizations where ChatGPT becomes critical infrastructure.

Value analysis across tiers reveals clear user segments. Plus serves individual professionals effectively. Team plans optimize for small business collaboration. Pro targets extreme power users and consultants. Enterprise addresses organizational deployment. The key insight: on the figures above, higher tiers cost no more per message than Plus, but their extra capacity only pays off when it is actually used, making Plus the sweet spot for most users.
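
The tier figures quoted in this section can be put on a common footing by dividing price by capacity. This is a back-of-the-envelope sketch using only the prices and capacity multiples stated above; treating capacity as a single multiple of Plus is a simplifying assumption.

```python
# (monthly price in USD, capacity as a multiple of Plus), as quoted in this guide
TIERS = {
    "Plus": (20, 1),
    "Team (low)": (25, 2),
    "Team (high)": (30, 2),
    "Pro (10x)": (200, 10),
    "Pro (15x)": (200, 15),
}

# Price per Plus-equivalent unit of capacity
cost_per_unit = {name: price / capacity
                 for name, (price, capacity) in TIERS.items()}
```

Under these assumptions the result is $20 per unit for Plus, $12.50-$15 for Team, and roughly $13.33-$20 for Pro.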

Common ChatGPT Plus Limit Issues and Solutions

Limit-related frustrations plague ChatGPT Plus users despite the platform’s generous quotas compared to free tier access. Understanding common limitation issues and their solutions transforms frustrating experiences into manageable workflow adjustments. These solutions range from simple behavioral changes to sophisticated technical workarounds, all designed to maximize utility within existing constraints.

Peak hour congestion creates shadow limitations beyond published quotas. During 6-9 PM EST peaks, response times double while error rates spike dramatically. Users report burning through quotas faster due to failed responses requiring regeneration. Solutions include scheduling complex tasks for off-peak hours, preparing batch workloads for weekend processing, and maintaining 30% quota reserves for retry attempts during peak congestion.

Context window exhaustion manifests subtly through degraded response quality rather than explicit errors. After 20,000-25,000 tokens of conversation, ChatGPT begins losing track of earlier context, providing contradictory or irrelevant responses. The solution requires proactive conversation management: starting fresh sessions every 20-30 messages while maintaining external context documents. This practice prevents wasted messages on confused responses.

Model selection confusion wastes precious quotas on inappropriate model choices. Using scarce o3 weekly messages for simple queries or burning GPT-4o quotas on high-volume extraction tasks represents common inefficiencies. Solutions involve creating decision trees for model selection based on task complexity, implementing personal usage guidelines, and treating each model as a specialized tool rather than interchangeable options.

Hidden quota interactions surprise users through unexpected exhaustion. Custom GPTs consuming base model quotas, voice conversations depleting message allowances, and failed uploads counting against limits create usage uncertainty. Solutions require understanding all quota relationships, implementing comprehensive tracking across all interaction modes, and maintaining higher reserve margins to accommodate hidden consumption.

Subscription value anxiety emerges when users question whether limitations justify the $20 monthly cost. Comparing raw message counts against competitors or calculating per-interaction costs creates doubt. The solution involves comprehensive value assessment including feature access, response quality, ecosystem integration, and time savings. Most users find ChatGPT Plus valuable when considering total utility rather than pure message volume.

Future of ChatGPT Plus Limits and Updates

The trajectory of ChatGPT Plus limitations reveals OpenAI’s delicate balance between user satisfaction, computational resources, and business sustainability. Recent updates demonstrate responsiveness to user feedback, while competitive pressures accelerate the pace of limit expansions. Understanding likely future developments helps users make informed decisions about long-term platform commitment.

Near-term quota increases appear inevitable based on infrastructure improvements and competitive dynamics. The July 2025 GPT-4o infrastructure upgrade created capacity for significant limit expansion, suggesting 100-150 messages per 3 hours as achievable within 6 months. Weekly model quotas may transition to daily allocations, improving usage flexibility. Storage limits could double to 20GB as costs decrease and user expectations increase.

Usage tracking features rank among the most requested improvements, with OpenAI acknowledging the need for visibility. Anticipated implementations include real-time quota displays, usage history dashboards, and predictive warnings before limit exhaustion. API parity might bring programmatic usage monitoring to Plus subscribers. These visibility improvements would dramatically improve user experience without requiring quota increases.

Flexible quota systems inspired by competitor innovations may replace rigid limitations. Credit-based allocations where different operations consume varying points could enable user choice between quantity and quality. Rollover mechanisms for unused quotas would reward consistent subscribers. Burst capacity for deadline-driven work could smooth usage patterns. These systemic changes would better align limitations with real-world usage patterns.

Competitive pressures from Claude, Gemini, and open-source alternatives force continuous enhancement. Anthropic’s project-based limits influence OpenAI’s workspace development. Google’s massive daily quotas pressure message limit increases. Open-source unlimited usage forces value justification. This competition benefits users through rapid capability expansion and reduced restrictions across all platforms.

FAQs About ChatGPT Plus Limits

How many messages do I get with ChatGPT Plus?

ChatGPT Plus provides different message allowances per model: GPT-4o offers 80 messages every 3 hours, GPT-4 provides 40 messages per 3 hours, o3 allows 50 messages weekly, and o4-mini permits 300 messages daily. These limits operate independently, so switching between models based on task requirements could theoretically yield 400+ messages per day.
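
An upper bound on the combined daily figure follows from the quotas listed above. This is a rough sketch that assumes every 3-hour window is fully used around the clock, which no real usage pattern achieves; the weekly o3 quota is left out because it does not reset daily.

```python
WINDOWS_PER_DAY = 24 // 3  # eight 3-hour windows in a day

# Theoretical per-day ceilings from the quotas quoted in this guide
daily_ceiling = {
    "gpt-4o": 80 * WINDOWS_PER_DAY,
    "gpt-4": 40 * WINDOWS_PER_DAY,
    "o4-mini": 300,
}
total = sum(daily_ceiling.values())
```

The ceiling works out to 640 for GPT-4o, 320 for GPT-4, and 300 for o4-mini, or 1,260 in total. Practical daily usage during normal working hours lands far below that.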

Can I check how many messages I have left?

Unfortunately, ChatGPT Plus provides no built-in usage tracking. You’ll only discover limit exhaustion when attempting to send a message beyond quota. This frustrating limitation requires external tracking through manual logs, browser scripts, or third-party tools. Hovering over model names shows when limits reset but not current usage.

Do ChatGPT Plus limits reset at midnight?

Reset timing varies by model type. GPT-4 and GPT-4o use 3-hour rolling windows where each message becomes available exactly 180 minutes after use. Daily limits for models like o4-mini reset at 00:00 UTC. Weekly limits for o3 and similar models reset Sunday at 00:00 UTC. Understanding these different reset mechanisms helps optimize usage timing.

What happens when I hit my ChatGPT Plus limit?

Upon reaching limits, the affected model becomes unavailable for selection until quota refreshes. You can switch to other models with remaining capacity or wait for reset. The system provides clear messaging about when access returns. No rollover or banking occurs – unused messages simply expire without benefit.

Is ChatGPT Pro really unlimited?

ChatGPT Pro at $200 monthly provides “near unlimited” usage rather than truly unlimited access. Users report consuming 500-1,000 messages daily without restrictions, representing 10-15x Plus capacity. However, OpenAI reserves the right to limit extreme usage that might indicate automation or abuse. Pro targets professionals with genuinely extreme AI assistance needs.

Do Custom GPTs have separate message limits?

No, Custom GPTs consume messages from the underlying base model’s quota – typically GPT-4o or GPT-4. This shared allocation surprises users expecting additional capacity for specialized assistants. A 30-message session with a Custom GPT depletes 30 messages from your base model allocation, not a separate pool.

How do file upload limits work with message limits?

File upload quotas operate independently from message limits. You can upload 80 files every 3 hours and 50 images per day regardless of message consumption. However, analyzing uploaded files requires messages from your model quotas. This separation enables file preparation during low message availability.

Can I buy additional ChatGPT Plus messages?

ChatGPT Plus doesn’t offer quota purchases or add-ons. Options for additional capacity include upgrading to Team ($25-30/user) for double quotas, Pro ($200) for near-unlimited usage, or supplementing with API access. Many users combine Plus subscriptions with pay-per-use API access through providers like laozhang.ai for overflow capacity.

Why are my ChatGPT Plus responses cut off at 4,096 tokens?

All ChatGPT models face a hard 4,096 token output limit (approximately 3,000 words) regardless of subscription tier or model selection. This technical constraint prevents runaway generation while ensuring response coherence. For longer outputs, explicitly request continuations, though each continuation consumes an additional message from your quota.

Do ChatGPT Plus limits apply to the mobile app?

Yes, ChatGPT Plus limits apply identically across web, mobile, and desktop applications. The same quotas, reset timings, and restrictions operate regardless of access method. Mobile usage consumes from the same quota pools, requiring careful management when switching between devices to avoid unexpected exhaustion during mobile sessions.