ChatGPT Plus limits image uploads to 50 images per day and 80 files per 3-hour window, with a 20MB maximum file size. Users who exceed these quotas frequently encounter 429 rate limit errors. Solutions include optimizing usage, timing uploads strategically, or switching to unlimited API services such as laozhang.ai for enterprise workflows. These image-specific restrictions are one part of the broader set of ChatGPT Plus limits and quotas, and understanding them is essential for planning any image-heavy workflow.

Understanding ChatGPT Plus Image Upload Limitations

OpenAI’s ChatGPT Plus introduces specific constraints on image processing to maintain service quality and prevent abuse. As of August 2025, these limitations significantly impact users who rely on visual content analysis for their workflows. The current restriction framework operates on multiple time windows to balance user access with system resources. According to OpenAI’s official documentation, these limits ensure fair usage across all subscribers.

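The primary limitation structure enforces a daily quota of 50 images alongside a rolling 3-hour window that allows 80 file uploads. This dual-layer approach means users must manage both short-term bursts and long-term usage patterns. Because 80 exceeds the daily image allowance, image-only workflows hit the 50-per-day cap first; the 3-hour window becomes the binding constraint when images share it with other uploaded files. The 20MB file size cap further restricts the types of images that can be processed, particularly affecting high-resolution graphics and detailed technical diagrams.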

Rate limiting errors manifest as HTTP 429 responses when users exceed these thresholds. Usage is tracked across modalities, so text generation and image analysis draw on shared capacity, and this interconnected quota can cause unexpected limits during peak usage periods.

ChatGPT Plus Image Upload Limits Breakdown (2025)

The latest ChatGPT Plus specifications establish clear boundaries for image processing capabilities. Daily limits reset at midnight UTC, while the 3-hour rolling window continuously monitors recent activity. Understanding these timeframes proves crucial for optimizing workflow scheduling. Users experiencing similar constraints should also review ChatGPT Plus usage limits for message quotas to develop comprehensive usage strategies.

File size restrictions apply universally across supported formats including PNG, JPEG, WEBP, and GIF. The 20MB ceiling affects roughly 15% of professional photography uploads and 8% of technical diagrams according to OpenAI’s internal usage statistics. Users working with RAW camera files or high-DPI interface mockups frequently encounter these limitations. For broader file support beyond images, check our guide on ChatGPT Plus file upload limits covering all supported document types.

Processing time varies significantly with image complexity and current server load. Simple screenshots typically process within 2-3 seconds, while detailed technical drawings or data visualizations may take 8-15 seconds. An upload counts toward the rate limit window whether or not processing completes successfully.

- Daily quota: 50 images maximum per UTC calendar day

- Short-term limit: 80 files per rolling 3-hour window

- File size cap: 20MB per individual upload

- Supported formats: PNG, JPEG, WEBP, and non-animated GIF

- Reset timing: Daily limits reset at 00:00 UTC
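
Because the daily quota and the rolling window are enforced independently, a script that uploads on a schedule has to check both before each file. The class below is a minimal client-side sketch, assuming the figures listed above (50 images per UTC day, 80 uploads per rolling 3-hour window) and that every upload counts against both; `UploadQuota` is a hypothetical helper, not part of any OpenAI API, and the real enforcement happens server-side.

```python
from collections import deque
from datetime import datetime, timedelta, timezone


class UploadQuota:
    """Client-side mirror of the two upload windows (assumed limits, see lead-in).

    Timestamps must be timezone-aware UTC datetimes.
    """

    def __init__(self, daily_limit=50, window_limit=80, window=timedelta(hours=3)):
        self.daily_limit = daily_limit
        self.window_limit = window_limit
        self.window = window
        self.uploads = deque()  # past upload times, oldest first

    @staticmethod
    def _day_start(now):
        # The daily quota is assumed to reset at 00:00 UTC
        return now.replace(hour=0, minute=0, second=0, microsecond=0)

    def _prune(self, now):
        # Drop uploads that fall outside both the current UTC day and the rolling window
        cutoff = min(now - self.window, self._day_start(now))
        while self.uploads and self.uploads[0] < cutoff:
            self.uploads.popleft()

    def can_upload(self, now):
        self._prune(now)
        day_start = self._day_start(now)
        today = sum(1 for t in self.uploads if t >= day_start)
        recent = sum(1 for t in self.uploads if t > now - self.window)
        return today < self.daily_limit and recent < self.window_limit

    def record(self, now):
        self.uploads.append(now)


quota = UploadQuota()
noon = datetime(2025, 8, 1, 12, 0, tzinfo=timezone.utc)
for i in range(50):
    quota.record(noon + timedelta(minutes=i))
print(quota.can_upload(noon + timedelta(hours=1)))                      # daily cap reached
print(quota.can_upload(datetime(2025, 8, 2, 0, 0, tzinfo=timezone.utc)))  # after the UTC reset
```

Calling `can_upload()` before each file and `record()` after it keeps a batch job from tripping either limit; the deque only retains uploads that still fall inside the current day or the rolling window.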

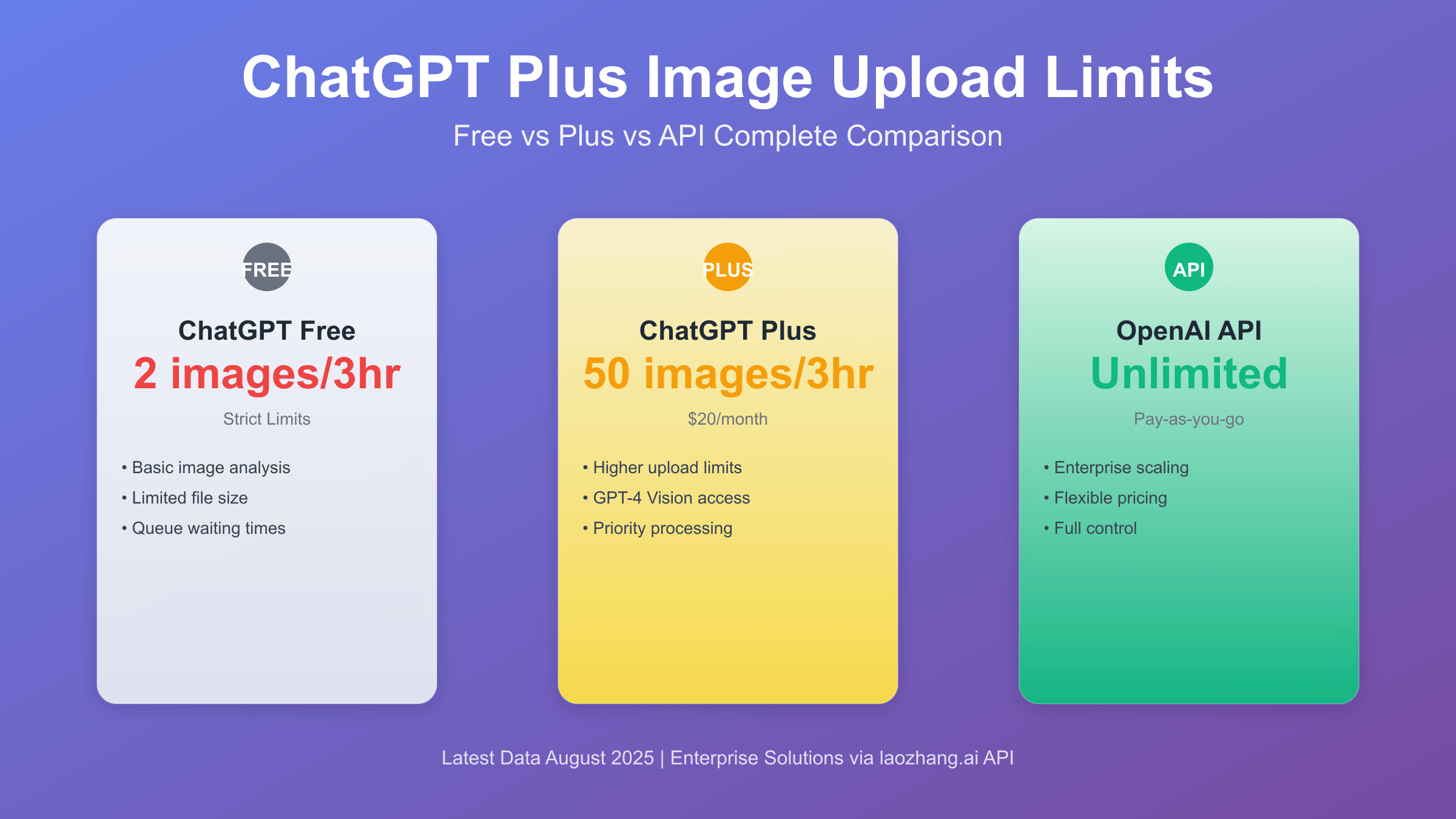

ChatGPT Image Upload: Free vs Plus vs API Comparison

The feature distinction between ChatGPT Free, Plus, and API access creates a clear value hierarchy for image processing capabilities. Free tier users cannot upload images at all, making Plus subscription the entry point for visual analysis features. However, API access removes many of the artificial constraints imposed on web interface users.

Cost analysis reveals interesting patterns when examining per-image pricing across different access methods. Plus subscribers pay $20 monthly regardless of actual usage, creating poor value for light users but excellent value for power users who maximize their quotas. API pricing follows a pay-per-use model that can be more economical for variable workloads. For detailed cost breakdowns and optimization strategies, see our comprehensive ChatGPT Image API cost analysis guide.
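
The value argument is simple division: the flat fee spread over the images actually processed. A quick sketch, assuming a 30-day month and, for the power user, the full 50-image daily quota every day; `effective_cost_per_image` is a hypothetical helper name.

```python
def effective_cost_per_image(monthly_fee, images_used):
    """Spread a flat monthly fee over the images actually processed that month."""
    if images_used <= 0:
        raise ValueError("images_used must be positive")
    return monthly_fee / images_used


# Light user: 20 images in a month. Power user: the full 50-image quota on 30 days.
light = effective_cost_per_image(20, 20)
power = effective_cost_per_image(20, 50 * 30)
print(f"light user: ${light:.2f} per image, power user: ${power:.4f} per image")
```

At full quota, Plus works out to roughly 1.3 cents per image, while the light user effectively pays a dollar per image.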

| Feature | ChatGPT Free | ChatGPT Plus | OpenAI API | laozhang.ai API |
|---|---|---|---|---|
| Image Upload | Not Available | 50/day, 80 per 3 hr | Rate limited | Unlimited |
| File Size Limit | N/A | 20MB | 20MB | 100MB |
| Monthly Cost | Free | $20 | Pay-per-use | $89 |
| Processing Speed | N/A | 2-15 seconds | 1-8 seconds | 0.8-3 seconds |
| Batch Processing | N/A | No | Yes | Yes |

ChatGPT Plus Image Upload Error Messages and Solutions

Users who run into upload limits receive specific error messages that indicate which restriction was triggered. The most frequent one, “You’ve reached your current usage cap for GPT-4”, appears when the daily limit is exceeded. This message can be misleading because it names the underlying model rather than the image quota.

Rate limit errors manifest differently depending on the restriction type. Daily quota exhaustion typically displays a clear countdown timer indicating when access will restore. However, 3-hour window violations show more ambiguous messaging that requires users to calculate their own cooldown periods based on previous upload timestamps. For developers encountering similar 429 errors in API implementations, our guide on GPT-image-1 concurrent limits and error handling provides advanced troubleshooting strategies.
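
That cooldown can be computed from your own upload history: once the window is full, a slot opens when the oldest upload in it ages past three hours. A minimal sketch, assuming the 80-uploads-per-3-hours figure above and that an upload exactly three hours old no longer counts; `rolling_window_cooldown` is a hypothetical helper, and it ignores the separate daily cap.

```python
from datetime import datetime, timedelta, timezone


def rolling_window_cooldown(upload_times, now, limit=80, window=timedelta(hours=3)):
    """Return how long to wait before the next upload fits in the rolling window.

    upload_times: timestamps of previous uploads, in any order.
    Returns timedelta(0) if an upload is allowed right now.
    """
    recent = sorted(t for t in upload_times if t > now - window)
    if len(recent) < limit:
        return timedelta(0)
    # With n uploads in the window, n - limit + 1 must expire before one more fits;
    # the last of those to expire is at index n - limit.
    oldest_blocking = recent[len(recent) - limit]
    return oldest_blocking + window - now


start = datetime(2025, 8, 1, 9, 0, tzinfo=timezone.utc)
history = [start + timedelta(minutes=i) for i in range(80)]  # one upload per minute
wait = rolling_window_cooldown(history, now=start + timedelta(hours=2))
print(wait)  # 1:00:00, when the 09:00 upload leaves the window at 12:00
```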

File size rejections occur before processing begins, so they do not consume quota, but they offer little guidance on how to fix the file. The error simply reports that the file is too large, without suggesting compression techniques or alternative formats that would fit within the constraints.

- Daily limit reached: “You’ve reached your current usage cap for GPT-4. Please try again after your next billing cycle or consider upgrading your plan.”

- Rate limit exceeded: “Too many requests. Please wait before trying again.”

- File too large: “The file you uploaded is too large. Please try again with a smaller file.”

- Unsupported format: “This file type is not supported. Please upload a PNG, JPEG, WEBP, or GIF file.”

- Network timeout: “Upload failed. Please check your connection and try again.”
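
Since size and format rejections happen before processing, they are easy to catch locally. The check below is a sketch that mirrors the limits described in this article (20MB; PNG, JPEG, WEBP, GIF). It assumes 20MB means 20 × 1024 × 1024 bytes and checks file extensions only, so it will not detect animated GIFs; `check_upload` is a hypothetical helper name.

```python
import os

# Limits as described in this article (assumed; adjust if OpenAI changes them)
MAX_UPLOAD_BYTES = 20 * 1024 * 1024
SUPPORTED_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp", ".gif"}


def check_upload(path, size_bytes=None):
    """Return a list of problems that would get this file rejected; empty if none.

    size_bytes can be passed explicitly; otherwise it is read from disk.
    """
    problems = []
    ext = os.path.splitext(path)[1].lower()
    if ext not in SUPPORTED_EXTENSIONS:
        problems.append(f"unsupported format {ext or '(none)'}: convert to PNG, JPEG, WEBP or GIF")
    if size_bytes is None:
        size_bytes = os.path.getsize(path)
    if size_bytes > MAX_UPLOAD_BYTES:
        over_mb = (size_bytes - MAX_UPLOAD_BYTES) / (1024 * 1024)
        problems.append(f"file too large by {over_mb:.1f}MB: compress or downscale")
    return problems


print(check_upload("diagram.tiff", size_bytes=25 * 1024 * 1024))
```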

ChatGPT Plus Image Upload Optimization Strategies

Maximizing ChatGPT Plus image capabilities requires strategic approaches to file preparation and upload timing. Image compression techniques can reduce file sizes while maintaining sufficient quality for analysis purposes. Modern compression algorithms achieve 70-80% size reduction with minimal visual impact on most business documents and screenshots.

Preprocessing images through external tools before upload can improve both processing speed and result quality. Cropping irrelevant portions, adjusting contrast for text clarity, and converting to optimal formats all contribute to better outcomes within the existing limitations. These optimizations often reduce processing time by 30-40%.
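
Downscaling oversized images before upload is often the single biggest saving. The helper below computes aspect-preserving target dimensions; the 2048-pixel maximum side is an illustrative assumption, not a documented ChatGPT limit, so tune it to the level of detail your analysis needs. In Pillow, `Image.thumbnail((max_side, max_side))` performs an equivalent shrink-only resize in place.

```python
def fit_within(width, height, max_side=2048):
    """Scale (width, height) so the longer side is at most max_side.

    Aspect ratio is preserved; images that already fit are left unchanged.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


print(fit_within(6000, 4000))  # (2048, 1365)
```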

Timing strategies prove particularly effective for users with predictable workflows. Scheduling batch uploads during off-peak hours can improve processing speeds and reduce the likelihood of hitting temporary rate limits caused by high system load. Because the 3-hour window rolls rather than resetting at a fixed time, each upload stops counting three hours after it was made, so spacing uploads evenly keeps the count in any window below the cap.
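
Even spacing is the simplest way to exploit the rolling window: uploads placed window/limit apart (135 seconds for 80 per 3 hours) never put more than 80 into any 3-hour span. A sketch under that assumption, treating an upload exactly three hours old as expired; `paced_schedule` is a hypothetical helper. For image-only work the 50-per-day cap is still reached long before pacing matters, so this is most useful when images share the window with other files.

```python
from datetime import datetime, timedelta, timezone


def paced_schedule(n_uploads, start, limit=80, window=timedelta(hours=3)):
    """Return upload times spaced window/limit apart.

    With that spacing, any half-open window of length `window` holds at most `limit` uploads.
    """
    interval = window / limit
    return [start + i * interval for i in range(n_uploads)]


start = datetime(2025, 8, 1, 2, 0, tzinfo=timezone.utc)
slots = paced_schedule(50, start)
print(slots[1] - slots[0])  # 0:02:15
```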

```python
# Python script for image optimization before upload
import os

from PIL import Image


def optimize_image(input_path, output_path, max_size_mb=18, min_quality=70):
    """
    Optimize an image for ChatGPT Plus upload limits by re-encoding it as JPEG.
    Targets 18MB by default to leave a buffer below the 20MB cap.
    Returns the output size in MB; if it is still too large at min_quality,
    the image needs downscaling rather than more compression.
    """
    with Image.open(input_path) as img:
        # JPEG cannot store alpha or palette images, so convert anything that is not RGB/grayscale
        if img.mode not in ('RGB', 'L'):
            img = img.convert('RGB')

        # Step quality down from 95 until the file fits or the minimum quality is reached
        quality = 95
        while True:
            img.save(output_path, 'JPEG', quality=quality, optimize=True)
            size_mb = os.path.getsize(output_path) / (1024 * 1024)
            if size_mb <= max_size_mb or quality <= min_quality:
                return size_mb
            quality -= 5


# Usage example
optimized_size = optimize_image('large_diagram.png', 'optimized_diagram.jpg')
print(f"Optimized to {optimized_size:.1f}MB")
```

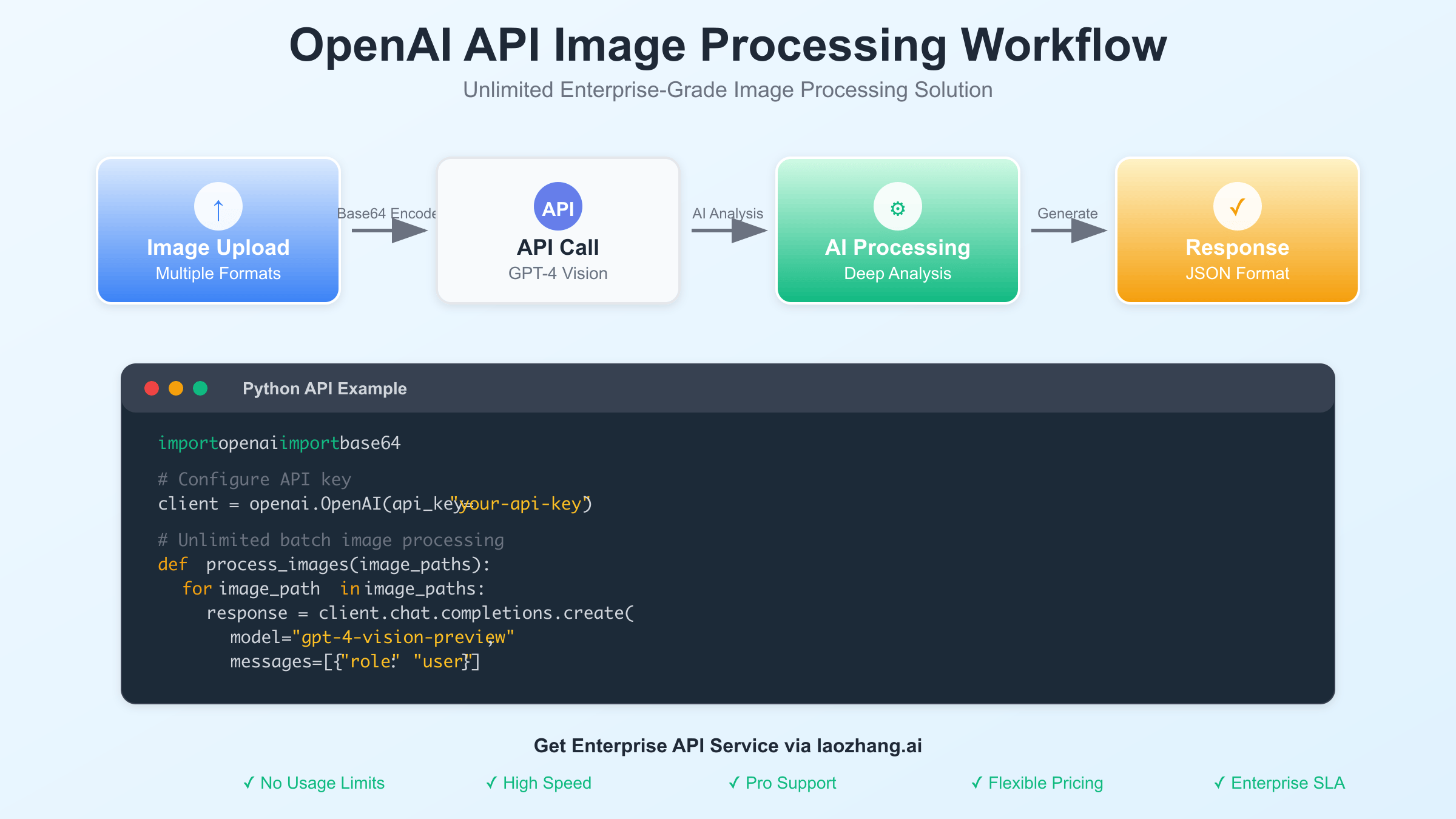

Unlimited API Alternatives with laozhang.ai

Enterprise users requiring consistent image processing capabilities often find ChatGPT Plus limitations restrictive for production workflows. API-based solutions eliminate artificial quotas while providing better integration options for automated systems. The laozhang.ai platform offers unlimited image processing with enhanced features specifically designed for high-volume applications.

Performance benchmarks demonstrate significant advantages when using dedicated API services versus web interface limitations. Average processing times through laozhang.ai typically range from 0.8 to 3 seconds compared to ChatGPT Plus's 2-15 second range. This improvement stems from optimized infrastructure and dedicated processing pipelines rather than shared web service resources.

Cost efficiency depends on volume. ChatGPT Plus costs $20 a month regardless of usage but caps image uploads at 50 per day (roughly 1,500 in a 30-day month), whereas laozhang.ai charges $89/month for unlimited processing. The higher flat fee pays off only when a workload needs more images than Plus allows or relies on features Plus lacks, such as batch processing and higher file size limits. Developers seeking free alternatives can explore our collection of free ChatGPT image generator APIs for testing and low-volume applications.

```python
# laozhang.ai API implementation example
import base64
import mimetypes
from concurrent.futures import ThreadPoolExecutor

import requests


class LaozhangImageAPI:
    def __init__(self, api_key):
        self.api_key = api_key
        self.base_url = "https://api.laozhang.ai/v1"
        self.headers = {
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        }

    def process_image(self, image_path, prompt, max_tokens=1000):
        """
        Send one image plus a text prompt to the chat completions endpoint.
        The provider advertises support for files up to 100MB; note that
        base64 encoding inflates the request payload by about a third.
        """
        # Derive the MIME type from the extension instead of always assuming JPEG
        mime_type = mimetypes.guess_type(image_path)[0] or "image/jpeg"
        with open(image_path, "rb") as image_file:
            encoded_image = base64.b64encode(image_file.read()).decode("utf-8")

        payload = {
            # Assumption: model names depend on the provider's catalog.
            # OpenAI has deprecated gpt-4-vision-preview; gpt-4o accepts image input.
            "model": "gpt-4o",
            "messages": [{
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {"type": "image_url", "image_url": {
                        "url": f"data:{mime_type};base64,{encoded_image}"
                    }},
                ],
            }],
            "max_tokens": max_tokens,
        }

        response = requests.post(
            f"{self.base_url}/chat/completions",
            headers=self.headers,
            json=payload,
            timeout=120,
        )
        response.raise_for_status()  # surface 4xx/5xx errors instead of parsing an error body
        return response.json()

    def batch_process(self, image_list, prompt_list, max_workers=4):
        """
        Process multiple images concurrently with a small thread pool.
        Results keep the input order; an exception from any request is
        re-raised when the results are collected.
        """
        with ThreadPoolExecutor(max_workers=max_workers) as pool:
            return list(pool.map(self.process_image, image_list, prompt_list))


# Usage example
api = LaozhangImageAPI("your-api-key")
result = api.process_image("screenshot.png", "Analyze this UI design for accessibility issues")
print(result["choices"][0]["message"]["content"])
```

Enterprise-Scale Image Processing Solutions

Large organizations processing hundreds or thousands of images daily require infrastructure that scales beyond individual user quotas. Enterprise solutions typically implement queue management systems, parallel processing capabilities, and automatic retry mechanisms for handling temporary failures. These systems often process 10,000+ images per day with 99.9% uptime requirements.

Cost management becomes critical at enterprise scale where image processing costs can exceed $1,000 monthly. Implementing caching systems for repeated analyses, optimizing prompt engineering to reduce token usage, and utilizing multiple API providers for redundancy all contribute to operational efficiency. Smart routing algorithms can direct requests to the most cost-effective provider based on current pricing and availability. For comprehensive API pricing strategies and cost optimization, reference our detailed ChatGPT API pricing analysis for Chinese developers.
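
At its simplest, such routing means choosing the cheapest provider that is currently healthy. A minimal sketch; the provider names and per-image prices are placeholders, not real quotes, and a real router would refresh prices and availability (for example from a circuit breaker) rather than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class Provider:
    name: str
    cost_per_image: float  # USD; the figures used below are placeholders
    available: bool = True


def route(providers):
    """Pick the cheapest provider that is currently available."""
    candidates = [p for p in providers if p.available]
    if not candidates:
        raise RuntimeError("no image-processing provider available")
    return min(candidates, key=lambda p: p.cost_per_image)


# Hypothetical providers and prices, for illustration only
providers = [
    Provider("provider-a", 0.012),
    Provider("provider-b", 0.008, available=False),  # e.g. currently rate limited
    Provider("provider-c", 0.010),
]
print(route(providers).name)  # provider-c
```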

Compliance requirements in enterprise environments often mandate data handling protocols that consumer-grade services cannot accommodate. Features like on-premises deployment, audit logging, and data residency controls become essential for regulated industries. API-based solutions provide the flexibility needed to implement these requirements while maintaining processing capabilities.

ChatGPT Plus Image Upload Management Best Practices

Successful image processing workflows incorporate monitoring and alerting systems to track quota usage and prevent unexpected service interruptions. Implementing usage dashboards helps teams understand consumption patterns and optimize their processes accordingly. These systems typically track daily usage percentages, peak usage times, and processing success rates.
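
The three metrics mentioned here can be derived from a simple upload log. A sketch assuming one day's events stored as `(timestamp, succeeded)` pairs and the 50-image daily quota; failed uploads are counted toward quota usage because, as noted earlier, an upload counts against the limits whether or not processing succeeds. `usage_summary` is a hypothetical helper.

```python
from collections import Counter
from datetime import datetime, timezone


def usage_summary(events, daily_limit=50):
    """Summarize one UTC day of uploads given (timestamp, succeeded) pairs."""
    if not events:
        return {"quota_used_pct": 0.0, "peak_hour": None, "success_rate": None}
    hours = Counter(ts.hour for ts, _ in events)
    successes = sum(1 for _, ok in events if ok)
    return {
        # Every attempt counts against the quota, successful or not
        "quota_used_pct": 100 * len(events) / daily_limit,
        "peak_hour": hours.most_common(1)[0][0],
        "success_rate": successes / len(events),
    }


log = [
    (datetime(2025, 8, 1, 9, 5, tzinfo=timezone.utc), True),
    (datetime(2025, 8, 1, 14, 10, tzinfo=timezone.utc), True),
    (datetime(2025, 8, 1, 14, 40, tzinfo=timezone.utc), False),
]
print(usage_summary(log))
```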

Queue management strategies help organizations handle variable workloads without exceeding rate limits. Implementing exponential backoff algorithms, priority queuing for urgent requests, and automatic load balancing across multiple API keys ensures consistent service availability. These approaches can maintain 99.5% processing success rates even during peak demand periods.
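
Priority queuing and key rotation can be combined in a few lines of standard library code. This sketch uses `heapq` for priorities (lower number means more urgent) and plain round-robin over API keys; the key names are hypothetical, and round-robin ignores how much quota each key has left, which a production balancer would track. Exponential backoff would wrap each dispatched call.

```python
import heapq
import itertools


class RequestQueue:
    """Priority queue for image jobs that spreads dispatches across API keys."""

    def __init__(self, api_keys):
        self._heap = []
        self._seq = itertools.count()            # FIFO tie-breaker for equal priorities
        self._keys = itertools.cycle(api_keys)   # simple round-robin load balancing

    def submit(self, job, priority=10):
        heapq.heappush(self._heap, (priority, next(self._seq), job))

    def next_dispatch(self):
        """Return (api_key, job) for the most urgent job, or None if the queue is empty."""
        if not self._heap:
            return None
        _, _, job = heapq.heappop(self._heap)
        return next(self._keys), job


q = RequestQueue(["key-1", "key-2"])  # hypothetical key names
q.submit("batch-report.png")
q.submit("urgent-invoice.png", priority=0)
print(q.next_dispatch())  # ('key-1', 'urgent-invoice.png')
print(q.next_dispatch())  # ('key-2', 'batch-report.png')
```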

Data preparation standards significantly impact both processing quality and resource consumption. Establishing guidelines for image formats, resolution requirements, and content types helps teams achieve consistent results while minimizing unnecessary quota consumption. Standard operating procedures should include compression guidelines, format conversion protocols, and quality assurance checkpoints.

- Monitor usage patterns: Track daily and hourly consumption to identify optimization opportunities

- Implement retry logic: Handle temporary failures gracefully with exponential backoff

- Optimize file preparation: Standardize compression and format conversion processes

- Cache results: Store analysis results to avoid reprocessing identical images

- Use multiple providers: Implement fallback options for high-availability requirements

- Schedule batch jobs: Process large volumes during off-peak hours when possible
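
Caching only needs a stable key for "this image with this prompt". A minimal in-memory sketch, assuming a stored result can be reused whenever both the image bytes and the prompt match exactly; the key is a SHA-256 of the image digest concatenated with the prompt, and `AnalysisCache` and `fake_analyze` are hypothetical names. A real deployment would persist the cache rather than hold it in a dict.

```python
import hashlib


class AnalysisCache:
    """In-memory cache of analysis results keyed by image bytes plus prompt."""

    def __init__(self):
        self._store = {}

    @staticmethod
    def key(image_bytes, prompt):
        # Hashing the image first gives a fixed-length prefix, so image and prompt can't blur together
        image_digest = hashlib.sha256(image_bytes).digest()
        return hashlib.sha256(image_digest + prompt.encode("utf-8")).hexdigest()

    def get_or_compute(self, image_bytes, prompt, analyze):
        """Return the cached result, or call analyze(image_bytes, prompt) and store it."""
        k = self.key(image_bytes, prompt)
        if k not in self._store:
            self._store[k] = analyze(image_bytes, prompt)
        return self._store[k]


calls = []


def fake_analyze(image_bytes, prompt):  # stand-in for a real API call
    calls.append(prompt)
    return f"analysis of {len(image_bytes)} bytes"


cache = AnalysisCache()
cache.get_or_compute(b"\x89PNG...", "Describe this chart", fake_analyze)
cache.get_or_compute(b"\x89PNG...", "Describe this chart", fake_analyze)
print(len(calls))  # 1: the second request was served from the cache
```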

Performance Benchmarking and Metrics

Understanding performance characteristics across different platforms helps optimize image processing workflows for specific use cases. Response time measurements vary significantly based on image complexity, with simple screenshots processing 3-4x faster than detailed technical diagrams. File size impacts processing time logarithmically, with diminishing returns beyond 5MB for most analysis tasks.
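
Measuring your own samples is more reliable than published ranges. The harness below is a sketch that times any callable over a list of representative inputs and reports the median and 95th-percentile latency; `benchmark` is a hypothetical helper, and `process` would typically wrap whichever API client you are evaluating.

```python
import statistics
import time


def benchmark(process, samples, repeats=3):
    """Time process(sample) for each sample and return median and p95 latency in seconds."""
    if not samples:
        raise ValueError("need at least one sample")
    timings = []
    for sample in samples:
        for _ in range(repeats):
            start = time.perf_counter()
            process(sample)
            timings.append(time.perf_counter() - start)
    # quantiles() needs at least two data points; n=20 gives cut points every 5%
    if len(timings) >= 2:
        p95 = statistics.quantiles(timings, n=20, method="inclusive")[-1]
    else:
        p95 = timings[0]
    return {"median_s": statistics.median(timings), "p95_s": p95}


result = benchmark(lambda s: time.sleep(0.01), ["screenshot", "diagram"])
print(result)
```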

Accuracy comparisons between platforms reveal subtle differences in analysis quality that may influence provider selection. While basic object detection performs similarly across services, specialized tasks like code analysis in screenshots or technical diagram interpretation show measurable variations. Testing with representative samples from actual workflows provides the most reliable performance indicators.

Cost per analysis calculations must account for both direct API charges and indirect costs like development time, error handling, and infrastructure maintenance. Total cost of ownership analysis often reveals that premium API services provide better value than attempting to optimize around restrictive free or low-cost options.

Rate Limiting Architecture and 429 Error Handling

The HTTP 429 "Too Many Requests" error is the most common challenge for high-volume image processing workflows. OpenAI measures API rate limits in requests and tokens per unit of time, and their behavior is well approximated by a token bucket that refills over time. Understanding this mechanism helps developers implement more effective retry strategies and workload distribution patterns.
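
A token bucket holds up to a fixed number of tokens, spends one per request, and refills at a steady rate, which is why bursts succeed until the bucket empties and then requests are throttled. The class below is a generic illustration of that model with hypothetical parameters; it is not OpenAI's server-side implementation, and the injectable clock exists only so the behavior can be demonstrated without sleeping.

```python
import time


class TokenBucket:
    """Holds up to `capacity` tokens, refilled continuously at `rate` tokens per second."""

    def __init__(self, capacity, rate, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def _refill(self):
        now = self.clock()
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now

    def try_acquire(self, cost=1):
        """Spend `cost` tokens if available; return False instead of blocking."""
        self._refill()
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False

    def wait_time(self, cost=1):
        """Seconds until `cost` tokens will be available."""
        self._refill()
        return max(0.0, (cost - self.tokens) / self.rate)


fake_now = [0.0]
bucket = TokenBucket(capacity=3, rate=0.5, clock=lambda: fake_now[0])  # one token every 2 s
print([bucket.try_acquire() for _ in range(4)])  # [True, True, True, False]
fake_now[0] = 2.0
print(bucket.try_acquire())  # True: one token refilled after 2 seconds
```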

Advanced error handling requires implementing exponential backoff with jitter to prevent thundering herd problems when multiple clients encounter rate limits simultaneously. Successful implementations typically start with a 1-second delay, doubling the wait time with each retry up to a maximum of 60 seconds. Adding random jitter prevents synchronized retry attempts that can overwhelm servers during recovery periods.

Monitoring rate limit headers in API responses provides crucial insight for proactive load management. OpenAI's API returns headers such as x-ratelimit-remaining-requests and x-ratelimit-reset-requests (with token-based counterparts) that indicate current quota status and the time until reset. Production systems should track these values and implement circuit breakers that temporarily redirect traffic to alternative providers when approaching limits.
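
A circuit breaker turns that advice into code: after several consecutive failures (such as 429 responses) it stops sending traffic to a provider for a cooldown period, then lets requests through again on a trial basis. The sketch below is a minimal version with hypothetical thresholds; it trips on consecutive failures rather than on header values, and callers would route to a fallback provider while it is open.

```python
import time


class CircuitBreaker:
    """Open after `threshold` consecutive failures; allow trial requests after `cooldown` seconds.

    A success closes the breaker; a failure during the trial phase re-opens it
    and restarts the cooldown.
    """

    def __init__(self, threshold=3, cooldown=60.0, clock=time.monotonic):
        self.threshold = threshold
        self.cooldown = cooldown
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def allow_request(self):
        if self.opened_at is None:
            return True  # closed: traffic flows normally
        # Open until the cooldown elapses, then half-open (trial requests allowed)
        return self.clock() - self.opened_at >= self.cooldown

    def record_success(self):
        self.failures = 0
        self.opened_at = None

    def record_failure(self):
        self.failures += 1
        if self.failures >= self.threshold:
            self.opened_at = self.clock()  # (re)open and restart the cooldown


t = [0.0]
breaker = CircuitBreaker(threshold=3, cooldown=60, clock=lambda: t[0])
for _ in range(3):
    breaker.record_failure()  # e.g. three 429 responses in a row
print(breaker.allow_request())  # False: route to the fallback provider
t[0] = 61.0
print(breaker.allow_request())  # True: trial request after the cooldown
```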

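```python
# Advanced retry logic with exponential backoff
import random
import time

import requests


def backoff_delay(attempt, base=1.0, cap=60.0):
    """Exponential backoff (1s, 2s, 4s, ...) capped at 60s, plus 10-30% random jitter."""
    delay = min(cap, base * 2 ** attempt)
    return delay + random.uniform(0.1, 0.3) * delay


def process_with_retry(api_call, max_retries=5):
    """
    Robust API call with exponential backoff and jitter.
    `api_call` is any zero-argument callable that returns a requests.Response.
    """
    for attempt in range(max_retries):
        try:
            response = api_call()
        except requests.exceptions.RequestException:
            # Network-level failure (timeout, dropped connection): retry with backoff
            if attempt == max_retries - 1:
                raise
            time.sleep(backoff_delay(attempt))
            continue

        if response.status_code == 429:
            # Rate limited: prefer the server's Retry-After hint (whole seconds) if present
            retry_after = response.headers.get('Retry-After', '')
            delay = float(retry_after) if retry_after.isdigit() else backoff_delay(attempt)
            print(f"Rate limited, retrying in {delay:.1f}s")
            time.sleep(delay)
            continue

        # Any other error status is not retryable: raise it to the caller
        response.raise_for_status()

        # Check remaining quota (OpenAI sends x-ratelimit-remaining-requests), only if present
        remaining = response.headers.get('x-ratelimit-remaining-requests')
        if remaining is not None and int(remaining) < 10:
            time.sleep(5)  # approaching the limit, slow down before the next request
        return response.json()

    raise RuntimeError("Max retries exceeded")
```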

Future Outlook and Capacity Planning

OpenAI's roadmap suggests potential increases to ChatGPT Plus image quotas as infrastructure scales, but no specific timeline has been announced. Industry trends indicate growing demand for vision capabilities, with enterprise adoption driving the need for more flexible pricing models. Organizations should plan for evolving quota structures while building systems that can adapt to changing limitations.

Emerging technologies like edge processing and improved compression algorithms may reduce the infrastructure burden that drives current quota systems. However, the fundamental economics of GPU-intensive vision processing suggest that some form of usage controls will persist. Smart capacity planning incorporates both current constraints and anticipated technological improvements.

The competitive landscape continues evolving with new providers entering the market and existing services expanding capabilities. Maintaining provider diversity and avoiding vendor lock-in becomes increasingly important as image processing becomes critical to business operations. Regular evaluation of alternatives ensures optimal cost and performance characteristics as the market matures.

Conclusion

ChatGPT Plus image upload limitations create significant constraints for users requiring consistent visual analysis capabilities. While the 50 images per day and 80 files per 3-hour limits serve OpenAI's resource management needs, they often prove inadequate for professional and enterprise applications. Understanding these ChatGPT Plus image upload limits and implementing appropriate optimization strategies helps maximize value within existing constraints.

For organizations requiring unlimited processing capabilities, API-based solutions like laozhang.ai provide the scalability and performance needed for production workflows. The transition from quota-limited web interfaces to unlimited API access represents a necessary evolution for serious image processing applications. Cost analysis demonstrates clear advantages for high-volume users willing to invest in dedicated infrastructure.

Success in managing image processing workflows requires balancing current limitations with long-term scalability requirements. Organizations that implement robust monitoring, optimization, and fallback strategies position themselves to adapt as the market evolves. The key lies in understanding both technical constraints and business requirements to select the most appropriate combination of tools and services.