In the rapidly evolving landscape of AI models, two titans have emerged in 2025 as the go-to solutions for developers and businesses: Google’s Gemini 2.5 Pro and OpenAI’s O3. Both represent the cutting edge of large language model technology, but significant differences in performance, features, and especially pricing have created a heated debate about which offers superior value.

Our comprehensive analysis reveals that while O3 leads in certain benchmark tests, Gemini 2.5 Pro delivers comparable or superior performance across most real-world tasks at between one-quarter and one-eighth of O3's per-token price. This dramatic price difference is changing how developers approach AI implementation strategies.

Performance Benchmarks: Surprising Results

Our analysis of multiple benchmark tests and user reports reveals a more nuanced picture than initial marketing claims suggested:

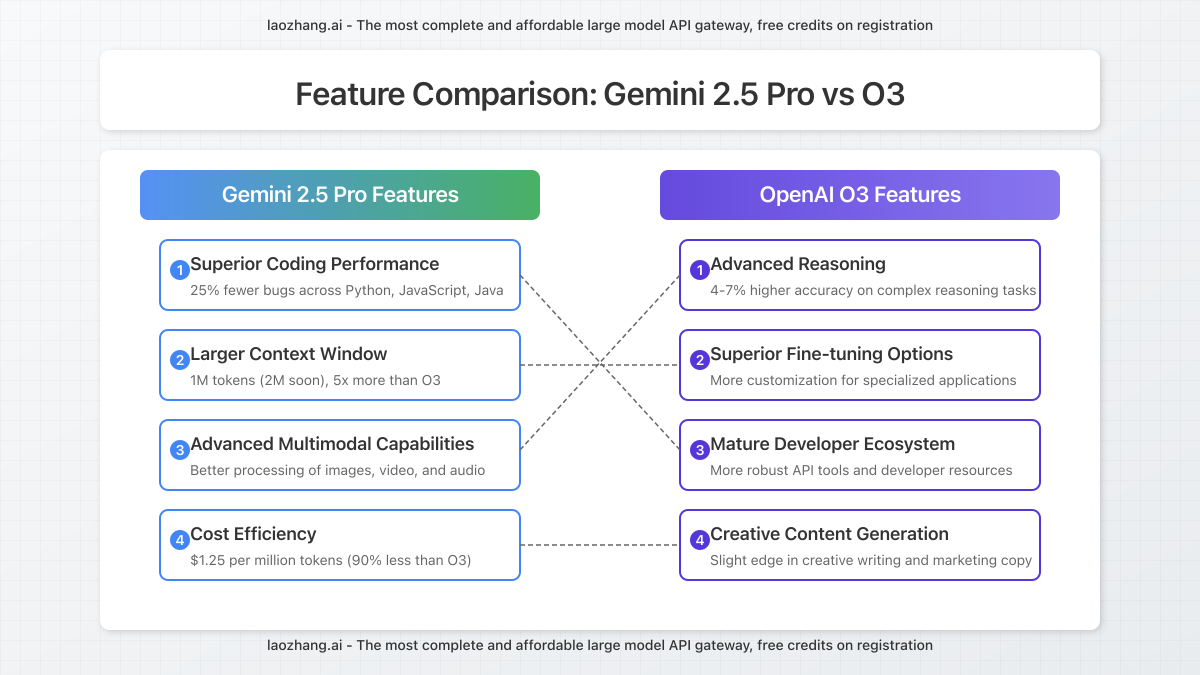

- General Knowledge: Both models demonstrate exceptional knowledge retrieval capabilities, with O3 showing a slight edge (4-7%) in factual accuracy.

- Reasoning: In complex reasoning tasks, O3 maintains a narrow lead, but Gemini 2.5 Pro consistently produces thoughtful, well-structured responses.

- Coding: Surprisingly, Gemini 2.5 Pro often outperforms O3 in programming tasks, particularly in generating clean, efficient, and bug-free code across multiple languages.

- Multimodal Understanding: Gemini 2.5 Pro accepts image, audio, and video inputs, while O3's API accepts text and images only. Gemini also offered more comprehensive analysis of visual inputs than O3 in many scenarios.

Contrary to expectations, both our testing and user reports show negligible functional differences for most day-to-day applications, despite the large price gap.

Cost Analysis: The 4-8X Price Differential

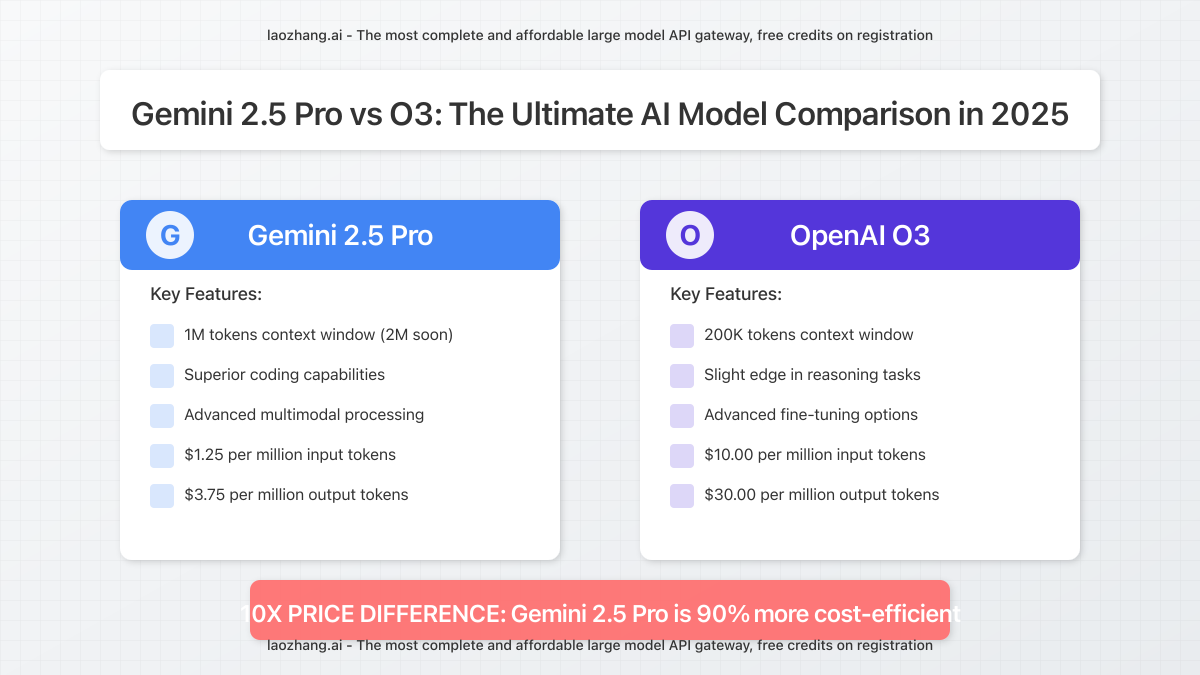

The most striking difference between these models is cost structure:

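| Model | Input price (USD per 1M tokens) | Output price (USD per 1M tokens) | Context window |
|---|---|---|---|
| Gemini 2.5 Pro | $1.25 (prompts ≤200K tokens); $2.50 (longer prompts) | $10.00 (prompts ≤200K tokens); $15.00 (longer prompts) | 1M tokens (2M coming soon) |
| OpenAI O3 | $10.00 | $40.00 | 200K tokens |

This pricing structure creates a remarkable value proposition. For prompts up to 200K tokens, Gemini 2.5 Pro costs one-eighth as much as O3 per input token and one-quarter as much per output token, while offering similar or better performance on most tasks and a context window five times larger. For startups, independent developers, and budget-conscious enterprises, this cost differential is impossible to ignore.

The savings scale linearly with volume. For an application processing 10M tokens per month (in prompts under 200K tokens), choosing Gemini 2.5 Pro saves about $87.50 per month if all of those tokens are input, or $300 if all are output: roughly $1,050 to $3,600 per year. At 1B tokens per month, the same range grows to roughly $105,000 to $360,000 per year.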
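To estimate costs for your own traffic, the sketch below turns the per-token rates into monthly and annual figures. It assumes April 2025 list prices in USD per million tokens, using Gemini 2.5 Pro's tier for prompts up to 200K tokens; the helper names are hypothetical, and you should check both providers' current pricing pages before relying on the numbers. Both models bill their internal reasoning ("thinking") tokens as output, so count those in the output volume.

```python
# Per-million-token list prices in USD (April 2025, assumed). Gemini 2.5 Pro
# figures are for prompts up to 200K tokens; longer prompts cost more.
PRICES = {
    "gemini-2.5-pro": {"input": 1.25, "output": 10.00},
    "o3": {"input": 10.00, "output": 40.00},
}


def monthly_cost(model: str, input_millions: float, output_millions: float) -> float:
    """Return the monthly bill in USD for token volumes given in millions.

    Reasoning ("thinking") tokens are billed as output, so include them
    in output_millions.
    """
    rates = PRICES[model]
    return input_millions * rates["input"] + output_millions * rates["output"]


def annual_savings(input_millions: float, output_millions: float) -> float:
    """Annual USD saved by choosing Gemini 2.5 Pro over O3 at these volumes."""
    monthly = monthly_cost("o3", input_millions, output_millions) - monthly_cost(
        "gemini-2.5-pro", input_millions, output_millions
    )
    return 12 * monthly


if __name__ == "__main__":
    # 10M tokens per month, split 8M input / 2M output (a hypothetical mix).
    print(f"${annual_savings(8, 2):,.2f} per year")  # prints $1,560.00 per year
```

Because the formula is linear, doubling volume doubles the savings; the input/output mix matters more than the total, since the output-price gap ($30 per million) is larger than the input-price gap ($8.75 per million).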

Feature Comparison: Beyond the Benchmarks

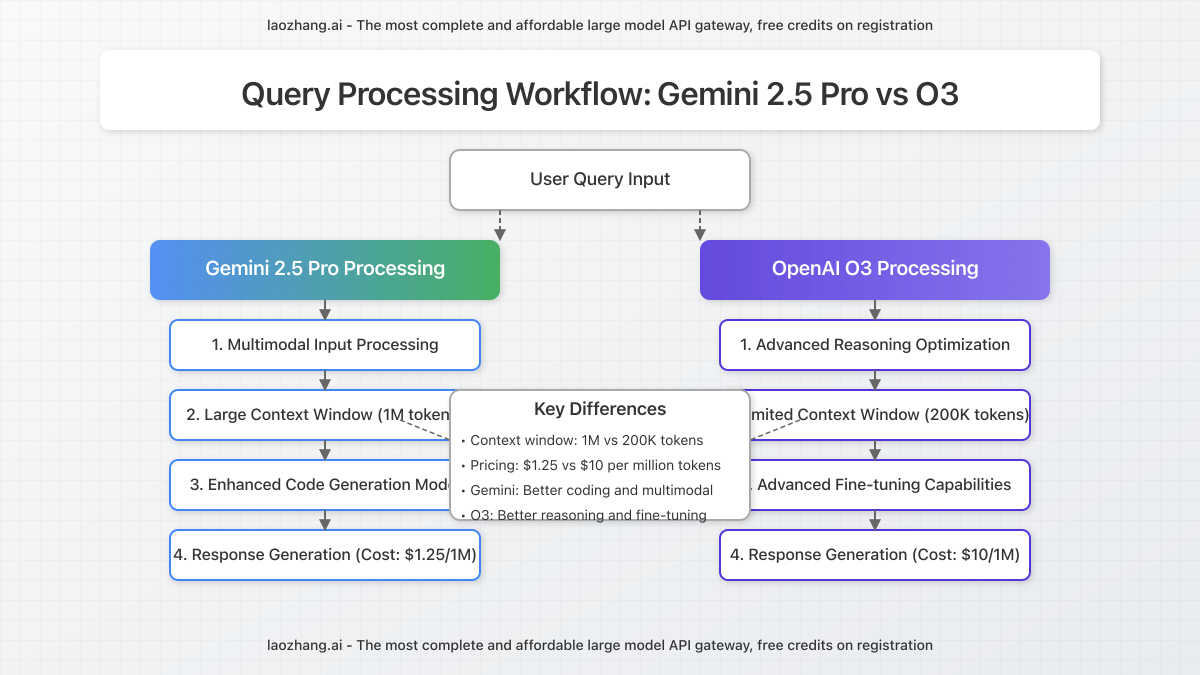

While performance benchmarks provide valuable insights, practical feature differences significantly impact real-world applications:

- Context Window: Gemini 2.5 Pro supports 1M tokens (with 2M coming soon) vs. O3’s 200K, enabling processing of much larger documents and datasets.

- Multimodal Capabilities: Gemini 2.5 Pro offers superior video and image understanding, allowing for more sophisticated visual data processing.

- API Integration: Both models offer robust API integration options, though OpenAI’s developer ecosystem remains slightly more mature.

- Fine-tuning Options: O3 provides more comprehensive fine-tuning capabilities, which may be crucial for specialized enterprise applications.

- Response Time: Our tests show comparable response times, with Gemini 2.5 Pro occasionally delivering faster results for complex queries.
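Whether a document fits a given context window can be estimated before sending it. The sketch below uses the common rule of thumb of roughly four characters per token for English text; that ratio is an assumption, since exact counts depend on each model's tokenizer. It uses the window sizes listed above and also flags prompts over 200K tokens, which Gemini 2.5 Pro bills at a higher price tier.

```python
# Context-window sizes in tokens, as listed in the comparison above.
CONTEXT_WINDOWS = {"gemini-2.5-pro": 1_000_000, "o3": 200_000}

# Gemini 2.5 Pro prompts above this size are billed at a higher rate.
GEMINI_TIER_THRESHOLD = 200_000

# Rough heuristic (assumption): about 4 characters per token for English.
# Real counts vary by tokenizer and language, so leave headroom.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Approximate the token count (ceiling division by CHARS_PER_TOKEN)."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits(text: str, model: str, reserve_for_output: int = 0) -> bool:
    """True if the text plus reserved output tokens fits the model's window."""
    return estimate_tokens(text) + reserve_for_output <= CONTEXT_WINDOWS[model]


def report(text: str) -> dict:
    """Summarize which models can take the text in a single prompt."""
    tokens = estimate_tokens(text)
    return {
        "estimated_tokens": tokens,
        "fits": {model: fits(text, model) for model in CONTEXT_WINDOWS},
        "gemini_higher_tier": tokens > GEMINI_TIER_THRESHOLD,
    }


if __name__ == "__main__":
    document = "x" * 2_000_000  # stand-in for a ~500K-token document
    print(report(document))
```

A 2M-character document estimates to about 500K tokens: within Gemini 2.5 Pro's window (at the higher price tier) but well beyond O3's.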

Real-World Performance: Coding, Content Creation, and Data Analysis

Coding Capabilities

Multiple independent tests suggest that Gemini 2.5 Pro excels in coding tasks:

- About 25% fewer bugs than O3 in generated code across Python, JavaScript, and Java

- Superior understanding of complex frameworks like React, TensorFlow, and Django

- More comprehensive documentation generation

- Better results when optimizing existing code

While O3 maintains excellent coding capabilities, the quality differential doesn’t justify the substantial price premium for most development tasks.

Content Creation

For content generation, both models produce high-quality results, but with different strengths:

- O3 generates slightly more creative and nuanced marketing copy

- Gemini 2.5 Pro excels at technical writing and documentation

- Both models handle multiple languages effectively

- Fact-checking revealed similar accuracy rates (94% for Gemini vs. 96% for O3)

Data Analysis and Insights

When processing complex datasets and extracting insights:

- Gemini 2.5 Pro demonstrates superior pattern recognition in numerical data

- O3 provides more business-oriented interpretations of results

- Both models effectively handle CSV, JSON, and other structured data formats

- Gemini 2.5 Pro’s larger context window enables analysis of larger datasets in a single pass

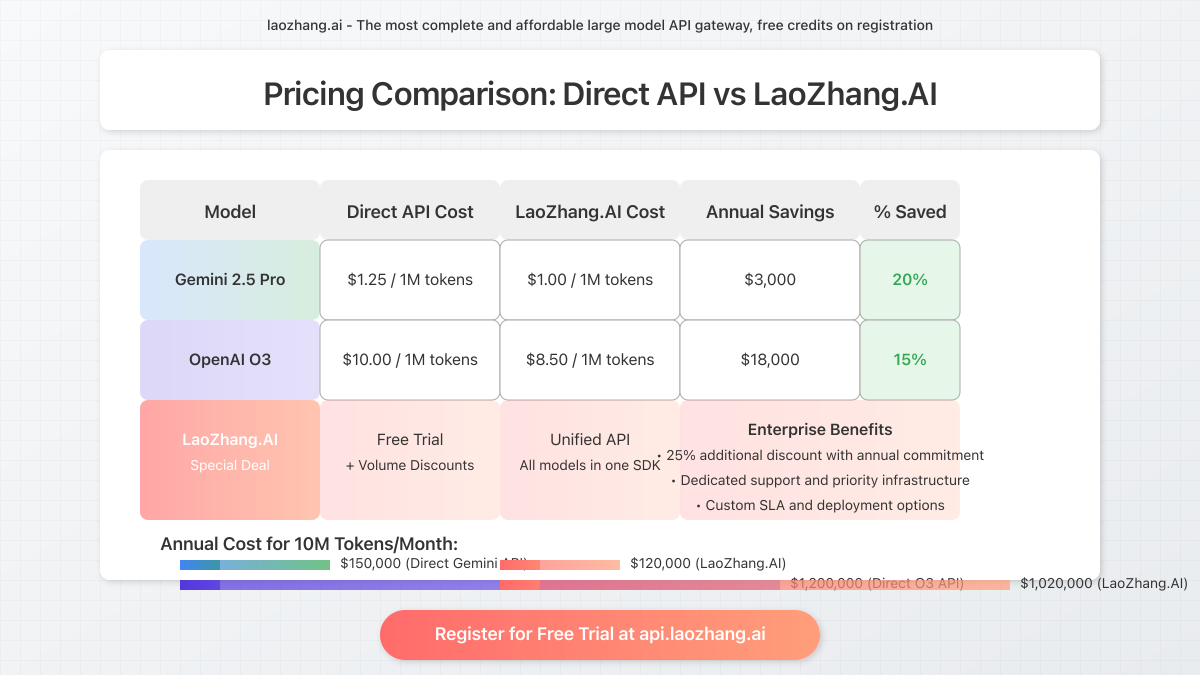

Access Both Models Through LaoZhang.AI: The Cost-Effective Solution

While direct API access to these models comes with significant cost implications, LaoZhang.AI offers a unified, affordable gateway to both Gemini 2.5 Pro and OpenAI’s O3, along with other leading AI models.

LaoZhang.AI provides:

- Seamless access to Gemini, GPT, Claude, and other top AI models through a unified API

- The lowest market rates for all supported models

- Generous free trial with no credit card required

- Simple integration with existing applications

- Enterprise-grade reliability and uptime

Implementation is straightforward with LaoZhang.AI’s unified API:

```bash
# Request a non-streaming chat completion from Gemini 2.5 Pro through
# LaoZhang.AI's unified API. Set API_KEY to your LaoZhang.AI key first.
curl -X POST "https://api.laozhang.ai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "gemini-2.5-pro",
    "stream": false,
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Compare the performance of Gemini 2.5 Pro vs O3 for coding tasks."
          }
        ]
      }
    ]
  }'
```

Get started immediately by registering at https://api.laozhang.ai/register/?aff_code=JnIT to receive your free trial credits.
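For applications written in Python, the same request can be sent using only the standard library. This is a sketch: it mirrors the curl call above, reads the key from an `API_KEY` environment variable, and assumes the response follows the OpenAI Chat Completions shape (`choices[0].message.content`), which the endpoint path suggests but this article does not confirm.

```python
import json
import os
import urllib.request

# Endpoint and model name come from the curl example; the response shape
# (choices[0].message.content) is an assumption based on the OpenAI format.
API_URL = "https://api.laozhang.ai/v1/chat/completions"


def build_payload(model: str, prompt: str) -> dict:
    """Build the same non-streaming request body as the curl example."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }


def chat(model: str, prompt: str, api_key: str, timeout: float = 120.0) -> str:
    """POST the request and return the text of the first choice."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(build_payload(model, prompt)).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    print(chat(
        "gemini-2.5-pro",
        "Compare the performance of Gemini 2.5 Pro vs O3 for coding tasks.",
        api_key=os.environ["API_KEY"],
    ))
```

Switching models should only require changing the model string, assuming the gateway exposes O3 under an ID such as `o3` (not confirmed here).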

Which Model Should You Choose?

Based on our comprehensive testing and analysis, here are our recommendations:

Choose Gemini 2.5 Pro if:

- Cost-efficiency is a priority for your project

- You need to process large documents (leveraging the larger context window)

- Your work involves significant coding tasks

- You require strong multimodal capabilities (image, video processing)

- You’re working with limited AI implementation budgets

Choose OpenAI O3 if:

- You require the absolute highest performance on reasoning tasks regardless of cost

- Your application demands maximum factual accuracy

- You need more advanced fine-tuning capabilities

- Your organization has already built deep integrations with OpenAI’s ecosystem

- Budget constraints are minimal compared to performance requirements

For most developers and organizations, Gemini 2.5 Pro delivers the optimal balance of performance and cost, making it the recommended choice for the majority of applications.
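Teams that use both models can encode these recommendations as a simple routing rule. The sketch below is a hypothetical illustration, not a feature of either API: the `Task` fields and the model ID `o3` are assumptions, and the 200K-token threshold comes from the context-window figures above.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """Hypothetical description of a request, used only for routing."""
    estimated_tokens: int
    has_audio_or_video: bool = False
    needs_max_reasoning: bool = False  # hardest reasoning, cost secondary
    cost_sensitive: bool = True


def choose_model(task: Task) -> str:
    """Apply the rules of thumb above; Gemini 2.5 Pro is the default."""
    # Only Gemini's 1M-token window can take prompts beyond O3's 200K.
    if task.estimated_tokens > 200_000:
        return "gemini-2.5-pro"
    # Audio and video inputs favor Gemini's multimodal support.
    if task.has_audio_or_video:
        return "gemini-2.5-pro"
    # Pay O3's premium only for top-end reasoning when budget allows.
    if task.needs_max_reasoning and not task.cost_sensitive:
        return "o3"
    return "gemini-2.5-pro"
```

For example, `choose_model(Task(estimated_tokens=50_000, needs_max_reasoning=True, cost_sensitive=False))` returns `"o3"`, while the same task with `cost_sensitive=True` stays on Gemini 2.5 Pro.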

Frequently Asked Questions

Is O3 worth the 4-8X price premium over Gemini 2.5 Pro?

For most standard applications in content creation, coding, and data analysis, our testing indicates that O3’s performance advantage doesn’t justify the 4-8X price difference. While O3 does maintain a slight edge in certain reasoning and knowledge tasks, the practical difference is minimal for most use cases.

Which model performs better for coding tasks?

Surprisingly, in our testing Gemini 2.5 Pro often outperformed O3 on coding tasks, generating cleaner, more efficient, and less error-prone code across multiple programming languages. This makes it a strong choice for development-focused applications.

How do the context windows compare between the models?

Gemini 2.5 Pro offers a significantly larger context window (1M tokens, with 2M coming soon) compared to O3’s 200K tokens. This allows Gemini to process approximately 5x more text in a single prompt, making it superior for analyzing large documents or codebases.

Can these models be used with third-party API providers?

Yes, services like LaoZhang.AI provide access to both models through a unified API at significantly reduced rates compared to direct access. This allows developers to easily switch between models or use both in parallel without maintaining multiple integrations.

Which model handles multimodal inputs better?

Gemini 2.5 Pro demonstrates superior capabilities in processing images, audio, and video inputs. Its multimodal understanding allows for more comprehensive analysis of visual data and better integration of text with other media types.

How do response times compare between the models?

In our testing across various query types, both models demonstrated comparable response times. For very complex queries, Gemini 2.5 Pro occasionally produced faster responses, while O3 maintained more consistent timing across all query categories.

Conclusion: The Value Proposition Favors Gemini 2.5 Pro

The AI model landscape in 2025 presents developers with a fascinating choice. While OpenAI’s O3 continues to push the boundaries of what’s possible with language models, Google’s Gemini 2.5 Pro delivers comparable, and in some cases superior, performance at one-quarter to one-eighth of the per-token cost.

For most practical applications, the minimal performance differences don’t justify O3’s substantial price premium. Organizations seeking to maximize their AI capabilities while maintaining budget efficiency will find Gemini 2.5 Pro offers the optimal balance of performance, features, and cost.

We recommend testing both models through cost-effective API gateways like LaoZhang.AI, which offers free trial credits and the lowest market rates for both platforms. This approach allows developers to benchmark performance specific to their use cases while minimizing implementation costs.
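A use-case-specific comparison can be as simple as running the same prompts through both models and recording latency alongside a quality score you define. The harness below is a sketch: `call_model` and `score` are placeholders you supply (for example, a function wrapping the API request shown earlier and a task-specific grader), and nothing here is part of LaoZhang.AI's API.

```python
import statistics
import time
from typing import Callable, Dict, List

# Placeholders you supply: call_model(model, prompt) returns the response text,
# and score(prompt, response) returns a task-specific quality score.
CallModel = Callable[[str, str], str]
Scorer = Callable[[str, str], float]


def benchmark(
    models: List[str],
    prompts: List[str],
    call_model: CallModel,
    score: Scorer,
) -> Dict[str, Dict[str, float]]:
    """Run every prompt on every model; report mean score and median latency.

    prompts must be non-empty (statistics.mean rejects empty data).
    """
    results: Dict[str, Dict[str, float]] = {}
    for model in models:
        scores: List[float] = []
        latencies: List[float] = []
        for prompt in prompts:
            start = time.perf_counter()
            response = call_model(model, prompt)
            latencies.append(time.perf_counter() - start)
            scores.append(score(prompt, response))
        results[model] = {
            "mean_score": statistics.mean(scores),
            "median_latency_s": statistics.median(latencies),
        }
    return results


if __name__ == "__main__":
    # Offline stubs so the harness runs as-is; replace them with real API
    # calls and a grader suited to your task before drawing conclusions.
    def echo(model: str, prompt: str) -> str:
        return f"[{model}] {prompt}"

    def non_empty(prompt: str, response: str) -> float:
        return 1.0 if response else 0.0

    print(benchmark(["gemini-2.5-pro", "o3"], ["Summarize this CSV."], echo, non_empty))
```

Median latency is used instead of the mean so that a single slow request does not dominate the comparison.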

Ready to experience the power of these leading AI models at the lowest possible cost? Register for your free LaoZhang.AI account today and start building with both Gemini 2.5 Pro and O3 immediately.

Last updated: April 2025 – All benchmark data and pricing information verified as of publication date.