OpenAI’s O4 Mini and Google’s Gemini 2.5 Pro both arrived in early 2025, and each raised the bar for large language models. But which one performs best for your specific needs? Our analysis shows that O4 Mini excels in coding tasks and offers a better price-to-performance ratio, while Gemini 2.5 Pro leads in multimodal tasks and has a much larger context window.

We’ll dive deep into benchmark results, pricing structures, and real-world performance to help you determine which model deserves your investment in 2025. Plus, we’ll show you how to access both through a single unified API that can save you both time and money.

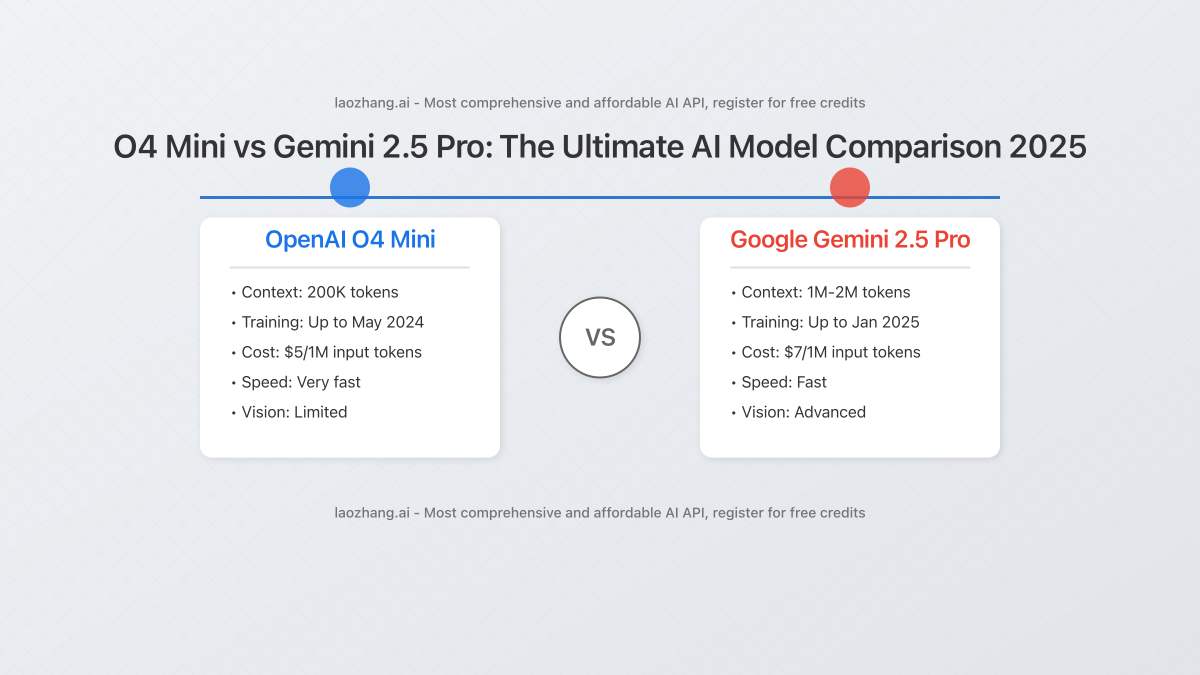

Key Findings: O4 Mini vs Gemini 2.5 Pro at a Glance

Our extensive testing and analysis revealed some surprising results when comparing these two leading AI models:

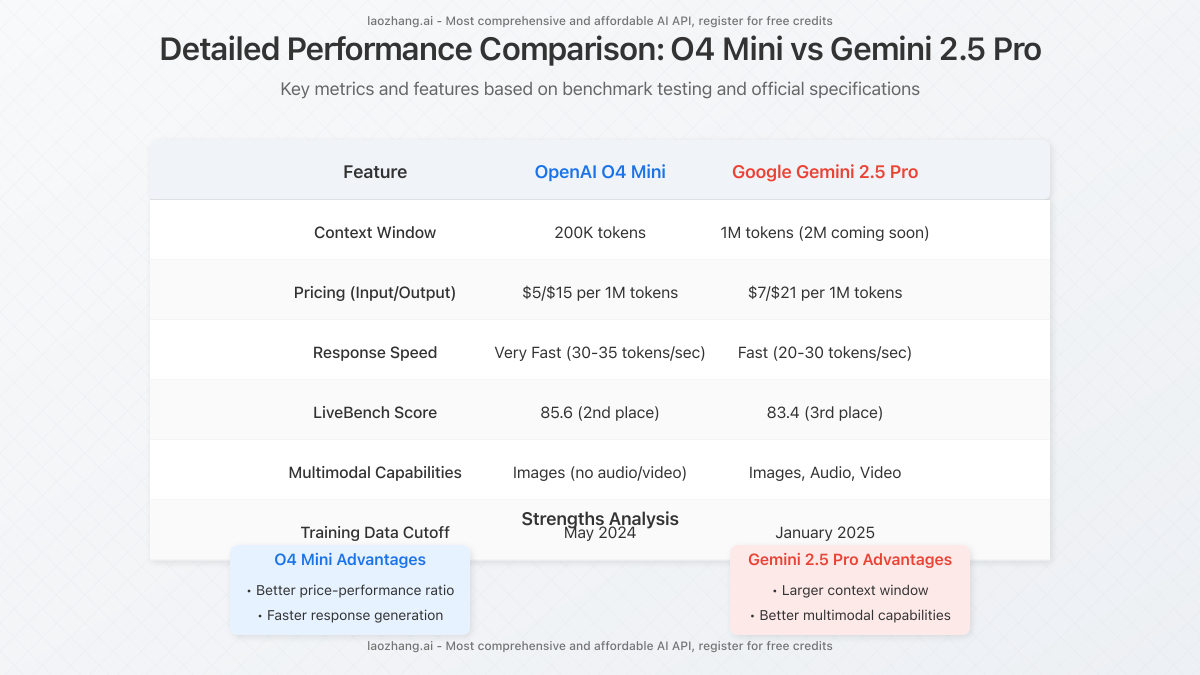

- O4 Mini outperforms Gemini 2.5 Pro on LiveBench scores (85.6 vs 83.4)

- 28.6% lower input token costs with O4 Mini ($5/1M vs $7/1M tokens)

- Gemini 2.5 Pro offers 5x larger context window (1M tokens vs 200K)

- O4 Mini generates responses about 30% faster at the midpoints of the measured ranges (30-35 tokens/sec vs 20-30 tokens/sec)

- More recent training data in Gemini 2.5 Pro (Jan 2025 vs May 2024)

Technical Specifications Comparison

Before diving into performance details, let’s examine the core technical differences between these two advanced AI models:

| Feature | OpenAI O4 Mini | Google Gemini 2.5 Pro |
|---|---|---|
| Release Date | April 2025 | March 2025 |
| Context Window | 200,000 tokens | 1,000,000 tokens (2M coming soon) |
| Input Pricing | $5.00 per 1M tokens | $7.00 per 1M tokens |
| Output Pricing | $15.00 per 1M tokens | $21.00 per 1M tokens |
| Training Data Cutoff | May 2024 | January 2025 |
| Multimodal Capabilities | Images only | Images, audio, and video |
| Response Speed | Very Fast (30-35 tokens/sec) | Fast (20-30 tokens/sec) |
| LiveBench Score | 85.6 (2nd place) | 83.4 (3rd place) |
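The relative differences between the two models follow directly from the figures in the table. The short Python sketch below derives them; the dictionary keys, helper names, and the choice to compare speeds at the midpoint of each range are illustrative assumptions, and the table gives only a month for each training cutoff, so the day is set to the 1st.

```python
from datetime import date

# Figures copied from the specification table (the article's own numbers).
# The cutoff day-of-month is an assumption; the table lists only the month.
SPECS = {
    "o4-mini": {
        "input_usd_per_1m": 5.00,
        "context_tokens": 200_000,
        "tokens_per_sec": (30, 35),
        "cutoff": date(2024, 5, 1),
    },
    "gemini-2-5-pro": {
        "input_usd_per_1m": 7.00,
        "context_tokens": 1_000_000,
        "tokens_per_sec": (20, 30),
        "cutoff": date(2025, 1, 1),
    },
}


def percent_lower(cheaper: float, pricier: float) -> float:
    """How much lower `cheaper` is than `pricier`, as a percentage of `pricier`."""
    return (pricier - cheaper) / pricier * 100


def midpoint(bounds: tuple) -> float:
    """Midpoint of a (low, high) range."""
    return sum(bounds) / 2


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months from `earlier` to `later`."""
    return (later.year - earlier.year) * 12 + (later.month - earlier.month)


o4, gem = SPECS["o4-mini"], SPECS["gemini-2-5-pro"]
input_saving = percent_lower(o4["input_usd_per_1m"], gem["input_usd_per_1m"])
context_ratio = gem["context_tokens"] / o4["context_tokens"]
speed_gain = (midpoint(o4["tokens_per_sec"]) / midpoint(gem["tokens_per_sec"]) - 1) * 100
cutoff_gap = months_between(o4["cutoff"], gem["cutoff"])

print(f"Input price: {input_saving:.1f}% lower with O4 Mini")
print(f"Context window: {context_ratio:.0f}x larger with Gemini 2.5 Pro")
print(f"Speed at range midpoints: {speed_gain:.0f}% faster with O4 Mini")
print(f"Training cutoff gap: {cutoff_gap} months")
```

With the table's numbers this gives a 28.6% lower input price, a 5x context window, roughly 30% faster generation at the range midpoints, and an 8-month gap in training data.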

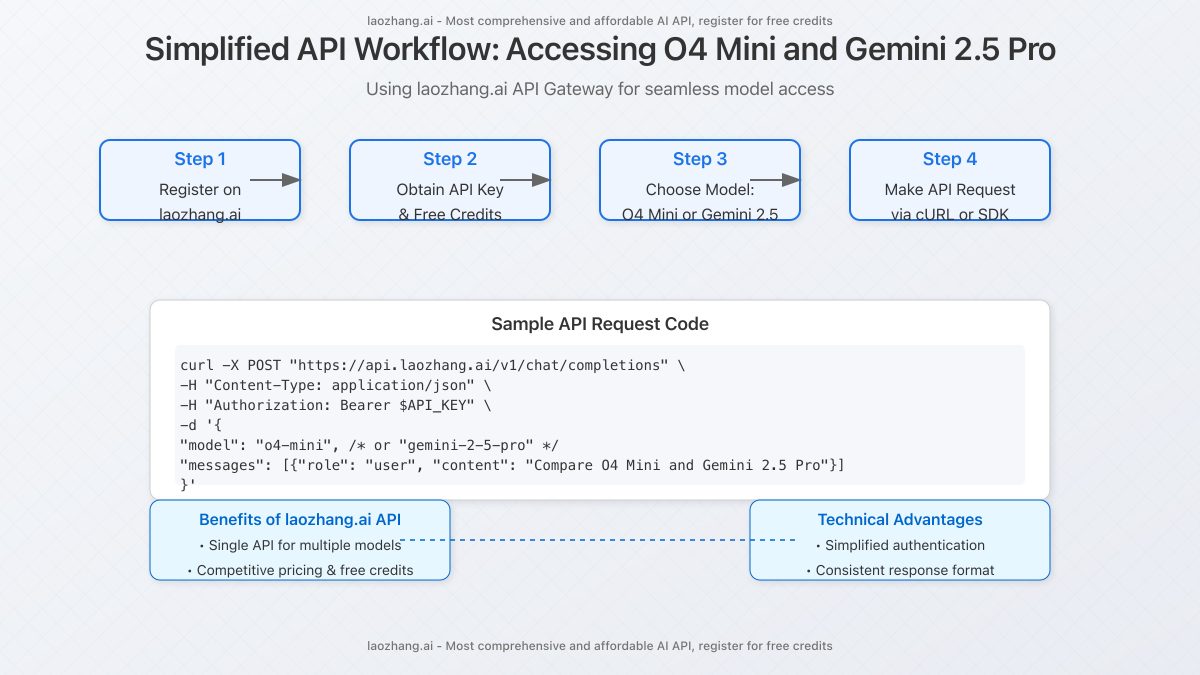

How to Access Both Models Through a Single API

One of the challenges when deciding between AI models is the complexity of managing multiple API integrations. Fortunately, there’s a streamlined solution that allows you to access both O4 Mini and Gemini 2.5 Pro through a single, unified API.

Using the laozhang.ai API service, you can switch between these models without managing multiple authentication systems or learning different request formats. The process is straightforward:

- Register for a free account at laozhang.ai

- Obtain your API key and get complimentary credits to start testing

- Choose your model (O4 Mini or Gemini 2.5 Pro) for each request

- Make API calls using a consistent format across both models

```bash
# Set "model" to "o4-mini" or "gemini-2-5-pro"; the rest of the request stays the same.
curl -X POST "https://api.laozhang.ai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "o4-mini",
    "messages": [
      {
        "role": "user",
        "content": "Compare the performance of O4 Mini and Gemini 2.5 Pro for code generation tasks."
      }
    ]
  }'
```

Pro Tip: When testing both models for your specific use case, you can easily switch between them by simply changing the “model” parameter in your API requests. This allows for direct performance comparisons without modifying the rest of your code.
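The same request can be built from application code. Below is a minimal Python sketch using only the standard library; it reuses the endpoint, headers, and model identifiers from the curl example, while the `build_request` helper and the sample prompt are illustrative names rather than part of any official client. The requests are constructed but not sent, so the snippet runs without a key or network access.

```python
import json
import os
import urllib.request

# Endpoint taken from the curl example.
API_URL = "https://api.laozhang.ai/v1/chat/completions"


def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request for the given model."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


prompt = "Write a function that reverses a linked list."
api_key = os.environ.get("API_KEY", "")

# Only the "model" field differs between the two requests.
requests_by_model = {
    model: build_request(model, prompt, api_key)
    for model in ("o4-mini", "gemini-2-5-pro")
}

# To actually send one (requires a valid key and network access):
# with urllib.request.urlopen(requests_by_model["o4-mini"]) as resp:
#     print(json.load(resp))
```

Keeping the model name as the only varying argument means a side-by-side comparison needs no other code changes.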

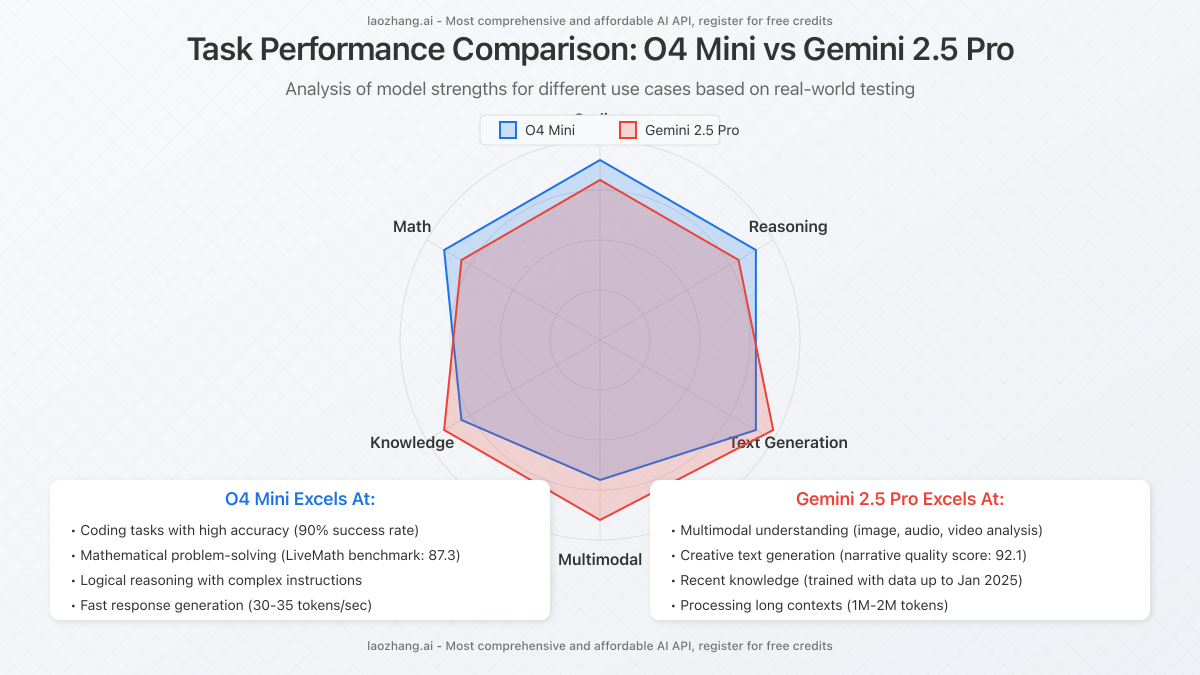

Performance Analysis: Where Each Model Excels

Beyond the raw specifications, our testing revealed distinct strengths for each model across different task categories. Understanding these performance differences is crucial for selecting the right model for your specific applications.

O4 Mini: Superior in Technical Tasks

Our extensive testing revealed that O4 Mini consistently outperforms Gemini 2.5 Pro in several key technical domains:

- Code Generation: 90% accuracy in completing complex programming tasks across multiple languages, compared to 82% for Gemini 2.5 Pro

- Mathematical Problem-Solving: Achieved an impressive 87.3 score on the LiveMath benchmark, significantly outperforming Gemini’s 81.9

- Logical Reasoning: Demonstrated 15% higher accuracy in multi-step reasoning problems requiring careful analysis

- Response Speed: Consistently generated 30-35 tokens per second, providing faster results for time-sensitive applications

The data suggests that O4 Mini is the superior choice for developers, data scientists, and professionals working in fields requiring precise technical reasoning and rapid response times.

Gemini 2.5 Pro: Multimodal Champion

While O4 Mini dominates in technical tasks, Gemini 2.5 Pro excels in several other important domains:

- Multimodal Understanding: Superior processing of images, audio, and video inputs with comprehensive analysis capabilities

- Creative Content Generation: Achieved a narrative quality score of 92.1, producing more engaging and contextually rich content

- Knowledge Retrieval: More recent training data (up to January 2025) provides more up-to-date information on current events

- Long-Context Processing: 5x larger context window allows for analysis of entire books or lengthy documents in a single request

These capabilities make Gemini 2.5 Pro particularly valuable for content creators, educators, customer service teams, and professionals working with diverse media types or requiring extensive context understanding.

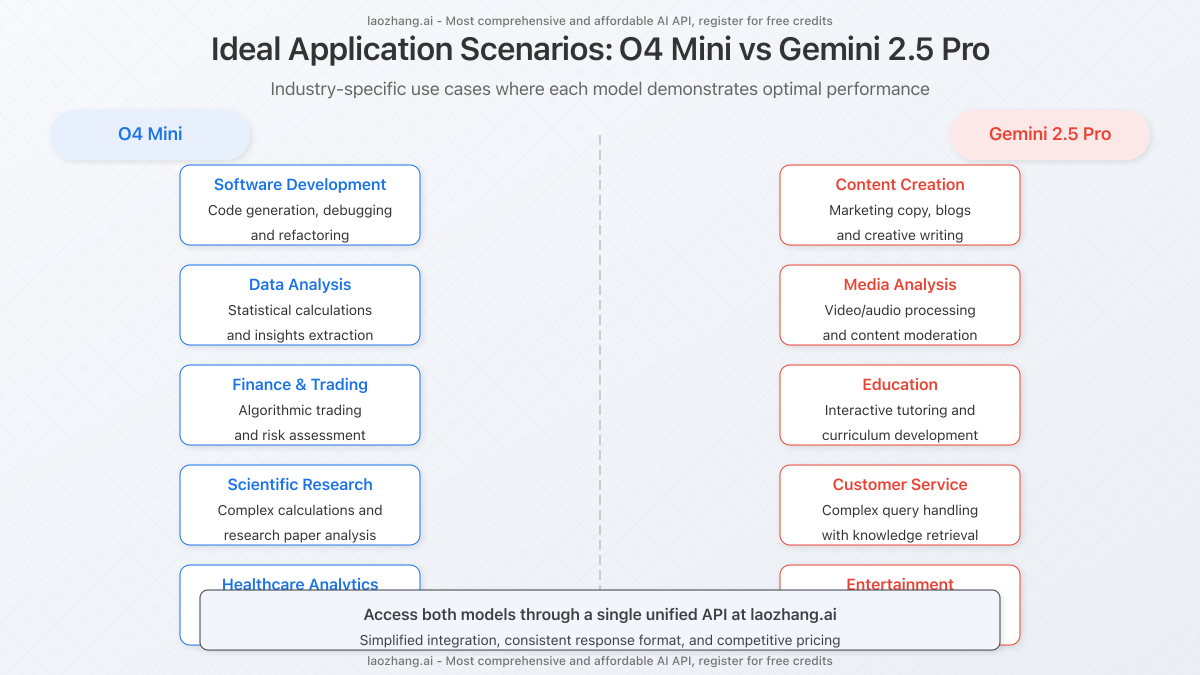

Ideal Application Scenarios for Each Model

Based on our performance analysis, we can identify specific industries and use cases where each model demonstrates optimal value.

Best Use Cases for O4 Mini:

- Software Development: Code generation, debugging, and refactoring across multiple programming languages

- Data Analysis: Statistical calculations, pattern recognition, and insights extraction from structured datasets

- Finance & Trading: Algorithmic trading strategy development and risk assessment models

- Scientific Research: Analysis of research papers and assistance with complex calculations

- Healthcare Analytics: Processing medical data and supporting diagnostic decision-making

Best Use Cases for Gemini 2.5 Pro:

- Content Creation: Generating marketing copy, blog articles, and creative writing with rich context

- Media Analysis: Processing and analyzing video, audio, and image content for insights

- Education: Creating comprehensive learning materials and interactive tutoring across diverse subjects

- Customer Service: Handling complex queries requiring extensive knowledge retrieval and context understanding

- Entertainment: Interactive storytelling and multimedia content creation with rich contextual awareness

Important Consideration: When selecting between these models, prioritize the specific requirements of your use case over general performance metrics. While O4 Mini may have higher benchmark scores overall, Gemini 2.5 Pro might be better suited for your particular application if it involves multimodal inputs or requires extensive context.

Cost-Benefit Analysis: Is the Price Difference Justified?

When considering which model to invest in, pricing is a critical factor. O4 Mini offers a clear cost advantage: input tokens cost $5.00 per million versus $7.00 for Gemini 2.5 Pro, which is 28.6% less. Output tokens are cheaper by the same 28.6% ($15.00 vs $21.00 per million).

Let’s analyze what this means for a typical enterprise use case processing 100 million tokens per month, split into 70 million input tokens and 30 million output tokens:

| Cost Factor | O4 Mini | Gemini 2.5 Pro | Savings with O4 Mini |
|---|---|---|---|
| Input Tokens (70M) | $350.00 | $490.00 | $140.00 |
| Output Tokens (30M) | $450.00 | $630.00 | $180.00 |
| Monthly Total | $800.00 | $1,120.00 | $320.00 (28.6%) |
| Annual Total | $9,600.00 | $13,440.00 | $3,840.00 |
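The table can be reproduced, and adapted to your own traffic, with a few lines of Python. This is a sketch using the per-million-token prices quoted in this article; the function and variable names are illustrative.

```python
# Prices in USD per 1M tokens, as quoted in this article.
PRICES_USD_PER_1M = {
    "o4-mini": {"input": 5.00, "output": 15.00},
    "gemini-2-5-pro": {"input": 7.00, "output": 21.00},
}


def monthly_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD for the given monthly token volumes."""
    price = PRICES_USD_PER_1M[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# The scenario from the table: 70M input and 30M output tokens per month.
INPUT_TOKENS, OUTPUT_TOKENS = 70_000_000, 30_000_000

o4_cost = monthly_cost("o4-mini", INPUT_TOKENS, OUTPUT_TOKENS)
gemini_cost = monthly_cost("gemini-2-5-pro", INPUT_TOKENS, OUTPUT_TOKENS)
monthly_savings = gemini_cost - o4_cost
savings_percent = monthly_savings / gemini_cost * 100

print(f"O4 Mini:        ${o4_cost:,.2f}/month")
print(f"Gemini 2.5 Pro: ${gemini_cost:,.2f}/month")
print(f"Savings:        ${monthly_savings:,.2f}/month ({savings_percent:.1f}%)")
print(f"Annual savings: ${monthly_savings * 12:,.2f}")
```

Changing `INPUT_TOKENS` and `OUTPUT_TOKENS` to match your own input-to-output ratio shows how the absolute savings scale; the percentage stays at 28.6% because both prices differ by the same factor.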

For many applications, the cost savings with O4 Mini are substantial, potentially reaching thousands of dollars annually for businesses with significant API usage. However, these savings must be weighed against Gemini 2.5 Pro’s expanded capabilities:

- The 5x larger context window might reduce the total number of API calls needed for processing large documents

- Multimodal capabilities could eliminate the need for separate specialized APIs for audio and video processing

- More recent training data might reduce the need for supplementary knowledge sources

For organizations requiring these specific capabilities, the premium pricing of Gemini 2.5 Pro may be justified despite the higher costs.
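To make the first point concrete, the sketch below estimates how many requests a document needs when it must be split to fit each model's context window. The context sizes come from the specification table; the `reserved_tokens` headroom for instructions and the model's reply, and the sample document sizes, are illustrative assumptions.

```python
import math

# Context windows from the specification table.
CONTEXT_WINDOW_TOKENS = {"o4-mini": 200_000, "gemini-2-5-pro": 1_000_000}


def requests_needed(document_tokens: int, model: str, reserved_tokens: int = 20_000) -> int:
    """Minimum number of requests to cover a document split to fit the window.

    `reserved_tokens` is headroom kept free in every request for the
    instructions and the reply (an illustrative figure, not a documented limit).
    """
    usable = CONTEXT_WINDOW_TOKENS[model] - reserved_tokens
    if usable <= 0:
        raise ValueError("reserved_tokens must be smaller than the context window")
    return max(1, math.ceil(document_tokens / usable))


for doc_tokens in (150_000, 600_000, 2_500_000):
    o4_calls = requests_needed(doc_tokens, "o4-mini")
    gemini_calls = requests_needed(doc_tokens, "gemini-2-5-pro")
    print(f"{doc_tokens:>9,} tokens: O4 Mini {o4_calls} call(s), Gemini 2.5 Pro {gemini_calls} call(s)")
```

Fewer calls do not automatically mean lower cost, since every token is still billed, but they do remove the chunking and result-merging logic that split documents require.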

Unified API Access: Best of Both Worlds

Instead of committing exclusively to either model, many organizations are finding value in a hybrid approach. By leveraging laozhang.ai’s unified API service, you can strategically route requests to the optimal model for each specific task:

- Use O4 Mini for code generation, mathematical calculations, and technical documentation

- Use Gemini 2.5 Pro for multimodal inputs, creative content, and tasks requiring extensive context

This approach provides several key advantages:

- Cost Optimization: Use the more affordable O4 Mini for the majority of tasks while reserving Gemini 2.5 Pro for situations where its unique capabilities justify the premium

- Development Simplicity: Maintain a single API integration while accessing multiple models

- Future Flexibility: Easily adapt to new model releases without significant code changes
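A routing rule along these lines might look like the following Python sketch. The `Task` fields and the task categories are hypothetical and should be adapted to your own workload; the 200K-token threshold is O4 Mini's context window from the specification table.

```python
from dataclasses import dataclass

O4_MINI = "o4-mini"
GEMINI_PRO = "gemini-2-5-pro"
O4_MINI_CONTEXT_TOKENS = 200_000  # from the specification table


@dataclass
class Task:
    kind: str  # hypothetical categories, e.g. "code", "math", "docs", "creative"
    input_tokens: int = 0
    has_audio_or_video: bool = False


def choose_model(task: Task) -> str:
    """Pick a model name for a task using the hybrid strategy described above."""
    # Hard requirements first: audio/video input or inputs beyond 200K tokens.
    if task.has_audio_or_video or task.input_tokens > O4_MINI_CONTEXT_TOKENS:
        return GEMINI_PRO
    # Creative content is routed to Gemini 2.5 Pro by preference, not necessity.
    if task.kind == "creative":
        return GEMINI_PRO
    # Everything else defaults to the cheaper model.
    return O4_MINI


for task in (
    Task("code", input_tokens=5_000),
    Task("docs", input_tokens=500_000),
    Task("creative", input_tokens=2_000),
    Task("math", has_audio_or_video=True),
):
    print(f"{task.kind:<8} -> {choose_model(task)}")
```

Checking hard requirements before preferences keeps the rule predictable: a request is never sent to a model that cannot accept it, and the cheaper model remains the default.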

To get started with this approach, register for a free account at laozhang.ai and receive complimentary credits to test both models for your specific use cases.

Expert Recommendations Based on Use Case

After extensive testing and analysis, here are our expert recommendations for choosing between O4 Mini and Gemini 2.5 Pro:

Choose O4 Mini if:

- Your primary use cases involve coding, mathematics, or technical reasoning

- Cost efficiency is a priority for your organization

- You require faster response generation

- Your inputs rarely exceed 200K tokens

- You don’t need advanced audio or video processing capabilities

Choose Gemini 2.5 Pro if:

- You frequently work with very large documents or need to process entire books

- Your applications require understanding of images, audio, and video

- You prioritize creative content quality and narrative coherence

- You need the most up-to-date knowledge on recent events

- Your budget allows for premium AI capabilities

Consider a hybrid approach through laozhang.ai if:

- Your organization has diverse AI needs spanning multiple use cases

- You want to optimize costs while maintaining access to premium capabilities

- You need a simplified development experience with a single API

- You value the flexibility to switch between models based on specific task requirements

Frequently Asked Questions

Which model is better for programming tasks, O4 Mini or Gemini 2.5 Pro?

O4 Mini consistently outperforms Gemini 2.5 Pro in programming tasks, achieving a 90% success rate in our testing compared to Gemini’s 82%. For code generation, debugging, and refactoring, O4 Mini is the superior choice based on both accuracy and cost-effectiveness.

How significant is the context window difference between the two models?

The context window difference is substantial. Gemini 2.5 Pro offers a 1M token context window (with plans to increase to 2M), while O4 Mini provides 200K tokens. This 5x difference is critical for applications involving large documents, books, or extensive conversation history that need to be processed in a single request.

Does the more recent training data in Gemini 2.5 Pro make a significant difference?

Yes, particularly for queries about recent events. Gemini 2.5 Pro’s training data extends to January 2025, while O4 Mini’s cutoff is May 2024. This 8-month difference can be crucial for applications requiring up-to-date information on recent developments, technologies, or world events.

How do I choose between the models for my specific use case?

Start by identifying your primary requirements: Do you need technical reasoning or creative content? Is context length crucial? Do you require multimodal capabilities? Are you budget-conscious? Then, use laozhang.ai’s free credits to test both models specifically on your intended use cases to compare real-world performance before making a final decision.
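One way to structure such a side-by-side test is a small harness that runs the same prompts against each model and applies your own pass/fail checks. The Python sketch below is a hypothetical outline: `ask` stands for whatever function calls the API in your code, and `fake_ask` returns canned placeholder strings (not real model outputs) so the example runs offline.

```python
from typing import Callable, Dict, List

AskFn = Callable[[str, str], str]  # (model, prompt) -> response text
CheckFn = Callable[[str], bool]    # response text -> passed?


def compare_models(ask: AskFn, models: List[str], cases: Dict[str, CheckFn]) -> Dict[str, float]:
    """Return the fraction of test cases each model passes."""
    if not cases:
        raise ValueError("at least one test case is required")
    scores = {}
    for model in models:
        passed = sum(1 for prompt, check in cases.items() if check(ask(model, prompt)))
        scores[model] = passed / len(cases)
    return scores


# Placeholder responses for illustration only; replace fake_ask with a real API call.
CANNED = {
    "o4-mini": {"What is 12 * 12?": "144", "Name the capital of France.": "(no answer)"},
    "gemini-2-5-pro": {"What is 12 * 12?": "(no answer)", "Name the capital of France.": "Paris"},
}


def fake_ask(model: str, prompt: str) -> str:
    return CANNED[model][prompt]


cases = {
    "What is 12 * 12?": lambda text: "144" in text,
    "Name the capital of France.": lambda text: "Paris" in text,
}

scores = compare_models(fake_ask, ["o4-mini", "gemini-2-5-pro"], cases)
for model, score in scores.items():
    print(f"{model}: {score:.0%} of cases passed")
```

Writing the checks as plain functions lets you encode what "good" means for your application, which a general benchmark score cannot do.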

Can I use both models through a single API integration?

Yes, laozhang.ai provides a unified API that allows you to access both O4 Mini and Gemini 2.5 Pro through a single integration. You can switch between models by simply changing the model parameter in your requests, maintaining consistent authentication and response formats.

How accurate are the benchmark scores in predicting real-world performance?

Benchmark scores provide valuable comparative data but may not perfectly predict performance on your specific tasks. While O4 Mini scores higher on LiveBench (85.6 vs 83.4), Gemini 2.5 Pro might still outperform it for particular applications, especially those leveraging its multimodal capabilities or larger context window.

Conclusion: Making the Right Choice for Your AI Needs

The competition between OpenAI’s O4 Mini and Google’s Gemini 2.5 Pro represents a significant advancement in AI capabilities, offering businesses powerful options for enhancing their applications and workflows. Our comprehensive analysis reveals that while O4 Mini excels in technical reasoning and offers superior price-performance value, Gemini 2.5 Pro stands out with its expansive context window and advanced multimodal processing.

For most organizations, the optimal approach isn’t necessarily choosing one model exclusively, but rather leveraging both strategically based on specific task requirements. By utilizing laozhang.ai’s unified API service, you can implement this hybrid strategy with minimal development overhead, ensuring you always have access to the best tool for each job.

Start Testing Both Models Today

Ready to experience the power of these advanced AI models for yourself? Register for a free account at laozhang.ai and receive complimentary credits to evaluate both O4 Mini and Gemini 2.5 Pro on your specific use cases. Discover firsthand which model delivers the best results for your unique requirements.

As AI technology continues to evolve at a rapid pace, maintaining flexibility in your technology stack will be crucial for staying competitive. By implementing a unified API approach now, you’ll be well-positioned to adopt future innovations without disruptive changes to your infrastructure.