Working with the ChatGPT API is powerful, but it can also produce unexpected errors that disrupt your workflow. Understanding the API's error codes is essential for any developer integrating OpenAI’s technology into their applications. In this guide, we’ll walk through the most common ChatGPT API error codes and practical fixes for each, so you can get back on track quickly.

Understanding ChatGPT API Error Codes: The Complete Reference

When working with the ChatGPT API, errors are inevitable but manageable with the right knowledge. Most developers encounter at least one of these errors during implementation, and knowing how to address them efficiently can save hours of debugging time.

Why Error Handling Matters in AI Development

Proper error handling is critical for several reasons:

- Ensures reliable application performance even when API issues occur

- Provides meaningful feedback to end-users rather than cryptic error messages

- Helps implement retry mechanisms and fallback strategies

- Allows better resource management and conservation of API quotas

The sections below cover the most common ChatGPT API error codes, organized by category for easy reference.

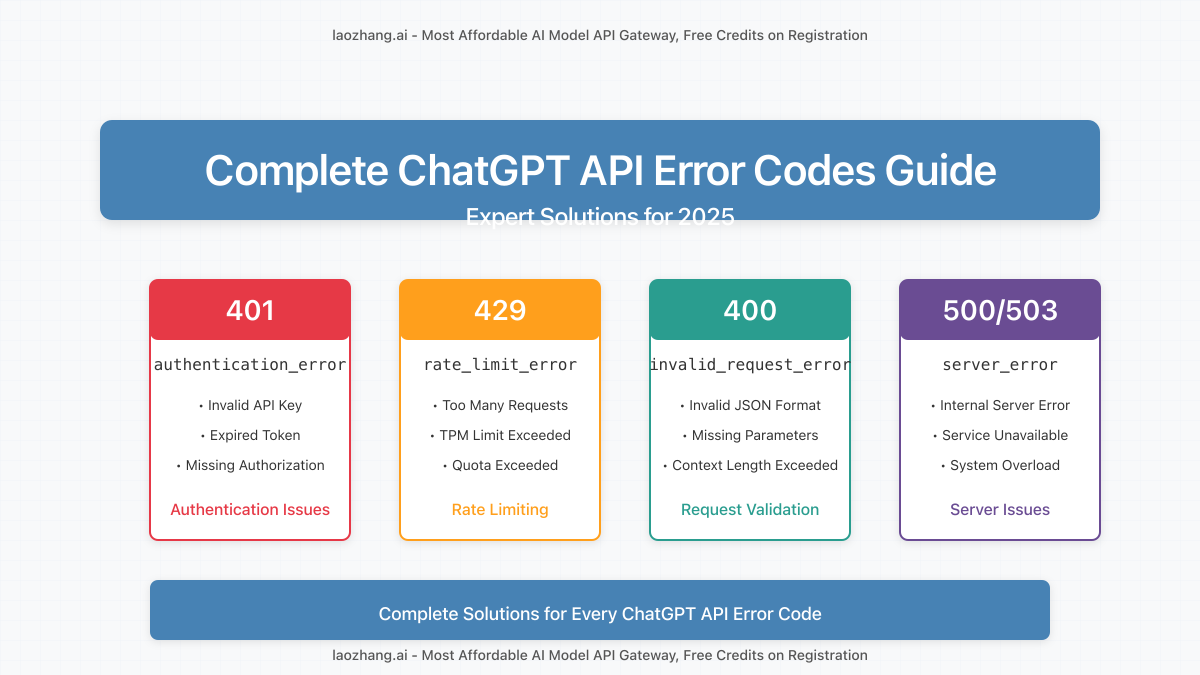

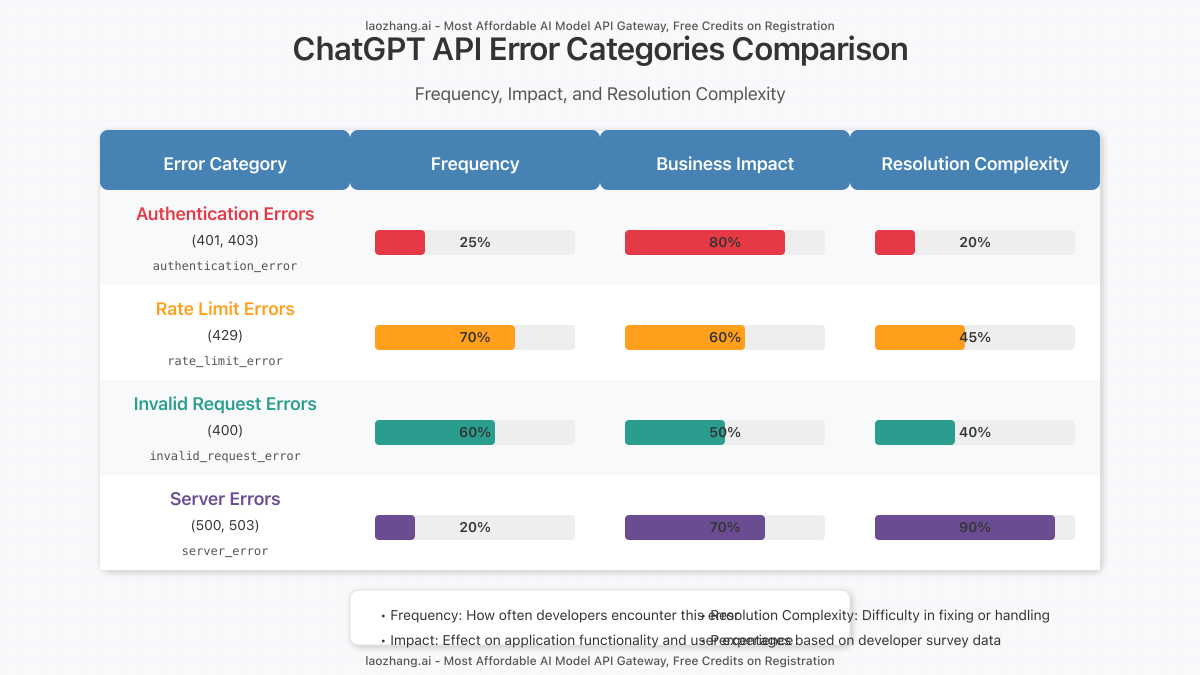

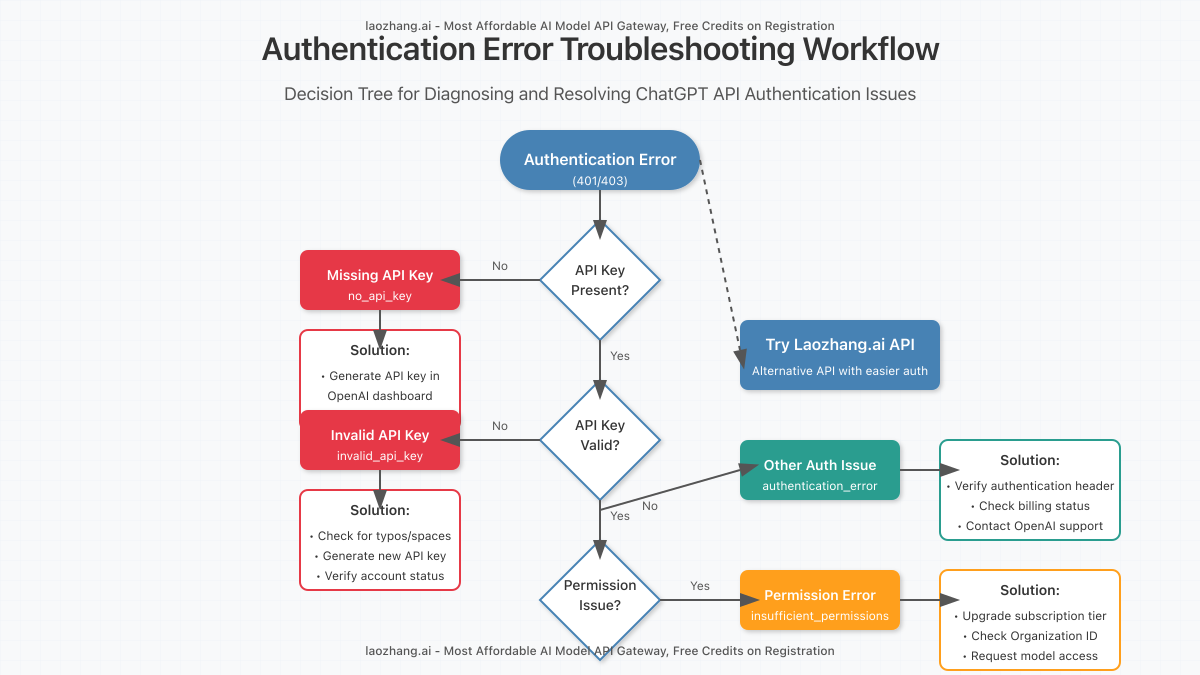

Authentication Errors (401/403)

Authentication errors occur when there are issues with your API key or permissions.

1. Authentication Error (401)

Error Format:

```json
{
  "error": {
    "message": "Incorrect API key provided...",
    "type": "authentication_error",
    "code": "invalid_api_key"
  }
}
```

Common Causes:

- Using an invalid or expired API key

- Incorrectly formatted API key (extra spaces, missing characters)

- Using a revoked API key

- Using a key associated with a suspended account

Solutions:

- Verify your API key in the OpenAI dashboard

- Generate a new API key if necessary

- Check for whitespace or formatting issues in the key

- Ensure your account is in good standing
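Many 401 errors come from how the key is loaded rather than the key itself. The sketch below reads the key from the `OPENAI_API_KEY` environment variable (the name the official SDKs use by default), trims stray whitespace and newlines, and rejects keys with embedded spaces before any request is sent. The function names `load_api_key` and `auth_headers` are illustrative, not part of any SDK.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Read an API key from the environment and catch common formatting mistakes."""
    raw = os.environ.get(env_var)
    if raw is None or not raw.strip():
        # Matches the "no API key provided" case that otherwise surfaces as a 401.
        raise RuntimeError(f"{env_var} is not set")

    key = raw.strip()  # drop leading/trailing spaces and newlines from copy/paste
    if any(ch.isspace() for ch in key):
        # Whitespace inside the key almost always means it was pasted incorrectly.
        raise ValueError(f"{env_var} contains internal whitespace")
    return key


def auth_headers(key: str) -> dict:
    # The API expects the key as a Bearer token in the Authorization header.
    return {"Authorization": f"Bearer {key}"}
```

Failing fast at startup turns a confusing runtime 401 into a clear configuration error.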

2. Permission Error (403)

Error Format:

```json
{
  "error": {
    "message": "You don't have permission to use this model...",
    "type": "permission_error",
    "code": "insufficient_permissions"
  }
}
```

Common Causes:

- Attempting to access models not included in your subscription tier

- Using an API key from a different organization

- Account restrictions or limitations

Solutions:

- Upgrade your subscription to access restricted models

- Verify that you’re using the correct organization ID

- Check your account permissions in the OpenAI dashboard
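If your account belongs to several organizations or projects, the OpenAI API accepts optional `OpenAI-Organization` and `OpenAI-Project` headers to choose which one a request runs under. A minimal sketch of building those headers (the `org_id` and `project_id` values are placeholders you would copy from your dashboard):

```python
from typing import Optional


def build_headers(api_key: str, org_id: Optional[str] = None,
                  project_id: Optional[str] = None) -> dict:
    """Build request headers, optionally pinning the organization and project."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    # Only needed when the key can act for more than one organization or project.
    if org_id:
        headers["OpenAI-Organization"] = org_id
    if project_id:
        headers["OpenAI-Project"] = project_id
    return headers
```

If a 403 disappears once the correct organization is set explicitly, the key was defaulting to an organization without access to that model.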

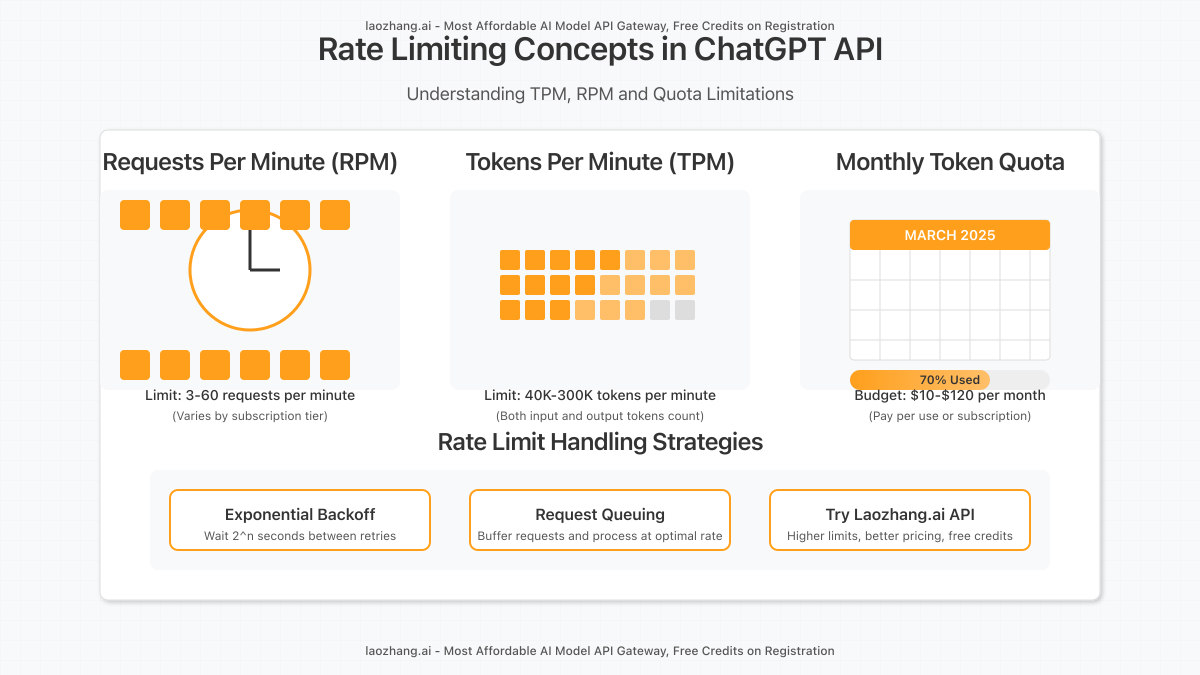

Rate Limit Errors (429)

Rate limit errors occur when you exceed the allowed number of requests in a specific timeframe.

1. Rate Limit Exceeded

Error Format:

```json
{
  "error": {
    "message": "Rate limit reached for requests...",
    "type": "rate_limit_error",
    "code": "rate_limit_exceeded"
  }
}
```

Common Causes:

- Sending too many requests per minute

- Exceeding your tier’s TPM (tokens per minute) limit

- Running many concurrent requests under the same organization (limits apply per organization and project, not per API key)

- Batch operations without proper rate limiting

Solutions:

- Implement exponential backoff retry strategies

- Use request queuing to manage API call frequency

- Upgrade to a higher tier with increased rate limits

- Optimize prompts to reduce token usage

- Don't rely on extra API keys in the same organization to raise your ceiling, since rate limits are shared at the organization and project level
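Exponential backoff with jitter is the first fix to reach for. The sketch below retries a call on a hypothetical `RateLimitError` (a stand-in for whatever your HTTP client or SDK raises on a 429), waiting `base_delay * 2^n` seconds plus up to one second of random jitter, capped at `max_delay`. The `sleep` parameter is injectable so the logic can be tested without real waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for the exception your client raises on HTTP 429."""


def with_backoff(call: Callable[[], T], max_retries: int = 5,
                 base_delay: float = 1.0, max_delay: float = 60.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimitError using exponential backoff with jitter."""
    if max_retries < 0:
        raise ValueError("max_retries must be non-negative")
    attempt = 0
    while True:
        try:
            return call()
        except RateLimitError:
            if attempt >= max_retries:
                raise  # out of retries: surface the 429 to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Random jitter keeps many clients from retrying in lockstep.
            sleep(delay + random.uniform(0, 1))
            attempt += 1
```

Usage: `with_backoff(lambda: send_request(payload))`, where `send_request` is your own (hypothetical) function that performs the API call.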

2. Tokens Exceeded Error

Error Format:

```json
{
  "error": {
    "message": "This request exceeds your current quota...",
    "type": "tokens_exceeded_error",
    "code": "quota_exceeded"
  }
}
```

Common Causes:

- Exceeding your monthly token quota

- Using models with higher token costs than your plan allows

- Providing very long input prompts

Solutions:

- Monitor your token usage through the OpenAI dashboard

- Set up usage alerts to prevent unexpected overages

- Implement token counting in your application

- Consider upgrading your plan if you consistently hit limits

- Optimize prompt design to use fewer tokens
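Token counting and usage alerts can start very simply. The sketch below estimates tokens with the common rule of thumb of roughly four characters per token for English text, and tracks usage against a budget you choose. This is an approximation: for exact counts use a real tokenizer such as OpenAI's `tiktoken` library, or read the `usage` object (`prompt_tokens`, `completion_tokens`, `total_tokens`) returned with each Chat Completions response. `UsageTracker` and its parameters are illustrative names.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough token estimate (~4 characters per token for English text)."""
    return max(1, math.ceil(len(text) / 4))


class UsageTracker:
    """Accumulates estimated token usage and flags when an alert threshold is crossed."""

    def __init__(self, budget_tokens: int, alert_ratio: float = 0.8):
        self.budget = budget_tokens
        self.alert_ratio = alert_ratio  # e.g. 0.8 = alert at 80% of the budget
        self.used = 0

    def record(self, prompt: str, completion: str = "") -> bool:
        """Add one request's usage; return True once usage reaches the alert threshold."""
        self.used += estimate_tokens(prompt)
        if completion:
            self.used += estimate_tokens(completion)
        return self.used >= self.alert_ratio * self.budget

    def would_exceed(self, prompt: str) -> bool:
        """Check before sending whether this prompt would push usage past the budget."""
        return self.used + estimate_tokens(prompt) > self.budget
```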

Invalid Request Errors (400)

Invalid request errors occur when your request format or parameters are incorrect.

1. Invalid Request Format

Error Format:

```json
{
  "error": {
    "message": "Invalid request format...",
    "type": "invalid_request_error",
    "code": "invalid_json"
  }
}
```

Common Causes:

- Malformed JSON in the request body

- Missing required parameters

- Invalid parameter types or values

- Incorrect Content-Type header

Solutions:

- Validate your JSON before sending requests

- Check API documentation for required parameters

- Use proper data types for each parameter

- Ensure you’re setting Content-Type: application/json
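A cheap local check catches most 400s before they reach the API. This sketch verifies the two required Chat Completions fields (`model` and `messages`) and the basic shape of each message, then serializes the body with `allow_nan=False` so values that are not valid JSON (such as `NaN`) fail locally. It is not a full schema validator: for example, it assumes every message carries `content`, which is not true for some tool-calling messages. The function name is illustrative.

```python
import json


def validate_chat_payload(payload: dict) -> str:
    """Basic local validation of a Chat Completions body; returns the JSON string to send."""
    if not isinstance(payload.get("model"), str) or not payload["model"]:
        raise ValueError("'model' must be a non-empty string")
    messages = payload.get("messages")
    if not isinstance(messages, list) or not messages:
        raise ValueError("'messages' must be a non-empty list")
    for i, msg in enumerate(messages):
        if not isinstance(msg, dict) or "role" not in msg or "content" not in msg:
            raise ValueError(f"messages[{i}] needs 'role' and 'content'")
    # allow_nan=False raises ValueError on NaN/Infinity, which strict JSON forbids;
    # unsupported types such as sets or bytes raise TypeError.
    return json.dumps(payload, allow_nan=False)
```

Send the returned string with the `Content-Type: application/json` header.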

2. Context Length Exceeded

Error Format:

```json
{
  "error": {
    "message": "This model's maximum context length is 8192 tokens...",
    "type": "invalid_request_error",
    "code": "context_length_exceeded"
  }
}
```

Common Causes:

- Input + output tokens exceed the model’s maximum context window

- Using a model with insufficient context length for your needs

- Not tracking token counts in conversation history

Solutions:

- Implement token counting to stay within limits

- Use summarization techniques for long conversations

- Consider using models with larger context windows

- Implement conversation pruning strategies

- Split complex requests into multiple smaller ones
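Conversation pruning can be as simple as dropping the oldest turns until the prompt fits. The sketch below keeps all system messages and the most recent message, and removes older messages from the front until the estimated total fits `max_prompt_tokens`. It assumes string `content`, uses the same rough four-characters-per-token estimate as a stand-in for a real tokenizer, and ignores the small per-message overhead the API adds, so leave some headroom. Set `max_prompt_tokens` to the model's context window minus the tokens you reserve for the reply.

```python
import math
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(text: str) -> int:
    # ~4 characters per token rule of thumb; swap in a real tokenizer for accuracy.
    return max(1, math.ceil(len(text) / 4))


def prune_history(messages: List[Message], max_prompt_tokens: int) -> List[Message]:
    """Drop the oldest non-system messages until the estimated prompt fits."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]

    def total(msgs: List[Message]) -> int:
        return sum(estimate_tokens(m["content"]) for m in msgs)

    # Keep at least the latest message; the result can still be too long if
    # that single message exceeds the budget, so callers should check.
    while len(rest) > 1 and total(system) + total(rest) > max_prompt_tokens:
        rest.pop(0)
    # System messages are placed first in the returned list.
    return system + rest
```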

Server Errors (500-level)

Server errors occur when something goes wrong on OpenAI’s end.

1. Service Unavailable (503)

Error Format:

```json
{
  "error": {
    "message": "The server is overloaded or not ready yet...",
    "type": "server_error",
    "code": "service_unavailable"
  }
}
```

Common Causes:

- High traffic on OpenAI’s servers

- Maintenance periods or deployments

- Service outages

Solutions:

- Implement exponential backoff retry logic

- Check OpenAI’s status page for known issues

- Consider implementing a fallback mechanism using alternative models

- Cache responses when possible to reduce API dependencies
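A fallback mechanism can be a simple ordered list of models. In this sketch, `call_model(model, prompt)` is a hypothetical wrapper around your API client and `ServerError` is a stand-in for the exception it raises on a 500 or 503; the function tries each model in turn and only gives up after all of them fail.

```python
from typing import Callable, List, Sequence


class ServerError(Exception):
    """Hypothetical stand-in for the 500/503 errors your HTTP client raises."""


def complete_with_fallback(prompt: str, models: Sequence[str],
                           call_model: Callable[[str, str], str]) -> str:
    """Try each model in order and return the first successful answer."""
    errors: List[str] = []
    for model in models:
        try:
            return call_model(model, prompt)
        except ServerError as exc:
            errors.append(f"{model}: {exc}")  # record why, then try the next model
    raise ServerError("all models failed: " + "; ".join(errors))
```

Combining this with the backoff retry from the rate-limit section (retry a few times, then fall back) usually works better than either on its own.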

2. Internal Server Error (500)

Error Format:

```json
{
  "error": {
    "message": "The server had an error while processing your request...",
    "type": "server_error",
    "code": "internal_server_error"
  }
}
```

Common Causes:

- Backend issues with OpenAI’s infrastructure

- Unexpected errors in request processing

- Model-specific issues

Solutions:

- Retry the request after a reasonable delay

- Contact OpenAI support if errors persist

- Try using a different model

- Check request parameters for potential issues

Alternative API Solution: Laozhang.ai API Gateway

If you’re experiencing persistent issues with the OpenAI API or looking for an alternative with better pricing and reliability, Laozhang.ai offers a comprehensive API gateway service that addresses many common pain points:

- Higher rate limits with better pricing compared to direct OpenAI access

- Improved stability with built-in error handling and retries

- Streamlined authentication process

- Free trial credits upon registration

- Support for all OpenAI models plus additional providers

Getting Started with Laozhang.ai API

Registration is simple. Visit https://api.laozhang.ai/register/?aff_code=JnIT to create your account and receive free starting credits.

Example API Request

Here’s how to make a request using the Laozhang.ai API:

```bash
curl -X POST "https://api.laozhang.ai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "sora_image",
    "stream": false,
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Generate three cats in a garden"
          }
        ]
      }
    ]
  }'
```

For support or questions, contact Laozhang directly via WeChat: ghj930213

Best Practices for Error Handling in Production

Implementing robust error handling strategies ensures your applications remain resilient when interacting with AI APIs.

Implement Graceful Degradation

- Always have fallback content or functionality ready

- Consider caching previous successful responses

- Use simpler models as backup options

- Provide meaningful error messages to users
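Caching and fallback content fit together in a few lines. The sketch below stores successful responses with a time-to-live, serves a cached answer when the API call fails, and otherwise returns a friendly message instead of a raw error. `ResponseCache`, `answer_or_degrade`, `call_api`, and the message text are all illustrative; in production you would catch your client's specific exception types rather than `Exception`.

```python
import time
from typing import Callable, Dict, Optional, Tuple

FALLBACK_MESSAGE = "The assistant is temporarily unavailable. Please try again in a moment."


class ResponseCache:
    """Keeps successful responses for ttl_seconds so they can be served during outages."""

    def __init__(self, ttl_seconds: float = 3600.0,
                 clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable for testing
        self._items: Dict[str, Tuple[float, str]] = {}

    def put(self, key: str, value: str) -> None:
        self._items[key] = (self.clock(), value)

    def get(self, key: str) -> Optional[str]:
        entry = self._items.get(key)
        if entry is None or self.clock() - entry[0] > self.ttl:
            return None  # missing or expired
        return entry[1]


def answer_or_degrade(prompt: str, call_api: Callable[[str], str],
                      cache: ResponseCache) -> str:
    """Call the API; on failure use a cached answer, then a user-friendly message."""
    try:
        answer = call_api(prompt)
    except Exception:  # narrow this to your client's error types in real code
        return cache.get(prompt) or FALLBACK_MESSAGE
    cache.put(prompt, answer)
    return answer
```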

Strategic Retry Mechanisms

Not all errors should be handled the same way:

| Error Type | Retry Strategy | Example Implementation |
|---|---|---|
| Rate Limit (429) | Exponential backoff with jitter | Wait 2^n seconds + random(0-1s) |
| Server Error (500/503) | Immediate retry, then backoff | Try immediately, then increase wait times |
| Authentication (401) | No automatic retry | Notify admin/developer |
| Invalid Request (400) | No retry (fix the request) | Log detailed error information |
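The retry table translates directly into a small policy function. This sketch returns how long to wait before a given retry attempt (0-based), or `None` when the error should not be retried automatically. It follows the table exactly: other status codes, including 403 and 5xx codes the table does not list, are treated as non-retryable, which you may want to adjust.

```python
import random
from typing import Optional


def retry_delay(status: int, attempt: int, base: float = 1.0,
                cap: float = 60.0) -> Optional[float]:
    """Seconds to wait before retry number `attempt`, or None to stop retrying."""
    if status == 429:
        # Rate limit: exponential backoff plus up to 1s of random jitter.
        return min(cap, base * 2 ** attempt) + random.uniform(0, 1)
    if status in (500, 503):
        # Server error: first retry immediately, then back off exponentially.
        return 0.0 if attempt == 0 else min(cap, base * 2 ** attempt)
    # 400/401 and anything else: fix the request or credentials instead.
    return None
```

A caller loops while `retry_delay(...)` returns a number, sleeping for that long between attempts, and logs and raises as soon as it returns `None`.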

Monitoring and Logging

Set up comprehensive monitoring to track API interactions:

- Log all requests, responses, and errors

- Track token usage and costs

- Set up alerts for unusual error rates

- Analyze patterns to identify optimization opportunities
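A lightweight monitor can cover logging, token tracking, and error-rate alerts with the standard `logging` module. The sketch below logs every call (warnings for failures), keeps a rolling window of recent outcomes, and reports when the error rate crosses a threshold once enough samples exist. The logger name, thresholds, and `ApiMonitor` class are illustrative; wire the alert into whatever notification system you use.

```python
import logging
from collections import deque
from typing import Deque, Optional

logger = logging.getLogger("llm_api")  # hypothetical logger name


class ApiMonitor:
    """Logs each API call, tracks token usage, and flags a high recent error rate."""

    def __init__(self, window: int = 100, alert_threshold: float = 0.2,
                 min_samples: int = 20):
        self.recent: Deque[bool] = deque(maxlen=window)  # True marks a failed call
        self.alert_threshold = alert_threshold
        self.min_samples = min_samples  # avoid alerting on the first few calls
        self.total_tokens = 0

    def record(self, status: int, latency_s: float, tokens: int = 0,
               error_code: Optional[str] = None) -> bool:
        """Log one call; return True when the rolling error rate warrants an alert."""
        is_error = status >= 400
        self.recent.append(is_error)
        self.total_tokens += tokens
        logger.log(logging.WARNING if is_error else logging.INFO,
                   "status=%d code=%s latency=%.2fs tokens=%d",
                   status, error_code, latency_s, tokens)
        if len(self.recent) < self.min_samples:
            return False
        return self.error_rate() >= self.alert_threshold

    def error_rate(self) -> float:
        """Fraction of failed calls among the most recent `window` calls."""
        return sum(self.recent) / len(self.recent) if self.recent else 0.0
```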

Complete ChatGPT API Error Code Reference Table

For quick reference, here’s a summary table of common ChatGPT API error codes:

| Status Code | Error Type | Error Code | Description | Recommended Action |
|---|---|---|---|---|
| 400 | invalid_request_error | invalid_json | Malformed JSON in request body | Validate JSON format |
| 400 | invalid_request_error | context_length_exceeded | Input too long for model | Reduce token count |
| 400 | invalid_request_error | parameter_missing | Required parameter not provided | Check API documentation |
| 400 | invalid_request_error | parameter_invalid | Parameter value invalid | Verify parameter values |
| 401 | authentication_error | invalid_api_key | API key invalid or incorrect | Check/regenerate API key |
| 401 | authentication_error | no_api_key | No API key provided | Add API key to request header |
| 403 | permission_error | insufficient_permissions | API key lacks permissions | Upgrade subscription |
| 429 | tokens_exceeded_error | quota_exceeded | Monthly quota exceeded | Purchase additional credits |
| 429 | rate_limit_error | rate_limit_exceeded | Too many requests in timeframe | Implement rate limiting |
| 429 | rate_limit_error | tokens_per_min_exceeded | TPM limit exceeded | Space out requests |
| 500 | server_error | internal_server_error | OpenAI server issue | Retry with backoff |
| 503 | server_error | service_unavailable | Server overloaded/unavailable | Retry after delay |

Conclusion: Mastering ChatGPT API Error Handling

Understanding and properly handling ChatGPT API errors is essential for building robust AI-powered applications. By implementing the strategies outlined in this guide, you can create more resilient systems that gracefully handle API limitations and unexpected issues.

Remember that Laozhang.ai offers an excellent alternative API gateway with better pricing, higher limits, and free starting credits. Visit their registration page to get started today.

Have questions or need personalized assistance with API integration? Reach out to Laozhang via WeChat (ghj930213) for expert support.