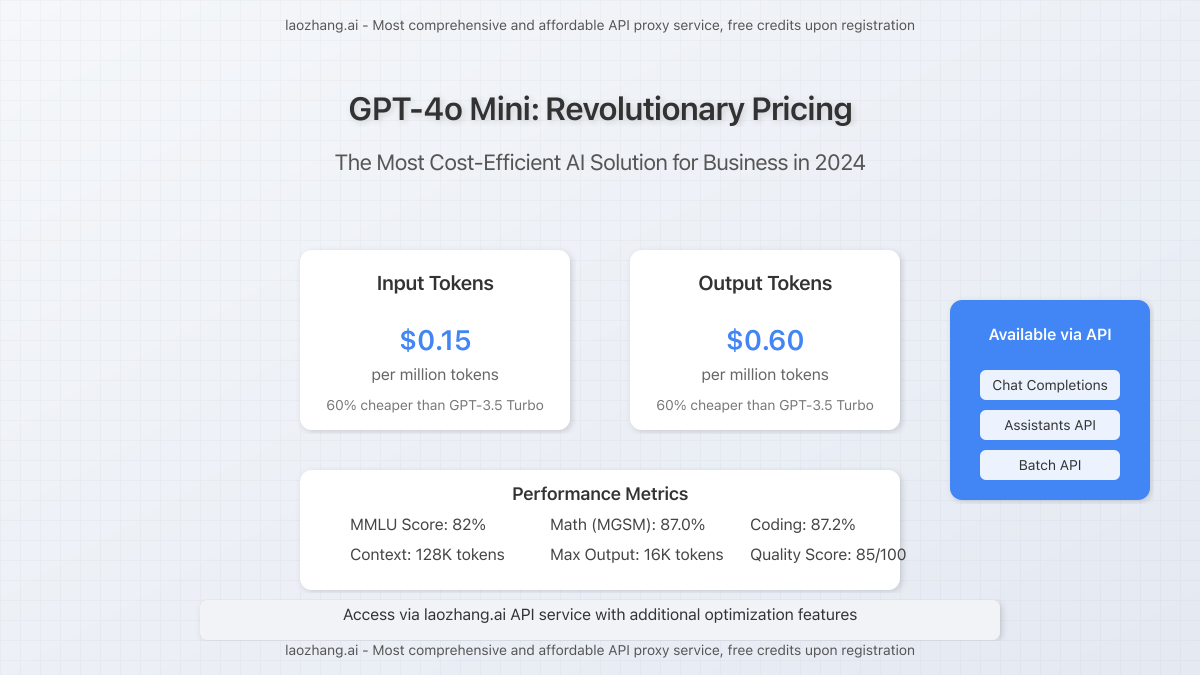

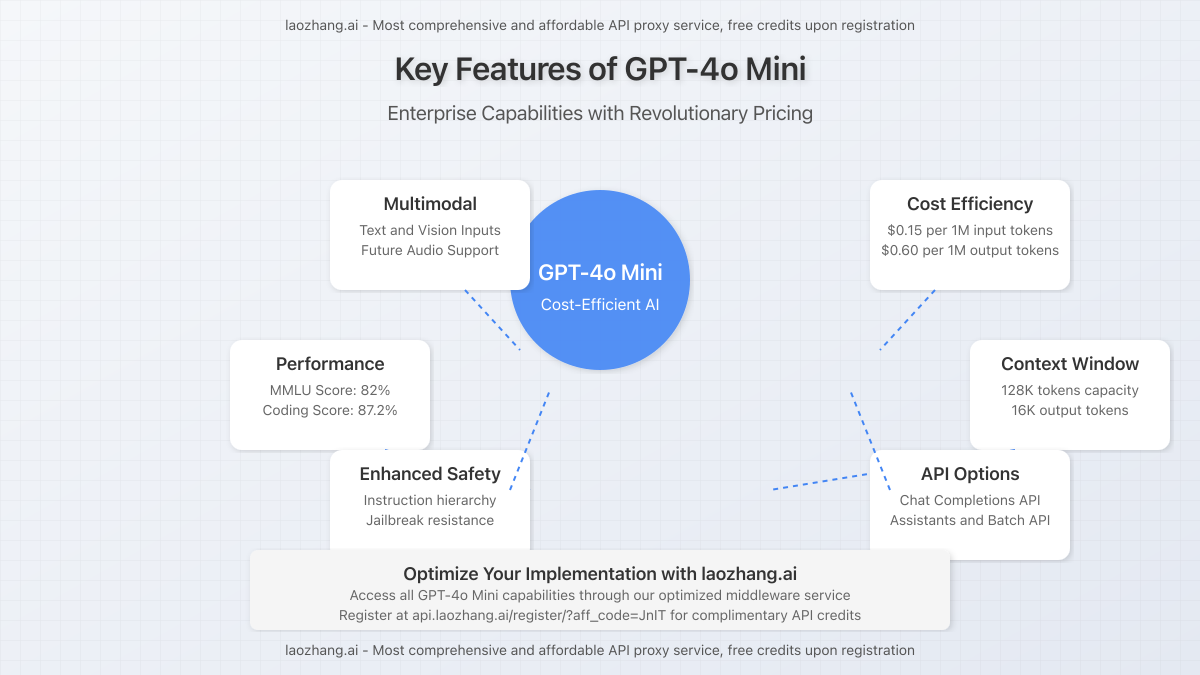

OpenAI’s GPT-4o Mini is priced at $0.15 per million input tokens and $0.60 per million output tokens, making it OpenAI’s lowest-cost model to date. This guide examines how that pricing puts enterprise-grade AI within reach of businesses of all sizes, while the model scores 82% on the MMLU benchmark, ahead of GPT-3.5 Turbo and Gemini Flash.

Understanding GPT-4o Mini Pricing Structure

GPT-4o Mini stands out as OpenAI’s most cost-efficient AI model to date, fundamentally changing the economics of AI deployment:

- Input tokens: $0.15 per million tokens

- Output tokens: $0.60 per million tokens

- Context window: 128K tokens

- Maximum output: 16,384 tokens per request

This pricing model delivers over 60% cost reduction compared to GPT-3.5 Turbo, while offering superior performance across key benchmarks. For businesses managing high-volume AI operations, this represents significant operational savings without performance compromise.

Performance vs. Cost Analysis

Despite its affordable pricing, GPT-4o Mini delivers exceptional performance metrics:

- MMLU benchmark: 82% (compared to 77.9% for Gemini Flash)

- Math reasoning (MGSM): 87.0%

- Coding proficiency (HumanEval): 87.2%

- Quality score: 85/100

This performance-to-cost ratio makes GPT-4o Mini a strong choice for businesses that want to run multiple AI use cases without excessive infrastructure expenses.

API Access and Implementation Guide

GPT-4o Mini is accessible through multiple API endpoints, each optimized for different application needs:

- Chat Completions API: Ideal for conversational applications

- Assistants API: Perfect for creating specialized AI assistants

- Batch API: Optimized for processing large volumes of requests
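As a rough illustration of the Batch API option, the sketch below builds the JSONL input that a batch job reads, one request per line. The field names (`custom_id`, `method`, `url`, `body`) follow the batch input format as commonly documented by OpenAI; treat them as an assumption and check the current API reference before relying on them. The helper name `batch_lines` and the `max_tokens` default are hypothetical.

```python
import json


def batch_lines(prompts, model="gpt-4o-mini", max_tokens=256):
    """Return JSONL text with one Chat Completions request per prompt.

    Assumed line format: custom_id / method / url / body.
    """
    lines = []
    for index, prompt in enumerate(prompts):
        request = {
            "custom_id": f"request-{index}",  # lets you match results to inputs
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "max_tokens": max_tokens,
                "messages": [{"role": "user", "content": prompt}],
            },
        }
        lines.append(json.dumps(request) + "\n")
    return "".join(lines)


jsonl_text = batch_lines(["Summarize ticket 1", "Summarize ticket 2"])
print(jsonl_text, end="")
```

The resulting text would be saved to a file and uploaded as the batch input; the upload and job-creation steps are omitted here.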

For the most cost-effective implementation, businesses can leverage specialized API middleware services that optimize token usage and further reduce costs.

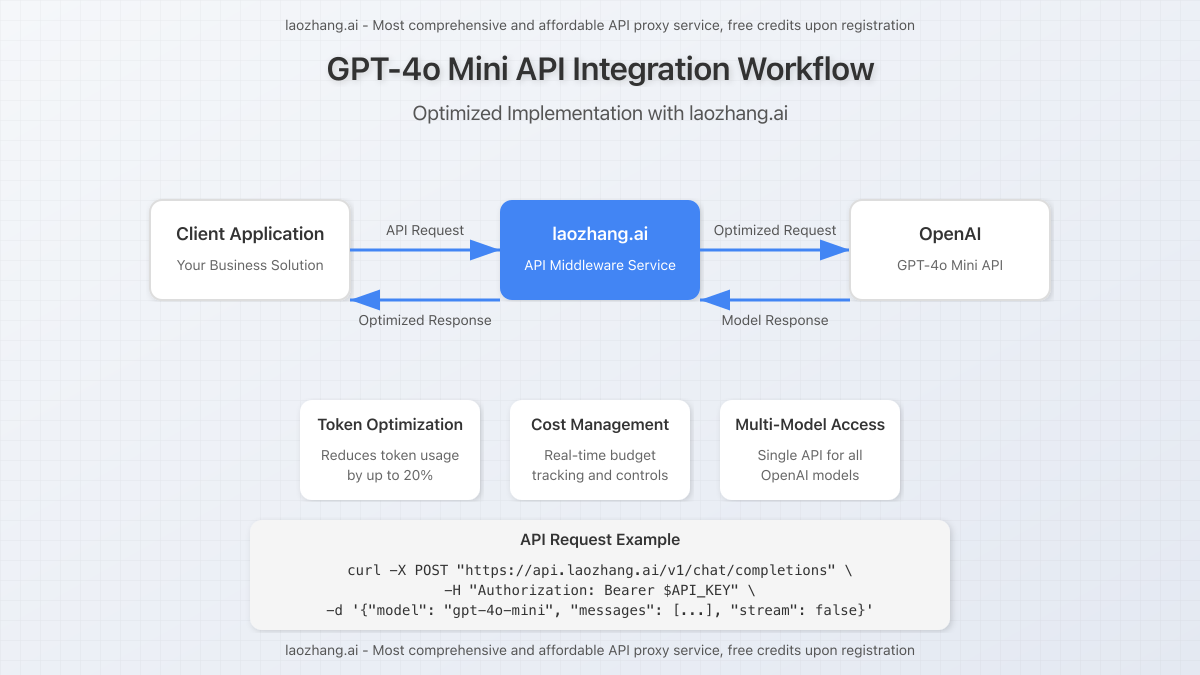

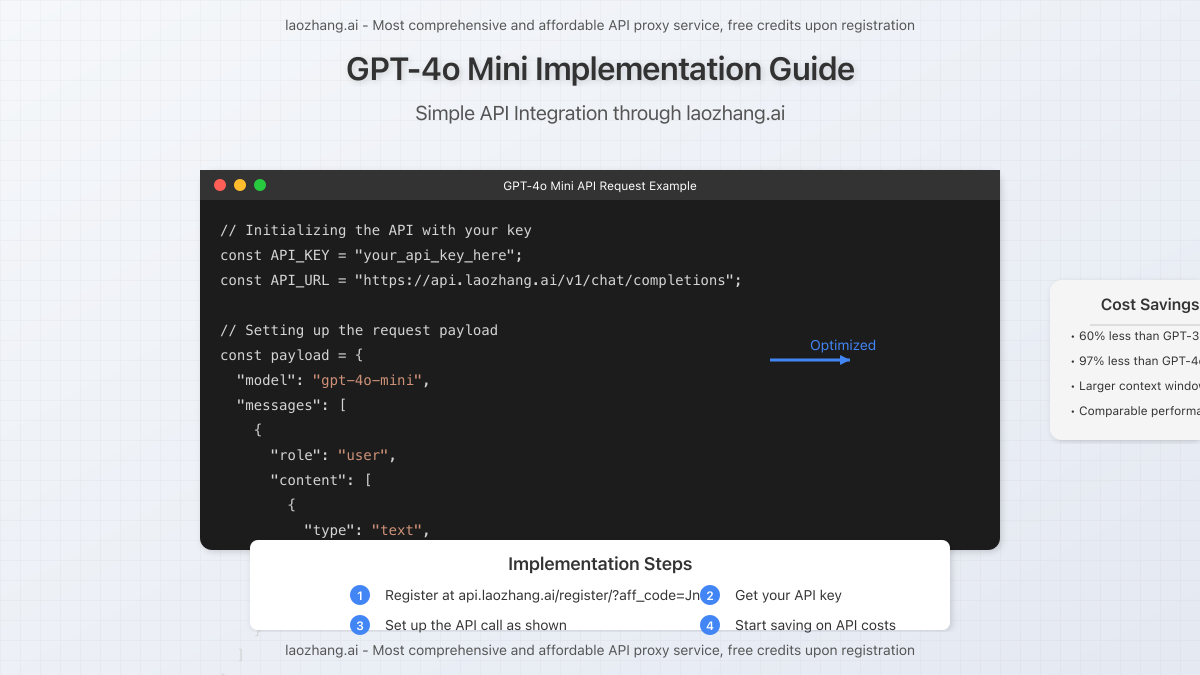

Implementing GPT-4o Mini with laozhang.ai API Service

Developers seeking the most efficient way to integrate GPT-4o Mini can utilize laozhang.ai, a specialized middleware service offering additional cost savings and simplified integration:

```bash
curl -X POST "https://api.laozhang.ai/v1/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $API_KEY" \
  -d '{
    "model": "gpt-4o-mini",
    "stream": false,
    "messages": [
      {
        "role": "user",
        "content": [
          {
            "type": "text",
            "text": "Summarize the benefits of GPT-4o Mini for small businesses"
          }
        ]
      }
    ]
  }'
```

This middleware approach provides several advantages:

- Simplified token management and optimization

- Reduced implementation complexity

- Additional cost savings beyond OpenAI’s base pricing

- Streamlined access to multiple model variants
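The curl request above can also be assembled from Python using only the standard library. This is a minimal sketch: the endpoint and payload mirror the curl example, while the helper names `build_request` and `send` are hypothetical, and the response parsing assumes the service returns the usual OpenAI-style `choices` structure.

```python
import json
import urllib.request

# Endpoint taken from the curl example above.
API_URL = "https://api.laozhang.ai/v1/chat/completions"


def build_request(prompt, api_key, model="gpt-4o-mini"):
    """Build (but do not send) a POST request equivalent to the curl call."""
    payload = {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "user", "content": [{"type": "text", "text": prompt}]}
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request, timeout=30.0):
    """Send the request and return the reply text.

    Assumes an OpenAI-compatible response body (choices[0].message.content).
    """
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

A call such as `send(build_request("Summarize the benefits of GPT-4o Mini for small businesses", api_key))` would perform the request, with `api_key` read from your own configuration.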

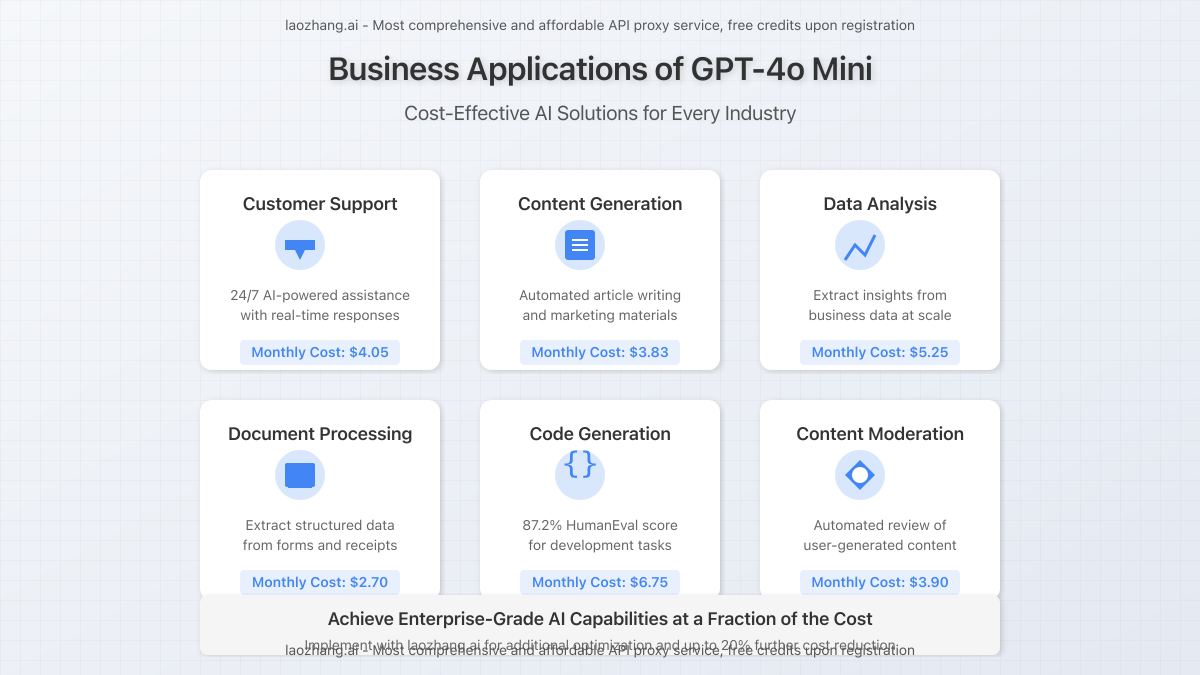

Cost Calculation Examples for Business Applications

Understanding real-world costs helps businesses plan their AI implementation budget effectively. Here are calculations for common business applications:

Customer Support Chatbot (24/7 Operation)

| Metric | Daily Volume | Monthly Cost (30 days) |
|---|---|---|
| Input (customer queries) | 100,000 tokens | $0.45 |
| Output (AI responses) | 200,000 tokens | $3.60 |
| Total (9M tokens per month) | 300,000 tokens | $4.05 |

Content Generation System (Marketing Team)

| Metric | Daily Volume | Monthly Cost (30 days) |
|---|---|---|
| Input (content briefs) | 50,000 tokens | $0.23 |
| Output (generated content) | 200,000 tokens | $3.60 |
| Total (7.5M tokens per month) | 250,000 tokens | $3.83 |

These examples illustrate how GPT-4o Mini makes enterprise-grade AI financially accessible even to small businesses with limited budgets.
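The arithmetic behind both tables can be reproduced with a few lines of Python. This is a sketch using the prices quoted in this guide; the 30-day month is an assumption inferred from the monthly totals, and the function name `monthly_cost` is hypothetical.

```python
# Prices quoted in this guide, in USD per one million tokens.
INPUT_PRICE_PER_M = 0.15
OUTPUT_PRICE_PER_M = 0.60


def monthly_cost(daily_input_tokens, daily_output_tokens, days=30):
    """Return monthly input, output, and total cost in USD.

    days=30 is an assumption; adjust it to match your billing period.
    """
    input_cost = daily_input_tokens * days / 1_000_000 * INPUT_PRICE_PER_M
    output_cost = daily_output_tokens * days / 1_000_000 * OUTPUT_PRICE_PER_M
    return {
        "input": input_cost,
        "output": output_cost,
        "total": input_cost + output_cost,
    }


support = monthly_cost(100_000, 200_000)  # customer support chatbot
content = monthly_cost(50_000, 200_000)   # content generation system
print(f"Support chatbot: ${support['total']:.2f} per month")
print(f"Content system:  ${content['total']:.2f} per month")
```

Swapping in your own daily volumes gives a first-order budget estimate; it ignores retries, system prompts, and any discounts.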

Multimodal Capabilities and Business Applications

GPT-4o Mini currently supports both text and vision inputs through the API, with plans to expand to additional modalities including audio in future updates. This multimodal functionality enables diverse business applications:

- Document processing and analysis: Extract structured data from various document formats

- Visual content moderation: Cost-effective screening of user-generated content

- Enhanced customer support: Process text and image inputs for comprehensive assistance

- Data extraction from forms and receipts: Automate expense management and data entry

With its optimized cost structure, businesses can now implement these advanced capabilities without the prohibitive expenses previously associated with multimodal AI.
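For the receipt and document use cases above, a vision request combines a text part and an image part in one message. The sketch below builds such a message with the image embedded as a base64 data URL; the `image_url` content-part shape follows the Chat Completions format as commonly documented, which should be verified against the current API reference. The helper name `image_message` and the placeholder bytes are hypothetical.

```python
import base64


def image_message(question, image_bytes, mime_type="image/png"):
    """Build a user message containing a question and an inline image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {
                "type": "image_url",
                "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
            },
        ],
    }


# Placeholder bytes stand in for a real image file read from disk.
payload = {
    "model": "gpt-4o-mini",
    "messages": [image_message("What is the total on this receipt?", b"\x89PNG")],
}
print(payload["messages"][0]["content"][1]["image_url"]["url"][:22])
```

Image inputs are billed as tokens too, so the per-request cost depends on image size as well as on the text.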

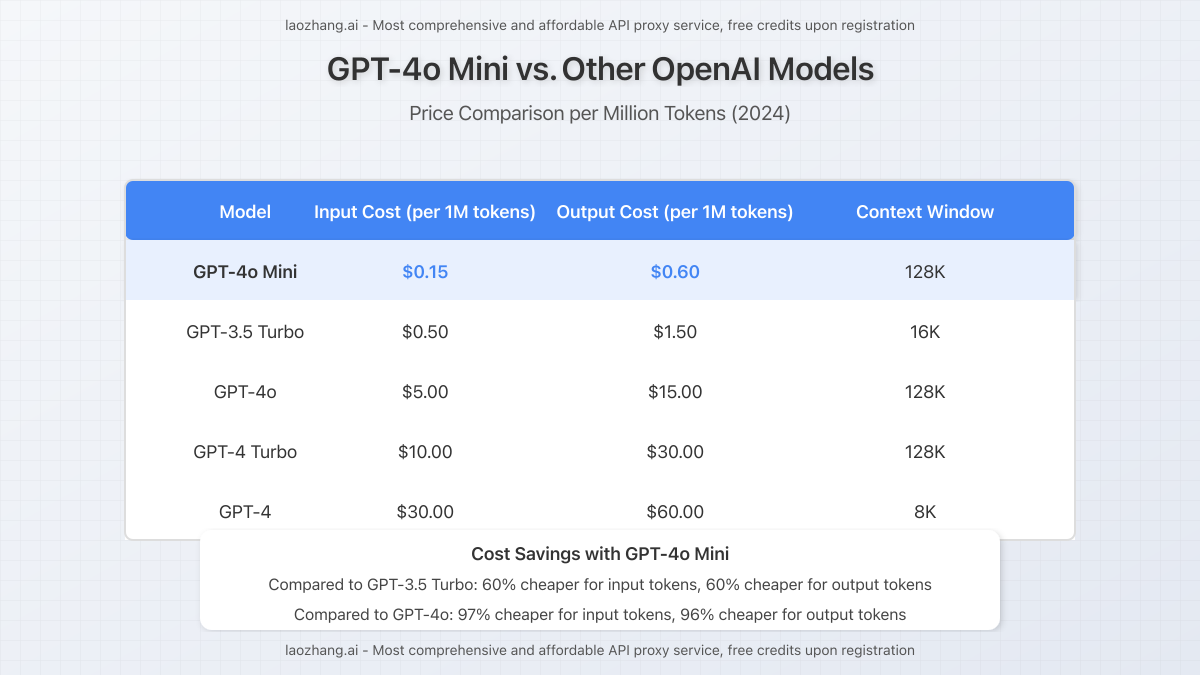

Comparison with Other Leading AI Models

To provide context for GPT-4o Mini’s pricing advantage, here’s how it compares to other leading models:

| Model | Input Cost (per 1M tokens) | Output Cost (per 1M tokens) | Context Window |
|---|---|---|---|
| GPT-4o Mini | $0.15 | $0.60 | 128K |
| GPT-3.5 Turbo | $0.50 | $1.50 | 16K |
| GPT-4o | $5.00 | $15.00 | 128K |
| GPT-4 Turbo | $10.00 | $30.00 | 128K |
| Claude Haiku | $0.25 | $1.25 | 200K |

This comparison shows that GPT-4o Mini has the lowest input and output prices of the models listed: 70% below GPT-3.5 Turbo on input and 60% below on output. That makes it particularly attractive for businesses seeking to scale their AI implementation.
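To see what the table means for a concrete workload, the sketch below prices the same token volume on each model and reports the saving relative to a baseline. The prices are copied from the table; the workload (3M input and 6M output tokens, matching the support chatbot example) and the function names are illustrative assumptions.

```python
# (input, output) prices in USD per 1M tokens, copied from the table above.
PRICES = {
    "GPT-4o Mini": (0.15, 0.60),
    "GPT-3.5 Turbo": (0.50, 1.50),
    "GPT-4o": (5.00, 15.00),
    "GPT-4 Turbo": (10.00, 30.00),
    "Claude Haiku": (0.25, 1.25),
}


def workload_cost(model, input_tokens, output_tokens):
    """Cost in USD of a workload on the given model."""
    in_price, out_price = PRICES[model]
    return (input_tokens * in_price + output_tokens * out_price) / 1_000_000


def savings_vs(baseline, input_tokens, output_tokens, model="GPT-4o Mini"):
    """Fraction of the baseline cost saved by switching to `model`."""
    base = workload_cost(baseline, input_tokens, output_tokens)
    new = workload_cost(model, input_tokens, output_tokens)
    return 1 - new / base


for name in PRICES:
    cost = workload_cost(name, 3_000_000, 6_000_000)
    print(f"{name:<14} ${cost:>8.2f}")
```

Because input and output are priced differently, the saving depends on the input-to-output ratio of your traffic, so it is worth running the comparison with your own volumes.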

Integration Best Practices and Cost Optimization

To maximize the value of GPT-4o Mini’s favorable pricing structure, consider these integration best practices:

Token Optimization Strategies

- Prompt engineering: Craft concise, effective prompts to minimize input tokens

- Response parameters: Set appropriate max_tokens limits to control output costs

- Caching mechanisms: Implement response caching for common queries

- Middleware solutions: Utilize services like laozhang.ai to optimize token usage
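Two of these strategies, capping output with `max_tokens` and caching responses for repeated queries, can be combined in a small wrapper. This is a minimal in-memory sketch, not a production cache: `call_model` is a hypothetical callable that sends a payload to the API and returns the reply text, and there is no expiry or size limit.

```python
import hashlib
import json


class ResponseCache:
    """Cache replies keyed by a hash of the full request payload."""

    def __init__(self, call_model):
        self._call_model = call_model  # hypothetical: payload dict -> reply text
        self._store = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(payload):
        # sort_keys makes the hash independent of dict ordering.
        canonical = json.dumps(payload, sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def complete(self, prompt, max_tokens=200):
        payload = {
            "model": "gpt-4o-mini",
            "max_tokens": max_tokens,  # caps billable output tokens
            "messages": [{"role": "user", "content": prompt.strip()}],
        }
        key = self._key(payload)
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        answer = self._call_model(payload)
        self._store[key] = answer
        return answer


# Stand-in for a real API call, used only to demonstrate the cache.
cache = ResponseCache(lambda payload: "stub reply")
cache.complete("What are your opening hours?")
cache.complete("What are your opening hours?  ")  # same prompt after strip()
print(cache.hits, cache.misses)
```

Exact-match caching only helps when identical prompts recur, as with FAQ-style queries; free-form conversations will mostly miss.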

Implementation Architecture

- Batch processing: Group similar requests to reduce API call overhead

- Hybrid approaches: Use GPT-4o Mini for most tasks, reserving more expensive models only where necessary

- Request throttling: Implement rate limiting to prevent unexpected usage spikes

- Cost monitoring: Implement usage dashboards to track expenses in real-time

These strategies help businesses maintain predictable AI operation costs while maximizing the utility of GPT-4o Mini’s capabilities.
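Cost monitoring can start as simply as accumulating token counts per request and comparing the running total with a budget. The sketch below assumes each API response reports `prompt_tokens` and `completion_tokens` in a usage field, as OpenAI-compatible Chat Completions responses typically do; the class name and budget figure are hypothetical.

```python
class UsageTracker:
    """Accumulate per-request cost and compare it with a monthly budget."""

    def __init__(self, monthly_budget_usd, input_price=0.15, output_price=0.60):
        # Prices are USD per 1M tokens, defaulting to the GPT-4o Mini rates above.
        self.monthly_budget_usd = monthly_budget_usd
        self.input_price = input_price
        self.output_price = output_price
        self.spent_usd = 0.0

    def record(self, prompt_tokens, completion_tokens):
        """Add one request's usage and return its cost in USD."""
        cost = (
            prompt_tokens * self.input_price
            + completion_tokens * self.output_price
        ) / 1_000_000
        self.spent_usd += cost
        return cost

    @property
    def remaining_usd(self):
        return self.monthly_budget_usd - self.spent_usd

    @property
    def over_budget(self):
        return self.spent_usd > self.monthly_budget_usd


tracker = UsageTracker(monthly_budget_usd=5.00)
tracker.record(prompt_tokens=1_200, completion_tokens=800)
print(f"Spent ${tracker.spent_usd:.6f}, remaining ${tracker.remaining_usd:.6f}")
```

In practice the `over_budget` flag would feed an alert or the request throttle rather than a print statement.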

Real-World Success Stories

Several businesses have already realized significant benefits from GPT-4o Mini’s cost-effective pricing structure:

- Ramp: Reported superior extraction of structured data from receipts compared to GPT-3.5 Turbo

- Superhuman: Achieved higher quality email response generation at significantly lower cost

- Small tech startups: Successfully implemented AI features previously considered cost-prohibitive

These case studies demonstrate how GPT-4o Mini is democratizing access to advanced AI capabilities, enabling innovative applications across diverse business sectors.

Conclusion: The Future of Affordable Enterprise AI

GPT-4o Mini represents a significant milestone in the evolution of AI economics, making sophisticated AI capabilities accessible to organizations of all sizes. With its exceptional balance of performance and affordability, this model opens new possibilities for businesses seeking competitive advantages through AI implementation.

For developers and businesses looking to leverage these capabilities efficiently, specialized API services like laozhang.ai provide an optimized pathway to implementation, adding further value through simplified integration and enhanced cost management.

As AI becomes increasingly central to business operations, cost-effective models like GPT-4o Mini will play a crucial role in democratizing access to these transformative technologies.

Ready to implement GPT-4o Mini for your business? Register for laozhang.ai’s API service at https://api.laozhang.ai/register/?aff_code=JnIT and receive complimentary API credits to start building immediately.

Frequently Asked Questions

How does GPT-4o Mini compare to GPT-3.5 Turbo in performance?

GPT-4o Mini outperforms GPT-3.5 Turbo across key benchmarks, including an 82% score on MMLU, while costing more than 60% less per token.

What makes GPT-4o Mini more cost-effective than other models?

GPT-4o Mini achieves cost efficiency through architectural optimizations that maintain high performance while reducing computational requirements, allowing OpenAI to offer the model at significantly lower prices.

Is GPT-4o Mini suitable for enterprise applications?

Yes, GPT-4o Mini is designed for enterprise deployment, with robust safety features, high reliability, and performance metrics that exceed most business requirements, making it ideal for scaling AI implementations.

How can I access GPT-4o Mini through API services?

GPT-4o Mini is accessible through OpenAI’s direct API endpoints or through specialized middleware services like laozhang.ai, which can provide additional optimization and cost management features.

What is the token context window for GPT-4o Mini?

GPT-4o Mini offers a 128K token context window, substantially larger than many competing models, allowing it to process extensive documents and maintain conversation context effectively.

How do I estimate my potential cost savings with GPT-4o Mini?

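Measure your current input and output token usage, then multiply by GPT-4o Mini’s prices ($0.15 per 1M input tokens and $0.60 per 1M output tokens) and compare the result with your current bill. Against the GPT-3.5 Turbo prices in the comparison table above, that is 70% less for input tokens and 60% less for output tokens; savings against GPT-4o or GPT-4 Turbo are larger.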