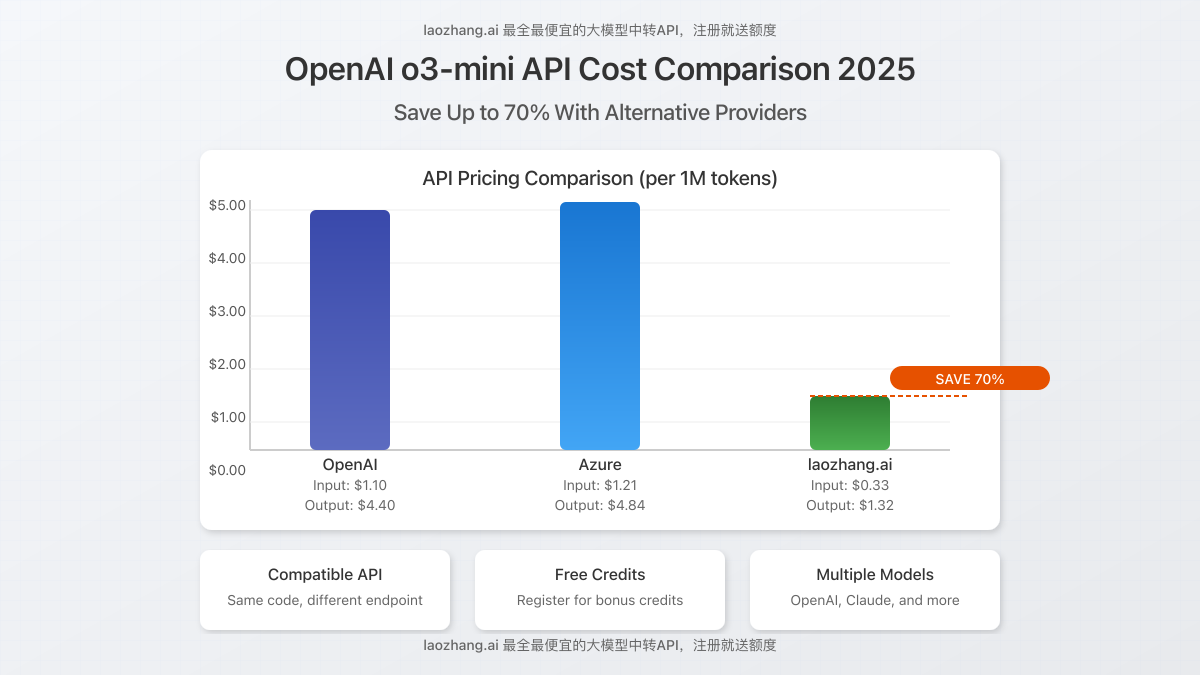

OpenAI o3-mini API Cost Comparison 2025: Save Up to 70% With Alternative Providers

Released on January 31, 2025, OpenAI’s o3-mini brings enterprise-level AI reasoning capabilities at significantly reduced costs compared to its larger counterparts. For developers and businesses building AI applications with budget constraints, understanding the exact pricing structure across different providers is crucial for optimizing operational expenses.

o3-mini API Pricing Breakdown: Official Rates

OpenAI positions o3-mini as the most cost-efficient reasoning model in its o-series. The pricing is designed to make advanced reasoning accessible to more developers and applications. The table below lists the official rates alongside two alternative access routes:

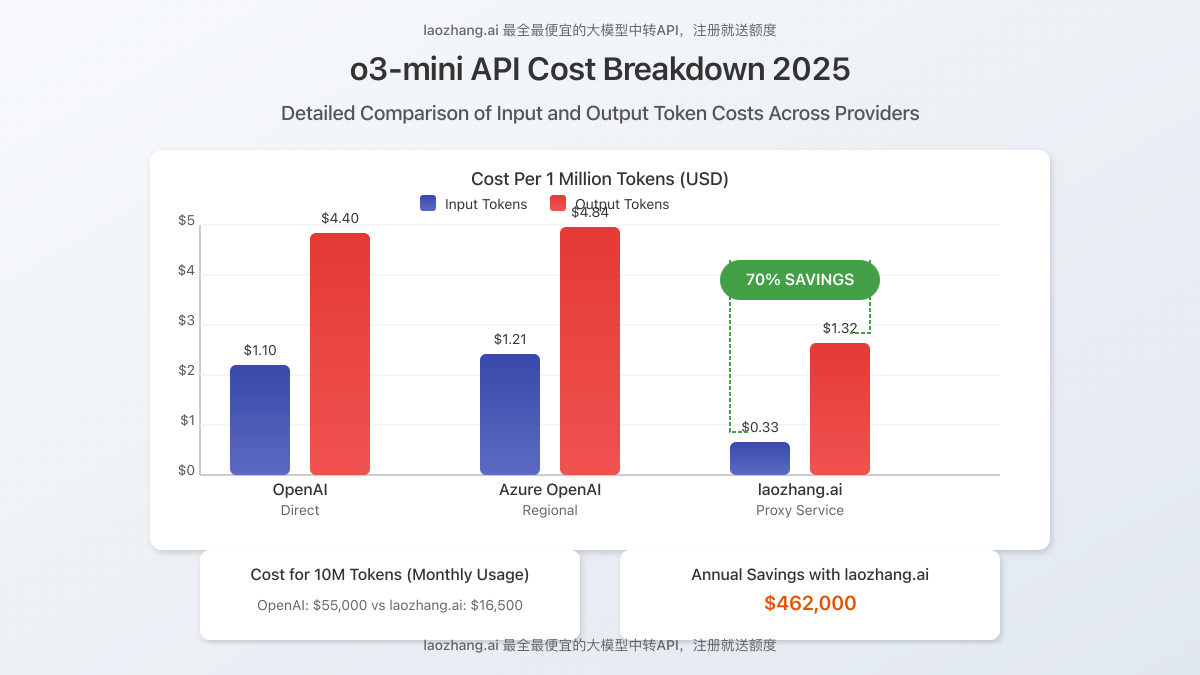

| Provider | Input Cost (per 1M tokens) | Cached Input Cost (per 1M tokens) | Output Cost (per 1M tokens) |
|---|---|---|---|
| OpenAI (Direct) | $1.10 | $0.55 | $4.40 |
| Azure OpenAI (Regional) | $1.21 | $0.605 | $4.84 |
| laozhang.ai | $0.33 | $0.165 | $1.32 |

At these rates, o3-mini costs less than half as much as GPT-4o ($2.50 input and $10.00 output per 1M tokens) and roughly a third as much as o1-mini, making it well suited to cost-sensitive production deployments that still require solid reasoning capabilities.
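To make the per-token rates concrete, here is a minimal sketch that turns the table into a per-request cost estimate. The rates are copied from the table above; the function name `request_cost` and the 2,000-input / 500-output token request are illustrative assumptions, not figures from any provider.

```python
from decimal import Decimal

# USD per 1M tokens, copied from the pricing table above.
RATES = {
    "OpenAI (Direct)": {"input": Decimal("1.10"), "output": Decimal("4.40")},
    "Azure OpenAI (Regional)": {"input": Decimal("1.21"), "output": Decimal("4.84")},
    "laozhang.ai": {"input": Decimal("0.33"), "output": Decimal("1.32")},
}

MILLION = Decimal(1_000_000)


def request_cost(provider: str, input_tokens: int, output_tokens: int) -> Decimal:
    """Estimate the USD cost of one request, ignoring cached-input discounts."""
    rate = RATES[provider]
    return (rate["input"] * input_tokens + rate["output"] * output_tokens) / MILLION


if __name__ == "__main__":
    # Hypothetical request size, chosen only for illustration.
    for provider in RATES:
        print(f"{provider}: ${request_cost(provider, 2_000, 500):.6f}")
```

Decimal arithmetic is used so that small per-request amounts do not pick up floating-point rounding noise when summed over many requests.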

Performance vs. Cost: What o3-mini Delivers

According to benchmarks from Artificial Analysis, o3-mini delivers impressive performance metrics relative to its cost:

- Reasoning capabilities approximately 90% as effective as o1-mini at one-third the price

- Output speed of 33 tokens/second (faster than average in its class)

- Context window of 200K tokens, with up to 100K output tokens (suitable for most applications)

- Strong performance on factual accuracy, creative tasks, and code generation

The model excels at tasks requiring logical reasoning, data analysis, and text summarization while remaining cost-effective for high-volume applications.

How to Save Up to 70% on o3-mini API Costs

While o3-mini already offers excellent value compared to larger models, businesses can further optimize costs through API proxy services like laozhang.ai:

Benefits of Using laozhang.ai for o3-mini Access:

- 70% Cost Reduction: Pay only $0.33 per 1M input tokens versus $1.10 direct

- Free Credits Upon Registration: Test capabilities without initial investment

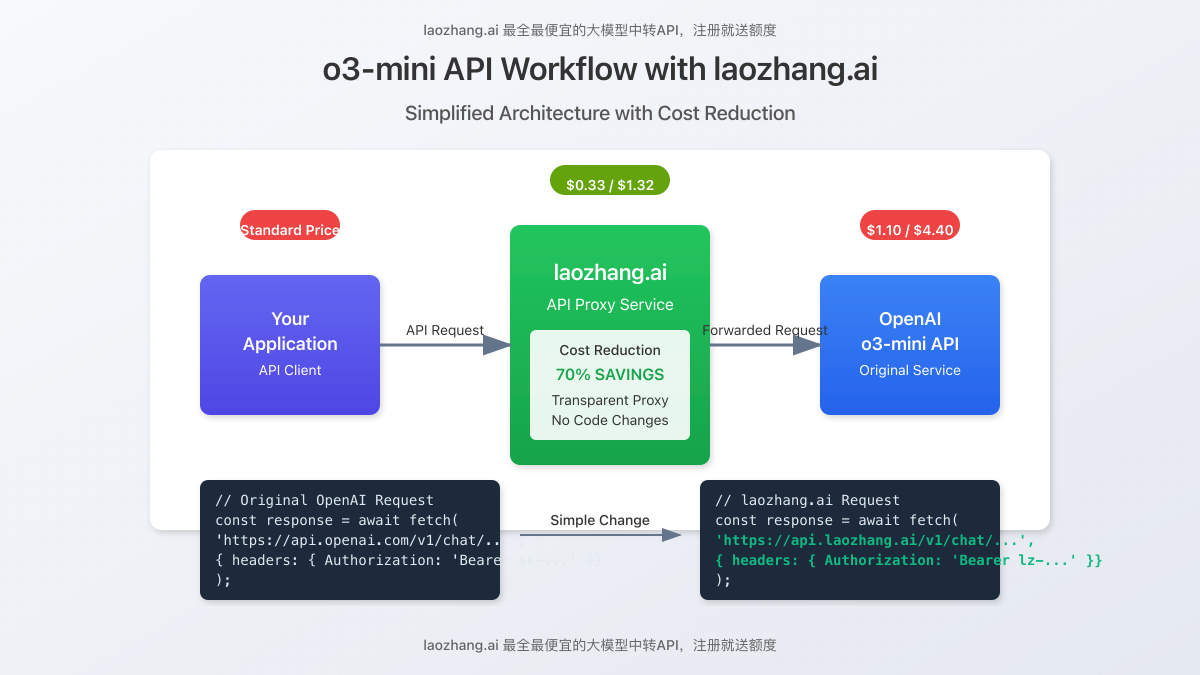

- API-Compatible Interface: Only the base URL and API key change from an existing OpenAI implementation

- Multi-Model Access: Single API key for OpenAI, Claude, and other providers

- Enterprise Volume Discounts: Additional savings for high-volume users
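The 70% figure follows directly from the listed rates, and the same arithmetic can be projected onto a monthly bill. The sketch below assumes the per-1M-token rates quoted in this article; the monthly volume (50M input tokens, 10M output tokens) and the function names are hypothetical.

```python
from decimal import Decimal

# USD per 1M tokens, as quoted in this article.
DIRECT = {"input": Decimal("1.10"), "output": Decimal("4.40")}
PROXY = {"input": Decimal("0.33"), "output": Decimal("1.32")}


def savings_percent(direct_rate: Decimal, proxy_rate: Decimal) -> Decimal:
    """Percentage saved when paying proxy_rate instead of direct_rate."""
    return (direct_rate - proxy_rate) / direct_rate * 100


def monthly_spend(rates: dict, input_millions: int, output_millions: int) -> Decimal:
    """Monthly spend in USD for a volume expressed in millions of tokens."""
    return rates["input"] * input_millions + rates["output"] * output_millions


if __name__ == "__main__":
    print(f"Input savings: {savings_percent(DIRECT['input'], PROXY['input']):.0f}%")
    print(f"Output savings: {savings_percent(DIRECT['output'], PROXY['output']):.0f}%")
    # Hypothetical workload: 50M input and 10M output tokens per month.
    direct = monthly_spend(DIRECT, 50, 10)
    proxy = monthly_spend(PROXY, 50, 10)
    print(f"Direct: ${direct:.2f}, proxy: ${proxy:.2f}, saved: ${direct - proxy:.2f}")
```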

Getting Started with laozhang.ai

Implementation requires minimal changes to existing OpenAI code. Simply:

- Register at laozhang.ai to receive your API key

- Replace the OpenAI base URL with the laozhang.ai endpoint

- Use your new API key in authorization headers

Example API Request with laozhang.ai:

    curl https://api.laozhang.ai/v1/chat/completions \
      -H "Content-Type: application/json" \
      -H "Authorization: Bearer $API_KEY" \
      -d '{
        "model": "o3-mini",
        "stream": false,
        "messages": [
          {"role": "system", "content": "You are a helpful assistant."},
          {"role": "user", "content": "Hello!"}
        ]
      }'
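A Python counterpart to the curl request, targeting o3-mini, might look like the sketch below. It uses only the standard library. The endpoint and headers come from the example above; the helper names `build_chat_request` and `send` are hypothetical, and the response shape (`choices[0].message.content`) is assumed to follow OpenAI's Chat Completions format, which the provider says it is compatible with.

```python
import json
import os
import urllib.request

# Base URL taken from the curl example above.
BASE_URL = "https://api.laozhang.ai/v1"


def build_chat_request(api_key: str, user_message: str, model: str = "o3-mini") -> urllib.request.Request:
    """Build (but do not send) a chat completions request."""
    payload = {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "system", "content": "You are a helpful assistant."},
            {"role": "user", "content": user_message},
        ],
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=60) as response:
        return json.load(response)


if __name__ == "__main__":
    api_key = os.environ.get("API_KEY")
    if api_key:  # Only call the API when a key is configured.
        reply = send(build_chat_request(api_key, "Hello!"))
        # Assumed OpenAI-compatible response shape.
        print(reply["choices"][0]["message"]["content"])
```

Keeping request construction separate from sending makes it easy to inspect the payload, or to point `BASE_URL` at a different OpenAI-compatible endpoint, without touching the rest of the code.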

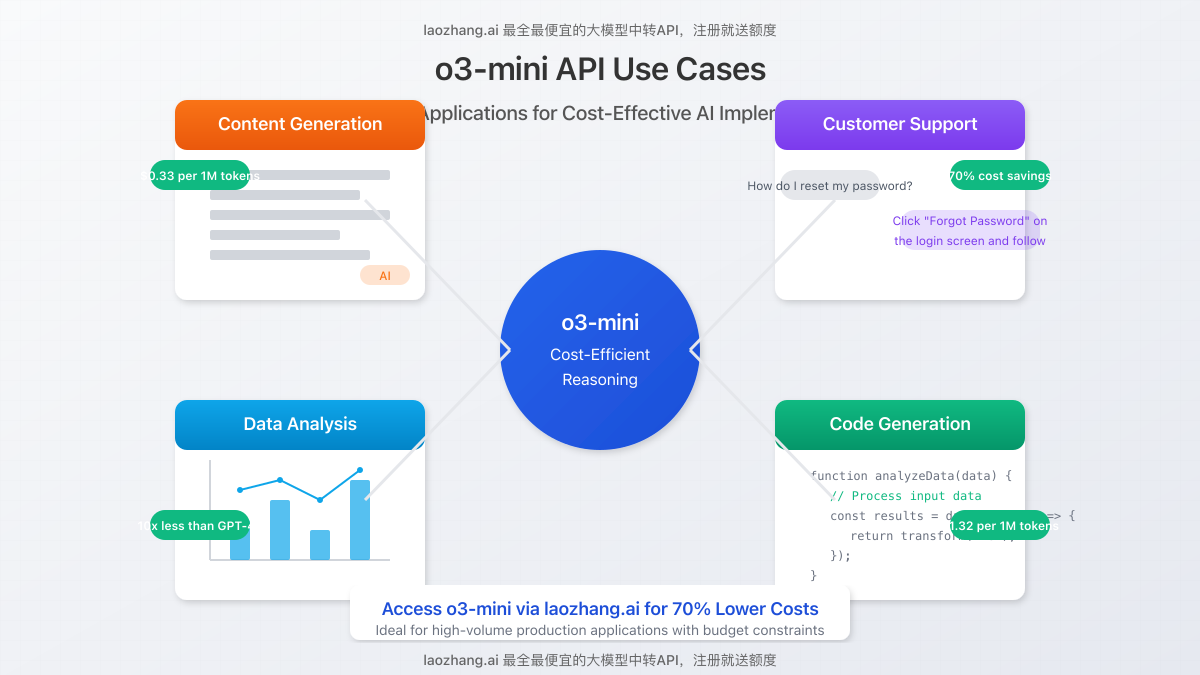

Use Cases: When to Choose o3-mini

o3-mini offers an optimal balance of capabilities and cost for numerous application types:

Content Generation

Blog posts, marketing copy, product descriptions at scale without prohibitive costs.

Customer Support Automation

Handle thousands of support queries with reasonable comprehension and response quality.

Data Analysis

Process and summarize large datasets, identify trends, and generate insights at lower costs.

Code Generation & Debugging

Assist developers with code snippets, bug identification, and optimizations.

For these use cases, o3-mini provides sufficient capabilities at less than half the cost of GPT-4o, making it well suited to production applications with budget constraints.

Cost Optimization Strategies for o3-mini API Usage

Beyond selecting a cost-effective provider, implement these strategies to maximize value:

- Prompt Engineering: Craft efficient prompts to reduce token usage

- Caching: Utilize cached input pricing for repetitive queries

- Response Streaming: Enable streaming to terminate unnecessary generation early

- Hybrid Approaches: Use o3-mini for initial processing and reserve premium models for complex cases

- Batch Processing: Combine similar requests to reduce overhead

Implementing these techniques alongside laozhang.ai’s discounted pricing can reduce operational costs by up to 85% compared to direct OpenAI API usage.
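The hybrid approach from the list above can be sketched as a small routing function that sends most prompts to o3-mini and escalates only the ones flagged as complex. Everything about the heuristic is an assumption made for illustration: the keyword list, the length threshold, and the `premium-model` placeholder name are hypothetical and would need tuning against real traffic.

```python
from dataclasses import dataclass

DEFAULT_MODEL = "o3-mini"
PREMIUM_MODEL = "premium-model"  # Hypothetical placeholder for a larger model.


@dataclass(frozen=True)
class Route:
    model: str
    reason: str


def choose_model(
    prompt: str,
    max_chars: int = 8_000,
    escalate_on: tuple = ("legal", "medical", "compliance"),
) -> Route:
    """Pick a model using simple, illustrative heuristics."""
    lowered = prompt.lower()
    for keyword in escalate_on:
        if keyword in lowered:
            return Route(PREMIUM_MODEL, f"matched keyword '{keyword}'")
    if len(prompt) > max_chars:
        return Route(PREMIUM_MODEL, "prompt exceeds length threshold")
    return Route(DEFAULT_MODEL, "default low-cost path")


if __name__ == "__main__":
    for text in ("Summarize this paragraph.", "Review this legal contract."):
        route = choose_model(text)
        print(f"{text!r} -> {route.model} ({route.reason})")
```

Returning the reason alongside the model name makes it possible to log why a request was escalated, which helps when checking whether the premium path is being used more often than the budget allows.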

Conclusion: Balancing Performance and Budget with o3-mini

OpenAI’s o3-mini represents a significant milestone in making advanced AI capabilities financially accessible to more developers and businesses. By combining the model’s inherent cost-efficiency with strategic provider selection through laozhang.ai, organizations can implement powerful AI capabilities while maintaining tight budget controls.

Start Using o3-mini Through laozhang.ai Today

Register at laozhang.ai to receive free credits and experience enterprise-grade AI capabilities at a fraction of the standard cost.

Frequently Asked Questions

How does o3-mini compare to GPT-3.5 Turbo in terms of cost?

o3-mini is approximately 2.5x more expensive than GPT-3.5 Turbo but offers significantly improved reasoning capabilities, making it more suitable for complex tasks.

Is laozhang.ai’s implementation of o3-mini identical to OpenAI’s?

Yes, laozhang.ai provides direct access to OpenAI’s o3-mini with identical capabilities and performance, only at reduced pricing through volume discounting.

What token limits apply to o3-mini?

o3-mini supports a 200K-token context window and up to 100K output tokens, allowing for processing of very long documents or conversations in a single request.

How is cached input pricing calculated?

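Cached input pricing applies to input tokens that match the beginning of a recently processed prompt, such as a shared system prompt reused across requests. Those tokens are billed at $0.55 instead of $1.10 per 1M on the direct API, a 50% reduction for the cached portion of the input.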
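As a worked example of the cached rate, the sketch below splits a request's input tokens into cached and uncached portions and prices each at the direct OpenAI rates quoted in this article. The function name `input_cost` and the 10,000-token request with 8,000 cached tokens are hypothetical.

```python
from decimal import Decimal

# Direct OpenAI rates in USD per 1M input tokens, as quoted in this article.
INPUT_RATE = Decimal("1.10")
CACHED_INPUT_RATE = Decimal("0.55")
MILLION = Decimal(1_000_000)


def input_cost(total_input_tokens: int, cached_tokens: int = 0) -> Decimal:
    """USD cost of the input side of one request, with part of it cached."""
    if not 0 <= cached_tokens <= total_input_tokens:
        raise ValueError("cached_tokens must be between 0 and total_input_tokens")
    uncached_tokens = total_input_tokens - cached_tokens
    return (uncached_tokens * INPUT_RATE + cached_tokens * CACHED_INPUT_RATE) / MILLION


if __name__ == "__main__":
    # Hypothetical request: 10,000 input tokens, 8,000 of them served from cache.
    full_price = input_cost(10_000)
    with_cache = input_cost(10_000, cached_tokens=8_000)
    print(f"Without caching: ${full_price:.4f}")
    print(f"With caching:    ${with_cache:.4f}")
```

In this example the input cost drops from $0.0110 to $0.0066, a 40% saving, because only the cached 80% of the prompt receives the 50% discount.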

Can I switch between providers without changing my code?

Yes, laozhang.ai maintains API compatibility with OpenAI’s interface, requiring only endpoint and API key changes to implement.

Is there a minimum usage requirement for laozhang.ai?

No, laozhang.ai offers pay-as-you-go pricing with no minimum usage requirements, making it suitable for businesses of all sizes.