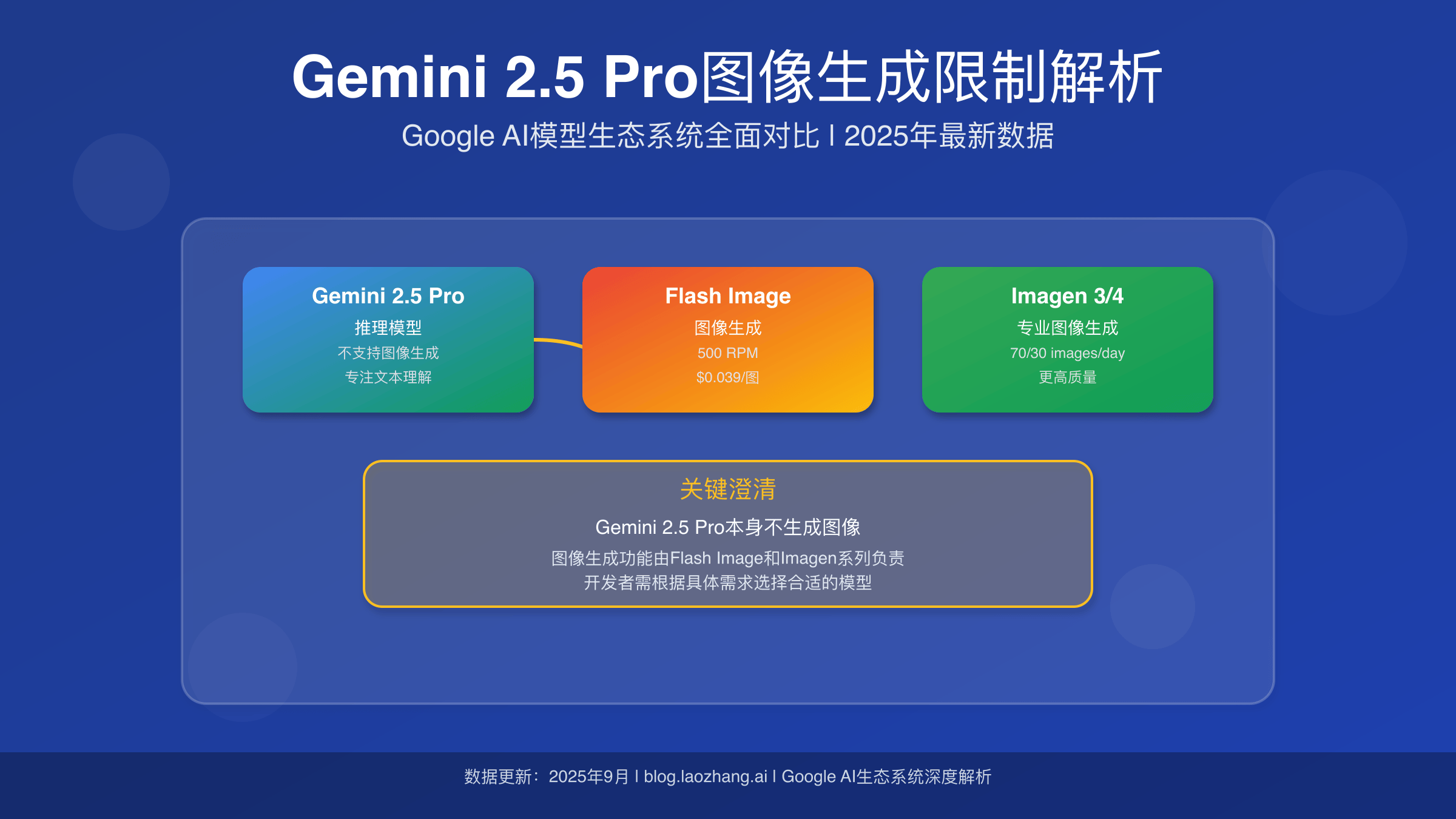

Gemini 2.5 Pro is a reasoning model that doesn’t directly generate images. Google’s image generation is handled by Gemini 2.5 Flash Image (500 RPM at Tier 1, $0.039 per image) and the Imagen models. Developers often confuse these models’ capabilities and rate limits when planning API integrations.

Understanding Google’s AI Model Architecture

The confusion surrounding Gemini 2.5 Pro’s image generation capabilities stems from Google’s complex AI model ecosystem. Gemini 2.5 Pro serves as Google’s most advanced reasoning model, optimized for complex problem-solving, code analysis, and multimodal understanding. However, it does not generate images directly. Instead, Google employs specialized models for image generation tasks, creating a clear separation between reasoning and generative capabilities.

This architectural decision reflects Google’s strategy of optimizing each model for specific tasks. Gemini 2.5 Pro excels at understanding and analyzing images within its 1-million-token context window, but image creation requires different computational approaches. For developers building applications that need both capabilities, understanding this distinction prevents integration errors and opens optimization opportunities. Learn more about Gemini API free tier limits for planning your implementation strategy.

Gemini 2.5 Flash Image: The Actual Image Generator

Gemini 2.5 Flash Image, launched in August 2025, is Google’s primary conversational image generation model. Unlike Gemini 2.5 Pro, Flash Image specializes in real-time image creation and editing through natural language conversations. The model supports character consistency and targeted transformations, and it draws on world knowledge when generating images.

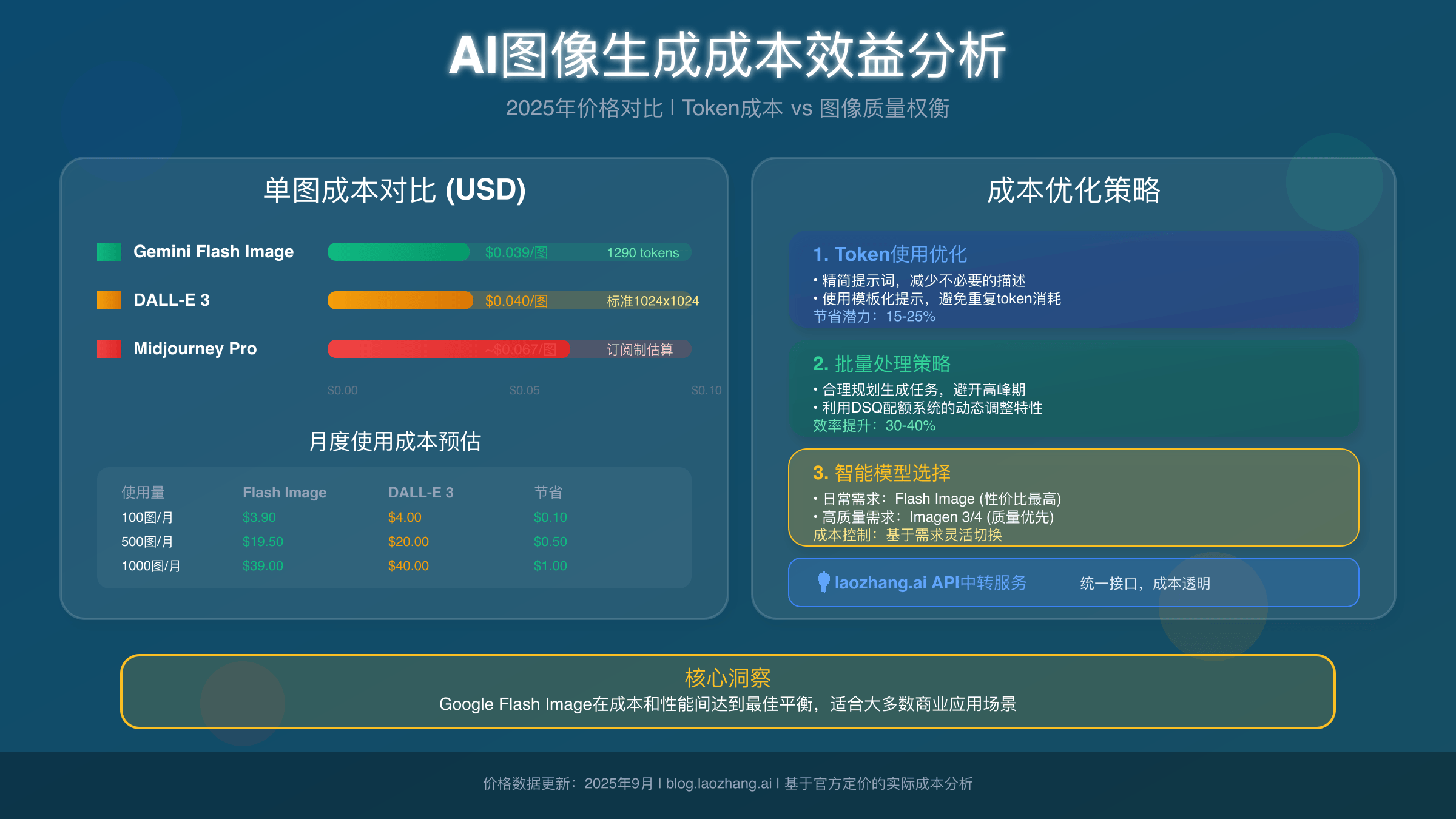

The technical specifications show that Flash Image is optimized for speed and efficiency. Each generated image consumes 1,290 output tokens (Google documents this flat figure for outputs up to 1024x1024 pixels), with pricing set at $0.039 per image. This token-based approach keeps costs predictable for developers integrating image generation into their applications, which simplifies budget planning. For detailed implementation guidance, see our Gemini Flash Image API guide.

Gemini 2.5 Pro Rate Limits and API Restrictions

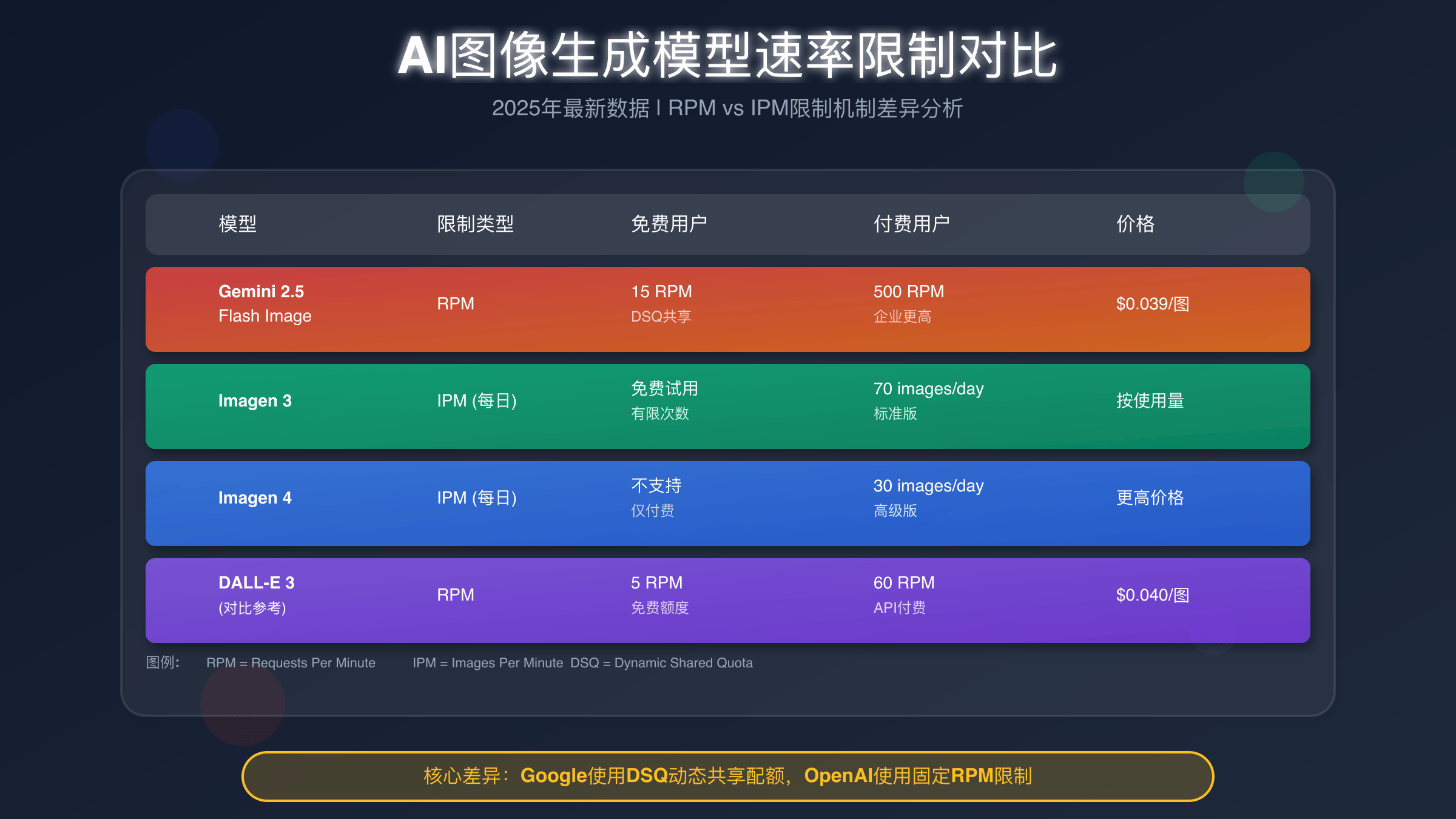

Google implements tiered rate limiting across its AI models, with significant differences between free and paid access levels. Gemini 2.5 Pro’s free tier provides 5 requests per minute (RPM), 250,000 tokens per minute (TPM), and 100 requests per day (RPD). These limits increase substantially with paid tiers, reaching 2,000 RPM for Tier 3 users with unlimited daily requests.
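A client can stay under these limits by tracking its own request timestamps. The sketch below is one possible approach, not Google’s mechanism: `SlidingWindowLimiter` is a hypothetical helper, and it assumes a rolling 24-hour window for the daily quota, which may differ from how the API actually resets RPD.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Hypothetical client-side guard for an RPM and RPD budget."""

    def __init__(self, rpm, rpd, clock=time.monotonic):
        self.rpm = rpm
        self.rpd = rpd
        self.clock = clock
        self.sent = deque()  # timestamps of requests sent in the last 24 hours

    def wait_time(self):
        """Seconds to wait before the next request is allowed (0.0 if allowed now)."""
        now = self.clock()
        # Forget requests older than one day (assumed rolling window).
        while self.sent and now - self.sent[0] >= 86400:
            self.sent.popleft()
        if len(self.sent) >= self.rpd:
            return self.sent[0] + 86400 - now
        recent = [t for t in self.sent if now - t < 60]
        if len(recent) >= self.rpm:
            return recent[0] + 60 - now
        return 0.0

    def record(self):
        """Call right after a request has been sent."""
        self.sent.append(self.clock())


# Free-tier figures for Gemini 2.5 Pro as quoted above.
FREE_TIER_PRO = {"rpm": 5, "rpd": 100}
limiter = SlidingWindowLimiter(**FREE_TIER_PRO)
```

Before each call, sleep for `limiter.wait_time()` seconds, send the request, then call `limiter.record()`. The injectable `clock` exists only to make the class easy to test.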

Gemini 2.5 Flash Image Preview operates under different constraints, offering 500 RPM for Tier 1 users with more generous token allowances. However, the preview status means these limits may change as Google transitions the model to general availability. Developers should monitor the official documentation at ai.google.dev for updates, as preview models often experience rate limit adjustments during stabilization periods. For comprehensive rate limit strategies, check our Gemini API rate limits guide.

Imagen Models: Google’s Image Generation Alternatives

Beyond the Gemini ecosystem, Google maintains the Imagen model family specifically for high-quality image generation. Imagen 4, the latest iteration, provides superior image quality but operates under much stricter rate limits. Free tier users receive only 10-20 requests per day (RPD), making it unsuitable for high-volume applications without paid subscriptions.

The Imagen models utilize different computational architectures compared to Gemini Flash Image, focusing on photorealistic output quality over conversational flexibility. This trade-off makes Imagen ideal for applications requiring premium visual content, while Flash Image better serves interactive applications needing rapid iteration and real-time generation capabilities.

Pricing Structure and Cost Analysis

Google’s pricing model for image generation reflects the computational complexity of different models. Gemini 2.5 Flash Image charges $0.039 per image through its token-based system, where each image equals 1,290 output tokens. This approach provides transparent pricing that scales linearly with usage, unlike subscription models that may include unused capacity. For detailed cost breakdowns, explore our Gemini API pricing guide.
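The per-image figure can be reproduced from the token count. The sketch below assumes an output-token rate of $30 per million tokens, which is the rate that makes 1,290 tokens come out to roughly $0.039; treat that rate as an assumption and check it against current pricing before budgeting.

```python
TOKENS_PER_IMAGE = 1290                 # figure quoted in this article
USD_PER_MILLION_OUTPUT_TOKENS = 30.0    # assumption: rate implied by $0.039 per image


def image_cost_usd(num_images,
                   tokens_per_image=TOKENS_PER_IMAGE,
                   usd_per_million=USD_PER_MILLION_OUTPUT_TOKENS):
    """Linear cost estimate: images x tokens per image x price per token."""
    return num_images * tokens_per_image * usd_per_million / 1_000_000


print(round(image_cost_usd(1), 4))       # 0.0387, which rounds to $0.039
print(round(image_cost_usd(10_000), 2))  # 387.0
```

Because the cost is linear in the number of images, doubling volume doubles spend, with no volume tiers assumed in this estimate.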

For high-volume applications, developers can optimize costs through batch processing and intelligent caching strategies. The laozhang.ai API service offers streamlined access to Google’s image generation models with built-in optimization features, helping developers reduce token consumption through smart request batching and response caching mechanisms.

Gemini Free Tier Image Generation Limitations

Google’s free tier provides limited access to test image generation capabilities, but production applications quickly exhaust these quotas. The 100 RPD limit for Gemini 2.5 Pro and even more restrictive limits for specialized image models create bottlenecks for development teams building image-heavy applications.

Several strategies help maximize free tier usage during development phases. Implementing request caching prevents duplicate generations. Lower-resolution outputs during testing help only where a model bills or meters by output size; with Flash Image’s flat 1,290 tokens per image, the number of requests is what consumes quota. Developers can also leverage multiple model types strategically, using Flash Image for rapid prototyping and Imagen models for final production assets. If you encounter quota issues, our API quota error solutions guide provides universal troubleshooting techniques.
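Request caching can be as simple as keying results by a hash of the prompt and its parameters. This is a minimal in-memory sketch; `GenerationCache` and the `generate` callable it wraps are hypothetical names, not part of any Google SDK.

```python
import hashlib
import json


class GenerationCache:
    """Hypothetical in-memory cache that avoids repeating identical generations."""

    def __init__(self, generate):
        # `generate` is any callable (prompt, **params) -> result, e.g. an API wrapper.
        self._generate = generate
        self._store = {}
        self.misses = 0  # number of calls that actually reached the API

    @staticmethod
    def _key(prompt, params):
        # Stable key: same prompt and parameters always hash to the same value.
        payload = json.dumps({"prompt": prompt.strip(), "params": params},
                             sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def get(self, prompt, **params):
        key = self._key(prompt, params)
        if key not in self._store:
            self.misses += 1
            self._store[key] = self._generate(prompt, **params)
        return self._store[key]
```

During development, wrapping the real call in `GenerationCache` means rerunning a test suite with unchanged prompts spends no additional quota. A production version would likely persist results to disk or object storage instead of a dictionary.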

Enterprise vs Developer API Access

Google differentiates between its developer-facing Gemini API and its enterprise Vertex AI platform, with significant implications for rate limits and pricing. Vertex AI users get higher default quotas and can request quota increases through Google Cloud support channels, while Gemini API users face more restrictive limits designed for smaller-scale applications.

Enterprise users benefit from Service Level Agreements (SLAs) and dedicated support channels, making Vertex AI preferable for mission-critical applications. However, the additional complexity and higher baseline costs make the standard Gemini API more suitable for startups and individual developers exploring Google’s AI capabilities.

Common Integration Mistakes and Solutions

Developers frequently encounter issues when integrating Google’s image generation models due to architectural misunderstandings. The most common mistake involves attempting to use Gemini 2.5 Pro directly for image generation, resulting in API errors and wasted development time. Understanding each model’s specific capabilities prevents these integration failures.
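One way to catch this mistake before a request is sent is to route by task and reject image-output requests aimed at a reasoning model. The sketch below is illustrative: the model identifier strings are assumptions and should be checked against the current model list, and `pick_model` and `check_request` are hypothetical helpers.

```python
# Assumed model identifiers; verify against the official model list.
MODEL_FOR_TASK = {
    "reasoning": "gemini-2.5-pro",
    "image_understanding": "gemini-2.5-pro",
    "image_generation": "gemini-2.5-flash-image-preview",
}

# Models in this table that can return images.
IMAGE_OUTPUT_MODELS = {"gemini-2.5-flash-image-preview"}


def pick_model(task):
    """Return the model assumed to handle the given task."""
    try:
        return MODEL_FOR_TASK[task]
    except KeyError:
        raise ValueError(f"unknown task: {task!r}") from None


def check_request(model, wants_image_output):
    """Fail fast instead of sending an image request to a non-image model."""
    if wants_image_output and model not in IMAGE_OUTPUT_MODELS:
        raise ValueError(f"{model} does not generate images; "
                         f"use {pick_model('image_generation')} instead")
```

Calling `check_request("gemini-2.5-pro", wants_image_output=True)` raises a `ValueError` locally, which is cheaper to debug than an API error.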

Another prevalent issue involves rate limit handling. Google’s error responses provide specific information about quota exhaustion and reset times, but many developers implement generic retry logic that doesn’t account for these details. Implementing exponential backoff with quota-aware logic improves application stability and user experience during high-traffic periods. Before starting your integration, ensure you understand how to get your Gemini API key properly.
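A quota-aware retry loop prefers the delay the server suggests and falls back to exponential backoff with jitter only when none is given. In this sketch, `QuotaError` and its `retry_after` attribute are hypothetical stand-ins for however your client library surfaces a rate-limit (HTTP 429) response.

```python
import random
import time


class QuotaError(Exception):
    """Hypothetical stand-in for a rate-limit (HTTP 429) error."""

    def __init__(self, message, retry_after=None):
        super().__init__(message)
        self.retry_after = retry_after  # seconds suggested by the server, if any


def call_with_backoff(send, max_attempts=5, base_delay=1.0, max_delay=60.0,
                      sleep=time.sleep, rng=random.random):
    """Call send(); on QuotaError, wait and retry up to max_attempts times."""
    for attempt in range(max_attempts):
        try:
            return send()
        except QuotaError as err:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            if err.retry_after is not None:
                delay = err.retry_after  # quota-aware: trust the server's hint
            else:
                delay = min(max_delay, base_delay * 2 ** attempt)
                delay *= 0.5 + rng() / 2  # jitter: 50-100% of the computed delay
            sleep(delay)
```

The `sleep` and `rng` parameters are injectable so the retry schedule can be tested without real waiting. Daily-quota exhaustion usually should not be retried at all; a caller could check for that case and fail immediately.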

Gemini Image Generation Performance Optimization

Optimizing image generation performance requires understanding Google’s model-specific characteristics. Flash Image performs best with conversational, iterative prompts that build upon previous generations, while Imagen models prefer detailed, single-prompt approaches. Matching prompt strategies to model capabilities significantly improves both output quality and generation speed.

Request batching provides another optimization opportunity, though Google’s current API design limits batch sizes. The laozhang.ai service implements intelligent batching strategies that work within these constraints, automatically grouping compatible requests to maximize throughput while respecting rate limits and maintaining response quality.

Competitive Analysis: Google vs OpenAI vs Midjourney

Google’s image generation ecosystem competes directly with OpenAI’s DALL-E and Midjourney’s specialized platform. DALL-E offers 60 requests per minute for paid users, which is below the 500 RPM cited above for Flash Image at Tier 1, and it lacks the conversational editing capabilities that distinguish Google’s approach. Midjourney provides superior artistic quality but operates primarily through Discord, limiting API integration options. For detailed comparisons, see our Gemini vs GPT-4 image API comparison.

Cost comparisons reveal Google’s competitive positioning. At $0.039 per image, Flash Image marginally undercuts DALL-E’s $0.040 pricing while offering comparable quality and additional editing features. However, a difference of $0.001 per image is small, and the rate limits available at a given tier can matter more for high-volume applications, making total cost of ownership calculations essential for model selection decisions. To understand similar limitations with other providers, check our analysis of ChatGPT Plus image generation limits.
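A rough total-cost comparison combines price per image with the minimum time a rate limit allows. The sketch below uses only the figures quoted in this article; the provider labels are illustrative, and real limits depend on account tier.

```python
import math

# Figures quoted in this article; real limits depend on account tier.
PROVIDERS = {
    "flash_image_tier1": {"rpm": 500, "usd_per_image": 0.039},
    "dall_e_paid": {"rpm": 60, "usd_per_image": 0.040},
}


def estimate(num_images, rpm, usd_per_image):
    """Return (minimum whole minutes at the rate limit, total cost in USD)."""
    minutes = math.ceil(num_images / rpm)
    cost = round(num_images * usd_per_image, 2)
    return minutes, cost


for name, spec in PROVIDERS.items():
    minutes, cost = estimate(10_000, **spec)
    print(f"{name}: at least {minutes} min, ${cost:.2f}")
```

For 10,000 images, these inputs give about 20 minutes and $390 for Flash Image versus about 167 minutes and $400 for DALL-E, so under these assumptions the throughput gap is far larger than the price gap.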

Future Developments and Model Roadmap

Google continues expanding its image generation capabilities, with plans to increase rate limits as models transition from preview to general availability status. The company has announced intentions to integrate image generation more deeply into the broader Gemini ecosystem, potentially offering unified APIs that combine reasoning and generation capabilities seamlessly.

Developers should monitor Google’s developer blog and official documentation for updates on model availability and rate limit changes. The rapid evolution of Google’s AI offerings means current limitations may not persist long-term, but planning applications around current constraints ensures stable deployment strategies.

Best Practices for Production Deployment

Production deployments using Google’s image generation models require careful architecture planning to handle rate limits and ensure reliable service. Implementing queue-based systems helps manage request bursts while staying within API constraints, while comprehensive error handling prevents cascading failures during quota exhaustion periods.
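A queue-based design can be sketched as a worker that drains pending jobs at an even pace derived from the RPM limit. This is a simplified, single-threaded illustration; `drain` and the `send` callable are hypothetical, and a production system would typically use a durable queue and multiple workers.

```python
import time
from collections import deque


def drain(pending, send, rpm, sleep=time.sleep):
    """Send queued jobs one at a time, spaced so the rate stays at or below rpm."""
    interval = 60.0 / rpm  # seconds between requests
    results = []
    while pending:
        job = pending.popleft()
        results.append(send(job))
        if pending:
            sleep(interval)  # pause only when more work remains
    return results


# Example: a burst of prompts arrives at once but is sent at 5 requests per minute.
queue = deque(["a red fox", "a blue heron", "a green turtle"])
```

Calling `drain(queue, send=my_api_call, rpm=5)` would send one request every 12 seconds. Even spacing is more conservative than bursting up to the limit, which trades a little latency for fewer quota errors.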

For applications requiring guaranteed image generation capabilities, consider hybrid approaches combining multiple providers. Services like laozhang.ai offer unified access to multiple image generation APIs, automatically routing requests to available providers when primary services hit rate limits. This approach improves application resilience while maintaining cost efficiency through intelligent provider selection.
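The fallback idea can be illustrated without any particular vendor: try providers in priority order and move on only when one reports a rate limit. In this sketch, `RateLimited`, `generate_with_fallback`, and the provider callables are all hypothetical; it does not describe how any specific routing service works.

```python
class RateLimited(Exception):
    """Hypothetical error a provider wrapper raises when its quota is exhausted."""


def generate_with_fallback(prompt, providers):
    """Try each (name, generate) pair in order; return (name, result) from the first success.

    Only RateLimited triggers a fallback. Other exceptions propagate, since a
    malformed request would likely fail on every provider.
    """
    limited = []
    for name, generate in providers:
        try:
            return name, generate(prompt)
        except RateLimited:
            limited.append(name)
    raise RuntimeError(f"all providers rate limited: {limited}")
```

The provider list order encodes preference, so placing the cheapest acceptable provider first keeps costs down while the later entries act as overflow capacity.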