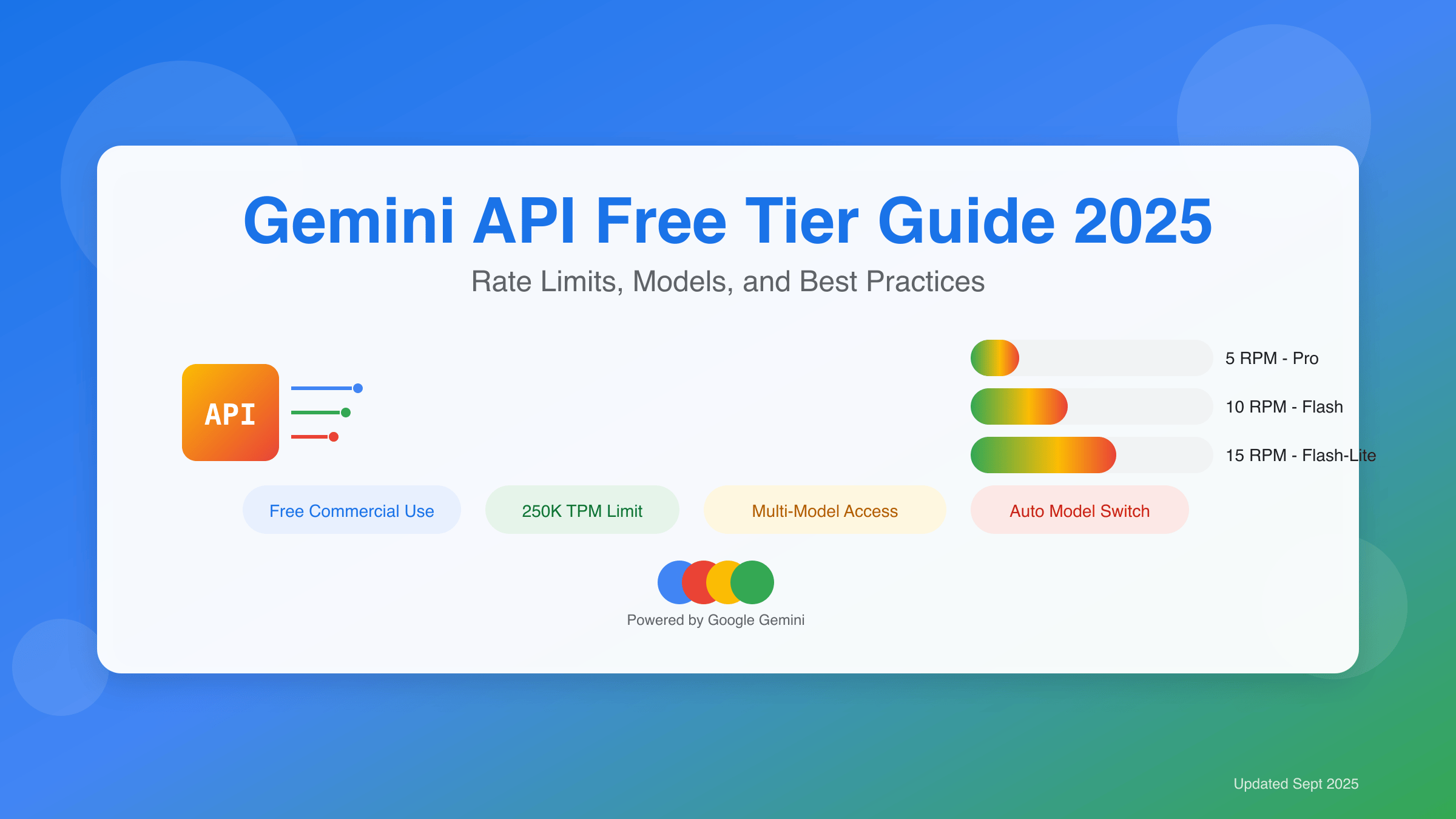

Google’s Gemini API free tier provides generous access to cutting-edge AI models with 5-15 requests per minute across three model variants. The service includes commercial usage rights, 250,000 tokens per minute capacity, and automatic model switching capabilities for optimal performance.

Understanding Gemini Free Tier Rate Limits

Google’s Gemini API free tier operates with a sophisticated rate limiting system designed to provide fair access while maintaining service quality. As of September 2025, the free tier supports three distinct model variants, each with specific performance characteristics and usage limits. Understanding these limits is crucial for developers planning their integration strategy.

The rate limiting system operates on a per-minute basis with daily quotas that reset at midnight Pacific Time. This structure allows for burst usage patterns while ensuring sustainable access across the developer community. The system tracks requests per minute (RPM), tokens per minute (TPM), and requests per day (RPD) independently.
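To make the daily reset concrete, here is a minimal sketch that computes how long an application would need to wait for the next quota reset. It assumes the midnight Pacific Time reset described above; the helper name `seconds_until_daily_reset` is hypothetical, and the caller is expected to pass a timezone-aware datetime.

```python
from datetime import datetime, timedelta, timezone
from zoneinfo import ZoneInfo

# Assumption: daily quotas reset at midnight Pacific Time, as described above
PACIFIC = ZoneInfo("America/Los_Angeles")


def seconds_until_daily_reset(now=None):
    """Return the seconds remaining until the next midnight Pacific Time."""
    if now is None:
        now = datetime.now(tz=PACIFIC)
    local_now = now.astimezone(PACIFIC)
    next_midnight = (local_now + timedelta(days=1)).replace(
        hour=0, minute=0, second=0, microsecond=0
    )
    # Subtract in UTC so days with a daylight-saving change are measured correctly
    return (next_midnight.astimezone(timezone.utc)
            - local_now.astimezone(timezone.utc)).total_seconds()
```

An application that has exhausted its requests per day could sleep for this duration, or surface it to users as the time until service resumes.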

Rate limits are enforced at the API key level, making it important to design applications that can handle temporary throttling gracefully. When limits are exceeded, the API returns a 429 status code with detailed information about when requests can be resumed. For comprehensive information about all tier specifications and advanced rate limiting strategies, see our complete Gemini API rate limits guide.

Gemini Model Variants and Specifications

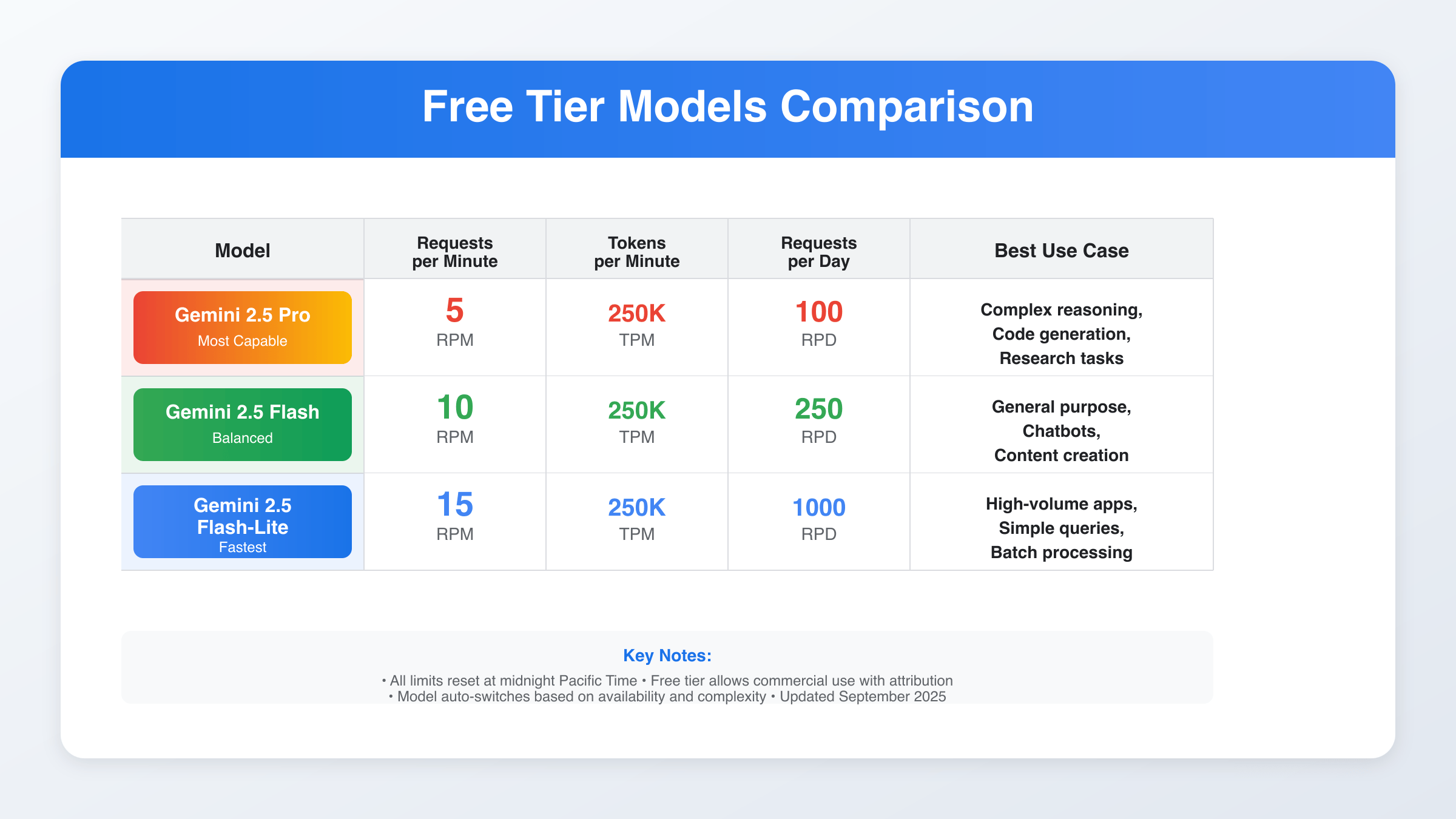

The Gemini free tier provides access to three optimized model variants, each tailored for different use cases and performance requirements. These models represent different points on the performance-speed spectrum, allowing developers to choose the most appropriate option for their specific needs.

Gemini 2.5 Pro delivers the highest reasoning capabilities with 5 requests per minute, 250,000 tokens per minute, and 100 requests per day. This model excels at complex analytical tasks, detailed code generation, and sophisticated reasoning problems. The lower request limit reflects the computational intensity required for maximum quality outputs.

Gemini 2.5 Flash strikes a balance between performance and speed, offering 10 requests per minute, 250,000 tokens per minute, and 250 requests per day. This model handles most general-purpose AI tasks effectively while providing faster response times. It’s optimized for applications requiring consistent performance with moderate complexity. For multimodal applications, you can also leverage Gemini 2.5 Flash for image processing at competitive rates.

Gemini 2.5 Flash-Lite prioritizes speed and throughput with 15 requests per minute, 250,000 tokens per minute, and 1,000 requests per day. This variant is ideal for high-frequency applications, simple queries, and real-time interactions where response latency is critical.
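The limits quoted above are easier to reason about once they are in one place. The following sketch collects them in a dictionary and derives two numbers from them: the spacing between requests that keeps a client under the per-minute limit, and how quickly sustained maximum-rate usage would consume the daily quota. The constant and function names are illustrative, and the figures are the ones stated in this article, not values read from the API.

```python
# Free tier limits as quoted in this article (requests/minute, tokens/minute, requests/day)
FREE_TIER_LIMITS = {
    "gemini-2.5-pro": {"rpm": 5, "tpm": 250_000, "rpd": 100},
    "gemini-2.5-flash": {"rpm": 10, "tpm": 250_000, "rpd": 250},
    "gemini-2.5-flash-lite": {"rpm": 15, "tpm": 250_000, "rpd": 1_000},
}


def min_interval_seconds(model_name):
    """Seconds to wait between requests to stay under the per-minute limit."""
    return 60.0 / FREE_TIER_LIMITS[model_name]["rpm"]


def minutes_to_exhaust_daily_quota(model_name):
    """Minutes of sustained maximum-rate usage before the daily quota runs out."""
    limits = FREE_TIER_LIMITS[model_name]
    return limits["rpd"] / limits["rpm"]
```

With these numbers, Gemini 2.5 Pro at full speed would use its whole daily quota in 20 minutes, which is why the daily limit, not the per-minute one, tends to be the binding constraint for that model.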

Gemini Model Switching Behavior and Automatic Optimization

One of the most sophisticated features of the Gemini free tier is its automatic model switching capability. The system intelligently routes requests between model variants based on query complexity, current load, and user usage patterns. This optimization typically triggers after 10-15 consecutive prompts to the same model variant.

When the system detects that a user has been consistently using Gemini 2.5 Pro for simpler tasks, it may automatically redirect subsequent requests to Gemini 2.5 Flash to preserve computational resources. This switching behavior is designed to maintain overall system performance while ensuring users receive appropriate model capabilities for their specific requests.

Developers can influence this behavior by structuring their requests appropriately and understanding the complexity thresholds that trigger switching. Simple factual queries, basic text generation, and straightforward API calls are prime candidates for automatic downgrading, while complex reasoning tasks typically maintain access to higher-tier models.

Commercial Usage Rights and Restrictions

Google’s Gemini free tier explicitly permits commercial usage, setting it apart from many other free AI API offerings. This commercial licensing allows startups, independent developers, and small businesses to integrate Gemini capabilities into production applications without immediate licensing fees.

However, commercial usage comes with specific restrictions that developers must understand. The free tier includes rate limiting that may not support high-volume commercial applications, and Google reserves the right to monitor usage patterns for compliance with their acceptable use policies.

Applications generating revenue from Gemini API integration must ensure they comply with content policies, attribution requirements, and fair usage guidelines. Google requires that commercial users respect the intended use cases and avoid activities that could negatively impact service availability for other users.

Setting Up Gemini API Access and Authentication

Getting started with the Gemini API requires creating a Google AI Studio account and generating an API key. The process is streamlined for developers and typically takes less than five minutes to complete. Once you have an API key, you can immediately begin making requests to any of the available model variants. For detailed step-by-step instructions on obtaining and configuring your API key, check our comprehensive Gemini API key setup guide.

The setup process involves visiting the Google AI Studio website, signing in with your Google account, and navigating to the API key generation section. Each Gemini API key is tied to your Google account and inherits the rate limits associated with the free tier automatically.

For production applications, consider implementing proper Gemini API key management practices including environment variable storage, rotation policies, and monitoring usage to avoid unexpected limit violations. Google’s official authentication documentation provides additional security guidelines for enterprise deployments.
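As a minimal sketch of the environment variable approach, the helpers below read the key at startup and produce a masked form that is safe to write to logs. The variable name `GEMINI_API_KEY` matches the one used in the integration example in this article; `load_api_key` and `mask_key` are hypothetical helper names, not part of any Google library.

```python
import os


def load_api_key(env_var="GEMINI_API_KEY"):
    """Read the API key from the environment and fail fast if it is missing."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before starting the app")
    return key


def mask_key(key, visible=4):
    """Return a log-safe form of the key that shows only the last few characters."""
    if len(key) <= visible:
        return "*" * len(key)
    return "*" * (len(key) - visible) + key[-visible:]
```

Failing at startup rather than on the first request makes a missing or rotated key visible immediately, and logging only the masked form helps when checking which key a deployment is using.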

Gemini API Python Integration and Code Examples

Integrating Gemini API into Python applications requires the official Google Generative AI library, which provides comprehensive support for all model variants and features. The library handles authentication, request formatting, and response parsing automatically.

```python
import os
import time

import google.generativeai as genai

# Configure API key
genai.configure(api_key=os.environ['GEMINI_API_KEY'])

# Initialize models
model_pro = genai.GenerativeModel('gemini-2.5-pro')
model_flash = genai.GenerativeModel('gemini-2.5-flash')
model_lite = genai.GenerativeModel('gemini-2.5-flash-lite')


def generate_with_retry(model, prompt, max_retries=3):
    """Generate content with automatic retry for rate limits"""
    for attempt in range(max_retries):
        try:
            response = model.generate_content(prompt)
            return response.text
        except Exception as e:
            # Retry only on rate limit errors, and only while attempts remain
            if "429" in str(e) and attempt < max_retries - 1:
                wait_time = 2 ** attempt  # Exponential backoff
                print(f"Rate limit hit, waiting {wait_time} seconds...")
                time.sleep(wait_time)
            else:
                raise


# Usage example
prompt = "Explain the benefits of API rate limiting"
result = generate_with_retry(model_flash, prompt)
print(result)
```

This code example demonstrates proper error handling for rate limits, exponential backoff for retries, and model selection based on use case requirements. The retry mechanism is essential for production applications operating near rate limit boundaries.
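The retry helper above waits a fixed 1, 2, then 4 seconds. One common refinement, which is an addition here and not part of the original example, is to cap the delay and add random jitter so that several clients sharing a key do not retry in lockstep. A minimal sketch, with `backoff_delay` as a hypothetical helper name:

```python
import random


def backoff_delay(attempt, base=1.0, cap=60.0, rng=random):
    """Full-jitter exponential backoff.

    Returns a random delay between 0 and min(cap, base * 2**attempt) seconds.
    """
    ceiling = min(cap, base * (2 ** attempt))
    return rng.uniform(0, ceiling)
```

To use it, replace `wait_time = 2 ** attempt` in the retry loop with `wait_time = backoff_delay(attempt)`. The cap keeps long retry chains from sleeping for minutes at a time.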

Gemini API Rate Limit Optimization Strategies

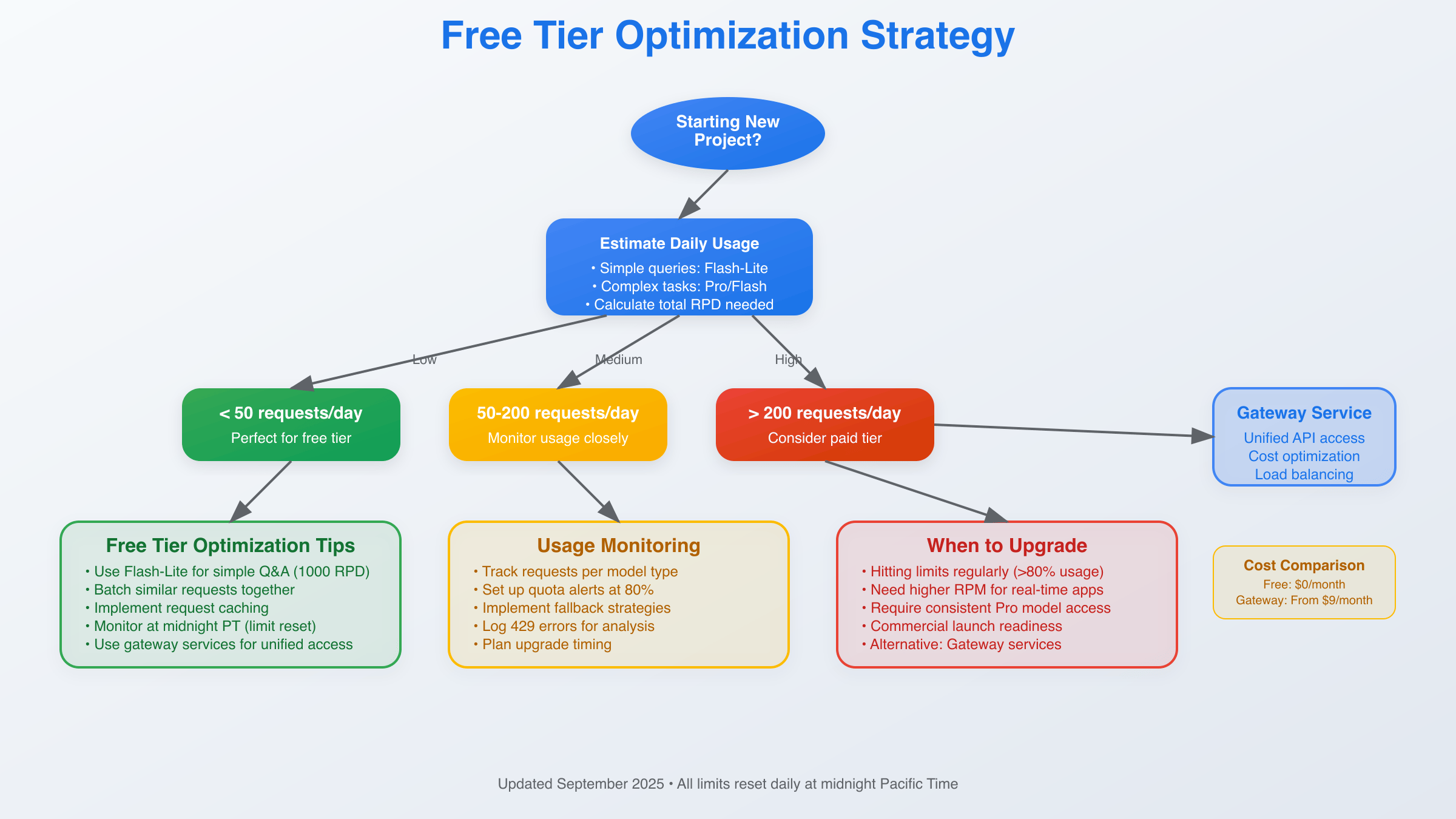

Maximizing the value of Gemini's free tier requires a strategic approach to request management and model selection. Understanding when to use each model variant can significantly extend your effective usage capacity while maintaining application performance.

For applications processing fewer than 50 requests per day, the free tier provides excellent coverage across all use cases. These applications can use Gemini 2.5 Pro for complex tasks without concern for daily limits, while reserving Flash variants for higher-frequency operations.

Applications processing 50-200 requests daily need more careful optimization. Consider using Gemini 2.5 Flash as the default choice, escalating to Pro only for complex reasoning tasks, and using Flash-Lite for simple queries that don't require sophisticated processing.

High-volume applications exceeding 200 requests daily will quickly encounter free tier limitations. These applications should consider implementing request queuing, caching strategies, or evaluating paid tier options for sustainable scaling.
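The volume guidance above can be sketched as a small router that tracks daily usage per model and falls back to the next variant once a quota is spent. The task categories (`complex`, `general`, `simple`), the preference order, and the class name `DailyBudget` are assumptions made for illustration; the daily limits are the ones quoted earlier in this article.

```python
# Requests per day, as quoted earlier in this article
RPD = {
    "gemini-2.5-pro": 100,
    "gemini-2.5-flash": 250,
    "gemini-2.5-flash-lite": 1_000,
}

# Hypothetical preference order per task category
PREFERENCE = {
    "complex": ["gemini-2.5-pro", "gemini-2.5-flash", "gemini-2.5-flash-lite"],
    "general": ["gemini-2.5-flash", "gemini-2.5-flash-lite"],
    "simple": ["gemini-2.5-flash-lite", "gemini-2.5-flash"],
}


class DailyBudget:
    """Track requests used today and pick the first model with quota left."""

    def __init__(self):
        self.used = {name: 0 for name in RPD}

    def pick_model(self, task_kind):
        for name in PREFERENCE[task_kind]:
            if self.used[name] < RPD[name]:
                self.used[name] += 1  # Reserve one request against the quota
                return name
        return None  # Every candidate is exhausted; queue or defer the request

    def reset(self):
        """Call once per day when the quotas reset."""
        self.used = {name: 0 for name in RPD}
```

Returning `None` instead of raising leaves the decision to the caller, who might queue the request, serve a cached answer, or tell the user to try again later.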

Token Counting and Cost Management

Understanding token consumption is crucial for effective Gemini API usage optimization. The 250,000 tokens per minute limit applies to both input and output tokens combined, making it important to monitor total token usage across all requests.

Input tokens include your prompt text, system instructions, and any context provided to the model. Output tokens represent the generated response content. Longer prompts and detailed responses consume more tokens, potentially limiting the number of requests you can make within the rate limit window.
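A short calculation shows how the two per-minute limits interact. The function below is a sketch with a hypothetical name; it takes the request limit, the token limit, and an average token count per request (input plus output), and returns how many requests per minute actually fit.

```python
def effective_rpm(rpm_limit, tpm_limit, tokens_per_request):
    """Requests per minute that fit under both the request and token limits."""
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    # The token budget allows this many whole requests per minute
    by_tokens = tpm_limit // tokens_per_request
    return min(rpm_limit, by_tokens)
```

For Gemini 2.5 Flash at 10 requests per minute, requests averaging 4,000 tokens stay request-limited (`effective_rpm(10, 250_000, 4_000)` is 10), while requests averaging 50,000 tokens become token-limited (`effective_rpm(10, 250_000, 50_000)` is 5).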

Implementing token counting in your application helps predict and manage usage patterns. The Google Generative AI library provides methods to estimate token counts before making requests, allowing for better resource planning.

```python
def estimate_tokens(model, prompt):
    """Estimate token count for a prompt"""
    return model.count_tokens(prompt).total_tokens


def check_rate_limits(prompt, target_response_length=200):
    """Check if request fits within rate limits"""
    # model_flash is the GenerativeModel created in the integration example
    input_tokens = estimate_tokens(model_flash, prompt)
    estimated_total = input_tokens + target_response_length
    if estimated_total > 4000:  # Conservative per-request limit
        return False, f"Estimated {estimated_total} tokens exceeds safe limit"
    return True, "Request size acceptable"
```

Error Handling and Resilience Patterns

Building resilient applications with the Gemini free tier requires comprehensive error handling strategies that account for rate limiting, temporary service unavailability, and model switching behaviors. Proper error handling ensures graceful degradation when limits are reached.

The most common error scenarios include 429 rate limit responses, 503 service temporarily unavailable, and 400 bad request errors. Each error type requires different handling strategies to maintain application stability and user experience. If you're experiencing quota-related issues, our OpenAI API quota error solutions guide provides similar troubleshooting approaches that work across AI APIs.
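One way to keep these strategies explicit is a lookup table from status code to handling policy. The sketch below is illustrative: the `ErrorPolicy` fields and the specific choices (for example, counting 503 but not 429 toward a circuit breaker) are assumptions, not behavior prescribed by the Gemini API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ErrorPolicy:
    retry: bool          # Whether the same request should be attempted again
    trips_breaker: bool  # Whether the error counts toward a circuit breaker
    note: str


# Assumed policies for the three status codes discussed above
POLICIES = {
    429: ErrorPolicy(True, False, "rate limited: back off, then retry"),
    503: ErrorPolicy(True, True, "service unavailable: retry and count the failure"),
    400: ErrorPolicy(False, False, "bad request: fix the payload, do not retry"),
}

DEFAULT_POLICY = ErrorPolicy(False, True, "unexpected status: surface the error")


def policy_for(status_code):
    """Look up the handling policy for an HTTP status code."""
    return POLICIES.get(status_code, DEFAULT_POLICY)
```

Keeping the policy in data rather than in nested `if` statements makes it easy to review and to adjust as you learn how your own traffic behaves.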

Implementing circuit breaker patterns can prevent cascade failures when the API is experiencing issues. This pattern temporarily stops making requests after a threshold of failures, allowing the service to recover before resuming normal operations.

```python
import asyncio
from datetime import datetime, timedelta


class GeminiRateLimiter:
    def __init__(self):
        self.requests_per_minute = 0
        self.last_reset = datetime.now()
        self.circuit_open = False
        self.circuit_opened_at = None
        self.failure_count = 0

    async def make_request(self, model, prompt):
        # Check circuit breaker: stay open for 5 minutes after it trips
        if self.circuit_open:
            if datetime.now() - self.circuit_opened_at > timedelta(minutes=5):
                self.circuit_open = False
                self.failure_count = 0
            else:
                raise Exception("Circuit breaker open")

        # Reset rate limit counter if needed
        if datetime.now() - self.last_reset > timedelta(minutes=1):
            self.requests_per_minute = 0
            self.last_reset = datetime.now()

        # Check rate limit
        if self.requests_per_minute >= 10:  # Conservative limit
            elapsed = (datetime.now() - self.last_reset).total_seconds()
            await asyncio.sleep(max(0.0, 60 - elapsed))
            # A new one-minute window starts after the wait
            self.requests_per_minute = 0
            self.last_reset = datetime.now()

        try:
            # generate_content blocks, so run it in a worker thread
            response = await asyncio.to_thread(model.generate_content, prompt)
            self.requests_per_minute += 1
            self.failure_count = 0
            return response.text
        except Exception:
            self.failure_count += 1
            if self.failure_count >= 5:
                self.circuit_open = True
                self.circuit_opened_at = datetime.now()
            raise
```

Comparing Gemini Free Tier with Alternatives

When evaluating AI API options, Gemini's free tier offers compelling advantages compared to other major providers. The combination of generous rate limits, commercial usage rights, and access to state-of-the-art models creates significant value for developers and businesses.

OpenAI's free tier provides $5 in credits that expire after three months, while Anthropic's Claude API requires immediate payment for access. Google's approach of providing ongoing free access with reasonable limits offers more predictable cost management for small-scale applications.

For developers requiring higher reliability and extended limits, services like laozhang.ai provide unified access to multiple AI providers including Gemini with enhanced rate limits and redundancy. These gateway services can be particularly valuable for production applications that need guaranteed availability. You can also explore additional free methods to access Google's AI beyond the official tier limits.

| Provider | Free Tier Limits | Commercial Usage | Rate Limits |
|---|---|---|---|
| Gemini API | Ongoing free access | Yes (with restrictions) | 5-15 RPM depending on model |
| OpenAI GPT | $5 credit (3 months) | Yes after credit expires | 3 RPM on free credits |
| Claude API | No free tier | Yes (paid only) | Not applicable |
| Gateway Services | Various options from $9/month | Yes | Enhanced limits with redundancy |

When to Upgrade Beyond Gemini Free Tier

Determining the right time to upgrade from Gemini's free tier depends on several factors, including request volume, response time requirements, and business-critical dependencies. The free tier serves many applications effectively, but certain use cases benefit from paid tier advantages.

Applications consistently hitting daily limits, requiring guaranteed response times, or needing higher availability should consider upgrading. The paid tier provides significantly higher rate limits, priority processing, and more predictable performance characteristics.

Financial considerations also play a role in upgrade decisions. For applications processing thousands of requests monthly, the cost of paid API access may be offset by the value generated through improved user experience and reduced development complexity.

Alternatively, API gateway services provide middle-ground solutions that enhance free tier capabilities through intelligent routing, caching, and fallback mechanisms. These services can extend free tier effectiveness while providing upgrade paths as requirements grow.

Gemini API Best Practices for Production Applications

Deploying Gemini API in production environments requires careful attention to security, monitoring, and scalability considerations. Proper implementation ensures reliable service delivery while maximizing the value of available rate limits.

API key security should be treated as a critical infrastructure component. Store keys in secure environment variables, implement key rotation procedures, and monitor for unauthorized usage. Never expose API keys in client-side code or public repositories.

Implementing comprehensive monitoring helps track usage patterns, identify optimization opportunities, and predict when rate limit upgrades might be necessary. Monitor metrics including request volume, response times, error rates, and token consumption trends.

Consider implementing request caching for frequently requested content, batch processing for bulk operations, and queue management for handling peak load periods. These architectural patterns help maximize free tier effectiveness while preparing for future scaling needs.
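Of these patterns, caching is often the simplest to add. The sketch below is a small in-memory cache with a time-to-live, keyed by model name and prompt. `PromptCache` and its parameters are hypothetical names; the `generate` argument is any callable that takes a prompt and returns text, such as a wrapper around the retry helper shown earlier.

```python
import hashlib
import time


class PromptCache:
    """In-memory cache for generated text with a time-to-live per entry."""

    def __init__(self, ttl_seconds=300, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # Injectable so the cache can be tested without sleeping
        self._store = {}

    @staticmethod
    def _key(model_name, prompt):
        # Hash the model name and prompt so long prompts make compact keys
        return hashlib.sha256(f"{model_name}\n{prompt}".encode("utf-8")).hexdigest()

    def get_or_call(self, model_name, prompt, generate):
        """Return a fresh cached answer, or call generate(prompt) and cache it."""
        key = self._key(model_name, prompt)
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]
        value = generate(prompt)
        self._store[key] = (now, value)
        return value
```

Every cache hit is a request that does not count against the per-minute or daily limits. This version never evicts expired entries, so a long-running service would want a size bound or periodic cleanup on top of it.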

Future Considerations and Roadmap

Google continues evolving the Gemini API ecosystem with regular model updates, feature enhancements, and policy refinements. Staying informed about these changes helps developers optimize their implementations and plan for future capabilities.

The trend toward larger context windows, improved reasoning capabilities, and specialized model variants suggests that free tier value will continue increasing over time. Google's commitment to providing developer access indicates that the free tier will remain a viable option for many use cases.

As the AI landscape evolves, consider how Gemini API integration fits into broader technology strategies. The skills and infrastructure developed for Gemini API can often transfer to other Google AI services and multimodal capabilities as they become available. For example, you can complement your API usage with free Gemini image generation methods for comprehensive AI workflows.

Planning for scaling beyond free tier limits should be part of any production deployment strategy. Whether through direct Google Cloud billing or third-party gateway services, having upgrade paths defined helps prevent service disruptions as applications grow.