Gemini API Rate Limits: Complete Developer Guide for 2025

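Gemini API rate limits vary by tier and by model. On the free tier, the most capable models have been limited to as few as 5 requests per minute (RPM) and 25 requests per day (RPD), while lighter models such as Gemini 1.5 Flash allow more. Paid Tier 1 raises limits substantially. Tier 2, which requires $250 in cumulative spending plus 30 days since the first payment, offers higher quotas still. Rate limits apply per project across four dimensions: RPM, tokens per minute (TPM), RPD, and images per minute (IPM). Exceeding any one of them triggers an HTTP 429 error.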

Understanding Gemini API Rate Limit Dimensions

The Gemini API enforces rate limits across four distinct dimensions, each serving a specific purpose in maintaining fair usage and system stability. Understanding these dimensions is crucial for building reliable applications that scale effectively without hitting unexpected limitations.

Requests Per Minute (RPM) is the primary gating mechanism for API access. This limit controls how frequently your application can make API calls, regardless of content size or complexity. For instance, a 5 RPM limit allows on average one request every 12 seconds (60 seconds / 5), which makes it suitable only for testing and development. Production applications require higher tiers to maintain responsive user experiences.

Tokens Per Minute (TPM) measures the volume of content processed by the API. Each request consumes tokens for both the input prompt and the generated response. A single token is roughly 4 characters of English text. The TPM limit ensures fair resource allocation for computationally intensive operations. Free-tier limits can be as low as 32,000 TPM for some models, while paid tiers offer a million or more tokens per minute for large-scale processing.

Requests Per Day (RPD) sets a daily ceiling on total API usage. This quota resets at midnight Pacific Time (PT). RPM and TPM, by contrast, are per-minute limits that recover within a minute. Knowing the daily reset time helps developers schedule batch operations and distribute workloads across time zones.

Images Per Minute (IPM) applies specifically to models with image generation capabilities like Imagen 3. While conceptually similar to TPM, IPM tracks visual content generation separately, recognizing the higher computational cost of image synthesis compared to text generation.

The rate limiting system operates at the project level rather than per API key, so all API keys within a Google Cloud project share the same rate limit pool. Google does not publish its exact enforcement algorithm. A useful mental model is one token bucket per dimension, each refilling at a constant rate. When any bucket is empty, subsequent requests receive HTTP 429 errors until it refills.
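The token-bucket model can also pace requests on the client side, so your application stops before Google's limit rather than after a 429. The sketch below is a minimal, single-threaded illustration under that assumption. TokenBucket and MultiDimensionLimiter are hypothetical names, and the capacities stand in for whatever limits apply to your project and model. RPD is left out because it resets once a day rather than refilling continuously.

```python
import time


class TokenBucket:
    """Holds up to `capacity` units and refills continuously at `refill_per_second`."""

    def __init__(self, capacity: float, refill_per_second: float):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.level = capacity              # start full
        self.updated_at = time.monotonic()

    def _refill(self, now: float) -> None:
        elapsed = now - self.updated_at
        self.level = min(self.capacity, self.level + elapsed * self.refill_per_second)
        self.updated_at = now

    def can_take(self, amount: float, now: float) -> bool:
        self._refill(now)
        return self.level >= amount

    def take(self, amount: float) -> None:
        self.level -= amount


class MultiDimensionLimiter:
    """One bucket per dimension; a request is admitted only if every bucket can cover it."""

    def __init__(self, rpm: int, tpm: int):
        # Each bucket holds one minute's allowance and refills it evenly over 60 s.
        self.requests = TokenBucket(rpm, rpm / 60)
        self.tokens = TokenBucket(tpm, tpm / 60)

    def try_acquire(self, estimated_tokens: int) -> bool:
        now = time.monotonic()
        if self.requests.can_take(1, now) and self.tokens.can_take(estimated_tokens, now):
            self.requests.take(1)
            self.tokens.take(estimated_tokens)
            return True
        return False  # caller should wait and retry instead of sending
```

With MultiDimensionLimiter(rpm=5, tpm=32_000), five immediate 1,000-token requests are admitted and the sixth is refused until the request bucket refills. Because the check covers every bucket, running out of either dimension blocks the request, just as any single exhausted dimension produces a 429.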

Gemini API Rate Limits by Tier: Complete Breakdown

Google structures Gemini API access into distinct tiers, each designed for specific use cases ranging from experimentation to enterprise-scale deployment. Understanding these tiers helps developers choose the right level for their needs while optimizing costs.

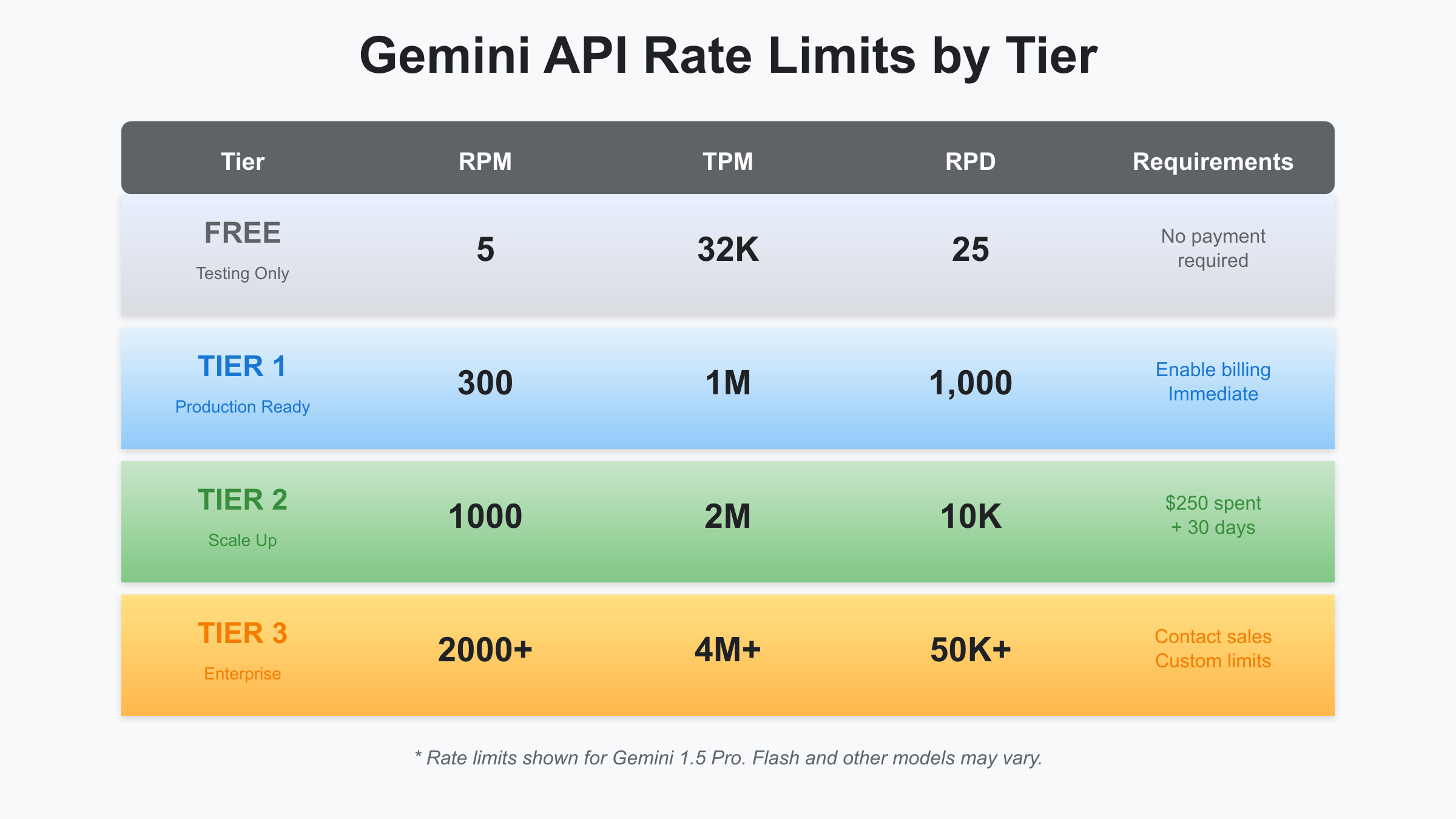

The Free Tier provides limited access ideal for prototyping and learning. With as few as 5 RPM and 25 RPD on the most capable models, developers can test basic functionality but cannot build production applications. A 32,000 TPM allowance corresponds to roughly 128,000 characters, or about 24,000 English words, per minute (at about 4 characters or 0.75 words per token), which is sufficient for simple experiments. This tier requires no payment setup, making it accessible for students, researchers, and developers evaluating the platform.

Moving to Tier 1 (Paid) dramatically expands capabilities. Upon enabling billing, projects immediately gain substantially higher limits, for example 300 RPM, 1 million TPM, and 1,000 RPD (exact figures vary by model). These limits support small to medium production applications, content generation systems, and real-time chat interfaces. The transition happens instantly after adding a valid payment method, with no waiting period or manual approval required.

Tier 2 targets growing applications with substantial usage requirements. Achieving this tier requires $250 in cumulative Google Cloud spending (not just Gemini API) plus 30 days since the first successful payment. The upgrade process typically completes within 24-48 hours after meeting requirements. Tier 2 offers 1,000 RPM, 2 million TPM, and 10,000 RPD, enabling high-volume processing and multiple concurrent users.

Tier 3 (Enterprise) provides custom limits tailored to specific business needs. Organizations must contact Google Cloud sales to negotiate terms, pricing, and SLAs. This tier suits companies processing millions of requests daily, requiring guaranteed uptime, or needing dedicated infrastructure. Rate limits can reach 2,000+ RPM and 50,000+ RPD based on negotiated agreements.

Model-specific variations add another layer of complexity, because each model has its own limits. Gemini 1.5 Flash, optimized for speed and cost, typically has higher limits than Gemini 1.5 Pro. Experimental and preview releases, such as the early Gemini 2.5 Pro previews, often start with reduced limits. Developers should check the current limits for each model variant they plan to use.

How to Handle Gemini API Rate Limit Errors

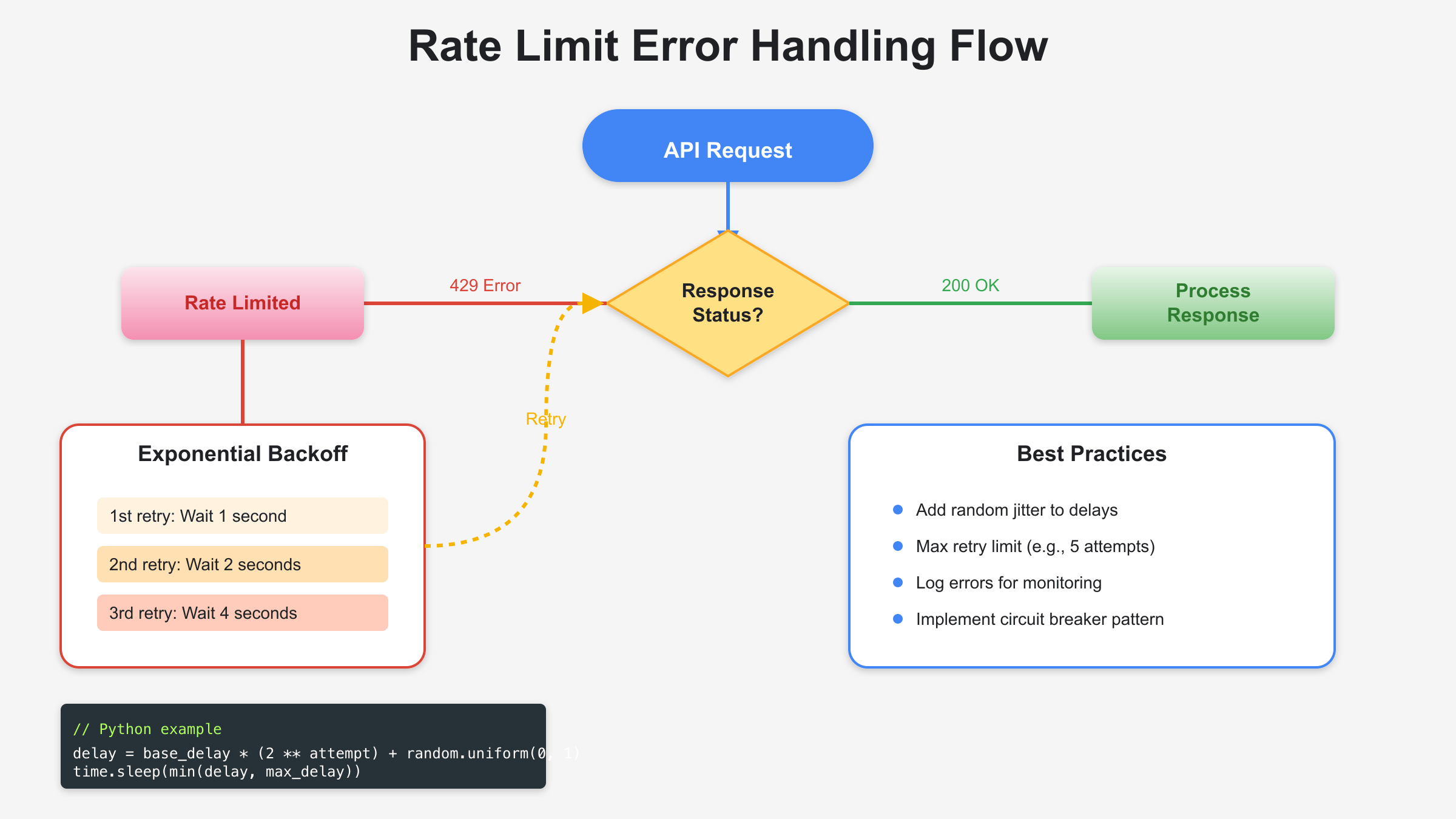

Encountering rate limit errors is inevitable when scaling Gemini API applications. The key to maintaining service reliability lies in implementing robust error handling strategies that gracefully manage these limitations while maximizing throughput.

When the Gemini API rate limit is exceeded, the service returns an HTTP 429 status code along with a response body containing error details. The error message typically includes “RESOURCE_EXHAUSTED” or similar indicators. Modern applications must detect these errors and implement appropriate retry logic rather than failing immediately.

Exponential backoff with jitter represents the gold standard for handling rate limit errors. This approach progressively increases wait times between retries while adding random variation to prevent synchronized retry storms. Here's a Python implementation:

```python
import random
import time
from typing import Any, Callable


class GeminiRateLimitHandler:
    """Retries calls that fail with a rate-limit error, using exponential backoff plus jitter."""

    def __init__(self, max_retries: int = 5, base_delay: float = 1.0, max_delay: float = 60.0):
        if max_retries < 1:
            raise ValueError("max_retries must be at least 1")
        self.max_retries = max_retries    # total attempts, including the first
        self.base_delay = base_delay
        self.max_delay = max_delay

    @staticmethod
    def _is_rate_limit_error(error: Exception) -> bool:
        # Quota errors surface as HTTP 429 / RESOURCE_EXHAUSTED in the error text.
        message = str(error)
        return "429" in message or "RESOURCE_EXHAUSTED" in message

    def execute_with_retry(self, func: Callable[..., Any], *args, **kwargs) -> Any:
        """Execute func(*args, **kwargs) with exponential backoff retry logic."""
        for attempt in range(self.max_retries):
            try:
                return func(*args, **kwargs)
            except Exception as e:
                # Non-rate-limit errors bubble up immediately, as does the final failure.
                if not self._is_rate_limit_error(e) or attempt == self.max_retries - 1:
                    raise
                # Exponential backoff (1s, 2s, 4s, ...) plus up to 1s of jitter, capped.
                delay = min(self.base_delay * (2 ** attempt) + random.uniform(0, 1),
                            self.max_delay)
                print(f"Rate limited. Retry {attempt + 1}/{self.max_retries} in {delay:.2f}s")
                time.sleep(delay)
        # Not reachable: every iteration either returns or raises.
        raise RuntimeError("retry loop exited unexpectedly")
```

Beyond basic retry logic, production systems benefit from implementing a circuit breaker pattern. This pattern prevents cascading failures by temporarily stopping requests when error rates exceed thresholds. The circuit breaker monitors failure patterns and enters an “open” state when problems persist, allowing time for the system to recover before attempting new requests.
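A minimal circuit breaker fits in a few dozen lines. This is an illustration under assumptions, not a library API. The CircuitBreaker class, its threshold and cooldown defaults, and the injectable clock (which lets the behavior be tested without waiting) are all hypothetical. The class exposes the can_proceed, record_success, and record_failure methods that the production client later in this article calls.

```python
import time


class CircuitBreaker:
    """Closed -> open after N consecutive failures. Once the cooldown elapses it is
    half-open and lets requests through again; a failure while half-open reopens it
    immediately, and any success closes it."""

    def __init__(self, failure_threshold: int = 5, cooldown_seconds: float = 30.0,
                 clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock
        self.consecutive_failures = 0
        self.opened_at = None  # None means the circuit is closed

    @property
    def state(self) -> str:
        if self.opened_at is None:
            return "closed"
        if self.clock() - self.opened_at >= self.cooldown_seconds:
            return "half-open"
        return "open"

    def can_proceed(self) -> bool:
        # Closed and half-open both allow requests; only "open" blocks them.
        return self.state != "open"

    def record_success(self) -> None:
        self.consecutive_failures = 0
        self.opened_at = None

    def record_failure(self) -> None:
        self.consecutive_failures += 1
        if self.state == "half-open" or self.consecutive_failures >= self.failure_threshold:
            # Trip (or re-trip) the breaker and restart the cooldown.
            self.opened_at = self.clock()
```

Counting consecutive failures rather than a failure rate keeps the sketch small. A production version might track the error rate over a sliding window instead.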

Best practices for error handling include logging all rate limit events for analysis, queuing requests during peak loads, and processing non-urgent work asynchronously when immediate responses aren't required (for example, notifying callers through your own webhook when a queued job finishes). Monitoring tools should track rate limit errors as key performance indicators, alerting teams when error rates spike unexpectedly.

Gemini API Rate Limit Optimization Strategies

Maximizing throughput within Gemini API rate limits requires strategic optimization across multiple dimensions. Successful applications employ various techniques to extract maximum value from available quotas while maintaining service quality.

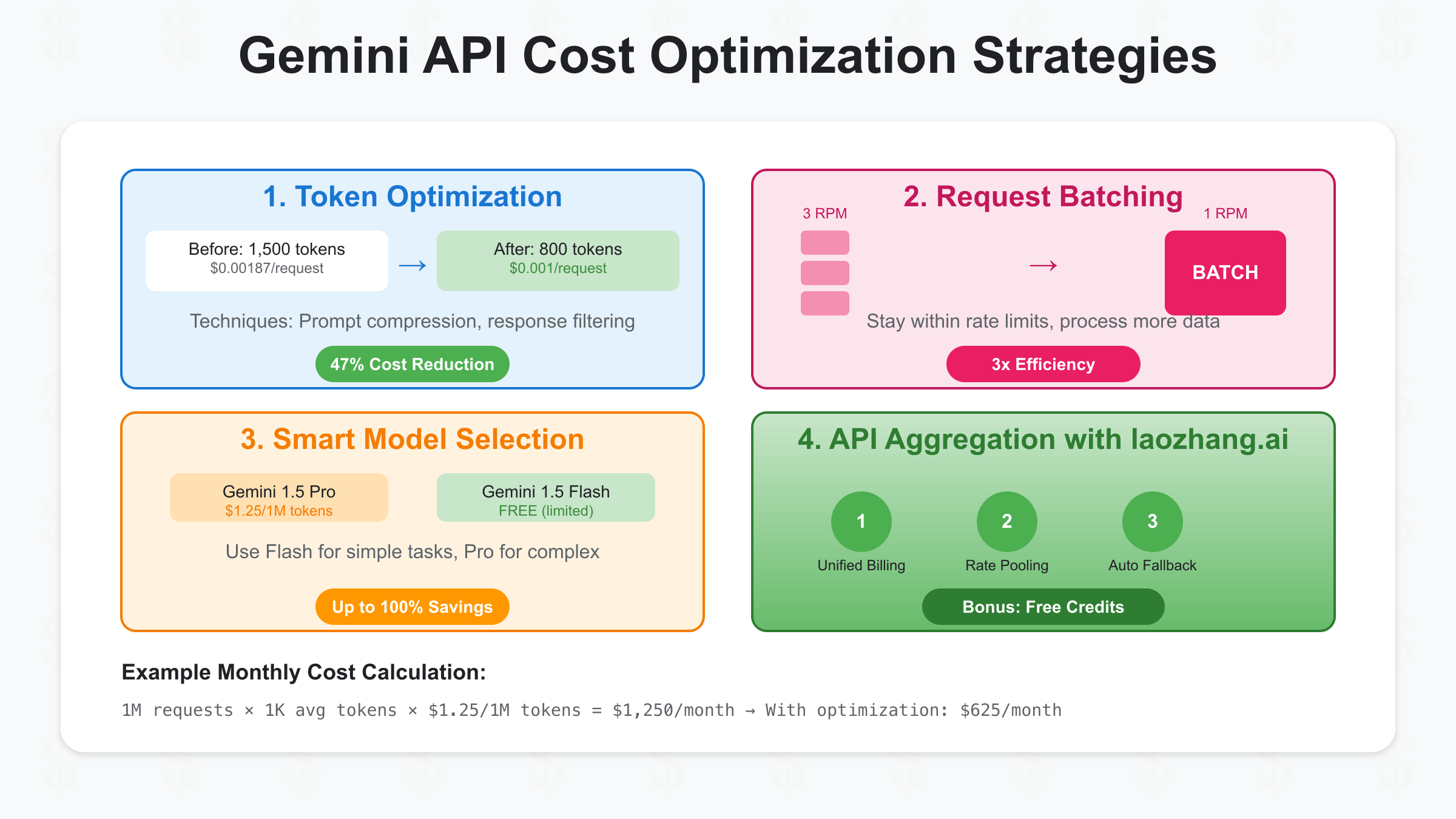

Request batching stands as the most impactful optimization technique. Instead of sending individual requests, combine multiple prompts into single API calls. This dramatically reduces RPM consumption. For example, batching 20 prompts per request turns a 100 RPM requirement into just 5 RPM, well within lower tier limits. Batching does not reduce TPM, since the same content is still processed. It also depends on the model keeping the answers clearly separated, so the combined response must be parsed defensively.

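```python
from typing import Callable, List, Optional

import google.generativeai as genai

SEPARATOR = "=== REQUEST SEPARATOR ==="


class GeminiBatchOptimizer:
    """Collects prompts and sends them to the model as one combined request."""

    def __init__(self, model_name: str = "gemini-1.5-pro", batch_size: int = 10):
        self.model = genai.GenerativeModel(model_name)
        self.batch_size = batch_size
        self.pending_prompts = []

    def add_prompt(self, prompt: str,
                   callback: Optional[Callable[[str], None]] = None) -> Optional[List[str]]:
        """Add prompt to the batch queue; flush automatically when the batch is full."""
        self.pending_prompts.append({"prompt": prompt, "callback": callback})
        if len(self.pending_prompts) >= self.batch_size:
            return self.process_batch()
        return None

    def process_batch(self) -> List[str]:
        """Process all pending prompts in a single request."""
        if not self.pending_prompts:
            return []

        # Number the prompts and tell the model how to delimit its answers; without an
        # explicit instruction it has no reason to emit the separator at all.
        numbered = "\n\n".join(
            f"Request {i + 1}:\n{item['prompt']}"
            for i, item in enumerate(self.pending_prompts)
        )
        batch_prompt = (
            f"Answer each of the {len(self.pending_prompts)} requests below, in order. "
            f"Put a line containing only {SEPARATOR} between consecutive answers.\n\n"
            f"{numbered}"
        )

        # Single API call for multiple prompts
        response = self.model.generate_content(batch_prompt)
        results = [part.strip() for part in response.text.split(SEPARATOR)]

        # Execute callbacks; if the model merged or dropped answers, prompts beyond
        # len(results) get no callback and should be re-sent.
        for i, item in enumerate(self.pending_prompts):
            if item["callback"] and i < len(results):
                item["callback"](results[i])

        self.pending_prompts = []
        return results
```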

Token optimization directly impacts both costs and rate limits. Reducing token usage allows more requests within TPM constraints. Techniques include writing clear, concise prompts, using prompt templates that avoid repeated boilerplate, and capping response length with the max_output_tokens generation setting. Post-processing a response afterwards does not help here, because the tokens were already generated and counted. Consider using system prompts that instruct the model to provide concise responses when full verbosity isn't required.
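Because RPM and TPM apply at the same time, trimming tokens only raises throughput until the request limit becomes the binding one. A small helper with a hypothetical name makes that trade-off concrete. The example uses the Tier 1 figures cited in this article (300 RPM, 1 million TPM).

```python
def max_requests_per_minute(rpm_limit: int, tpm_limit: int, tokens_per_request: int) -> int:
    """Effective request ceiling when both RPM and TPM apply.

    Whichever dimension runs out first is the binding constraint.
    """
    if tokens_per_request <= 0:
        raise ValueError("tokens_per_request must be positive")
    return min(rpm_limit, tpm_limit // tokens_per_request)


# At 5,000 tokens per request, TPM is the bottleneck: 1,000,000 // 5,000 = 200 requests.
print(max_requests_per_minute(300, 1_000_000, 5_000))
# At 2,000 tokens, TPM would allow 500, so the 300 RPM limit binds instead.
print(max_requests_per_minute(300, 1_000_000, 2_000))
```

Shrinking prompts from 5,000 to 2,000 tokens therefore raises throughput from 200 to 300 requests per minute, but no further. Below that point, only batching or a higher tier helps.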

Multi-model routing leverages different Gemini models based on task complexity. Route simple queries to Gemini 1.5 Flash (which has a free tier) and reserve Gemini 1.5 Pro for complex reasoning tasks. Flash's per-token price is a small fraction of Pro's, and each model has its own rate limits. Routing can therefore cut costs sharply, by 80% or more when most traffic can go to Flash, while spreading load across separate quotas. Implement routing logic that analyzes prompt characteristics to select the appropriate model.

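Time zone arbitrage exploits the midnight PT reset for RPD limits. It does not add capacity, since each project still receives one daily allotment per 24 hours. It does, however, control when fresh quota arrives in local time. Midnight PT falls in mid-afternoon in East Asia (roughly 3 to 5 PM, depending on the city and on US daylight saving time). Teams there that exhaust their quota earlier in the day can schedule heavy batch jobs for the late afternoon and evening, after the reset. Global applications can likewise run deferrable work in the hours just before the reset, using up quota that would otherwise expire unused.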

Request queuing and prioritization ensures critical requests succeed while managing rate limits. Implement priority queues that process high-value requests first, with lower-priority tasks filling available capacity. This approach maintains service quality for important operations while maximizing overall throughput.

Upgrading Gemini API Tiers: Step-by-Step Guide

Transitioning between Gemini API tiers requires understanding specific requirements and following the correct upgrade process. Many developers encounter confusion around tier qualifications, particularly the spending requirements and approval timelines.

To upgrade from Free to Tier 1, simply enable billing on your Google Cloud project. Navigate to the Billing section in Google Cloud Console, add a valid payment method, and the upgrade happens automatically. No waiting period or manual approval is required. Your rate limits increase immediately upon successful payment method verification.

Tier 2 upgrades involve more complex requirements. You must accumulate $250 in total Google Cloud spending (not just Gemini API usage) and wait 30 days from your first successful payment. Importantly, Google Cloud free credits do not count toward this spending requirement - only actual charges to your payment method qualify. The spending can come from any Google Cloud service including Compute Engine, Cloud Storage, or other APIs.

The automated approval system typically processes Tier 2 upgrades within 24-48 hours after meeting requirements. However, certain factors can trigger manual review, extending the timeline. These include unusual usage patterns, multiple failed payment attempts, rapid spending increases, or geographic restrictions. Projects less than 7 days old face additional scrutiny as part of abuse prevention measures.

Common issues during tier upgrades include confusion about what counts as valid spending, projects stuck in review despite meeting requirements, and unexpected delays in limit increases. To expedite approval, maintain consistent usage patterns before requesting upgrades, ensure your billing account has a positive payment history, and avoid sudden spikes in API calls immediately after requesting tier changes.

For Tier 3 enterprise upgrades, the process differs significantly. Organizations must contact Google Cloud sales directly to discuss requirements, negotiate custom limits, and establish service level agreements. This process typically takes 2-4 weeks and involves technical reviews, security assessments, and contract negotiations. Prepare detailed usage projections, architectural diagrams, and business justifications to streamline discussions.

Gemini API vs ChatGPT API: Rate Limit Comparison

Developers often evaluate both Gemini and ChatGPT APIs for their projects, making rate limit comparison crucial for informed decisions. Each platform takes distinctly different approaches to managing API access and scaling.

ChatGPT's consumer app handles free-tier limits with temporary model downgrades rather than hard stops. When free users exceed their allowance, the app switches to a smaller model, keeping the service available with reduced capability. That behavior belongs to the ChatGPT app, not the OpenAI API, which returns HTTP 429 errors when a limit is exceeded, just as Gemini does. Gemini's free tier likewise enforces strict cutoffs. Once a model's daily request quota is used up (as few as 25 requests on some models), requests fail until the next midnight PT reset. For API developers, graceful degradation on either platform has to be built into the application itself.

In paid tiers, both services offer competitive rate limits, but with different structures. The OpenAI API enforces both requests per minute and tokens per minute, with limits that vary by model and rise as an account moves up OpenAI's usage tiers. GPT-4, for example, started at 10,000 tokens per minute for new accounts. Gemini Tier 1 offers 300 RPM with 1 million TPM immediately upon enabling billing, which makes capacity planning more predictable.

Pricing models also differ significantly. Both charge per token, with different rates for input and output. The original GPT-4 model cost approximately $30 per million input tokens. Gemini 1.5 Pro charges $1.25 per million input tokens for prompts up to 128K tokens. That 24x difference ($30 / $1.25) often outweighs rate limit considerations for budget-conscious, high-volume projects. Newer OpenAI models such as GPT-4o are priced far below the original GPT-4, so compare against the specific models you would actually use.

Reset mechanisms create operational differences. The OpenAI API replenishes its limits on a rolling basis, providing smoother usage patterns. Gemini's RPM and TPM limits also recover minute by minute, but its daily RPD quota resets all at once at midnight PT. That creates burst capacity immediately after the reset, but can cause availability issues as the daily limit approaches. Applications requiring consistent 24/7 availability may prefer OpenAI's approach, while batch processing systems benefit from Gemini's predictable reset timing.

The choice between platforms often depends on specific use cases. Choose Gemini for cost-sensitive applications with predictable usage patterns, integration with Google Cloud services, and long context windows (1 million tokens on Gemini 1.5 Flash and up to 2 million on Gemini 1.5 Pro). Select ChatGPT's API for applications requiring consistent availability and established ecosystem integrations, and when model variety matters more than cost.

Real-Time Gemini API Rate Limit Monitoring

Effective rate limit management requires real-time visibility into current usage across all limit dimensions. Building robust monitoring systems helps prevent unexpected failures and enables proactive capacity planning.

While Gemini API doesn't provide direct rate limit status endpoints, applications can track usage through careful request logging and calculation. Implement a monitoring system that records every API call with timestamps, token counts, and response statuses. This data enables real-time usage calculation against known limits.

```python
import time
from collections import deque
from datetime import datetime, timedelta
from typing import Any, Dict
from zoneinfo import ZoneInfo

PACIFIC = ZoneInfo("America/Los_Angeles")


class GeminiRateLimitMonitor:
    def __init__(self, rpm_limit: int = 300, tpm_limit: int = 1_000_000, rpd_limit: int = 1000):
        self.rpm_limit = rpm_limit
        self.tpm_limit = tpm_limit
        self.rpd_limit = rpd_limit
        # Sliding 60-second windows: request timestamps and (timestamp, tokens) pairs
        self.minute_requests = deque()
        self.minute_tokens = deque()
        self.daily_requests = 0
        self.daily_reset_time = self._get_next_reset_time()

    def _get_next_reset_time(self) -> float:
        """Unix timestamp of the next midnight Pacific Time (PST/PDT handled by zoneinfo)."""
        now_pt = datetime.now(PACIFIC)
        tomorrow = now_pt.date() + timedelta(days=1)
        return datetime(tomorrow.year, tomorrow.month, tomorrow.day, tzinfo=PACIFIC).timestamp()

    def _refresh(self, current_time: float) -> None:
        """Drop entries older than 60 s and apply the daily reset if it has passed."""
        minute_ago = current_time - 60
        while self.minute_requests and self.minute_requests[0] < minute_ago:
            self.minute_requests.popleft()
        while self.minute_tokens and self.minute_tokens[0][0] < minute_ago:
            self.minute_tokens.popleft()
        if current_time >= self.daily_reset_time:
            self.daily_requests = 0
            self.daily_reset_time = self._get_next_reset_time()

    def track_request(self, token_count: int) -> None:
        """Record a completed request and its token usage."""
        current_time = time.time()
        self._refresh(current_time)
        self.minute_requests.append(current_time)
        self.minute_tokens.append((current_time, token_count))
        self.daily_requests += 1

    def get_current_usage(self) -> Dict[str, Any]:
        """Return usage as percentages of each limit, plus a go/no-go flag."""
        # Prune first; otherwise entries older than a minute would inflate the counts.
        self._refresh(time.time())
        current_rpm = len(self.minute_requests)
        current_tpm = sum(tokens for _, tokens in self.minute_tokens)
        return {
            "rpm_usage": current_rpm / self.rpm_limit * 100,
            "tpm_usage": current_tpm / self.tpm_limit * 100,
            "rpd_usage": self.daily_requests / self.rpd_limit * 100,
            "can_make_request": (
                current_rpm < self.rpm_limit
                and current_tpm < self.tpm_limit
                and self.daily_requests < self.rpd_limit
            ),
        }
```

Building alerting systems on top of monitoring data enables proactive management. Configure alerts at multiple thresholds - 70% for awareness, 85% for throttling non-critical requests, and 95% for emergency response. Integration with services like PagerDuty, Slack, or email ensures teams respond quickly to approaching limits.
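The three thresholds can be encoded directly against the percentages that GeminiRateLimitMonitor.get_current_usage() returns. This is a minimal sketch; the level names and the helper functions are hypothetical, and wiring the result to PagerDuty, Slack, or email is left to your alerting stack.

```python
def alert_level(usage_percent: float) -> str:
    """Map a usage percentage to the 70% / 85% / 95% alert tiers."""
    if usage_percent >= 95:
        return "emergency"
    if usage_percent >= 85:
        return "throttle-noncritical"
    if usage_percent >= 70:
        return "warning"
    return "ok"


def worst_alert(usage: dict) -> str:
    """Return the most severe level across the RPM, TPM and RPD percentages."""
    severity = ["ok", "warning", "throttle-noncritical", "emergency"]
    levels = [alert_level(usage[key]) for key in ("rpm_usage", "tpm_usage", "rpd_usage")]
    return max(levels, key=severity.index)
```

Taking the worst level across dimensions mirrors how the API itself behaves: the first dimension to run out is the one that causes 429s.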

Visualization dashboards provide intuitive understanding of usage patterns. Tools like Grafana or custom web interfaces can display real-time metrics, historical trends, and predicted limit exhaustion times. Include separate gauges for each limit dimension, time-series graphs showing usage patterns, and heat maps indicating peak usage periods.

Advanced monitoring strategies include predictive analytics using historical data to forecast limit exhaustion, automatic request throttling when approaching limits, and multi-project monitoring for organizations managing numerous Google Cloud projects. These systems become essential as applications scale beyond simple rate limit management.

Cost Optimization for Gemini API Usage

Understanding the relationship between rate limits and costs enables significant savings while maintaining service quality. Strategic optimization can reduce API expenses by 50-80% without compromising functionality.

Token-based pricing makes every character count toward your bill. Gemini 1.5 Pro charges $1.25 per million input tokens and $5.00 per million output tokens (for prompts up to 128K tokens) as of August 2025. At roughly 4 characters, or 0.75 English words, per token, a 1,000-word prompt is about 1,330 tokens and costs approximately $0.0017. A 1,000-word response costs approximately $0.0067. These micro-transactions accumulate quickly at scale.
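Per-request cost arithmetic is easy to get wrong, so it helps to encode it once. This is a minimal sketch that takes the Gemini 1.5 Pro rates quoted in this section as defaults. It also assumes the common rule of thumb of about 0.75 English words per token. Both are approximations, the function names are hypothetical, and current prices should be checked on Google's pricing page.

```python
def words_to_tokens(words: int, words_per_token: float = 0.75) -> int:
    """Rough English heuristic: about 0.75 words (~4 characters) per token."""
    return round(words / words_per_token)


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_m: float = 1.25,
                      output_price_per_m: float = 5.00) -> float:
    """Cost in USD given per-million-token input and output prices."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


tokens = words_to_tokens(1000)        # 1,333 tokens for a 1,000-word text
print(estimate_cost_usd(tokens, 0))   # as a prompt: about $0.0017
print(estimate_cost_usd(0, tokens))   # as a response: about $0.0067
```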

Model selection dramatically impacts costs. Gemini 1.5 Flash offers free tier access with 1,500 daily requests, perfect for simple tasks. Reserve Gemini 1.5 Pro for complex reasoning requiring its advanced capabilities. Implementing intelligent routing based on prompt analysis can maintain quality while reducing costs by 70% or more. Consider these routing criteria: prompt length, required reasoning depth, output format complexity, and real-time requirements.

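```python
import google.generativeai as genai


class CostOptimizedRouter:
    """Routes prompts to Flash or Pro using a rough complexity heuristic."""

    # Per-million input-token prices. The Flash figure is an assumption; check current pricing.
    PRO_INPUT_PRICE_PER_M = 1.25
    FLASH_INPUT_PRICE_PER_M = 0.075

    def __init__(self, flash_daily_budget: int = 1500):
        self.flash_model = genai.GenerativeModel("gemini-1.5-flash")
        self.pro_model = genai.GenerativeModel("gemini-1.5-pro")
        # Hypothetical local counter standing in for real quota tracking (reset daily, not shown)
        self.flash_daily_budget = flash_daily_budget
        self.flash_requests_today = 0

    def estimate_complexity(self, prompt: str) -> str:
        """Analyze prompt to determine model requirements."""
        word_count = len(prompt.split())
        lowered = prompt.lower()
        has_code = "code" in lowered or "function" in lowered
        has_analysis = any(word in lowered for word in ("analyze", "compare", "evaluate"))
        if word_count < 100 and not has_code and not has_analysis:
            return "simple"
        if word_count < 500 and not has_analysis:
            return "moderate"
        return "complex"

    def _flash_quota_available(self) -> bool:
        return self.flash_requests_today < self.flash_daily_budget

    def route_request(self, prompt: str) -> tuple:
        """Return (model, estimated input cost in USD)."""
        complexity = self.estimate_complexity(prompt)
        input_tokens = len(prompt) / 4  # rough estimate: ~4 characters per token
        if complexity == "simple" or (complexity == "moderate" and self._flash_quota_available()):
            self.flash_requests_today += 1
            # Free on the free tier; on a billing-enabled project Flash is billed at Flash rates.
            return self.flash_model, input_tokens / 1_000_000 * self.FLASH_INPUT_PRICE_PER_M
        return self.pro_model, input_tokens / 1_000_000 * self.PRO_INPUT_PRICE_PER_M
```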

Caching strategies provide another layer of cost optimization. Implement semantic caching for frequently requested information, storing responses for common queries. This approach works particularly well for documentation queries, FAQ responses, and static content generation. Even a 20% cache hit rate translates to proportional cost savings.
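Semantic caching proper matches queries by embedding similarity. The sketch below swaps in the simpler exact-match variant: prompts are normalized (case and whitespace) and hashed, and entries expire after a time-to-live. PromptCache and its parameters are hypothetical, and the injectable clock exists only so expiry can be tested.

```python
import hashlib
import time


class PromptCache:
    """Exact-match response cache with a time-to-live and hit-rate tracking."""

    def __init__(self, ttl_seconds: float = 3600.0, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (stored_at, response)
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Lowercase and collapse whitespace so trivially different prompts share an entry.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get(self, prompt: str):
        key = self._key(prompt)
        entry = self._entries.get(key)
        if entry is not None:
            stored_at, response = entry
            if self.clock() - stored_at < self.ttl_seconds:
                self.hits += 1
                return response
            del self._entries[key]  # expired
        self.misses += 1
        return None

    def set(self, prompt: str, response: str) -> None:
        self._entries[self._key(prompt)] = (self.clock(), response)

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

Tracking hit_rate() gives the figure that determines savings: each hit is a request that consumes no RPM, TPM, or spend.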

Batch processing optimizes both rate limits and costs. By combining multiple requests, you reduce overhead tokens from system prompts and context setting. A batch of 10 requests might use 8,000 tokens versus 12,000 tokens if processed individually, providing a 33% reduction in costs. Schedule batch jobs during off-peak hours to take advantage of fresh daily quotas.
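Savings of that kind come from paying the shared overhead (system prompt, instructions, context) once instead of once per request. This minimal sketch of the arithmetic assumes a fixed per-request overhead and a fixed per-item cost. The function name is hypothetical, and the token counts in the example are illustrative, not measurements.

```python
def batch_token_savings(n_requests: int, overhead_tokens: int, per_item_tokens: int) -> tuple:
    """Compare n separate requests with one batched request.

    Separately, every request repeats the shared overhead; batched, it is paid once.
    Returns (individual_tokens, batched_tokens, fractional_saving).
    """
    individual = n_requests * (overhead_tokens + per_item_tokens)
    batched = overhead_tokens + n_requests * per_item_tokens
    return individual, batched, 1 - batched / individual


# 10 requests, 450 tokens of shared overhead, 750 tokens per item:
# 12,000 tokens individually vs 7,950 batched, a saving of about 34%.
print(batch_token_savings(10, 450, 750))
```

The saving grows with the ratio of overhead to per-item tokens, so batching pays off most when a long shared system prompt accompanies short individual prompts.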

Regular cost analysis ensures optimization efforts remain effective. Track metrics including cost per request type, model usage distribution, cache hit rates, and cost trends over time. Set up billing alerts in Google Cloud Console to prevent unexpected charges. Many organizations find that 80% of their API costs come from 20% of their request types, making targeted optimization highly effective.
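To check whether that 80/20 pattern holds for your own traffic, group logged costs by request type and rank each type's share of the total. This is a minimal sketch. It assumes you already log (request_type, cost_usd) pairs, for example from an estimator like the one earlier in this section, and the function name is hypothetical.

```python
from collections import defaultdict


def cost_share_by_type(records):
    """records: iterable of (request_type, cost_usd) pairs.

    Returns [(request_type, share_of_total)] sorted from most to least expensive.
    """
    totals = defaultdict(float)
    for request_type, cost in records:
        totals[request_type] += cost
    grand_total = sum(totals.values())
    if grand_total == 0:
        return []
    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(request_type, cost / grand_total) for request_type, cost in ranked]


log = [("summarize", 6.0), ("chat", 1.0), ("summarize", 2.0), ("classify", 1.0)]
print(cost_share_by_type(log))  # "summarize" accounts for 80% of spend here
```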

Production-Ready Code Examples

Building production-grade applications with Gemini API requires robust code architecture that handles rate limits, errors, and scaling challenges. These implementations provide a starting point for reliable service deployment; adapt and test them against your own workload.

A comprehensive client wrapper encapsulates all rate limit handling, monitoring, and optimization logic. This approach centralizes complexity while providing a clean interface for application code:

```python
import asyncio
import logging
from typing import Dict, Optional

import google.generativeai as genai

# Assumes GeminiRateLimitHandler and GeminiRateLimitMonitor from the sections above.
# SemanticCache (async get/set) and CircuitBreaker (can_proceed / record_success /
# record_failure) are assumed helper classes with those interfaces, not SDK classes.


class ProductionGeminiClient:
    """Gemini API client wrapper combining retries, monitoring, caching and circuit breaking."""

    # Keys match GeminiRateLimitMonitor's constructor parameters.
    TIER_LIMITS = {
        "free": {"rpm_limit": 5, "tpm_limit": 32_000, "rpd_limit": 25},
        "tier1": {"rpm_limit": 300, "tpm_limit": 1_000_000, "rpd_limit": 1000},
        "tier2": {"rpm_limit": 1000, "tpm_limit": 2_000_000, "rpd_limit": 10_000},
    }

    def __init__(self, api_key: str, tier: str = "tier1",
                 enable_monitoring: bool = True, enable_caching: bool = True):
        genai.configure(api_key=api_key)
        self.limits = self.TIER_LIMITS.get(tier, self.TIER_LIMITS["tier1"])

        # Initialize components
        self.rate_limiter = GeminiRateLimitHandler()
        self.monitor = GeminiRateLimitMonitor(**self.limits) if enable_monitoring else None
        self.cache = SemanticCache() if enable_caching else None
        self.circuit_breaker = CircuitBreaker()

        self.models = {
            "flash": genai.GenerativeModel("gemini-1.5-flash"),
            "pro": genai.GenerativeModel("gemini-1.5-pro"),
        }
        self.logger = logging.getLogger(__name__)

    async def generate_content(self, prompt: str, model: str = "pro",
                               use_cache: bool = True, priority: int = 5) -> Optional[str]:
        """Generate content with full production safeguards.

        `priority` is unused here; ordering is handled by the request queue.
        """
        # Check cache first
        if use_cache and self.cache:
            cached_response = await self.cache.get(prompt)
            if cached_response:
                self.logger.info("Cache hit for prompt: %s...", prompt[:50])
                return cached_response

        # Fail fast while the circuit breaker is open
        if not self.circuit_breaker.can_proceed():
            self.logger.warning("Circuit breaker is open, request rejected")
            raise RuntimeError("Service temporarily unavailable")

        # Throttle as locally tracked usage approaches a limit
        if self.monitor:
            usage = self.monitor.get_current_usage()
            if not usage["can_make_request"]:
                self.logger.warning("Rate limit reached locally: %s", usage)
            await self._handle_rate_limit_approaching(usage)

        try:
            # execute_with_retry is synchronous (it uses time.sleep), so run it in a
            # worker thread to avoid blocking the event loop.
            response = await asyncio.to_thread(
                self.rate_limiter.execute_with_retry, self._generate_internal, prompt, model
            )
            if self.monitor:
                self.monitor.track_request(self._estimate_tokens(prompt, response))
            if use_cache and self.cache and response:
                await self.cache.set(prompt, response)
            self.circuit_breaker.record_success()
            return response
        except Exception as e:
            self.circuit_breaker.record_failure()
            self.logger.error("Request failed: %s", e)
            raise

    def _generate_internal(self, prompt: str, model: str) -> str:
        """Blocking SDK call, executed inside the retry handler."""
        selected_model = self.models.get(model, self.models["pro"])
        return selected_model.generate_content(prompt).text

    @staticmethod
    def _estimate_tokens(prompt: str, response: str) -> int:
        # Rough estimation: 1 token ≈ 4 characters
        return (len(prompt) + len(response)) // 4

    async def _handle_rate_limit_approaching(self, usage: Dict[str, float]) -> None:
        """Pause briefly, longer the closer the busiest dimension is to its limit."""
        max_usage = max(usage["rpm_usage"], usage["tpm_usage"], usage["rpd_usage"])
        if max_usage > 90:
            await asyncio.sleep(1)      # emergency throttling
        elif max_usage > 80:
            await asyncio.sleep(0.1)    # mild throttling
```

Request queuing systems manage load during peak times while respecting rate limits. Priority queues ensure critical requests process first:

```python
import asyncio
import heapq
import threading
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Optional


@dataclass(order=True)
class QueuedRequest:
    priority: int                    # lower number = higher priority
    timestamp: float                 # FIFO tie-breaker within the same priority
    prompt: str = field(compare=False)
    callback: Callable[[Optional[str], Optional[Exception]], Any] = field(compare=False)


class RequestQueueManager:
    """Feeds queued prompts to a ProductionGeminiClient from a background thread."""

    def __init__(self, gemini_client: "ProductionGeminiClient"):
        self.client = gemini_client
        self.queue = []
        self.lock = threading.Lock()
        self.worker_thread = threading.Thread(target=self._process_queue, daemon=True)
        self.worker_thread.start()

    def submit_request(self, prompt: str, callback: Callable, priority: int = 5) -> None:
        """Submit a request; callback(response, error) runs when it completes."""
        with self.lock:
            heapq.heappush(self.queue, QueuedRequest(priority, time.time(), prompt, callback))

    def _can_send(self) -> bool:
        monitor = self.client.monitor
        return monitor is None or monitor.get_current_usage()["can_make_request"]

    def _process_queue(self) -> None:
        """Background worker processing queued requests in priority order."""
        while True:
            # Pop under the lock so the emptiness check and the pop are atomic
            with self.lock:
                request = heapq.heappop(self.queue) if self.queue else None
            if request is None:
                time.sleep(0.1)  # idle when the queue is empty
                continue
            if not self._can_send():
                # Re-queue with its original timestamp, then wait before retrying
                with self.lock:
                    heapq.heappush(self.queue, request)
                time.sleep(1)
                continue
            try:
                # generate_content is a coroutine; run it to completion on this thread
                response = asyncio.run(self.client.generate_content(request.prompt))
                request.callback(response, None)
            except Exception as e:
                request.callback(None, e)
```

These production patterns provide reliability, scalability, and observability essential for real-world applications. Combine these components based on specific requirements, adjusting parameters for optimal performance within rate limit constraints.

Integrating Gemini API with laozhang.ai

The laozhang.ai API aggregation service offers a powerful solution for managing Gemini API rate limits while reducing complexity and costs. By routing requests through laozhang.ai's infrastructure, developers gain access to pooled resources and enterprise-grade features without managing multiple Google Cloud projects.

Rate limit multiplication represents the primary advantage of using laozhang.ai. Instead of being constrained by single-project limits, laozhang.ai maintains multiple Gemini API projects, intelligently routing requests to available capacity. This approach effectively multiplies your rate limits - if one project approaches its limits, requests automatically route to another project with available quota. For developers needing 2,000+ RPM but not qualifying for Tier 3, this provides an immediate solution.

Unified billing through laozhang.ai simplifies financial management significantly. Rather than managing separate Google Cloud billing, monitoring usage across projects, and dealing with international payment complexities, developers receive a single invoice in their preferred currency. The service supports local payment methods including Alipay and WeChat Pay, eliminating credit card requirements that often block developers in certain regions.

Cost optimization occurs through economies of scale. By aggregating usage from multiple customers, laozhang.ai maintains higher tier status across its projects. Gemini tiers raise rate limits rather than lowering per-token prices, so this gives the service more capacity than an individual developer's project might reach. Pricing savings passed through to users can amount to 20-30% compared to direct API access. Additionally, the service implements caching and request deduplication, further reducing costs.

The integration process requires minimal code changes. Simply replace the Gemini API endpoint with laozhang.ai's endpoint and use your laozhang.ai API key. The service maintains full compatibility with Gemini's API format, ensuring existing code continues working without modification. Advanced features like automatic failover, request prioritization, and detailed analytics come built-in without additional implementation effort.

Monitoring and analytics dashboards provide unified visibility across all AI APIs, not just Gemini. Track usage patterns, costs, and performance metrics through a single interface. Set up alerts for approaching limits, unusual usage patterns, or cost thresholds. This centralized monitoring proves invaluable for applications using multiple AI services, eliminating the need to check multiple provider dashboards.

Common Gemini API Rate Limit Issues and Solutions

Real-world Gemini API deployments encounter various rate limit challenges beyond simple 429 errors. Understanding these common issues and their solutions helps build more resilient applications.

Burst traffic handling challenges many applications during peak usage periods. When multiple users simultaneously request AI-generated content, rate limits quickly exhaust. The solution involves implementing request queuing with priority management, pre-generating content during off-peak hours, and maintaining a buffer of cached responses. Consider implementing graceful degradation where non-critical features disable during high load.

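Token calculation mismatches occur when applications incorrectly estimate token usage, leading to unexpected TPM limit violations. Gemini's tokenizer differs from other providers' tokenizers, and the 4-characters-per-token rule of thumb is least reliable for non-English languages and special characters. Implement conservative token estimation by adding a 10-15% buffer to calculations. Where accuracy matters, use real counts instead: the google-generativeai SDK offers GenerativeModel.count_tokens() before a call and usage_metadata on each response. Track actual versus estimated token usage to refine calculations over time.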
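A buffered estimator that learns from real counts fits in a small class. This is a minimal sketch: TokenEstimator is a hypothetical name, the 4-characters-per-token ratio and 15% buffer come from this article's rules of thumb, and the actual counts fed into record_actual() are assumed to come from the API's reported usage. The buffer is an integer percentage so the padded estimate avoids floating-point rounding surprises.

```python
import math


class TokenEstimator:
    """Character-based token estimate with a safety buffer and a calibration ratio."""

    def __init__(self, chars_per_token: float = 4.0, buffer_percent: int = 15):
        self.chars_per_token = chars_per_token
        self.buffer_percent = buffer_percent
        self._estimated_total = 0
        self._actual_total = 0

    def raw_estimate(self, text: str) -> int:
        return math.ceil(len(text) / self.chars_per_token)

    def conservative_estimate(self, text: str) -> int:
        # Pad the raw estimate so TPM budgeting errs on the safe side.
        return math.ceil(self.raw_estimate(text) * (100 + self.buffer_percent) / 100)

    def record_actual(self, text: str, actual_tokens: int) -> None:
        """Feed back a real token count reported by the API for this text."""
        self._estimated_total += self.raw_estimate(text)
        self._actual_total += actual_tokens

    def correction_factor(self) -> float:
        """Ratio of actual to estimated tokens seen so far (1.0 before any data)."""
        if self._estimated_total == 0:
            return 1.0
        return self._actual_total / self._estimated_total
```

If correction_factor() settles well above 1.0, as it often does for non-English text, the estimates are too low for your traffic. In that case, lower chars_per_token or raise the buffer accordingly.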

Time zone confusion around daily reset times causes availability issues for global applications. Midnight Pacific Time is 3 AM Eastern year-round. While the US is on standard time (PST), it falls at 08:00 UTC and 5 PM in Tokyo, shifting to 07:00 UTC and 4 PM in Tokyo during daylight saving time (PDT). Applications must account for this when scheduling batch jobs or promising service availability. Implement timezone-aware scheduling that considers both user location and API reset timing.
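Rather than hard-coding reset hours, it is safer to let the time zone database handle daylight saving time. This minimal sketch uses Python's standard zoneinfo module (Python 3.9+, with system or tzdata time zone data). next_quota_reset and reset_in_zones are hypothetical helper names, and both expect timezone-aware datetimes.

```python
from datetime import datetime, timedelta
from zoneinfo import ZoneInfo

PACIFIC = ZoneInfo("America/Los_Angeles")


def next_quota_reset(now: datetime) -> datetime:
    """Next midnight Pacific Time after `now` (PST/PDT chosen automatically)."""
    local = now.astimezone(PACIFIC)
    tomorrow = local.date() + timedelta(days=1)
    return datetime(tomorrow.year, tomorrow.month, tomorrow.day, tzinfo=PACIFIC)


def reset_in_zones(now: datetime, zones) -> dict:
    """Local wall-clock time (HH:MM) of the next reset in each IANA time zone."""
    reset = next_quota_reset(now)
    return {zone: reset.astimezone(ZoneInfo(zone)).strftime("%H:%M") for zone in zones}


zones = ["UTC", "America/New_York", "Asia/Tokyo"]
print(reset_in_zones(datetime(2025, 1, 15, 12, 0, tzinfo=ZoneInfo("UTC")), zones))  # PST
print(reset_in_zones(datetime(2025, 7, 15, 12, 0, tzinfo=ZoneInfo("UTC")), zones))  # PDT
```

The January run gives 08:00 UTC, 03:00 in New York, and 17:00 in Tokyo. The July run gives 07:00 UTC, 03:00, and 16:00, which is why a fixed UTC schedule drifts by an hour twice a year.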

Multi-project coordination becomes complex for organizations running multiple Google Cloud projects. Each project has independent rate limits, but coordinating usage across projects requires custom orchestration. Solutions include implementing a central request router that tracks usage across all projects, using message queues to distribute load, and building project health monitoring to detect and route around problematic projects.
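A central router can start as simply as choosing the healthy project with the most remaining per-minute headroom. This sketch works under stated assumptions. ProjectState, pick_project, and the project names are hypothetical. The per-minute counters must be reset by your own tracking (not shown), for example from a per-project instance of the monitor shown earlier.

```python
from dataclasses import dataclass


@dataclass
class ProjectState:
    name: str                  # hypothetical project identifier
    rpm_limit: int
    used_this_minute: int = 0
    healthy: bool = True       # cleared by health monitoring when a project misbehaves

    @property
    def headroom(self) -> int:
        return self.rpm_limit - self.used_this_minute


def pick_project(projects):
    """Return the healthy project with the most remaining RPM (and count the request), or None."""
    candidates = [p for p in projects if p.healthy and p.headroom > 0]
    if not candidates:
        return None
    chosen = max(candidates, key=lambda p: p.headroom)
    chosen.used_this_minute += 1
    return chosen


projects = [
    ProjectState("proj-a", rpm_limit=300, used_this_minute=290),
    ProjectState("proj-b", rpm_limit=300, used_this_minute=100),
    ProjectState("proj-c", rpm_limit=1000, healthy=False),
]
print(pick_project(projects).name)  # proj-b: most headroom among healthy projects
```

A None result means every project is exhausted or unhealthy, which is the point to queue the request rather than send it.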

Upgrade timing issues frustrate developers expecting immediate tier upgrades. The 24-48 hour processing time for Tier 2 upgrades isn't always predictable, and some upgrades face manual review delays. Plan upgrades well in advance of need, maintain consistent usage patterns before requesting upgrades, and have contingency plans using request optimization if upgrades delay. Contact Google Cloud support if upgrades take longer than 72 hours despite meeting requirements.

Future of Gemini API Rate Limits

The Gemini API rate limit landscape continues evolving as Google responds to developer needs and competitive pressures. Understanding likely future changes helps in building applications that remain viable as the platform matures.

A next-generation Gemini 3 release, widely expected around Q4 2025 at the time of writing, may introduce new rate limit tiers specifically for its models. Historical patterns suggest initial conservative limits during preview periods, followed by gradual increases as infrastructure scales. Early adopters should prepare for limited access initially, with potential waiting lists for higher tiers. Planning for graceful fallbacks to current models ensures continuity during transition periods.

Regional rate limits represent a probable future development as Google expands global infrastructure. Rather than global pools, rate limits may vary by geographic region to optimize resource allocation. Asian regions might see different limits than North American or European zones. This change would benefit applications with geographically distributed users but complicate global applications requiring consistent capacity.

Dynamic pricing models may replace fixed tier structures, similar to cloud computing's evolution. Usage-based pricing with automatic tier adjustments could eliminate manual upgrade processes while optimizing costs. This shift would benefit applications with variable loads but require more sophisticated cost monitoring and budgeting systems.

Enterprise direct connect options will likely expand, offering dedicated infrastructure bypassing public API limits entirely. Large organizations could negotiate custom infrastructure with guaranteed capacity, SLAs, and specialized support. While expensive, these options provide predictability essential for mission-critical applications.

The competitive landscape with ChatGPT, Claude, and emerging models will continue driving improvements in rate limits and pricing. Developers should architect applications to easily switch between providers or use multiple APIs simultaneously. The laozhang.ai aggregation approach becomes increasingly valuable as it abstracts provider-specific limitations while offering unified access to multiple AI services.